Chapter 18 - deep learning algorithm cartpole

Hi!

Is it possible to use the code reported on page 634 of the book for the acrobot as well?

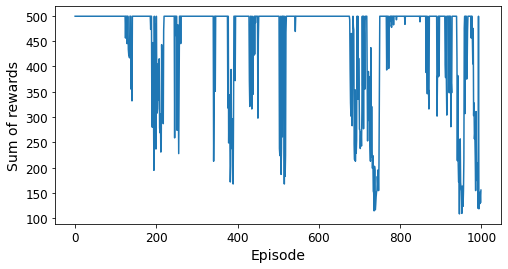

I gave it a try; these are the results:

Hi @OrnellaFanais,

Sorry for the late response, I was busy with other projects, but I'm back. The code in the book can definitely be used almost as-is with many Gym environments, but a few tweaks are still needed here and there, such as adapting the number of inputs and outputs of the DQN network. In the case of the acrobot, there are 6 inputs and 3 outputs (instead of 4 and 2 for the cart-pole).

Also, the cart-pole must try to last as long as possible, whereas the acrobot must finish as quickly as possible. For this reason, the cart-pole gets a reward of +1 at each step, whereas the acrobot gets a reward of -1 at each step. In my code I used the fact that the cart-pole's total reward in an episode is simply equal to the number of steps until it finishes, which is why I wrote `rewards.append(step)`. In the case of the acrobot, the total reward is the negative of that, so you should replace that line and the next few lines with something like this:

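```python
sum_rewards = -step  # acrobot: -1 per step, so the total reward is minus the step count
rewards.append(sum_rewards)
if sum_rewards > best_score:  # keep the weights of the best episode so far
    best_weights = model.get_weights()
    best_score = sum_rewards
```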

Also make sure to initialize `best_score` with -500 (instead of 0).
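The adjustments described in this reply (network size, reward sign, and the starting value of `best_score`) can be collected in one place. Below is a minimal pure-Python sketch, assuming the Gym IDs `CartPole-v1` and `Acrobot-v1`; `EnvSettings`, `SETTINGS`, `episode_score` and `best_episode` are hypothetical names, not part of the book's code, and the step counts are made-up illustrations rather than training results. It also shows why starting from 0 fails for the acrobot: every score is negative, so no episode's weights would ever be saved.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EnvSettings:
    """Hypothetical per-environment settings for the chapter 18 DQN code."""
    n_inputs: int            # length of the observation vector fed to the DQN
    n_outputs: int           # number of discrete actions (one Q-value per action)
    reward_per_step: int     # +1: last as long as possible, -1: finish as fast as possible
    initial_best_score: int  # starting value for the best-score comparison


SETTINGS = {
    "CartPole-v1": EnvSettings(n_inputs=4, n_outputs=2,
                               reward_per_step=+1, initial_best_score=0),
    "Acrobot-v1": EnvSettings(n_inputs=6, n_outputs=3,
                              reward_per_step=-1, initial_best_score=-500),
}


def episode_score(env_id, step):
    """Score derived from the final loop index, like the step-count shortcut."""
    return SETTINGS[env_id].reward_per_step * step


def best_episode(scores, initial_best_score):
    """Replay the best-weights bookkeeping; the index stands in for model.get_weights()."""
    best_score, best = initial_best_score, None
    for episode, score in enumerate(scores):
        if score > best_score:
            best_score, best = score, episode
    return best


# Made-up final step indices for three acrobot episodes (not real results).
steps = [499, 300, 120]
scores = [episode_score("Acrobot-v1", s) for s in steps]
print(scores)                     # [-499, -300, -120]
print(best_episode(scores, 0))    # None: no negative score beats 0
print(best_episode(scores, SETTINGS["Acrobot-v1"].initial_best_score))  # 2
```

In the book's Keras model, `n_inputs` and `n_outputs` would presumably replace the input shape `[4]` and the 2 output units. Starting from `float("-inf")` instead of -500 would also work, since any finite score is greater than it.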

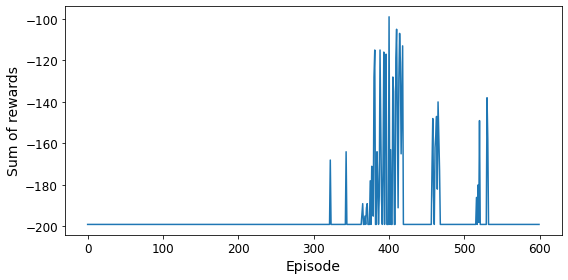

So your figure should be flipped vertically: 500 is actually -500. At the end of training, the agent was capable of finishing in about 120 steps. That's about what I get as well:

That's not great, so I think the hyperparameters need to be tuned (e.g., the learning rate). If that doesn't help, it might be a good idea to tweak the rewards, for example by making the reward -2 instead of -1 when the acrobot is low. This would provide more signal for training. I haven't tried it, though.
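Here is a minimal sketch of that reward tweak, just as untested as the suggestion itself. It assumes Gym's Acrobot-v1 observation layout `[cos(t1), sin(t1), cos(t2), sin(t2), t1_dot, t2_dot]` and measures the tip height as `-cos(t1) - cos(t1 + t2)`, the same quantity Gym compares against 1.0 to decide that the episode is solved. The names `tip_height` and `shaped_reward`, and the choice of "low" as "tip below the pivot" (threshold 0), are hypothetical.

```python
def tip_height(obs):
    """Height of the acrobot's tip above the pivot, in link lengths.

    Assumes the Acrobot-v1 observation layout:
    [cos(t1), sin(t1), cos(t2), sin(t2), t1_dot, t2_dot].
    """
    cos1, sin1, cos2, sin2 = obs[:4]
    cos12 = cos1 * cos2 - sin1 * sin2  # cos(t1 + t2) via the angle-addition formula
    return -cos1 - cos12


def shaped_reward(obs, reward, low_threshold=0.0):
    """Hypothetical shaping: -2 instead of -1 while the tip is below low_threshold."""
    if reward < 0 and tip_height(obs) < low_threshold:
        return reward - 1.0
    return reward


hanging = [1.0, 0.0, 1.0, 0.0, 0.0, 0.0]   # both links pointing straight down
upright = [-1.0, 0.0, 1.0, 0.0, 0.0, 0.0]  # both links pointing straight up
print(shaped_reward(hanging, -1.0))  # -2.0
print(shaped_reward(upright, -1.0))  # -1.0
```

In the book's training loop this would presumably be applied to the reward returned by `env.step()` (with the new observation) before the transition is stored in the replay buffer.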