bees

bees copied to clipboard

bees copied to clipboard

Constant IO write rate of 1.14 MB/s

This seems new as I don't remember observing it in earlier versions so it may have been introduced within the last year:

When looking at htop I see a constant write rate of 1.14 MB/s. It only occasionally drops to 0 for a few seconds. The system is mostly idle otherwise, or at least it doesn't generate as much new extents that it would explain a constant hash writeback of 1.14 MB/s for prolonged periods of time.

THREADS (work queue 0 tasks, 4 workers):

tid 665: bees: [432699s] waiting for signals

tid 666: progress_report: [699.055s] idle 3600

tid 667: status_report: writing status to file '/run/bees//8e2b78fb-d246-46df-a1ac-34539bed55ef.status'

tid 673: crawl_transid: [286.132s] waiting 302.372s for next 10 transid RateEstimator { count = 2164250, raw = 955.996 / 28907.6, ratio = 955.996 / 29193.7, rate = 0.0327466, duration(1) = 30.5375, seconds_for(1) = 1 }

tid 674: crawl_writeback: [698.907s] idle, dirty

tid 675: hash_writeback: flush rate limited after extent #2138 of 8192 extents

tid 676: hash_prefetch: [2657.32s] idle 3600s

This same behavior can be observed on multiple systems as soon as there is at least a minimum amount of filesystem changes going on.

This seems like a pretty huge write-amplification to me. Did anything change that makes bees not tracking dirty hashes correctly and just write too many (even untouched) hashes?

I can observe this while hash writeback is in "flush rate limited" state. It slowly counts up the extents. When it's finished, write activity eventually (but not always) stops for a few seconds, then it starts over again with 1.14 MB/s while counting the extents in hash_writeback.

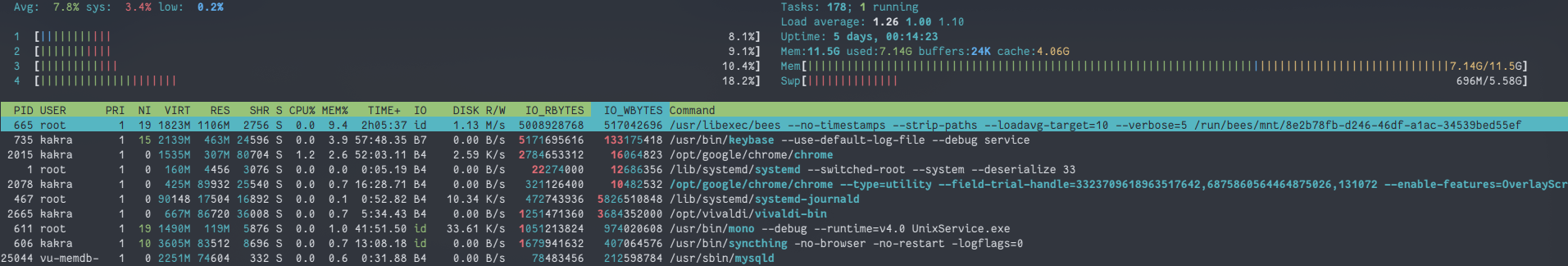

Screenshot of htop sorted by IO_WBYTES:

Except the keybase service, there's no exceptional high write amount.

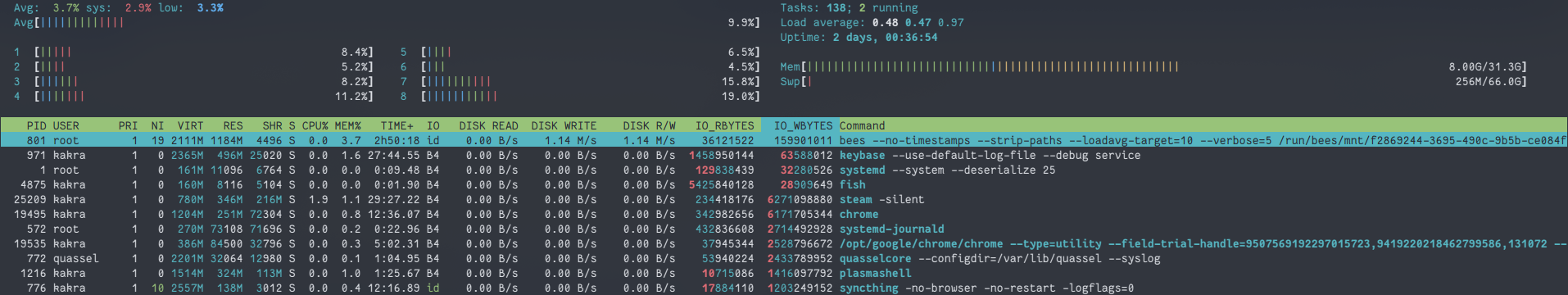

Here's another system showing the same behavior:

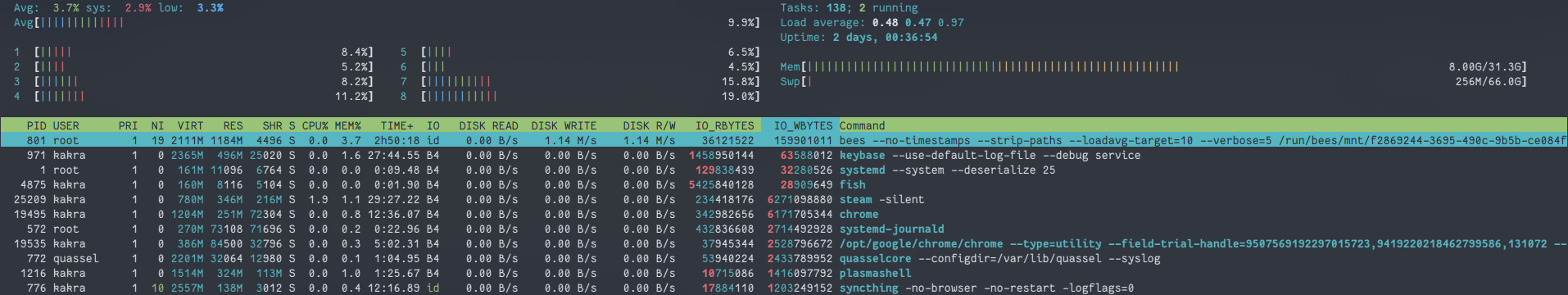

And another which doesn't show this behavior:

The last system doesn't have the rootfs on btrfs, only a storage pool for hosting users is on btrfs and that sees almost no writes.

It seems that some low write workload (which I'd consider idle as it only has writes by the usual log activity) is enough to trigger a constant hash writeback. Even then, the most write-intensive processes (journald, keybase) write to nodatasum files only, so bees shouldn't even consider them.

autodefrag is off on all systems, and mounted with noatime.

So I'm guessing this behavior is unexpected?

Maybe there's a feedback loop between bees logging to journal, and then bees seeing new extents and flushing seemingly dirty hashes? Although journal writes to a nocow/nodatasum directory...

The hash table should be tracking which hash extents are used and only writing out those that were touched. That said, extents are pretty big, and it doesn't take many hashes to touch all of them.

Maybe try adding some logs to BeesHashTable::flush_dirty_extent to see what's going on?

You do have a point about write multiplication, though: if the minimum hash-extent (we really need to rename those) size is 128KB, and every 4K of new data triggers at least one hash-extent write, then we are multiplying writes by 32 just for the hash table. When the block scan rate drops below the hash table's IO throttle rate then it would probably be better to skip a few scan intervals.

Maybe hack up the crawlers so they try to make a list of $N MB of new extent blocks before we start any scanners; if not enough blocks are found, don't pass any of the new extents to the scanner, reset the crawl positions, and go back to waiting for new transids.

The hash table should be tracking which hash extents are used and only writing out those that were touched. That said, extents are pretty big, and it doesn't take many hashes to touch all of them.

Maybe try adding some logs to

BeesHashTable::flush_dirty_extentto see what's going on?

I'm not sure yet what logs to add - maybe just change BEESTOOLONG to BEESLOGWARN?

However, I made a small fish script to see the statistics change over time:

while true

awk -v DATE=(date +%H:%M:%S) '

/^(TOTAL|RATES|THREADS)/ { PREFIX=$0 }

/\shash_/ { print DATE " " PREFIX $0 }

END { print "----" }

' f2869244-3695-490c-9b5b-ce084f597dfc.status | tee -a /tmp/hash_log.txt

sleep 10

end

From that I see hash_extent_in stuck at 8192 but hash_extent_out is constantly increasing. I'm not sure how those counters are related (or if they are at all). But at a first glance this looks like an endless loop.

This may happen when the extent would not be marked clean (stays dirty). But OTOH, this would mean that an exception occured and it would skip over wrote_extent = true. So I don't think that is the case.

One question is: Why are no extents coming in but constantly being written out?

Maybe this is an effect of resizing the hash table? The affected machines both had their hash table size doubled without resetting $BEESHOME.

There's this code before the cells loop:

if (verify_cell_range(bucket[0].p_cells, bucket[1].p_cells)) {

duplicate_bugs_found = true;

}

And after the buckets loop there's:

if (duplicate_bugs_found) {

set_extent_dirty_locked(ext);

}

So it seems there is some fixup logic that's supposed to fix duplicate cells. But maybe it cannot handle this case correctly?

bees only reads the hash table once, then writes dirty pages as they are dirtied. So hash_extent_in is going to be the number of hash table extents (unless there are read IO errors). hash_extent_out is triggered by updates to the hash table. It is an infinite loop because it's a daemon (ok, there is an exit condition for the loop now, but that condition is bees termination).

"duplicate bugs" are duplicate hash table entries within a cell (same hash and block number). These aren't possible with current versions of bees, but they could appear if there was corruption of the hash file. The set_extent_dirty_locked just makes sure that if we correct any of those, we write it back to disk.

I was thinking more along the lines of just adding

BEESLOG("flushing extent #" << extent_index << " of " << m_extents << " extents");

just before the catch_all. That will log which extent numbers are being flushed. The log will then contain a timeline of extent scan activity and dirty hash flushes, and we can see if there are a lot of dirty hash flushes after a crawl restart. We can also see how many extents are dirty, i.e. if it's the same ones over and over (normal for a crawl that is seeing a small number of new blocks), or the whole hash table (might be failing to clear the dirty flag).

Also check the rate that the other hash table stats increase. Most of them (insert, erase, bump) will trigger a write of that extent of the hash table.

Well, I purged my hash table on this machine. Let's look again when the first full scan is done. I can still do this on a second machine but that's at another location, I won't have that easy access to it.

I was thinking more along the lines of just adding

BEESLOG("flushing extent #" << extent_index << " of " << m_extents << " extents");

Actually, there's already a BEESNOTE line doing that. But it's never seen in the status (or maybe only for a very short time). Should we turn it into a log?

BEESNOTE sets up a callback to report the current thread status. The BEESNOTE text is incorporated in a closure stored on a stack for each thread. When bees does print_status() it iterates over the threads and calls the top function on each stack to report what they're doing.

BEESLOG* writes a message to the log. It doesn't affect the thread status.

If you want to do both things then you need to use both macros. There was once a BEESLOGNOTE but that was removed when we had all the BEESLOG{DEBUG,INFO,NOTICE,WARN,ERROR} variants.

In this case I think we want to add a BEESLOG in a different place:

BEESNOTE("flushing extent #" << extent_index << " of " << m_extents << " extents");

auto lock = lock_extent_by_index(extent_index);

// Not dirty, nothing to do

if (!m_extent_metadata.at(extent_index).m_dirty) {

return false;

}

bool wrote_extent = false;

BEESLOGINFO("flushing extent #" << extent_index << " of " << m_extents << " extents");

We aren't interested in extents that weren't flushed...or maybe we are? I think you'll get a lot of noise from putting the LOGINFO next to the NOTE, bees calls this function a lot when it's idle.

I think you're correct, I should move it down. I'll bump log verbosity up once I run into the problem again.

On the other machine, I reverted the hash table size back to the original size (without purging it), and the behavior stopped: It no longer constantly writes 1.14 MB/s. So there's clearly a problem when sizing the hash table up without purging the bees state.

That's...interesting. bees doesn't do range checks on hash values, and I can't think of anything else that would depend on a match between the contents of a hash table page and its position.

just before the

catch_all. That will log which extent numbers are being flushed. The log will then contain a timeline of extent scan activity and dirty hash flushes, and we can see if there are a lot of dirty hash flushes after a crawl restart. We can also see how many extents are dirty, i.e. if it's the same ones over and over (normal for a crawl that is seeing a small number of new blocks), or the whole hash table (might be failing to clear the dirty flag).

Okay, back on the first machine where I purged beeshome and started over: Interestingly, it scanned my whole filesystem in under a day this time while it usually took around a week previously (some time ago). Did you secretly implement some super charger? ;-)

The effect is back, however, this time it's stuck at 1.13 MB/s instead of 1.14... With the log in place, I see it just writes most of the extent indexes from 1 to 8192 (it skips a few):

Nov 19 01:06:27 jupiter beesd[2459207]: hash_writeback[2459252]: flushing extent #8157 of 8192 extents

Nov 19 01:06:27 jupiter beesd[2459207]: hash_writeback[2459252]: flushing extent #8158 of 8192 extents

Nov 19 01:06:27 jupiter beesd[2459207]: hash_writeback[2459252]: flushing extent #8161 of 8192 extents

Nov 19 01:06:28 jupiter beesd[2459207]: hash_writeback[2459252]: flushing extent #8181 of 8192 extents

Nov 19 01:06:28 jupiter beesd[2459207]: hash_writeback[2459252]: flushing extent #5 of 8192 extents

Nov 19 01:06:28 jupiter beesd[2459207]: hash_writeback[2459252]: flushing extent #9 of 8192 extents

Nov 19 01:06:28 jupiter beesd[2459207]: hash_writeback[2459252]: flushing extent #28 of 8192 extents

Nov 19 01:06:28 jupiter beesd[2459207]: hash_writeback[2459252]: flushing extent #30 of 8192 extents

Nov 19 01:06:28 jupiter beesd[2459207]: hash_writeback[2459252]: flushing extent #31 of 8192 extents

Nov 19 01:06:28 jupiter beesd[2459207]: hash_writeback[2459252]: flushing extent #45 of 8192 extents

Nov 19 01:06:28 jupiter beesd[2459207]: hash_writeback[2459252]: flushing extent #56 of 8192 extents

This cycle repeats. The crawlers just start somewhere inbetween:

Nov 19 01:06:30 jupiter beesd[2459207]: hash_writeback[2459252]: flushing extent #560 of 8192 extents

Nov 19 01:06:30 jupiter beesd[2459207]: hash_writeback[2459252]: flushing extent #582 of 8192 extents

Nov 19 01:06:30 jupiter beesd[2459207]: crawl_transid[2459251]: Polling 275.595s for next 10 transid RateEstimator { count = 814974, raw = 3.99 / 109.959, ratio = 3.99 / 109.965, rate = 0.0362842, duration(1) = 27.5602, seconds_for(1) = 27.5537 }

Nov 19 01:06:30 jupiter beesd[2459207]: crawl_master[2459245]: Crawl started BeesCrawlState 5:0 offset 0x0 transid 814971..814974 started 2019-11-19-01-06-30 (0s ago)

Nov 19 01:06:30 jupiter beesd[2459207]: crawl_master[2459245]: Crawl started BeesCrawlState 257:0 offset 0x0 transid 814971..814974 started 2019-11-19-01-06-30 (0s ago)

Nov 19 01:06:30 jupiter beesd[2459207]: crawl_master[2459245]: Crawl started BeesCrawlState 258:0 offset 0x0 transid 814971..814974 started 2019-11-19-01-06-30 (0s ago)

Nov 19 01:06:30 jupiter beesd[2459207]: crawl_master[2459245]: Crawl finished BeesCrawlState 258:0 offset 0x0 transid 814971..814974 started 2019-11-19-01-06-30 (0s ago)

Nov 19 01:06:30 jupiter beesd[2459207]: crawl_master[2459245]: Crawl started BeesCrawlState 259:0 offset 0x0 transid 814971..814974 started 2019-11-19-01-06-30 (0s ago)

Nov 19 01:06:30 jupiter beesd[2459207]: crawl_master[2459245]: Crawl finished BeesCrawlState 259:18446744073709551611 offset 0x5d7da3 transid 814971..814974 started 2019-11-19-01-06-30 (0s ago)

Nov 19 01:06:30 jupiter beesd[2459207]: crawl_master[2459245]: Crawl started BeesCrawlState 260:0 offset 0x0 transid 814971..814974 started 2019-11-19-01-06-30 (0s ago)

...

It's always just a few or even only one transid to be scanned. I don't think there is much filesystem activity going on that would explain the amount of dirty extents to be flushed?

When stopping bees, it flushes around 3500 extent indexes before exiting.

I'm attaching a short log, I stopped bees after one or two cycles of extent flushing because it generates a lot of log noise. log.txt

BTW: I could only observe this effect after bees started idling... While it was busy with catching up all the transids, it did some bursty writes but usually was idle (aka nothing written) most of the time.

The machine where I reduced the hash table size also returned to this behavior over night... It fluctuates between 1.12 and 1.14 MB/s now. So it probably has nothing to do with resizing the hash table.

Watching the bees process in htop shows that it is just writing, IO_WBYTES increases at a constant rate while IO_RBYTES only changes a little every once in a while.

I see block_read=6724 which will touch most of the extents in an 8192-extent hash table. Each extent is 128K, so in theory reading just 32MB of data will touch the whole thing.

There's a rate limiter on the output to limit the speed to a little over a MB/s (BEES_FLUSH_RATE sets the limit) to avoid overwhelming the system with writes. It could be set lower or made configurable.

So, is this a feedback loop? I don't think that rate limiting the writeback even further isn't constructive.

Would anything change if I placed $BEESHOME on another partition? Does bees exclude the $BEESHOME subvolid from its scanner? Or maybe I am on the wrong track with this idea?

I probably like the idea much more to pause writeback for some time if not enough data has accumulated so it would work more bursty. It should still be rate-limited, tho. But I wasn't able to grok your code around this issue so I deferred that idea.

bees excludes beeshash.dat from scanning (it prevents the file from being opened at all by either scan or dedupe). You can also place BEESHOME on another filesystem (doesn't have to be btrfs, could be NFS, VFAT, tmpfs, ext4...). beesstats, beescrawl, etc are not excluded because they keep moving to new inodes and would fill memory with blacklist entries.

The design inputs for the hash table were:

- don't flood the disk with writes

- don't fragment the file with small, out-of-order writes

- writeback is better than fsync

- crashes are rare 1: we don't need to update the hash table on disk immedately

- crashes are rare 2: we can afford to repeat unsaved work from the last checkpoint

- hash table update bandwidth must be limited to avoid degrading system performance

- hash tables have very low data locality

- data will appear on the filesystem faster than we can process

The result is that we flush out the entire hash table every time there's a small change to disk contents, because we track which parts of the table are updated at very low granularity (anywhere from 4K to 64MB of data will trigger a 128KB hash table write).

Item 8 is significantly different in this use case: there's a small amount of data to process and we process it all immediately, then spend an hour trickle-writing most of the hash table even though only small parts were modified.

Two ways to fix that:

- Increase the granularity of the tracking to e.g. 4K pages. Slows things down, both during execution and when reloading a saved hash table.

- Decrease the frequency of scanning. bees already tracks filesystem update rate, maybe all that is needed is a different scaling factor.

So try setting BEES_TRANSID_FACTOR to 100 or more. On a busy filesystem the transaction rate will be high and bees will be continuously active, while on an idle filesystem this may lead to some hours between scans.

There is kind of a side-bug here: currently we flush beescrawl.dat on 15-minute intervals and beeshash.dat on 4GB-per-hour intervals (so for a 1GB hash table those are 15-minute intervals too), but there is no synchronization between these. beescrawl.dat could point to data that was scanned and hashes inserted into the hash table, but those hashes are not yet persisted on disk. A crash would make those hashes unavailable for future matches because the crawler will not rescan those blocks. These should really be synchronized, so we only write out beescrawl.dat just after the last extent of the hash table is written, and the contents of that file are a copy of the data taken just before the first extent of the hash table is written.

Seems to be fixed with current master.

I'm affected too with this problem, bees writes constantly at rate ~1.2 MiB/s.

iotop says that write comes from bees --no-timestamps --workaround-btrfs-s~6-48df-a28c-a68c839387ca [hash_writeback]

Bees v0.8, Manjaro, kernel: 6.1.7-1-MANJARO How can I debug this issue?

You can change the writeback rate in src/bees.h:

// Bytes per second we want to flush (8GB every two hours)

const double BEES_FLUSH_RATE = 8.0 * 1024 * 1024 * 1024 / 7200.0;

Set it to 128 * 1024 for a trickle write rate of 128K/second. Whatever rate you set, bees will be fairly constantly writing at that rate, unless the filesystem is completely idle (no new extents discovered by bees).

Note this doesn't affect the write rate on a SIGTERM exit, as that will always be the maximum rate your IO subsystem can bear. If you need long idle times (e.g. overnight), you can terminate the bees process to make the disks idle and restart it when the disks no longer need to be idle.

Whatever rate you set, bees will be fairly constantly writing at that rate, unless the filesystem is completely idle (no new extents discovered by bees).

So, basically, I can't keep bees WORK_DIR in a filesystem which is monitored by bees? As if I do this - bees will recursively change hash file, detect those changes and change hash file and so on? Maybe, bees should have a path exclude list to exclude changes caused by its activity, like syslog directory and WORK_DIR? Or maybe a subvolume exclude list?

The hash table is excluded already. beescrawl.dat and beesstats.txt are not, but they will generate only a few pages of IO activity every writeback interval (15 minutes). They could be excluded by a similar mechanism.

Exclude lists (by filename or subvol) are a frequently requested feature.