Realtime_Multi-Person_Pose_Estimation

CPM Evaluation in Python

Dear Zhe Cao,

I've managed to run, train and evaluate your model with 4 scales in Matlab (all for research purposes). Now I'm moving to Python, and I adapted the evaluation code you provide in demo.ipynb to evaluate on multiple images from the COCO dataset. Testing your model trained for 440000 iterations, I obtained an average precision of 0.583 using your Matlab code. With the Python evaluation, however, I obtained an average precision of only 0.43. This is a big decrease. Is there anything in the Matlab implementation that has to be taken into account in the Python code?

I would really appreciate your help. Thanks!

@legan78 May I ask whether your AP of 0.583 was obtained on the test-challenge or the test-dev split of the COCO dataset? I am also trying to evaluate the model provided by the authors in Matlab, but have failed to reach the accuracy reported in their paper.

@legan78 Sorry, we haven't used either the Python or the C++ code for the COCO challenge. But thank you for reporting this; we will look into the issue and post any findings here.

@Ding-Liu I obtained that result on the val2014 set. My performance is similar to the results reported in #35 (Matlab evaluation). What AP do you get?

@ZheC Thanks for your quick response. I will keep an eye out for any news.

@legan78 I followed the procedure in https://github.com/ZheC/Realtime_Multi-Person_Pose_Estimation/blob/master/training/genJSON.m#L36 to use the first 2644 images in the val2014 set as a customized validation set, and used the provided model trained for 440000 iterations together with the code in https://github.com/ZheC/Realtime_Multi-Person_Pose_Estimation/blob/master/testing/evalCOCO.m to generate predictions. I obtained a result similar to xiaoyong's in #35.

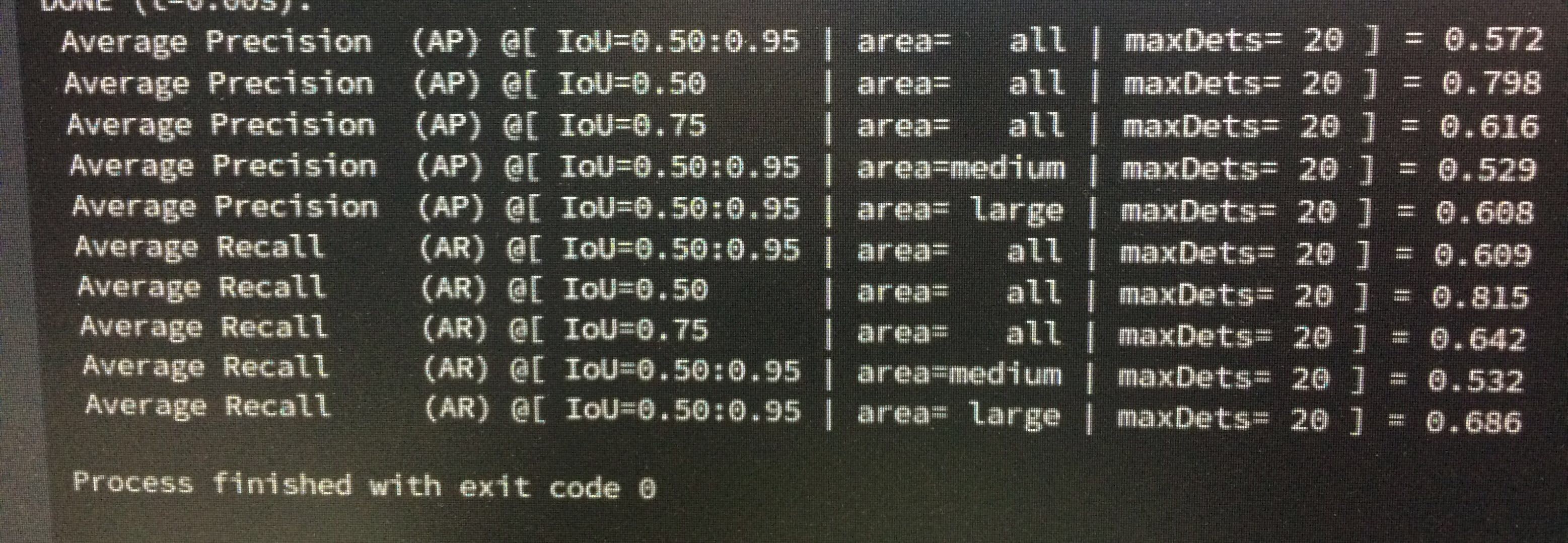

Below is my detailed accuracy:

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets= 20 ] = 0.577
Average Precision (AP) @[ IoU=0.50 | area= all | maxDets= 20 ] = 0.797
Average Precision (AP) @[ IoU=0.75 | area= all | maxDets= 20 ] = 0.627
Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets= 20 ] = 0.545
Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets= 20 ] = 0.629
Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 20 ] = 0.621
Average Recall (AR) @[ IoU=0.50 | area= all | maxDets= 20 ] = 0.814
Average Recall (AR) @[ IoU=0.75 | area= all | maxDets= 20 ] = 0.662
Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets= 20 ] = 0.555
Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets= 20 ] = 0.706

@ZheC Please enlighten me if there is any discrepancy with your setting or results. Thank you so much in advance! I would appreciate your help!
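For anyone comparing numbers across implementations, the subset evaluation described above can be sketched in Python with pycocotools. This is only a rough sketch under assumptions: `first_n_image_ids` and `evaluate_keypoints` are hypothetical helper names, and taking the first images in annotation-file order may not match exactly how genJSON.m selects its 2644 images, so that should be checked against the script.

```python
def first_n_image_ids(annotations, n=2644):
    """Return the ids of the first n images of a loaded COCO annotation dict.

    Assumption: "first" means annotation-file order; genJSON.m may differ.
    """
    return [img["id"] for img in annotations["images"][:n]]


def evaluate_keypoints(annotation_path, result_path, img_ids):
    """Run the COCO keypoint evaluation restricted to img_ids."""
    # Imported here so the helper above works without pycocotools installed.
    from pycocotools.coco import COCO
    from pycocotools.cocoeval import COCOeval

    coco_gt = COCO(annotation_path)
    coco_dt = coco_gt.loadRes(result_path)
    coco_eval = COCOeval(coco_gt, coco_dt, iouType="keypoints")
    coco_eval.params.imgIds = img_ids  # score only the chosen subset
    coco_eval.evaluate()
    coco_eval.accumulate()
    coco_eval.summarize()  # prints the AP/AR lines in the format quoted above
    return coco_eval.stats  # stats[0] is AP @ IoU=0.50:0.95
```

Restricting `params.imgIds` matters because images without any entry in the results file would otherwise still count toward the ground truth, which lowers AP and AR. Evaluating the Matlab and the Python predictions on exactly the same id list keeps the two numbers comparable.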

Are there any updates on the Python evaluation code? I get 0.55 using the first 2644 images in the val2014 set. I changed the original Python evaluation code a little and evaluate in the MXNet framework: https://github.com/dragonfly90/mxnet_Realtime_Multi-Person_Pose_Estimation/blob/master/evaluation_coco.py. The scales seem to play an important role in the evaluation result, but I don't know how to find the optimal scales.

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets= 20 ] = 0.551
Average Precision (AP) @[ IoU=0.50 | area= all | maxDets= 20 ] = 0.801
Average Precision (AP) @[ IoU=0.75 | area= all | maxDets= 20 ] = 0.611
Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets= 20 ] = 0.545
Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets= 20 ] = 0.579
Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 20 ] = 0.595
Average Recall (AR) @[ IoU=0.50 | area= all | maxDets= 20 ] = 0.818
Average Recall (AR) @[ IoU=0.75 | area= all | maxDets= 20 ] = 0.649
Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets= 20 ] = 0.554
Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets= 20 ] = 0.654
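Since the scales came up, here is a small sketch of how per-image resize factors can be derived from a list of search scales. It assumes the convention I believe the demo notebook follows, where each search scale is multiplied by a network box size and divided by the image height; the defaults (`boxsize=368`, four scales from 0.5 to 2.0) and the function name are assumptions for illustration, not confirmed settings of either the Matlab or the Python code.

```python
def scale_multipliers(image_height, boxsize=368, scale_search=(0.5, 1.0, 1.5, 2.0)):
    """Resize factor applied to the input image for each search scale.

    Assumed convention: a search scale of 1.0 resizes the image so that
    its height equals boxsize. All defaults here are assumptions.
    """
    return [s * boxsize / image_height for s in scale_search]


# Example: compare a single-scale setup with a four-scale setup
# for an image that is 736 pixels high.
single = scale_multipliers(736, scale_search=(1.0,))
multi = scale_multipliers(736)
print(single, multi)
```

If one pipeline runs a single scale and the other averages heatmaps over four, a gap in AP would not be surprising, so printing the factors used by both implementations for the same image is a cheap first check.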

@Ding-Liu @dragonfly90 smaller and faster model based on the @ZheC

@dragonfly90 Could you please explain how you produced the result.json file from the caffemodel obtained after training?
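On the file format side of this question: the COCO keypoint evaluation expects a JSON list with one entry per detected person, each holding `image_id`, `category_id` (1 for person), a flat `keypoints` list of 17 `x, y, v` triplets, and a `score`. Below is a minimal sketch of that conversion step. The function name, the input layout of `detections`, and the `keypoint_order` mapping are hypothetical; the real mapping from the model's keypoint order to COCO's order has to be taken from the evaluation script you are using.

```python
import json


def to_coco_results(detections, keypoint_order):
    """Convert per-person detections into COCO keypoint result entries.

    detections: list of (image_id, keypoints, score), where keypoints is a
        list of (x, y) tuples, or None for a missing joint, in the model's
        own joint order (hypothetical layout).
    keypoint_order: for each of the 17 COCO joints, the index of the
        matching joint in the model's output (hypothetical mapping).
    """
    results = []
    for image_id, keypoints, score in detections:
        flat = []
        for model_idx in keypoint_order:
            point = keypoints[model_idx]
            if point is None:
                flat += [0, 0, 0]  # joint not detected
            else:
                flat += [float(point[0]), float(point[1]), 1]
        results.append({
            "image_id": int(image_id),
            "category_id": 1,  # the person category in COCO
            "keypoints": flat,
            "score": float(score),
        })
    return results


def save_results(results, path="result.json"):
    """Write the entries to disk in the format loadRes() reads."""
    with open(path, "w") as f:
        json.dump(results, f)
```

Running the network on each image and grouping joints into people happens before this step; the sketch only covers turning those per-person joints into the file that the COCO evaluation reads.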