Exception: Found multiple get_by_name matches, undefined behavior

Greetings,

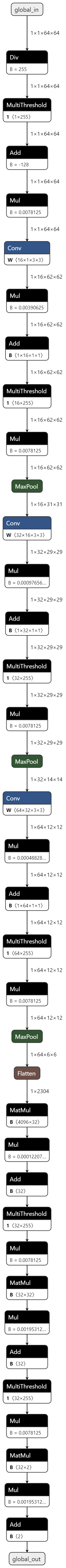

I'm currently trying to compile a convolutional model generated via Brevitas and structured as follows:

When I reach the streamlining step, I get the following exception:

```
---------------------------------------------------------------------------
Exception                                 Traceback (most recent call last)
<ipython-input-7-c3ce74697ba8> in <module>
      8 # model = model.transform(MoveScalarLinearPastInvariants())
      9 # streamline
---> 10 model = model.transform(Streamline())
     11
     12 chkpt_name = build_dir + model_name + streamline + ".onnx"

/home/jacopo/git/finn/deps/qonnx/src/qonnx/core/modelwrapper.py in transform(self, transformation, make_deepcopy, cleanup)
    138 model_was_changed = True
    139 while model_was_changed:
--> 140 (transformed_model, model_was_changed) = transformation.apply(transformed_model)
    141 if cleanup:
    142 transformed_model.cleanup()

/home/jacopo/git/finn/src/finn/transformation/streamline/__init__.py in apply(self, model)
     99 model = model.transform(RemoveIdentityOps())
    100 model = model.transform(GiveUniqueNodeNames())
--> 101 model = model.transform(GiveReadableTensorNames())
    102 model = model.transform(InferDataTypes())
    103 return (model, False)

/home/jacopo/git/finn/deps/qonnx/src/qonnx/core/modelwrapper.py in transform(self, transformation, make_deepcopy, cleanup)
    138 model_was_changed = True
    139 while model_was_changed:
--> 140 (transformed_model, model_was_changed) = transformation.apply(transformed_model)
    141 if cleanup:
    142 transformed_model.cleanup()

/home/jacopo/git/finn/deps/qonnx/src/qonnx/transformation/general.py in apply(self, model)
    128 out_num = 0
    129 for o in n.output:
--> 130 model.rename_tensor(o, "%s_out%d" % (n.name, out_num))
    131 out_num += 1
    132 init_in_num = 0

/home/jacopo/git/finn/deps/qonnx/src/qonnx/core/modelwrapper.py in rename_tensor(self, old_name, new_name)
    302 util.get_by_name(graph.initializer, old_name).name = new_name
    303 # sweep over quantization annotations
--> 304 if util.get_by_name(graph.quantization_annotation, old_name, "tensor_name") is not None:
    305 util.get_by_name(graph.quantization_annotation, old_name, "tensor_name").tensor_name = new_name
    306 # sweep over node i/o

/home/jacopo/git/finn/deps/qonnx/src/qonnx/util/basic.py in get_by_name(container, name, name_field)
     80 inds = [i for i, e in enumerate(names) if e == name]
     81 if len(inds) > 1:
---> 82 raise Exception("Found multiple get_by_name matches, undefined behavior")
     83 elif len(inds) == 0:
     84 return None

Exception: Found multiple get_by_name matches, undefined behavior
```
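The last frame shows where the error comes from: `get_by_name` collects every entry of the container whose `name_field` equals the requested name and refuses to pick one when there is more than one. In `rename_tensor` the container is `graph.quantization_annotation` and the field is `tensor_name`, so the exception means that two quantization annotations carry the same tensor name. Below is a simplified sketch of that lookup, reconstructed only from the lines visible in the traceback (it is not the actual qonnx source); `get_by_name_sketch` is a hypothetical name, and `SimpleNamespace` objects stand in for ONNX `TensorAnnotation` entries.

```python
from types import SimpleNamespace
from typing import Any, Iterable, Optional


def get_by_name_sketch(container: Iterable[Any], name: str, name_field: str = "name") -> Optional[Any]:
    """Simplified model of the lookup in the traceback, not the real qonnx code."""
    items = list(container)
    names = [getattr(e, name_field) for e in items]
    inds = [i for i, e in enumerate(names) if e == name]
    if len(inds) > 1:
        # two entries share the name: the caller cannot know which one to edit
        raise Exception("Found multiple get_by_name matches, undefined behavior")
    elif len(inds) == 0:
        return None
    return items[inds[0]]


# Stand-ins for graph.quantization_annotation entries (illustration only).
annotations = [SimpleNamespace(tensor_name="t0"), SimpleNamespace(tensor_name="t1")]
print(get_by_name_sketch(annotations, "t1", "tensor_name").tensor_name)  # t1
annotations.append(SimpleNamespace(tensor_name="t1"))
try:
    get_by_name_sketch(annotations, "t1", "tensor_name")
except Exception as err:
    print(err)  # Found multiple get_by_name matches, undefined behavior
```

A lookup that fails loudly on ambiguity is a deliberate choice: silently returning the first match would let `rename_tensor` update one annotation and leave a stale duplicate behind.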

- FINN branch: main (v0.8.1)
- OS: Windows 10 (WSL2, Ubuntu 18.04)
- Python: 3.8

The model is a custom model built with Brevitas and exported to ONNX.

EDIT: here is the original Brevitas model I used:

```python
import brevitas.nn as qnn
import torch.nn as nn
from brevitas.quant import (
    Int8ActPerTensorFixedPoint as ActQuant,
    Int8WeightPerTensorFixedPoint as WeightQuant,
)


class ExtendedActQuant(ActQuant):
    bit_width = 16


class ExtendedWeightQuant(WeightQuant):
    bit_width = 16


class QSPTModel(nn.Module):
    def __init__(self, in_channels: int, image_size: int, convolution_sizes: list, dense_sizes: list, output_size: int) -> None:
        super(QSPTModel, self).__init__()
        self.identity = qnn.QuantIdentity(
            act_quant=ExtendedActQuant,
            return_quant_tensor=True
        )
        self.layers = nn.Sequential()
        in_size = in_channels
        for idx, size in enumerate(convolution_sizes, 1):
            self.layers.add_module(
                name=f"conv_{idx}",
                module=qnn.QuantConv2d(
                    in_size,
                    size,
                    kernel_size=(3, 3),
                    padding_type="same",
                    weight_quant=ExtendedWeightQuant,
                    return_quant_tensor=True
                )
            )
            self.layers.add_module(
                name=f"conv_{idx}_relu",
                module=qnn.QuantReLU(
                    act_quant=ExtendedActQuant,
                    return_quant_tensor=True
                )
            )
            self.layers.add_module(
                name=f"maxpool_{idx}",
                module=qnn.QuantMaxPool2d(
                    (2, 2),
                    return_quant_tensor=True
                )
            )
            in_size = size
        self.layers.add_module(name="flatten", module=nn.Flatten())
        # each conv block ends with a 2x2 max-pool that halves the image side,
        # so after len(convolution_sizes) blocks the flattened size is
        # convolution_sizes[-1] * (image_size // 2**len(convolution_sizes))**2
        maxpool_final_size = image_size // (2**len(convolution_sizes))
        in_size = convolution_sizes[-1] * (maxpool_final_size * maxpool_final_size)
        for idx, size in enumerate(dense_sizes, 1):
            self.layers.add_module(
                name=f"linear_{idx}",
                module=qnn.QuantLinear(
                    in_size,
                    size,
                    bias=True,
                    weight_quant=ExtendedWeightQuant,
                    return_quant_tensor=True
                )
            )
            self.layers.add_module(
                name=f"linear_{idx}_relu",
                module=qnn.QuantReLU(
                    act_quant=ExtendedActQuant,
                    return_quant_tensor=True
                )
            )
            in_size = size
        self.layers.add_module(
            name="linear_output",
            module=qnn.QuantLinear(
                in_size,
                output_size,
                bias=True,
                weight_quant=ExtendedWeightQuant,
                return_quant_tensor=False
            )
        )

    def forward(self, x):
        x = self.identity(x)
        x = self.layers(x)
        return x
```
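The comment before `maxpool_final_size` computes the input width of `linear_1`. A standalone check of that arithmetic, assuming (as the model intends) that each "same"-padded 3x3 convolution keeps the spatial size and each `QuantMaxPool2d((2, 2))` halves it with floor division; `flattened_features` is a hypothetical helper, not part of the model:

```python
def flattened_features(image_size: int, convolution_sizes: list) -> int:
    """Hypothetical helper: in_features of linear_1 in QSPTModel.

    Assumes each 'same'-padded 3x3 conv keeps H and W unchanged and each
    2x2 max-pool (stride 2) halves them, discarding an odd remainder.
    """
    side = image_size
    for _ in convolution_sizes:
        side //= 2  # one QuantMaxPool2d((2, 2)) per conv block
    return convolution_sizes[-1] * side * side


# 32x32 input, two conv blocks: 32 -> 16 -> 8, so 32 * 8 * 8
print(flattened_features(32, [16, 32]))  # 2048
```

Halving step by step with `//` gives the same side length as the single `image_size // 2**len(convolution_sizes)` used in the model, because repeated floor division by 2 equals one floor division by the product.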

Quick update: oddly enough, and I really can't explain why, the Streamline call now works fine and no longer raises the exception. I'll leave the issue open in case something new comes up.

I tried "hacking" the workflow a bit by copying the source code of the original Streamline class into a local function and moving the GiveReadableTensorNames() call, which is where the exception is raised, after InferDataTypes():

```python
def local_streamline(model):
    from qonnx.transformation.base import Transformation
    from qonnx.transformation.batchnorm_to_affine import BatchNormToAffine
    from qonnx.transformation.general import (
        ConvertDivToMul,
        ConvertSubToAdd,
        GiveReadableTensorNames,
        GiveUniqueNodeNames,
    )
    from qonnx.transformation.infer_datatypes import InferDataTypes
    from qonnx.transformation.remove import RemoveIdentityOps

    from finn.transformation.streamline.absorb import (
        Absorb1BitMulIntoConv,
        Absorb1BitMulIntoMatMul,
        AbsorbAddIntoMultiThreshold,
        AbsorbMulIntoMultiThreshold,
        AbsorbSignBiasIntoMultiThreshold,
        FactorOutMulSignMagnitude,
    )
    from finn.transformation.streamline.collapse_repeated import (
        CollapseRepeatedAdd,
        CollapseRepeatedMul,
    )
    from finn.transformation.streamline.reorder import (
        MoveAddPastConv,
        MoveAddPastMul,
        MoveMulPastMaxPool,
        MoveScalarAddPastMatMul,
        MoveScalarLinearPastInvariants,
        MoveScalarMulPastConv,
        MoveScalarMulPastMatMul,
    )
    from finn.transformation.streamline.round_thresholds import RoundAndClipThresholds
    from finn.transformation.streamline.sign_to_thres import ConvertSignToThres

    # same list as in finn.transformation.streamline.Streamline
    streamline_transformations = [
        ConvertSubToAdd(),
        ConvertDivToMul(),
        BatchNormToAffine(),
        ConvertSignToThres(),
        MoveMulPastMaxPool(),
        MoveScalarLinearPastInvariants(),
        AbsorbSignBiasIntoMultiThreshold(),
        MoveAddPastMul(),
        MoveScalarAddPastMatMul(),
        MoveAddPastConv(),
        MoveScalarMulPastMatMul(),
        MoveScalarMulPastConv(),
        MoveAddPastMul(),
        CollapseRepeatedAdd(),
        CollapseRepeatedMul(),
        MoveMulPastMaxPool(),
        AbsorbAddIntoMultiThreshold(),
        FactorOutMulSignMagnitude(),
        AbsorbMulIntoMultiThreshold(),
        Absorb1BitMulIntoMatMul(),
        Absorb1BitMulIntoConv(),
        RoundAndClipThresholds(),
    ]
    for trn in streamline_transformations:
        model = model.transform(trn)
        model = model.transform(RemoveIdentityOps())
        model = model.transform(GiveUniqueNodeNames())
        # changed w.r.t. Streamline: InferDataTypes() now runs before GiveReadableTensorNames()
        model = model.transform(InferDataTypes())
        model = model.transform(GiveReadableTensorNames())
    return model
```

This fixes the problem, but I still have no clue why calling GiveReadableTensorNames() in the original order raises that exception.
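One way to narrow this down is to look for repeated `tensor_name` entries in the graph's quantization annotations, since those are exactly what `get_by_name` rejects in the traceback, and to check for them after every streamlining step. The sketch below assumes `model` is a qonnx `ModelWrapper` whose `graph` property returns the ONNX `GraphProto` and whose `transform()` returns the transformed model (as in the traceback); `find_duplicate_annotations` and `first_step_with_duplicates` are hypothetical debugging helpers, not FINN or qonnx APIs. If `GiveReadableTensorNames()` itself creates the duplicate, the exception is still raised inside that step and simply propagates out of the helper.

```python
from collections import Counter
from types import SimpleNamespace
from typing import Any, Dict, Iterable, Optional, Sequence, Tuple


def find_duplicate_annotations(annotations: Iterable[Any]) -> Dict[str, int]:
    """Return {tensor_name: count} for every tensor name annotated more than once."""
    counts = Counter(a.tensor_name for a in annotations)
    return {name: n for name, n in counts.items() if n > 1}


def first_step_with_duplicates(
    model: Any, transformations: Sequence[Any]
) -> Optional[Tuple[int, str, Dict[str, int]]]:
    """Apply the transformations one at a time (hypothetical debugging helper).

    Returns (index, transformation class name, duplicates) for the first step
    after which duplicated annotations exist, (-1, "<input model>", duplicates)
    if the input model already has them, or None if no step produces any.
    Exceptions raised inside a step propagate unchanged.
    """
    dups = find_duplicate_annotations(model.graph.quantization_annotation)
    if dups:
        return -1, "<input model>", dups
    for idx, trn in enumerate(transformations):
        model = model.transform(trn)
        dups = find_duplicate_annotations(model.graph.quantization_annotation)
        if dups:
            return idx, type(trn).__name__, dups
    return None


# Stand-ins for ONNX TensorAnnotation entries, for illustration only.
example = [SimpleNamespace(tensor_name=n) for n in ["Conv_0_out0", "MatMul_0_out0", "Conv_0_out0"]]
print(find_duplicate_annotations(example))  # {'Conv_0_out0': 2}

# With FINN/qonnx (assumed), pass a list of transformation instances, e.g. the
# streamline_transformations list from local_streamline interleaved with
# RemoveIdentityOps(), GiveUniqueNodeNames(), GiveReadableTensorNames(), InferDataTypes():
# print(first_step_with_duplicates(model, transformations))
```

Checking the input model first separates "the exported ONNX already had duplicate annotations" from "one of the streamlining steps introduced them".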