Dataflow partition fails

Greetings everyone,

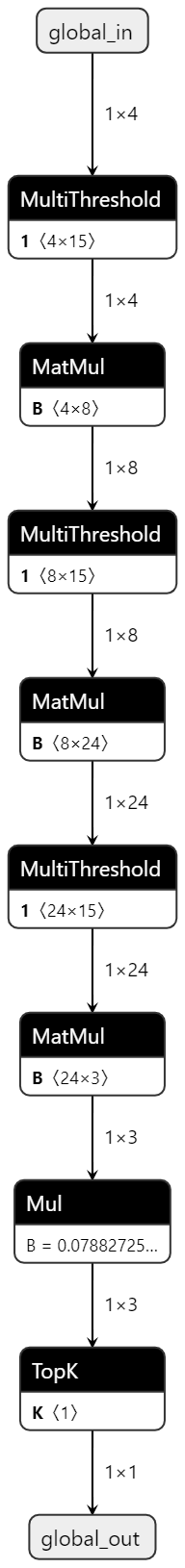

I'm trying to port a Brevitas model for Iris classification onto an FPGA using FINN. The model I'm using is shown above.

I'm using a custom notebook based on the tfc_end2end_example in the repository. I'm able to produce a reasonable model up to the HLS conversion step. This is the model that I have so far:

When performing the dataflow partition step though, FINN throws the following exception:

---------------------------------------------------------------------------

AssertionError Traceback (most recent call last)

<ipython-input-8-8e79fe16bf71> in <module>

2

3 model = ModelWrapper(build_dir + model_name + "_hls_layers.onnx")

----> 4 parent_model = model.transform(CreateDataflowPartition())

5 parent_model.save(build_dir + model_name + "_dataflow_parent.onnx")

6 showInNetron(build_dir + model_name + "_dataflow_parent.onnx")

/workspace/finn-base/src/finn/core/modelwrapper.py in transform(self, transformation, make_deepcopy, cleanup, fix_float64)

139 model_was_changed = True

140 while model_was_changed:

--> 141 (transformed_model, model_was_changed) = transformation.apply(

142 transformed_model

143 )

/workspace/finn/src/finn/transformation/fpgadataflow/create_dataflow_partition.py in apply(self, model)

76

77 # first, use the generic partitioning functionality to split up the graph

---> 78 parent_model = model.transform(

79 PartitionFromLambda(

80 partitioning=assign_partition_id, partition_dir=self.partition_model_dir

/workspace/finn-base/src/finn/core/modelwrapper.py in transform(self, transformation, make_deepcopy, cleanup, fix_float64)

139 model_was_changed = True

140 while model_was_changed:

--> 141 (transformed_model, model_was_changed) = transformation.apply(

142 transformed_model

143 )

/workspace/finn-base/src/finn/transformation/create_generic_partitions.py in apply(self, model)

122 for node in to_check:

123 if node is not None:

--> 124 assert (

125 self.partitioning(node) != partition_id

126 ), """cycle-free graph violated: partition depends on itself"""

AssertionError: cycle-free graph violated: partition depends on itself

I don't fully understand what this means, so any input is appreciated. If you need more information, I'll happily provide it. Thank you.

My setup is:

- OS: Ubuntu 20.04.4 LTS (running on Windows 10 with WSL2)

- Python: 3.8.10

- FINN branch: main

Hi, dataflow partitioning fails because not all nodes were converted to their respective HLS Custom Op in the prior steps. Specifically, the MatMul nodes should have been converted to StreamingFCLayer_Batch nodes.
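To illustrate what the assertion is complaining about, here is a toy sketch of the idea behind the check. It is not the actual code from create_generic_partitions.py, only a simplified version of it: starting from the nodes of a partition, follow the successors that lie outside the partition and verify that none of them leads back into the same partition. The node names and the use of `-1` for "not in any partition" are assumptions made for the example.

```python
def partition_depends_on_itself(successors, partition_of, partition_id):
    """Return True if a path leaves the partition and later re-enters it."""
    members = [n for n, p in partition_of.items() if p == partition_id]
    for start in members:
        # Only successors outside the partition can form such a detour.
        to_check = [
            s for s in successors.get(start, [])
            if partition_of[s] != partition_id
        ]
        seen = set()
        while to_check:
            node = to_check.pop()
            if node in seen:
                continue
            seen.add(node)
            for nxt in successors.get(node, []):
                if partition_of[nxt] == partition_id:
                    return True  # the partition feeds itself via outside nodes
                to_check.append(nxt)
    return False


# Hypothetical graph where the MatMul nodes were NOT converted to HLS layers.
broken_successors = {
    "Thresholding_Batch_0": ["MatMul_0"],
    "MatMul_0": ["MultiThreshold_0"],
    "MultiThreshold_0": ["LabelSelect_Batch_0"],
}
broken_partition = {
    "Thresholding_Batch_0": 0,   # HLS node, goes into the dataflow partition
    "MatMul_0": -1,              # plain ONNX node, stays outside
    "MultiThreshold_0": -1,      # plain ONNX node, stays outside
    "LabelSelect_Batch_0": 0,    # HLS node, same dataflow partition
}

# Hypothetical graph after every layer has been converted.
fixed_successors = {
    "Thresholding_Batch_0": ["StreamingFCLayer_Batch_0"],
    "StreamingFCLayer_Batch_0": ["LabelSelect_Batch_0"],
}
fixed_partition = {name: 0 for name in
                   ["Thresholding_Batch_0", "StreamingFCLayer_Batch_0",
                    "LabelSelect_Batch_0"]}

broken = partition_depends_on_itself(broken_successors, broken_partition, 0)
fixed = partition_depends_on_itself(fixed_successors, fixed_partition, 0)
print(broken, fixed)
```

In the first graph the dataflow partition is interrupted by non-HLS nodes, so its output would have to pass through the parent graph and come back in, which is what the assertion rejects.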

Do you apply the InferQuantizedStreamingFCLayer() transformation as part of the HLS conversion?

Hi @fpjentzsch , thank you for your reply. Yes, adding the transformation to the HLS conversion step did the trick. I'll close the issue.

Greetings,

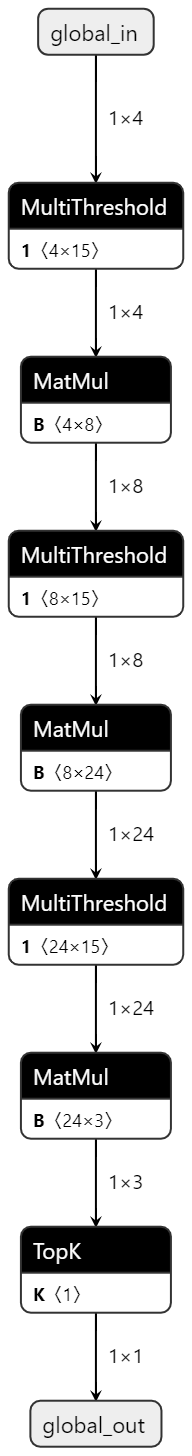

I'm reopening this issue for the following reason: I changed the FINN version (I checked out the "dev" branch, commit hash 281af25500cfbfb8e7abd23c956e4599851fdda8) and tried re-running my notebook to regenerate the IP core for my model. I noticed that some of the functionality was moved to the qonnx module, but apart from that everything was the same. My problem is in the pre-HLS conversion step. The model I have is the following:

Afterwards I apply the following transformations:

from qonnx.transformation.bipolar_to_xnor import ConvertBipolarMatMulToXnorPopcount

from finn.transformation.streamline.round_thresholds import RoundAndClipThresholds

from qonnx.transformation.infer_data_layouts import InferDataLayouts

from qonnx.transformation.general import RemoveUnusedTensors

chkpt_name = build_dir + model_name + pre_hls + ".onnx"

model = model.transform(ConvertBipolarMatMulToXnorPopcount())

model = model.transform(absorb.AbsorbAddIntoMultiThreshold())

model = model.transform(absorb.AbsorbMulIntoMultiThreshold())

# absorb final add-mul nodes into TopK

model = model.transform(absorb.AbsorbScalarMulAddIntoTopK())

model = model.transform(RoundAndClipThresholds())

# bit of tidy-up

model = model.transform(InferDataLayouts())

model = model.transform(RemoveUnusedTensors())

model.save(chkpt_name)

showInNetron(chkpt_name)

This produces the following model:

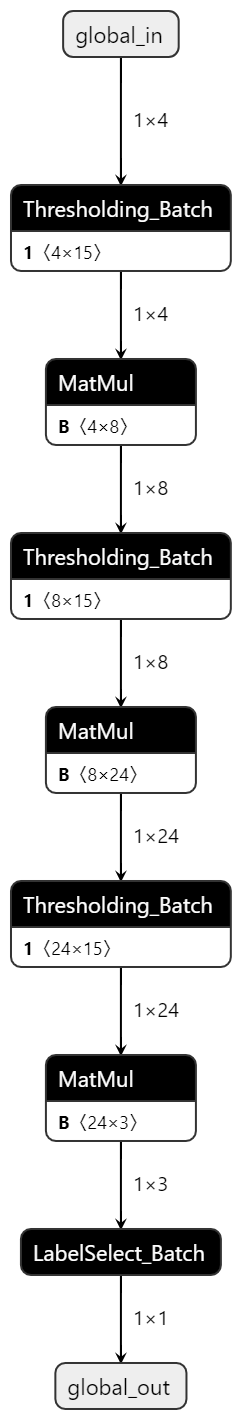

Then I perform the HLS conversion as follows:

import finn.transformation.fpgadataflow.convert_to_hls_layers as to_hls

chkpt_name = build_dir + model_name + pre_hls + ".onnx"

model = ModelWrapper(chkpt_name)

model = model.transform(to_hls.InferBinaryMatrixVectorActivation(mem_mode=mem_mode))

# TopK to LabelSelect

model = model.transform(to_hls.InferLabelSelectLayer())

# input quantization (if any) to standalone thresholding

model = model.transform(to_hls.InferThresholdingLayer())

chkpt_name = build_dir + model_name + hls_layers + ".onnx"

model.save(chkpt_name)

showInNetron(chkpt_name)

At this point, though, I should no longer be seeing the MatMul layers, as these should have been merged with the MultiThreshold layers. I'm not sure I understand what's going on. In any case, I then try to create a dataflow partition as follows:

from finn.transformation.fpgadataflow.create_dataflow_partition import CreateDataflowPartition

chkpt_name = build_dir + model_name + hls_layers + ".onnx"

model = ModelWrapper(chkpt_name)

parent_model = model.transform(CreateDataflowPartition())

chkpt_name = build_dir + model_name + dataflow_part + ".onnx"

parent_model.save(chkpt_name)

showInNetron(chkpt_name)

Which then fails:

---------------------------------------------------------------------------

AssertionError Traceback (most recent call last)

<ipython-input-9-9b65553d6b9e> in <module>

3 chkpt_name = build_dir + model_name + hls_layers + ".onnx"

4 model = ModelWrapper(chkpt_name)

----> 5 parent_model = model.transform(CreateDataflowPartition())

6

7 chkpt_name = build_dir + model_name + dataflow_part + ".onnx"

/home/jacopo/git/finn/deps/qonnx/src/qonnx/core/modelwrapper.py in transform(self, transformation, make_deepcopy, cleanup)

138 model_was_changed = True

139 while model_was_changed:

--> 140 (transformed_model, model_was_changed) = transformation.apply(transformed_model)

141 if cleanup:

142 transformed_model.cleanup()

/home/jacopo/git/finn/src/finn/transformation/fpgadataflow/create_dataflow_partition.py in apply(self, model)

78

79 # first, use the generic partitioning functionality to split up the graph

---> 80 parent_model = model.transform(

81 PartitionFromLambda(

82 partitioning=assign_partition_id, partition_dir=self.partition_model_dir

/home/jacopo/git/finn/deps/qonnx/src/qonnx/core/modelwrapper.py in transform(self, transformation, make_deepcopy, cleanup)

138 model_was_changed = True

139 while model_was_changed:

--> 140 (transformed_model, model_was_changed) = transformation.apply(transformed_model)

141 if cleanup:

142 transformed_model.cleanup()

/home/jacopo/git/finn/deps/qonnx/src/qonnx/transformation/create_generic_partitions.py in apply(self, model)

116 for node in to_check:

117 if node is not None:

--> 118 assert (

119 self.partitioning(node) != partition_id

120 ), """cycle-free graph violated: partition depends on itself"""

AssertionError: cycle-free graph violated: partition depends on itself
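One way to narrow down a failure like this is to list the nodes that are still plain ONNX ops after the HLS conversion. The sketch below is only an illustration: it assumes that FINN's HLS custom ops carry a domain starting with `finn.custom_op.fpgadataflow`, and it uses hypothetical stand-in objects instead of a real `model.graph.node` list so that it runs without FINN installed.

```python
from types import SimpleNamespace

# Assumed domain prefix for FINN's HLS custom ops; adjust if your version differs.
FPGADATAFLOW_DOMAIN = "finn.custom_op.fpgadataflow"


def unconverted_nodes(nodes):
    """Return (name, op_type) for every node outside the fpgadataflow domain."""
    return [
        (n.name, n.op_type)
        for n in nodes
        if not n.domain.startswith(FPGADATAFLOW_DOMAIN)
    ]


# Hypothetical stand-ins for model.graph.node after the HLS conversion step.
nodes = [
    SimpleNamespace(name="Thresholding_Batch_0", op_type="Thresholding_Batch",
                    domain=FPGADATAFLOW_DOMAIN),
    SimpleNamespace(name="MatMul_0", op_type="MatMul", domain=""),
    SimpleNamespace(name="MultiThreshold_0", op_type="MultiThreshold",
                    domain="qonnx.custom_op.general"),
    SimpleNamespace(name="LabelSelect_Batch_0", op_type="LabelSelect_Batch",
                    domain=FPGADATAFLOW_DOMAIN),
]

leftovers = unconverted_nodes(nodes)
for name, op_type in leftovers:
    print(f"not converted: {name} ({op_type})")
```

With a real model, passing `model.graph.node` instead of the stand-in list should give the same kind of report; any MatMul that shows up here sits between HLS layers and will trip the partitioning assertion.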

Hi @jacopoabramo , I see that you are using the InferBinaryMatrixVectorActivation() transformation. Is your network binary? I had a brief look at the model you sent at the beginning of the conversation and it looked to me like you're using 4-bit. Is that the same model you're still using? If yes, you can try replacing that transformation with InferQuantizedMatrixVectorActivation(). You can now also use the current main branch to run your model.
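If it is unclear whether a layer counts as binary, a quick check on the weight values can help. The snippet below is a rough heuristic and not part of FINN: it treats a layer as bipolar only if every weight is exactly -1 or +1. The weight matrices are made up for the example; with a real model they would come from the MatMul initializers.

```python
def flatten(values):
    """Yield the scalar entries of an arbitrarily nested list or tuple."""
    for v in values:
        if isinstance(v, (list, tuple)):
            yield from flatten(v)
        else:
            yield v


def is_bipolar(weights):
    """True if there is at least one weight and all weights are -1 or +1."""
    flat = list(flatten(weights))
    return bool(flat) and all(w in (-1, 1) for w in flat)


# Made-up weight matrices for illustration.
binary_w = [[1, -1], [-1, -1]]
four_bit_w = [[3, -7], [0, 5]]

print(is_bipolar(binary_w))    # candidate for the binary conversion
print(is_bipolar(four_bit_w))  # needs the quantized conversion instead
```

As far as I understand, the binary transformation only picks up layers that were first turned into XNOR-popcount form, so a 4-bit MatMul is left untouched by it and later breaks the partitioning.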

Hi @auphelia , thanks for the reply. That did the trick. Now I'm having an issue because apparently Vitis HLS is not installed (which makes sense since I only installed Vivado). I'll keep the issue open just in case I find more problems.

Hello again,

I now get the following output when trying to build the model with Vitis HLS. The build code is shown below (the target is PYNQ-Z2):

from finn.util.basic import pynq_part_map

from finn.transformation.fpgadataflow.make_zynq_proj import ZynqBuild

# set the correct part map

fpga_part = pynq_part_map[target]

target_clk_ns = 10

chkpt_name = build_dir + model_name + folding + ".onnx"

model = ModelWrapper(chkpt_name)

model = model.transform(ZynqBuild(platform = target, period_ns = target_clk_ns))

chkpt_name = build_dir + model_name + post_synth + ".onnx"

model.save(chkpt_name)

showInNetron(chkpt_name)

This is what I get as traceback:

---------------------------------------------------------------------------

RemoteTraceback Traceback (most recent call last)

RemoteTraceback:

"""

Traceback (most recent call last):

File "/opt/conda/lib/python3.8/multiprocessing/pool.py", line 125, in worker

result = (True, func(*args, **kwds))

File "/opt/conda/lib/python3.8/multiprocessing/pool.py", line 48, in mapstar

return list(map(*args))

File "/home/jacopo/git/finn/src/finn/transformation/fpgadataflow/hlssynth_ip.py", line 69, in applyNodeLocal

inst.ipgen_singlenode_code()

File "/home/jacopo/git/finn/src/finn/custom_op/fpgadataflow/hlscustomop.py", line 339, in ipgen_singlenode_code

assert os.path.isdir(

AssertionError: IPGen failed: /tmp/finn_dev_jacopo/code_gen_ipgen_StreamingDataflowPartition_0_IODMA_0_sryta8e_/project_StreamingDataflowPartition_0_IODMA_0/sol1/impl/ip not found. Check log under /tmp/finn_dev_jacopo/code_gen_ipgen_StreamingDataflowPartition_0_IODMA_0_sryta8e_

"""

The above exception was the direct cause of the following exception:

AssertionError Traceback (most recent call last)

<ipython-input-12-bfbbda2f9c4e> in <module>

8 chkpt_name = build_dir + model_name + folding + ".onnx"

9 model = ModelWrapper(chkpt_name)

---> 10 model = model.transform(ZynqBuild(platform = target, period_ns = target_clk_ns))

11

12 chkpt_name = build_dir + model_name + post_synth + ".onnx"

/home/jacopo/git/finn/deps/qonnx/src/qonnx/core/modelwrapper.py in transform(self, transformation, make_deepcopy, cleanup)

138 model_was_changed = True

139 while model_was_changed:

--> 140 (transformed_model, model_was_changed) = transformation.apply(transformed_model)

141 if cleanup:

142 transformed_model.cleanup()

/home/jacopo/git/finn/src/finn/transformation/fpgadataflow/make_zynq_proj.py in apply(self, model)

353 PrepareIP(self.fpga_part, self.period_ns)

354 )

--> 355 kernel_model = kernel_model.transform(HLSSynthIP())

356 kernel_model = kernel_model.transform(

357 CreateStitchedIP(

/home/jacopo/git/finn/deps/qonnx/src/qonnx/core/modelwrapper.py in transform(self, transformation, make_deepcopy, cleanup)

138 model_was_changed = True

139 while model_was_changed:

--> 140 (transformed_model, model_was_changed) = transformation.apply(transformed_model)

141 if cleanup:

142 transformed_model.cleanup()

/home/jacopo/git/finn/deps/qonnx/src/qonnx/transformation/base.py in apply(self, model)

103 # Execute transformation in parallel

104 with mp.Pool(self._num_workers) as p:

--> 105 new_nodes_and_bool = p.map(self.applyNodeLocal, old_nodes, chunksize=1)

106

107 # extract nodes and check if the transformation needs to run again

/opt/conda/lib/python3.8/multiprocessing/pool.py in map(self, func, iterable, chunksize)

362 in a list that is returned.

363 '''

--> 364 return self._map_async(func, iterable, mapstar, chunksize).get()

365

366 def starmap(self, func, iterable, chunksize=None):

/opt/conda/lib/python3.8/multiprocessing/pool.py in get(self, timeout)

769 return self._value

770 else:

--> 771 raise self._value

772

773 def _set(self, i, obj):

AssertionError: IPGen failed: /tmp/finn_dev_jacopo/code_gen_ipgen_StreamingDataflowPartition_0_IODMA_0_sryta8e_/project_StreamingDataflowPartition_0_IODMA_0/sol1/impl/ip not found. Check log under /tmp/finn_dev_jacopo/code_gen_ipgen_StreamingDataflowPartition_0_IODMA_0_sryta8e_

This is the log content:

****** Vitis HLS - High-Level Synthesis from C, C++ and OpenCL v2022.1 (64-bit)

**** SW Build 3526262 on Mon Apr 18 15:47:01 MDT 2022

**** IP Build 3524634 on Mon Apr 18 20:55:01 MDT 2022

** Copyright 1986-2022 Xilinx, Inc. All Rights Reserved.

source /tools/Xilinx/Vitis_HLS/2022.1/scripts/vitis_hls/hls.tcl -notrace

INFO: [HLS 200-10] Running '/tools/Xilinx/Vitis_HLS/2022.1/bin/unwrapped/lnx64.o/vitis_hls'

/tools/Xilinx/Vitis_HLS/2022.1/tps/tcl/tcl8.5/tzdata/Europe/Dublin can't be opened.

INFO: [HLS 200-10] For user 'jacopo' on host 'finn_dev_jacopo' (Linux_x86_64 version 5.10.16.3-microsoft-standard-WSL2) on Thu Jul 21 13:35:22 +0000 2022

INFO: [HLS 200-10] On os Ubuntu 18.04.5 LTS

INFO: [HLS 200-10] In directory '/tmp/finn_dev_jacopo/code_gen_ipgen_StreamingDataflowPartition_0_IODMA_0_holym8ev'

Sourcing Tcl script '/tmp/finn_dev_jacopo/code_gen_ipgen_StreamingDataflowPartition_0_IODMA_0_holym8ev/hls_syn_StreamingDataflowPartition_0_IODMA_0.tcl'

INFO: [HLS 200-1510] Running: source /tmp/finn_dev_jacopo/code_gen_ipgen_StreamingDataflowPartition_0_IODMA_0_holym8ev/hls_syn_StreamingDataflowPartition_0_IODMA_0.tcl

HLS project: project_StreamingDataflowPartition_0_IODMA_0

HW source dir: /tmp/finn_dev_jacopo/code_gen_ipgen_StreamingDataflowPartition_0_IODMA_0_holym8ev

finn-hlslib dir: /home/jacopo/git/finn/deps/finn-hlslib

custom HLS dir: /home/jacopo/git/finn/custom_hls

INFO: [HLS 200-1510] Running: open_project project_StreamingDataflowPartition_0_IODMA_0

INFO: [HLS 200-10] Creating and opening project '/tmp/finn_dev_jacopo/code_gen_ipgen_StreamingDataflowPartition_0_IODMA_0_holym8ev/project_StreamingDataflowPartition_0_IODMA_0'.

INFO: [HLS 200-1510] Running: add_files /tmp/finn_dev_jacopo/code_gen_ipgen_StreamingDataflowPartition_0_IODMA_0_holym8ev/top_StreamingDataflowPartition_0_IODMA_0.cpp -cflags -std=c++14 -I/home/jacopo/git/finn/deps/finn-hlslib -I/home/jacopo/git/finn/custom_hls

INFO: [HLS 200-10] Adding design file '/tmp/finn_dev_jacopo/code_gen_ipgen_StreamingDataflowPartition_0_IODMA_0_holym8ev/top_StreamingDataflowPartition_0_IODMA_0.cpp' to the project

INFO: [HLS 200-1510] Running: set_top StreamingDataflowPartition_0_IODMA_0

INFO: [HLS 200-1510] Running: open_solution sol1

INFO: [HLS 200-10] Creating and opening solution '/tmp/finn_dev_jacopo/code_gen_ipgen_StreamingDataflowPartition_0_IODMA_0_holym8ev/project_StreamingDataflowPartition_0_IODMA_0/sol1'.

INFO: [HLS 200-1505] Using default flow_target 'vivado'

Resolution: For help on HLS 200-1505 see www.xilinx.com/cgi-bin/docs/rdoc?v=2022.1;t=hls+guidance;d=200-1505.html

INFO: [HLS 200-435] Setting 'open_solution -flow_target vivado' configuration: config_interface -m_axi_latency=0

INFO: [HLS 200-1510] Running: set_part xc7z020clg400-1

INFO: [HLS 200-1611] Setting target device to 'xc7z020-clg400-1'

INFO: [HLS 200-1510] Running: config_compile -disable_unroll_code_size_check -pipeline_style flp

WARNING: [XFORM 203-506] Disable code size check when do loop unroll.

WARNING: [HLS 200-643] The 'config_compile -disable_unroll_code_size_check' hidden command is deprecated and will be removed in a future release.

INFO: [HLS 200-1510] Running: config_interface -m_axi_addr64

INFO: [HLS 200-1510] Running: config_rtl -module_auto_prefix

INFO: [HLS 200-1510] Running: config_rtl -deadlock_detection none

INFO: [HLS 200-1510] Running: create_clock -period 10 -name default

INFO: [SYN 201-201] Setting up clock 'default' with a period of 10ns.

INFO: [HLS 200-1510] Running: csynth_design

INFO: [HLS 200-111] Finished File checks and directory preparation: CPU user time: 0.01 seconds. CPU system time: 0 seconds. Elapsed time: 0 seconds; current allocated memory: 1.214 GB.

INFO: [HLS 200-10] Analyzing design file '/tmp/finn_dev_jacopo/code_gen_ipgen_StreamingDataflowPartition_0_IODMA_0_holym8ev/top_StreamingDataflowPartition_0_IODMA_0.cpp' ...

WARNING: [HLS 207-5567] Invalid Directive: for current device, RAM_S2P + URAM is invalid combination for BIND_STORAGE's option 'type + impl' (/home/jacopo/git/finn/deps/finn-hlslib/slidingwindow.h:116:49)

ERROR: [HLS 207-3504] static_assert failed "" (/home/jacopo/git/finn/deps/finn-hlslib/dma.h:139:3)

INFO: [HLS 207-4235] in instantiation of function template specialization 'Mem2Stream<64, 0>' requested here (/home/jacopo/git/finn/deps/finn-hlslib/dma.h:170:7)

INFO: [HLS 207-4235] in instantiation of function template specialization 'Mem2Stream_Batch<64, 0>' requested here (/tmp/finn_dev_jacopo/code_gen_ipgen_StreamingDataflowPartition_0_IODMA_0_holym8ev/top_StreamingDataflowPartition_0_IODMA_0.cpp:25:1)

INFO: [HLS 200-111] Finished Command csynth_design CPU user time: 1.35 seconds. CPU system time: 0.07 seconds. Elapsed time: 0.71 seconds; current allocated memory: 0.867 MB.

while executing

"source /tmp/finn_dev_jacopo/code_gen_ipgen_StreamingDataflowPartition_0_IODMA_0_holym8ev/hls_syn_StreamingDataflowPartition_0_IODMA_0.tcl"

invoked from within

"hls::main /tmp/finn_dev_jacopo/code_gen_ipgen_StreamingDataflowPartition_0_IODMA_0_holym8ev/hls_syn_StreamingDataflowPartition_0_IODMA_0.tcl"

("uplevel" body line 1)

invoked from within

"uplevel 1 hls::main {*}$newargs"

(procedure "hls_proc" line 16)

invoked from within

"hls_proc [info nameofexecutable] $argv"

INFO: [HLS 200-112] Total CPU user time: 2.86 seconds. Total CPU system time: 0.48 seconds. Total elapsed time: 1.94 seconds; peak allocated memory: 1.215 GB.

INFO: [Common 17-206] Exiting vitis_hls at Thu Jul 21 13:35:24 2022...

I installed Vitis version 2022.1.

WARNING: [HLS 207-5567] Invalid Directive: for current device, RAM_S2P + URAM is invalid combination for BIND_STORAGE's option 'type + impl' (/home/jacopo/git/finn/deps/finn-hlslib/slidingwindow.h:116:49)

From this warning it looks like URAM is selected. If I see it correctly, your target device is a Pynq-Z2. That board doesn't have URAM, so you will need to select a different ram_style for that node.
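As a rough sketch of how such a setting could be cleaned up before the build, the snippet below scans a folding configuration for nodes that request `ram_style` "ultra" and switches them to "auto". It assumes the configuration is a dict mapping node names to attribute dicts, which is how I understand FINN's folding config JSON to be laid out; the node names and values are hypothetical.

```python
import copy


def replace_ultra_ram(folding_cfg, fallback="auto"):
    """Return a copy of the config with ram_style 'ultra' replaced, plus the affected nodes."""
    fixed = copy.deepcopy(folding_cfg)
    changed = []
    for node_name, attrs in fixed.items():
        if isinstance(attrs, dict) and attrs.get("ram_style") == "ultra":
            attrs["ram_style"] = fallback
            changed.append(node_name)
    return fixed, changed


# Hypothetical folding configuration copied from an example for a larger device.
folding_cfg = {
    "Defaults": {},
    "MatrixVectorActivation_0": {"PE": 2, "SIMD": 4, "ram_style": "ultra"},
    "MatrixVectorActivation_1": {"PE": 1, "SIMD": 2, "ram_style": "block"},
}

fixed_cfg, changed = replace_ultra_ram(folding_cfg)
print(changed)
```

The original dict is left untouched, so the two versions can be compared before deciding which one to apply.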


Apparently the entire folding step I copied from the original source was incorrect; I have now removed it and synthesis seems to be successful. Where can I look in the documentation for up-to-date information on how to properly select the folding parameters?

EDIT: Is there any way to increase or control the number of processes involved in the synthesis step?

You can find documentation about the folding factors here: https://github.com/Xilinx/finn/blob/github-pages/docs/finn-sheduling-and-folding.pptx
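As a small worked example of the folding arithmetic, under the usual constraint that SIMD must divide the layer's input width (MW) and PE must divide its output width (MH), the sketch below enumerates the legal (SIMD, PE) pairs for one fully connected layer together with a rough cycle estimate of (MW / SIMD) * (MH / PE) per input. The layer size is hypothetical (4 inputs, as in Iris, and 8 neurons), and the estimate ignores pipeline overheads.

```python
def valid_foldings(mw, mh):
    """List (SIMD, PE, estimated_cycles) for all SIMD dividing mw and PE dividing mh."""
    options = []
    for simd in range(1, mw + 1):
        if mw % simd != 0:
            continue
        for pe in range(1, mh + 1):
            if mh % pe != 0:
                continue
            cycles = (mw // simd) * (mh // pe)
            options.append((simd, pe, cycles))
    return options


# Hypothetical layer: 4 input features, 8 output neurons.
options = valid_foldings(mw=4, mh=8)
for simd, pe, cycles in options:
    print(f"SIMD={simd} PE={pe} -> ~{cycles} cycles per input")
```

Larger SIMD and PE values reduce the cycle count at the cost of more hardware, so for a network this small even modest values are likely sufficient.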

Some of the FINN transformations (e.g. HLSSynthIP) can be parallelized using the environment variable NUM_DEFAULT_WORKERS (https://finn.readthedocs.io/en/latest/getting_started.html#environment-variables); the default is 4.
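A minimal sketch of setting the variable from Python is shown below. The helper name is made up, and it assumes the variable is read when the transformation is created, so it has to be set before calling the build step; if that assumption does not hold in your setup, export the variable in the shell before launching the FINN container instead.

```python
import os


def set_num_workers(requested):
    """Set NUM_DEFAULT_WORKERS, capped at the number of CPUs the OS reports."""
    available = os.cpu_count() or 1
    workers = max(1, min(requested, available))
    os.environ["NUM_DEFAULT_WORKERS"] = str(workers)
    return workers


# Ask for 8 workers; fewer are used if the machine has fewer CPUs.
workers = set_num_workers(8)
print(f"NUM_DEFAULT_WORKERS={os.environ['NUM_DEFAULT_WORKERS']}")
```

Each worker runs its own HLS synthesis process, so memory use grows with the worker count as well.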

Closing issue as it is now solved.