openmixup


CAIRI Supervised, Semi- and Self-Supervised Visual Representation Learning Toolbox and Benchmark

OpenMixup

📘Documentation | 🛠️Installation | 🚀Model Zoo | 👀Awesome Mixup | 🔍Awesome MIM | 🆕News

Introduction

The main branch works with PyTorch 1.8 (required by some self-supervised methods) or higher (we recommend PyTorch 1.10). You can still use PyTorch 1.6 for supervised classification methods.
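As a rough illustration of the version requirements above, the sketch below maps a PyTorch version string to the method families it can run. The helper names (`parse_major_minor`, `supported_method_families`) are hypothetical and not part of OpenMixup, and the two-tier mapping simplifies the sentence above, since only some self-supervised methods need PyTorch 1.8. Comparing integer tuples avoids the pitfall that the string "1.10" sorts before "1.8".

```python
import re


def parse_major_minor(version):
    """Extract (major, minor) as integers from a string such as '1.10.0+cu113'."""
    match = re.match(r"(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    return int(match.group(1)), int(match.group(2))


def supported_method_families(torch_version):
    """Return the method families usable with this PyTorch version.

    Assumption: a simplified reading of the README, with 1.6 as the floor
    for supervised classification and 1.8 for self-supervised methods.
    """
    version = parse_major_minor(torch_version)
    families = []
    if version >= (1, 6):
        families.append("supervised classification")
    if version >= (1, 8):
        families.append("self-supervised pre-training")
    return families


print(supported_method_families("1.10.0+cu113"))
```

In practice you would pass `torch.__version__` as the argument.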

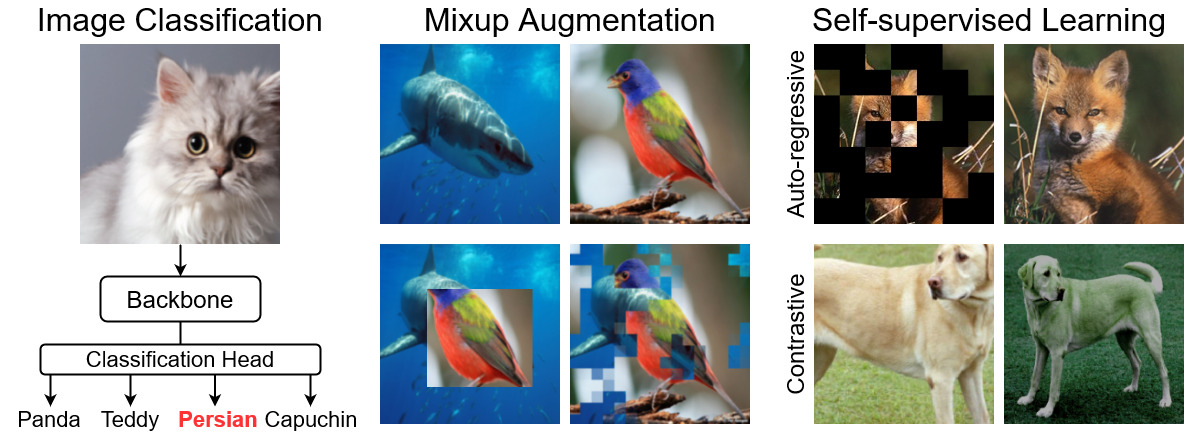

OpenMixup is an open-source, PyTorch-based toolbox for supervised, self-supervised, and semi-supervised visual representation learning, with a particular focus on mixup-related methods.

Major Features

- Modular Design. OpenMixup follows a code architecture similar to that of OpenMMLab projects, decomposing the framework into components so that users can build a customized model by combining different modules. OpenMixup can also be ported to OpenMMLab projects (e.g., MMSelfSup).

- All in One. OpenMixup provides popular backbones, mixup methods, and semi-supervised and self-supervised algorithms. Users can perform image classification (CNN and Transformer) and self-supervised pre-training (contrastive and autoregressive) under the same setting.

- Standard Benchmarks. OpenMixup supports standard benchmarks for image classification, mixup classification, and self-supervised evaluation, and provides smooth evaluation on downstream tasks with open-source projects (e.g., object detection and segmentation with Detectron2 and MMSegmentation).
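To make the modular design concrete, here is a minimal sketch of the registry pattern that config-driven frameworks in this style commonly use: components register themselves by name, and a model is assembled from plain dictionaries. This is not OpenMixup's or MMCV's actual implementation. The `Registry` class, `ToyBackbone`, `ToyClsHead`, and the config keys are hypothetical names chosen for illustration.

```python
class Registry:
    """Maps class names to classes so components can be built from plain dicts."""

    def __init__(self, name):
        self.name = name
        self._modules = {}

    def register(self, cls):
        # Used as a decorator: store the class under its own name.
        self._modules[cls.__name__] = cls
        return cls

    def build(self, cfg):
        # Copy so the caller's config dict is not modified.
        args = dict(cfg)
        type_name = args.pop("type")
        if type_name not in self._modules:
            raise KeyError(f"{type_name} is not registered in {self.name}")
        return self._modules[type_name](**args)


BACKBONES = Registry("backbone")
HEADS = Registry("head")


@BACKBONES.register
class ToyBackbone:  # hypothetical component
    def __init__(self, depth=18):
        self.depth = depth


@HEADS.register
class ToyClsHead:  # hypothetical component
    def __init__(self, num_classes=1000):
        self.num_classes = num_classes


# A model description is plain data; swapping a module means editing one entry.
model_cfg = dict(
    backbone=dict(type="ToyBackbone", depth=50),
    head=dict(type="ToyClsHead", num_classes=100),
)
backbone = BACKBONES.build(model_cfg["backbone"])
head = HEADS.build(model_cfg["head"])
print(type(backbone).__name__, backbone.depth, type(head).__name__, head.num_classes)
```

Because the config is plain data, the same backbone entry can be reused across a classification config and a pre-training config without touching the component code.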

What's New

[2020-08-19] Weights and logs for mixup benchmarks are released.

[2020-07-30] OpenMixup v0.2.5 is released (issue #10).

Installation

Quick installation steps for development:

    conda create -n openmixup python=3.8 pytorch=1.10 cudatoolkit=11.3 torchvision -c pytorch -y
    conda activate openmixup
    pip3 install openmim
    mim install mmcv-full
    git clone https://github.com/Westlake-AI/openmixup.git
    cd openmixup
    python setup.py develop

Please refer to install.md for more detailed installation and dataset preparation.

Getting Started

Please see get_started.md for the basic usage of OpenMixup. To start multi-GPU training with a given CONFIG_FILE, use the following script:

bash tools/dist_train.sh ${CONFIG_FILE} ${GPUS} [optional arguments]
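If you launch many runs from Python, the command above can be assembled programmatically. The sketch below only builds the argument list in the order shown above. The function name `build_dist_train_command` and the config path `configs/my_experiment.py` are placeholders, not files or APIs shipped with OpenMixup.

```python
import shlex


def build_dist_train_command(config_file, gpus, extra_args=()):
    """Assemble the dist_train.sh invocation as an argument list."""
    if gpus < 1:
        raise ValueError("gpus must be at least 1")
    # Same order as the README: script, config file, GPU count, optional arguments.
    return ["bash", "tools/dist_train.sh", config_file, str(gpus), *extra_args]


# Hypothetical config path, used only for illustration.
command = build_dist_train_command("configs/my_experiment.py", 4)
print(shlex.join(command))
```

The resulting list can be passed to `subprocess.run(command, check=True)` from the repository root.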

Then see the Tutorials for more technical details:

- config files

- add new dataset

- data pipeline

- add new modules

- customize schedules

- customize runtime

- benchmarks

Overview of Model Zoo

Please refer to Model Zoos for various backbones, mixup methods, and self-supervised algorithms. We also provide a paper list, Awesome Mixups, for your reference. Checkpoints and training logs will be updated soon!

- Backbone architectures for supervised image classification on ImageNet.

Currently supported backbones

- [x] VGG (ICLR'2015) [config]

- [x] ResNet (CVPR'2016) [config]

- [x] ResNeXt (CVPR'2017) [config]

- [x] SE-ResNet (CVPR'2018) [config]

- [x] SE-ResNeXt (CVPR'2018) [config]

- [x] ShuffleNetV2 (ECCV'2018) [config]

- [x] MobileNetV2 (CVPR'2018) [config]

- [x] MobileNetV3 (ICCV'2019)

- [x] EfficientNet (ICML'2019) [config]

- [x] Swin-Transformer (ICCV'2021) [config]

- [x] RepVGG (CVPR'2021)

- [x] Vision-Transformer (ICLR'2021) [config]

- [x] MLP-Mixer (NIPS'2021) [config]

- [x] DeiT (ICML'2021) [config]

- [x] ConvMixer (OpenReview'2021) [config]

- [x] UniFormer (ICLR'2022) [config]

- [x] PoolFormer (CVPR'2022) [config]

- [x] ConvNeXt (CVPR'2022) [config]

- [x] VAN (ArXiv'2022) [config]

- [x] LITv2 (ArXiv'2022) [config]

- Mixup methods for supervised image classification.

Currently supported mixup methods

- [x] Mixup (ICLR'2018)

- [x] CutMix (ICCV'2019)

- [x] ManifoldMix (ICML'2019)

- [x] FMix (ArXiv'2020)

- [x] AttentiveMix (ICASSP'2020)

- [x] SmoothMix (CVPRW'2020)

- [x] SaliencyMix (ICLR'2021)

- [x] PuzzleMix (ICML'2020)

- [x] GridMix (Pattern Recognition'2021)

- [x] ResizeMix (ArXiv'2020)

- [x] AutoMix (ECCV'2022) [config]

- [x] SAMix (ArXiv'2021) [config]
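For readers new to this family of methods, the sketch below shows the core arithmetic of the two earliest entries in the list, written in plain Python rather than PyTorch so that it runs without dependencies. Mixup linearly interpolates two inputs and their one-hot labels with a weight `lam` drawn from a Beta(alpha, alpha) distribution. CutMix instead pastes a rectangle from one image into another and sets the label weight from the area that is kept. The function names are illustrative and do not correspond to OpenMixup's API.

```python
import math
import random


def sample_lambda(alpha=1.0, rng=random):
    """Draw the mixing ratio from Beta(alpha, alpha)."""
    return rng.betavariate(alpha, alpha)


def mixup_pair(x_a, x_b, y_a, y_b, lam):
    """Mixup: interpolate two flattened inputs and their one-hot labels."""
    mixed_x = [lam * a + (1.0 - lam) * b for a, b in zip(x_a, x_b)]
    mixed_y = [lam * a + (1.0 - lam) * b for a, b in zip(y_a, y_b)]
    return mixed_x, mixed_y


def cutmix_box(height, width, lam, center_y, center_x):
    """CutMix: box covering roughly (1 - lam) of the image, clipped to its borders.

    Returns the box (y1, y2, x1, x2) and the label weight recomputed from the
    clipped area, which is the weight of the image that keeps its pixels.
    """
    cut_ratio = math.sqrt(1.0 - lam)
    cut_h = int(height * cut_ratio)
    cut_w = int(width * cut_ratio)
    y1 = max(center_y - cut_h // 2, 0)
    y2 = min(center_y + cut_h // 2, height)
    x1 = max(center_x - cut_w // 2, 0)
    x2 = min(center_x + cut_w // 2, width)
    adjusted_lam = 1.0 - (y2 - y1) * (x2 - x1) / (height * width)
    return (y1, y2, x1, x2), adjusted_lam


mixed_x, mixed_y = mixup_pair([0.0, 0.0], [1.0, 1.0], [1.0, 0.0], [0.0, 1.0], lam=0.25)
box, box_lam = cutmix_box(32, 32, lam=0.75, center_y=16, center_x=16)
print(mixed_x, mixed_y, box, box_lam)
```

Several of the later methods in the list replace the random interpolation weight or the random box with a choice guided by saliency or by a learned module, while the label-mixing step keeps the same form.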

Currently supported datasets for mixups

- [x] ImageNet [download] [config]

- [x] CIFAR-10 [download] [config]

- [x] CIFAR-100 [download] [config]

- [x] Tiny-ImageNet [download] [config]

- [x] CUB-200-2011 [download] [config]

- [x] FGVC-Aircraft [download] [config]

- [x] StanfordCars [download]

- [x] Places205 [download] [config]

- [x] iNaturalist-2017 [download] [config]

- [x] iNaturalist-2018 [download] [config]

- Self-supervised algorithms for visual representation.

Currently supported self-supervised algorithms

- [x] Relative Location (ICCV'2015) [config]

- [x] Rotation Prediction (ICLR'2018) [config]

- [x] DeepCluster (ECCV'2018) [config]

- [x] NPID (CVPR'2018) [config]

- [x] ODC (CVPR'2020) [config]

- [x] MoCoV1 (CVPR'2020) [config]

- [x] SimCLR (ICML'2020) [config]

- [x] MoCoV2 (ArXiv'2020) [config]

- [x] BYOL (NIPS'2020) [config]

- [x] SwAV (NIPS'2020) [config]

- [x] DenseCL (CVPR'2021) [config]

- [x] SimSiam (CVPR'2021) [config]

- [x] Barlow Twins (ICML'2021) [config]

- [x] MoCoV3 (ICCV'2021) [config]

- [x] MAE (CVPR'2022) [config]

- [x] SimMIM (CVPR'2022) [config]

- [x] CAE (ArXiv'2022)

- [x] A2MIM (ArXiv'2022) [config]
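Several of the contrastive methods in this list (for example MoCo and SimCLR) optimize an InfoNCE-style objective: the similarity between two views of the same image should be high relative to similarities with other images. The following sketch computes that loss for a single query from precomputed similarity scores. It is written in plain Python for illustration, the function name and default temperature are assumptions, and it does not reproduce any specific method's implementation in OpenMixup.

```python
import math


def info_nce_loss(pos_sim, neg_sims, temperature=0.2):
    """Cross-entropy of picking the positive among one positive and N negatives."""
    logits = [pos_sim / temperature] + [s / temperature for s in neg_sims]
    # Log-sum-exp with the maximum subtracted for numerical stability.
    max_logit = max(logits)
    log_sum_exp = max_logit + math.log(sum(math.exp(l - max_logit) for l in logits))
    return log_sum_exp - logits[0]


# A well-separated positive gives a lower loss than a poorly separated one.
easy = info_nce_loss(0.9, [0.1, 0.0, -0.2])
hard = info_nce_loss(0.1, [0.9, 0.8, 0.7])
print(easy < hard)
```

When the positive is indistinguishable from the negatives, the loss equals the log of the number of candidates, which is a handy sanity check for a freshly initialized model.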

Change Log

Please refer to changelog.md for details and release history.

License

This project is released under the Apache 2.0 license.

Acknowledgement

- OpenMixup is an open-source project for mixup methods created by researchers at CAIRI AI Lab. We encourage researchers interested in visual representation learning and mixup methods to contribute to OpenMixup!

- This repo borrows the architecture design and part of the code from MMSelfSup and MMClassification.

Citation

If you find this project useful in your research, please consider citing our repo:

    @misc{2022openmixup,
        title = {{OpenMixup}: Open Mixup Toolbox and Benchmark for Visual Representation Learning},
        author = {Li, Siyuan and Liu, Zicheng and Wu, Di and Li, Stan Z.},
        howpublished = {\url{https://github.com/Westlake-AI/openmixup}},
        year = {2022}
    }

Contributors

For now, the direct contributors include: Siyuan Li (@Lupin1998), Zicheng Liu (@pone7), and Di Wu (@wudi-bu). We thank contributors from MMSelfSup and MMClassification.

Contact

This repo is currently maintained by Siyuan Li and Zicheng Liu.