RPi 4 Kinect: floor detected as distant points

I'm trying to get ORB-SLAM3 working on a Raspberry Pi with a Kinect 360 sensor (without ROS).

When I try using this as a location:

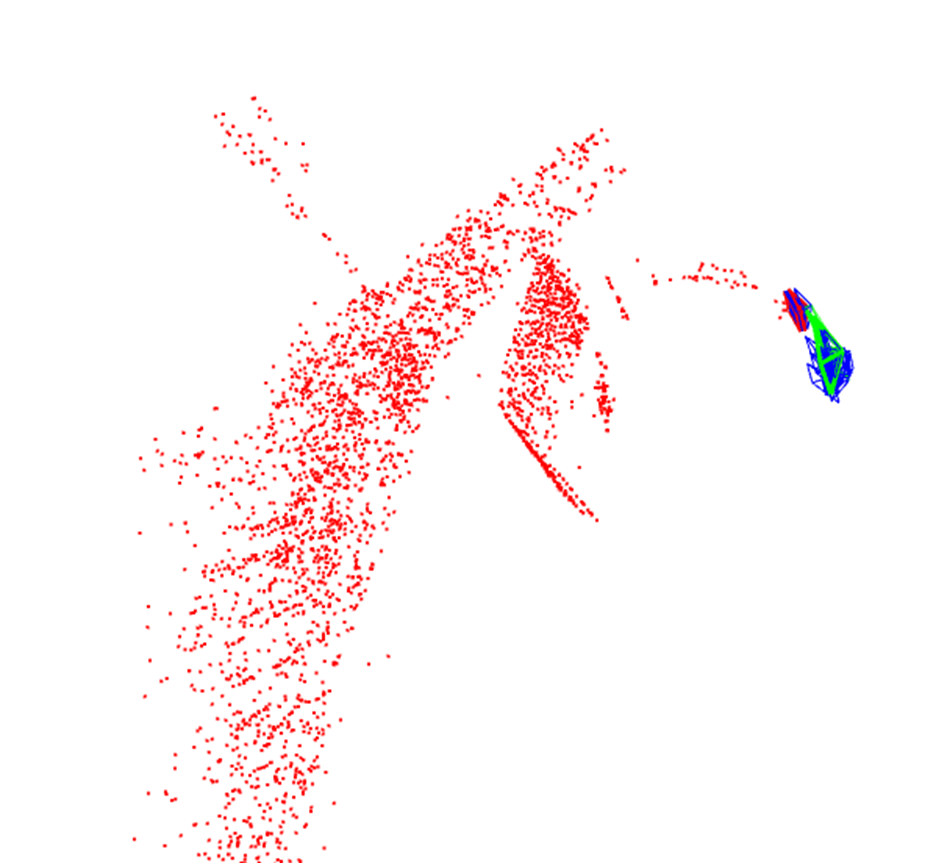

It picks it up and finds points; however, it's finding a lot of points on the floor, so looking at the map I get:

The outer cloud of points appears to be the floor, which should be in the foreground. The central cloud is the cardboard wall.

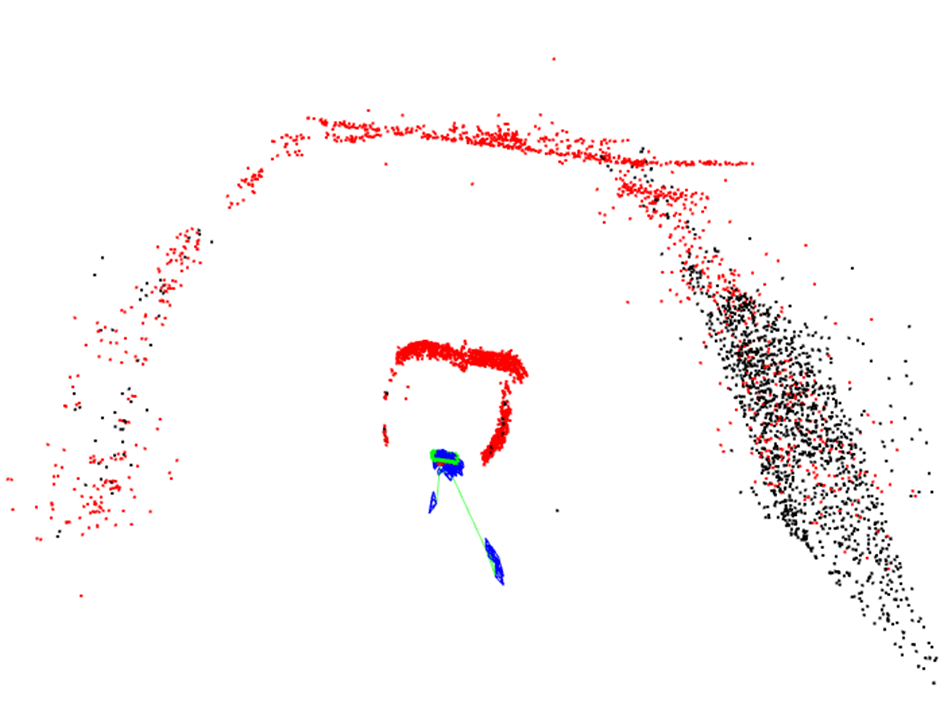
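One thing worth ruling out for the misplaced floor points: in RGB-D mode, ORB-SLAM looks up each feature's depth at the same pixel coordinates in the depth image, so it expects depth that is already registered to the RGB camera. If the native capture passes the Kinect's unregistered IR-camera depth (an assumption; I haven't confirmed what it delivers), every lookup samples a slightly different ray. The sketch below shows what registration does for a single pixel: back-project with the depth-camera intrinsics, move the point into the RGB frame, and re-project. `Intrinsics`, `depth_pixel_to_rgb_pixel` and all numeric values are hypothetical placeholders, not a calibration of this Kinect.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Pinhole intrinsics (hypothetical values in the example, not a real calibration)."""
    fx: float
    fy: float
    cx: float
    cy: float


def depth_pixel_to_rgb_pixel(u, v, z, depth_k, rgb_k, rotation, translation):
    """Map depth-image pixel (u, v) with metric depth z into the RGB image.

    rotation (3x3 nested list) and translation (3 values, metres) take points
    from the depth (IR) camera frame into the RGB camera frame.
    Returns (u_rgb, v_rgb, z_rgb).
    """
    # Back-project the depth pixel to a 3D point in the IR camera frame.
    x = (u - depth_k.cx) * z / depth_k.fx
    y = (v - depth_k.cy) * z / depth_k.fy
    p = (x, y, z)
    # Rigidly transform the point into the RGB camera frame.
    q = [sum(rotation[r][c] * p[c] for c in range(3)) + translation[r] for r in range(3)]
    # Project into the RGB image using the RGB intrinsics.
    u_rgb = rgb_k.fx * q[0] / q[2] + rgb_k.cx
    v_rgb = rgb_k.fy * q[1] / q[2] + rgb_k.cy
    return u_rgb, v_rgb, q[2]
```

With identical intrinsics and a purely sideways 2.5 cm offset (hypothetical numbers), a pixel at 1 m shifts by about 13 px in the RGB image, and the shift grows as depth decreases, so near surfaces such as the floor are the most affected.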

I'm also getting issues where the estimated camera location jumps:

This throws things out, since the camera was only rotated in place.
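Since the camera was only rotated in place, its estimated centre should barely move between poses. A quick way to locate the jumps is to save a trajectory in TUM format (ORB-SLAM3's `SaveKeyFrameTrajectoryTUM` writes one `timestamp tx ty tz qx qy qz qw` line per keyframe) and flag consecutive positions that are far apart. `find_translation_jumps` and the threshold are my own illustration; in monocular mode the map scale is arbitrary, so the threshold is in map units rather than metres.

```python
import math


def find_translation_jumps(tum_lines, max_step):
    """Return (t_prev, t_curr, step) for consecutive poses whose positions
    are more than max_step apart.

    Each non-comment line is 'timestamp tx ty tz qx qy qz qw'; only the
    timestamp and position are used here.
    """
    poses = []
    for line in tum_lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and header comments
        t, x, y, z = (float(f) for f in line.split()[:4])
        poses.append((t, x, y, z))

    jumps = []
    for (t0, x0, y0, z0), (t1, x1, y1, z1) in zip(poses, poses[1:]):
        step = math.dist((x0, y0, z0), (x1, y1, z1))
        if step > max_step:
            jumps.append((t0, t1, step))
    return jumps
```

Matching the flagged timestamps against what the map looked like at those moments should show whether the jumps coincide with, for example, the extra map-merge or loop-closing threads running.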

I get pretty much the same results in both RGBD and MONOCULAR mode.

I managed to get it working (very slowly) in ROS, and this picks up the depth correctly.
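A possible reason the ROS path gets depth right: the ROS Kinect drivers publish depth in metric units, whereas libfreenect's raw 11-bit modes return disparity-like codes that are not millimetres (newer libfreenect versions also have `FREENECT_DEPTH_MM` and `FREENECT_DEPTH_REGISTERED` modes that output millimetres directly). Whether the native path here uses a raw mode is an assumption. If it does, a conversion along these lines would be needed; the coefficients are the empirical fit published on the OpenKinect wiki and only approximate any particular unit. The function names are hypothetical.

```python
RAW_INVALID = 2047  # 11-bit value the Kinect 360 reports when it has no reading


def raw_disparity_to_metres(raw):
    """Approximate metric depth for one raw 11-bit Kinect 360 value.

    Empirical OpenKinect-wiki fit, not a calibration of this sensor.
    Returns 0.0 ("no depth") for invalid or out-of-range readings.
    """
    if raw >= RAW_INVALID:
        return 0.0
    denom = raw * -0.0030711016 + 3.3309495161
    if denom <= 0:
        return 0.0  # the fit breaks down beyond roughly raw 1084
    return 1.0 / denom


def raw_to_depth_mm(raw):
    """Convert one raw value to integer millimetres (0 = invalid)."""
    return int(round(raw_disparity_to_metres(raw) * 1000.0))


def convert_depth_image(raw_rows):
    """Convert a 2D list of raw values to a 2D list of millimetre values."""
    return [[raw_to_depth_mm(r) for r in row] for row in raw_rows]
```

Note that the mapping is strongly non-linear: treating raw codes as if they were millimetres would place a surface at raw 600 at 0.6 m instead of roughly 0.67 m, and the error grows quickly with distance.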

Using the camera through ROS gives me correct depth data, but at a rate of 1 frame every 4 seconds. Using ORB-SLAM3 natively gives sub-second responses, but the depth data is messed up. Both use the same kinect.yaml configuration, derived from one I found for ORB_SLAM2.
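Because both paths share one `kinect.yaml`, its depth scale can only be right for both if they deliver depth in the same units. ORB-SLAM's RGB-D front end scales each depth pixel by `1 / DepthMapFactor` to get metres, so the factor must match what the capture code writes: 1000 for 16-bit millimetres, 5000 for TUM-dataset-style images. The helpers below are a hypothetical sanity check: compute the depth ORB-SLAM will see for a stored value, and estimate the factor from a flat target at a tape-measured distance.

```python
def orbslam_depth_metres(stored_value, depth_map_factor):
    """Depth in metres that ORB-SLAM will use for one stored depth pixel."""
    # The RGB-D front end scales the depth image by 1 / DepthMapFactor.
    return stored_value / depth_map_factor


def estimate_depth_map_factor(stored_value, measured_distance_m):
    """Factor that would make stored_value read as measured_distance_m.

    Sample stored_value near the image centre while the sensor faces a flat
    wall at a tape-measured distance, then compare with kinect.yaml.
    """
    if measured_distance_m <= 0:
        raise ValueError("measured distance must be positive")
    return stored_value / measured_distance_m
```

For example, a 16-bit millimetre image read with a TUM-style factor of 5000 would make every point appear at one fifth of its true distance; running the estimate once on the native stream and once on the ROS stream shows whether they need different factors.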

I'm having lots of issues with ORB-SLAM3 on the Raspberry Pi 4:

- Using a Kinect, it only gives reasonable data when run through ROS1.
- If you have an existing map, it only localizes itself on startup if you start in the same place where you started the original map.
- The extra threads for map merging and loop checking take so much time that the robot loses localisation while they are running.

ROS is currently getting 7 FPS from the camera, but ORB-SLAM3 is only processing about 1 frame every 3 seconds, dropping to 1 frame every 10-20 seconds while the extra threads are busy.
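At 7 FPS in and one frame every 3 seconds out, about 7 × 3 = 21 frames arrive for every frame tracked. If those frames are queued rather than dropped, the tracker works through an ever-growing backlog of stale images; I haven't checked what queue sizes the ORB-SLAM3 ROS example uses, so this is only something to verify. A common pattern is a single-slot buffer that always hands the tracker the newest frame. `LatestFrameSlot` below is a hypothetical sketch of that pattern, not part of ORB-SLAM3 or ROS.

```python
import threading


class LatestFrameSlot:
    """Single-slot buffer: the producer overwrites, the consumer gets the newest frame.

    Frames that arrive while the consumer is busy are dropped instead of
    queued, so a slow tracker never processes a backlog of stale images.
    """

    def __init__(self):
        self._cond = threading.Condition()
        self._frame = None
        self.dropped = 0  # frames overwritten before anyone consumed them

    def put(self, frame):
        with self._cond:
            if self._frame is not None:
                self.dropped += 1  # previous frame was never consumed
            self._frame = frame
            self._cond.notify()

    def get(self, timeout=None):
        """Block until a frame is available; return None on timeout."""
        with self._cond:
            if not self._cond.wait_for(lambda: self._frame is not None, timeout):
                return None
            frame, self._frame = self._frame, None
            return frame
```

The capture callback calls `put` for every frame and the tracking loop calls `get`. This keeps latency bounded, but it does not shrink the large motion between consecutive tracked frames when tracking only manages one frame every few seconds.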