Error when using negative embeddings in a chance block in Automatic1111's web UI

I'm trying to run this prompt:

positive: solo, adult

negative: [chance 50]NG_DeepNegative_V1_75T, stretched[/chance]

When I do so, I encounter the following error, and no images are generated:

Data shape for DDIM sampling is (3, 4, 64, 64), eta 0.0

Running DDIM Sampling with 20 timesteps

DDIM Sampler: 0%| | 0/20 [00:00<?, ?it/s]

Error completing request

Arguments: ('task(r15arh0wq0a7kc4)', 'solo, adult', '[chance 50]NG_DeepNegative_V1_75T, stretched[/chance]', [], 20, 17, False, False, 1, 3, 6.5, -1.0, -1.0, 0, 0, 0, False, 512, 512, False, 0.55, 1.5, 'Latent', 0, 0, 0, [], 0, '', '', True, -1.0, False, False, 'positive', 'comma', 0, False, False, '', 1, '', 0, '', 0, '', True, False, False, False, 0) {}

Traceback (most recent call last):

File "/home/foggy/stable-diffusion-webui/modules/call_queue.py", line 56, in f

res = list(func(*args, **kwargs))

File "/home/foggy/stable-diffusion-webui/modules/call_queue.py", line 37, in f

res = func(*args, **kwargs)

File "/home/foggy/stable-diffusion-webui/modules/txt2img.py", line 56, in txt2img

processed = process_images(p)

File "/home/foggy/stable-diffusion-webui/modules/processing.py", line 486, in process_images

res = process_images_inner(p)

File "/home/foggy/stable-diffusion-webui/modules/processing.py", line 628, in process_images_inner

samples_ddim = p.sample(conditioning=c, unconditional_conditioning=uc, seeds=seeds, subseeds=subseeds, subseed_strength=p.subseed_strength, prompts=prompts)

File "/home/foggy/stable-diffusion-webui/modules/processing.py", line 828, in sample

samples = self.sampler.sample(self, x, conditioning, unconditional_conditioning, image_conditioning=self.txt2img_image_conditioning(x))

File "/home/foggy/stable-diffusion-webui/modules/sd_samplers_compvis.py", line 158, in sample

samples_ddim = self.launch_sampling(steps, lambda: self.sampler.sample(S=steps, conditioning=conditioning, batch_size=int(x.shape[0]), shape=x[0].shape, verbose=False, unconditional_guidance_scale=p.cfg_scale, unconditional_conditioning=unconditional_conditioning, x_T=x, eta=self.eta)[0])

File "/home/foggy/stable-diffusion-webui/modules/sd_samplers_compvis.py", line 43, in launch_sampling

return func()

File "/home/foggy/stable-diffusion-webui/modules/sd_samplers_compvis.py", line 158, in <lambda>

samples_ddim = self.launch_sampling(steps, lambda: self.sampler.sample(S=steps, conditioning=conditioning, batch_size=int(x.shape[0]), shape=x[0].shape, verbose=False, unconditional_guidance_scale=p.cfg_scale, unconditional_conditioning=unconditional_conditioning, x_T=x, eta=self.eta)[0])

File "/home/foggy/stable-diffusion-webui/venv/lib/python3.9/site-packages/torch/autograd/grad_mode.py", line 27, in decorate_context

return func(*args, **kwargs)

File "/home/foggy/stable-diffusion-webui/repositories/stable-diffusion-stability-ai/ldm/models/diffusion/ddim.py", line 103, in sample

samples, intermediates = self.ddim_sampling(conditioning, size,

File "/home/foggy/stable-diffusion-webui/venv/lib/python3.9/site-packages/torch/autograd/grad_mode.py", line 27, in decorate_context

return func(*args, **kwargs)

File "/home/foggy/stable-diffusion-webui/repositories/stable-diffusion-stability-ai/ldm/models/diffusion/ddim.py", line 163, in ddim_sampling

outs = self.p_sample_ddim(img, cond, ts, index=index, use_original_steps=ddim_use_original_steps,

File "/home/foggy/stable-diffusion-webui/modules/sd_samplers_compvis.py", line 62, in p_sample_ddim_hook

unconditional_conditioning = prompt_parser.reconstruct_cond_batch(unconditional_conditioning, self.step)

File "/home/foggy/stable-diffusion-webui/modules/prompt_parser.py", line 223, in reconstruct_cond_batch

res[i] = cond_schedule[target_index].cond

RuntimeError: The expanded size of the tensor (154) must match the existing size (77) at non-singleton dimension 0. Target sizes: [154, 768]. Tensor sizes: [77, 768]

The negative embedding used can be found on Civitai.

Similar errors occur regardless of image size, sampler, or use of a VAE or hypernetwork. The error above occurred while using the Anything v3 f32 pruned model, but it's not dependent on the model either.

Some negative prompts change the final line of the error to this:

RuntimeError: The expanded size of the tensor (231) must match the existing size (77) at non-singleton dimension 0. Target sizes: [231, 768]. Tensor sizes: [77, 768]

I am not able to reproduce that second case at the moment.
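Both reported sizes are whole multiples of 77: 154 = 2 × 77 and 231 = 3 × 77. One possible reading, which is an assumption and not something confirmed in this thread, is that the web UI encodes prompts in chunks of 77 rows (75 prompt tokens plus a start and an end token). A negative prompt that overflows one chunk would then yield a taller tensor than an empty one. The sketch below only illustrates that arithmetic; `CHUNK_TOKENS`, `CHUNK_ROWS`, and `cond_rows` are hypothetical names, and the token counts are invented examples.

```python
import math

# Assumed chunking rule (not taken from the thread): each chunk holds
# 75 prompt tokens and is padded to 77 rows with start/end tokens.
CHUNK_TOKENS = 75
CHUNK_ROWS = 77


def cond_rows(token_count):
    """Rows in the conditioning tensor for a prompt with token_count tokens."""
    # Even an empty prompt occupies one full chunk.
    chunks = max(1, math.ceil(token_count / CHUNK_TOKENS))
    return chunks * CHUNK_ROWS


# Hypothetical token counts: an empty negative prompt, one that slightly
# overflows a single chunk, and one that overflows two chunks.
for tokens in (0, 78, 160):
    print(tokens, cond_rows(tokens))
# prints:
# 0 77
# 78 154
# 160 231
```

If that reading is right, the error would arise when one batch mixes a 77-row negative prompt with a 154-row or 231-row one.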

The argument to chance appears to be a factor. I cannot reproduce the issue with either [chance 1] or [chance 99].
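The data shape in the log, (3, 4, 64, 64), shows a batch of 3 images. If each image in the batch evaluates [chance] independently (an assumption; nothing in this thread confirms it), the failure would need at least one image to keep the negative prompt and at least one to drop it. The sketch below estimates how often a batch is mixed in that way; `mixed_batch_probability` is a hypothetical helper, not part of Unprompted or the web UI.

```python
def mixed_batch_probability(chance_percent, batch_size):
    """Probability that a batch contains both kept and dropped outcomes.

    Assumes every image rolls the chance independently (hypothetical).
    """
    p = chance_percent / 100.0
    all_kept = p ** batch_size              # every image keeps the block
    all_dropped = (1.0 - p) ** batch_size   # every image drops the block
    return 1.0 - all_kept - all_dropped


for percent in (1, 50, 99):
    print(percent, round(mixed_batch_probability(percent, 3), 4))
# prints:
# 1 0.0297
# 50 0.75
# 99 0.0297
```

Under that assumption, a mixed batch would appear in about 75% of runs at [chance 50] but only about 3% of runs at [chance 1] or [chance 99], which would be consistent with the observation above.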

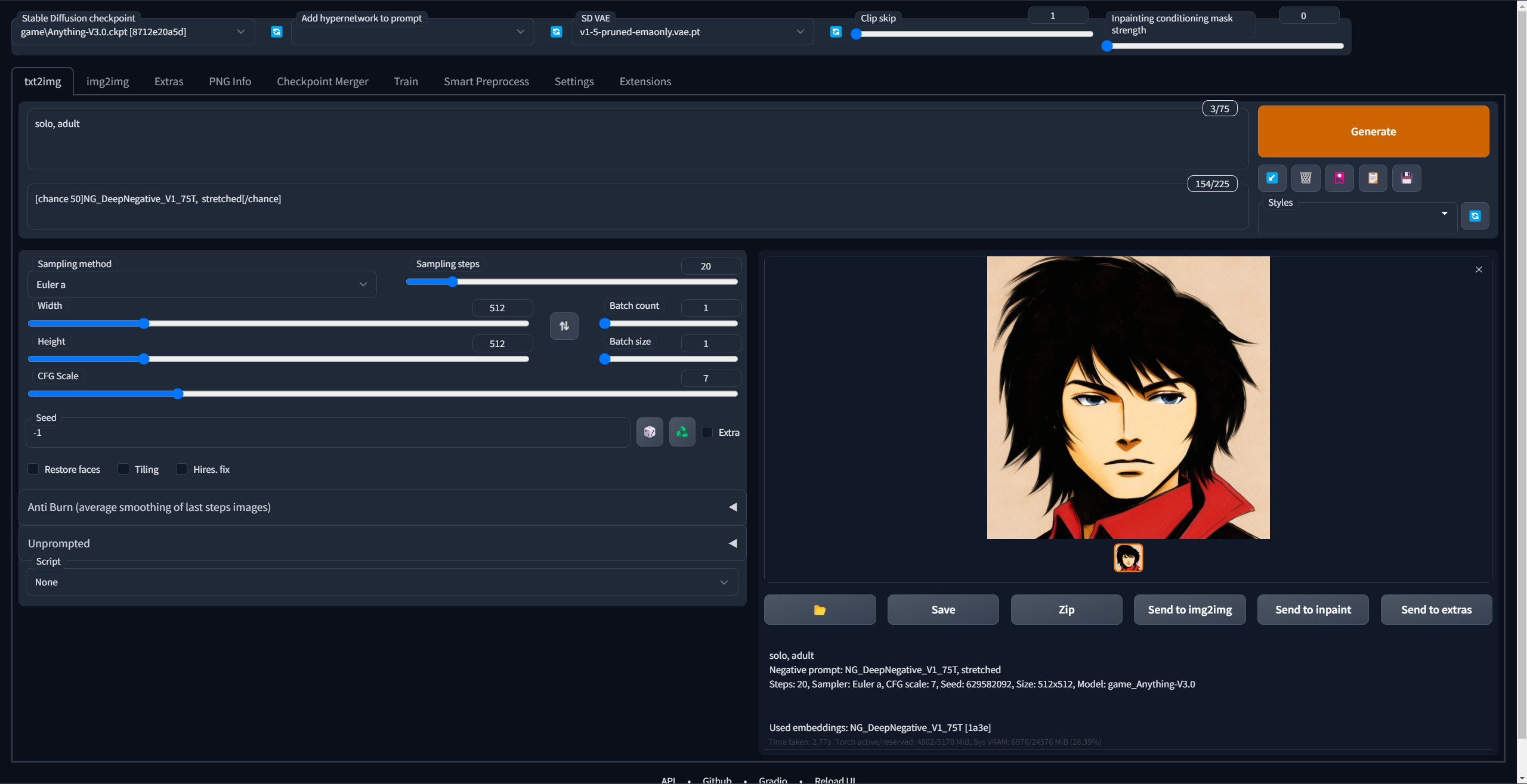

Hi @foggybogey,

It appears there may be some other factor at play - I am able to execute your provided prompt without error using the Anything v3 model:

Do you have any other extensions installed that may conflict with Unprompted?

I had some extensions installed and disabled, but I just now installed the web UI from scratch with no extensions other than Unprompted and the problem persists. I'm using fully out-of-the-box settings, with the exception of a workaround for a webserver bug as described here.

It's not an insurmountable issue; I can work around it by always including all of the negative prompts. I'm really enjoying Unprompted all the same!