Secrets are not created when I sync

Hello, thanks for this tool; it is very useful for synchronizing ConfigMaps.

I have a problem when I try to synchronize a Secret: the ConfigMaps are created, but the Secrets are not.

Have there been any recent changes to the Kopf API that break this?

Thanks

Are there any error logs from synator or from the kubelet?

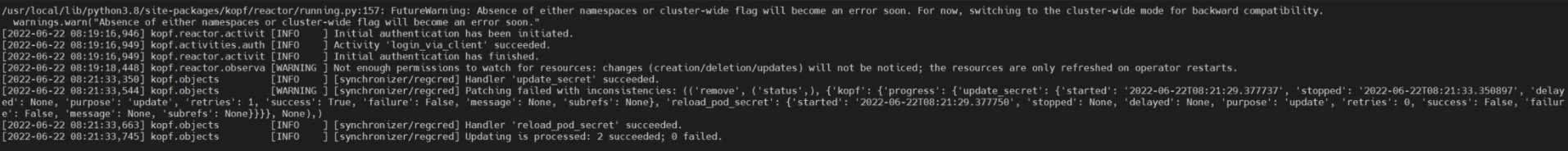

This is the log we received. Everything seems fine, but the Secret has not been created.

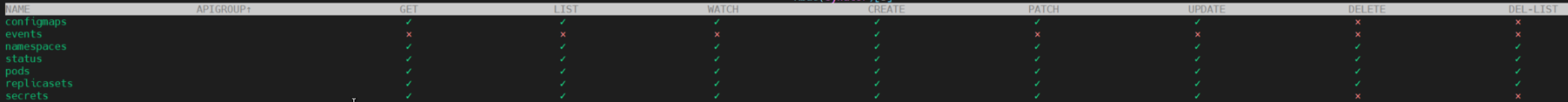

I don't know why the "Not enough permissions to watch for resources" warning appears; synator has the permissions.
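One way to double-check what the operator is actually allowed to do is to ask the API server directly with a `SelfSubjectAccessReview`, the same API that `kubectl auth can-i` uses, from inside the synator pod. A minimal sketch, assuming you run it with the synator service account's credentials; the function names are hypothetical, and since the thread does not say which resource the Kopf warning refers to, the resources you check (e.g. `secrets`, or `customresourcedefinitions` in the `apiextensions.k8s.io` group) are assumptions:

```python
from typing import Optional


def build_access_review(
    verb: str,
    resource: str,
    group: str = "",
    namespace: Optional[str] = None,
) -> dict:
    """Build a SelfSubjectAccessReview body asking "may I <verb> <resource>?".

    An empty group means the core API group (secrets, configmaps, ...).
    Leaving namespace as None asks about cluster-wide access.
    """
    attributes = {"verb": verb, "resource": resource, "group": group}
    if namespace is not None:
        attributes["namespace"] = namespace
    return {
        "apiVersion": "authorization.k8s.io/v1",
        "kind": "SelfSubjectAccessReview",
        "spec": {"resourceAttributes": attributes},
    }


def is_allowed(review_response: dict) -> bool:
    """Read the API server's verdict; a missing status counts as denied."""
    return bool(review_response.get("status", {}).get("allowed", False))
```

With the official Python client, the body can be submitted via `kubernetes.client.AuthorizationV1Api().create_self_subject_access_review(build_access_review("watch", "secrets"))`, and `is_allowed(response.to_dict())` gives the answer. If this returns `False` while the ClusterRole looks correct, the pod may be running under a different service account than the one the role is bound to.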

What is the Kubernetes version?

1.20

Hi @TheYkk,

Is the failure caused by the application, or by my setup?

Thank you very much for your assistance.

Hi, this happens to me too on Kubernetes 1.26. Is there a solution to this problem? @TheYkk @llopezv

Synator pod logs

/usr/local/lib/python3.8/site-packages/kopf/_core/reactor/running.py:176: FutureWarning: Absence of either namespaces or cluster-wide flag will become an error soon. For now, switching to the cluster-wide mode for backward compatibility.
warnings.warn("Absence of either namespaces or cluster-wide flag will become an error soon."
[2023-05-11 15:11:00,674] kopf._core.engines.a [INFO ] Initial authentication has been initiated.
[2023-05-11 15:11:00,975] kopf.activities.auth [INFO ] Activity 'login_via_client' succeeded.
[2023-05-11 15:11:00,976] kopf._core.engines.a [INFO ] Initial authentication has finished.
[2023-05-11 15:11:06,576] kopf._core.reactor.o [WARNING ] Not enough permissions to watch for resources: changes (creation/deletion/updates) will not be noticed; the resources are only refreshed on operator restarts.

Thanks for the help.

I think Kubernetes has changed a lot in recent releases. When I was using this tool, the latest version was Kubernetes 1.18, and there have been eight releases since then. Someone probably needs to upgrade the framework version first and then test it on a newer Kubernetes version. I don't have much time to do that, but feel free to test it out.

@TheYkk The problem occurs when pods annotated with synator/reload are up and running before the synator pods. It was resolved with a waiter on Kubernetes 1.26. Thanks for the reply.
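The thread does not say how the waiter was built. One common approach is to hold the dependent pods (for example in an init container) until the synator pod reports Ready. A minimal sketch of that polling logic, assuming pod objects are plain dicts shaped like the Kubernetes Pod API (e.g. the output of the official Python client's `to_dict()`); `is_pod_ready` and `wait_until` are hypothetical names:

```python
import time
from typing import Callable


def is_pod_ready(pod: dict) -> bool:
    """True if the pod's status lists a Ready condition with status "True"."""
    conditions = pod.get("status", {}).get("conditions") or []
    return any(
        c.get("type") == "Ready" and c.get("status") == "True" for c in conditions
    )


def wait_until(
    predicate: Callable[[], bool],
    timeout: float = 120.0,
    interval: float = 2.0,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll predicate() until it returns True or the timeout expires.

    Returns True if the condition was met, False if the deadline passed.
    The clock and sleep functions are injectable so the loop can be tested.
    """
    deadline = clock() + timeout
    while True:
        if predicate():
            return True
        if clock() >= deadline:
            return False
        sleep(interval)
```

In an init container, the predicate could be something like `lambda: any(is_pod_ready(p.to_dict()) for p in core.list_namespaced_pod(ns, label_selector="app=synator").items)`, where `core` is a `kubernetes.client.CoreV1Api()` and the label selector is an assumption about how synator is labeled. Returning `False` on timeout (rather than raising) lets the caller decide whether to exit non-zero and let Kubernetes retry.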

Thanks for investigating the issue.