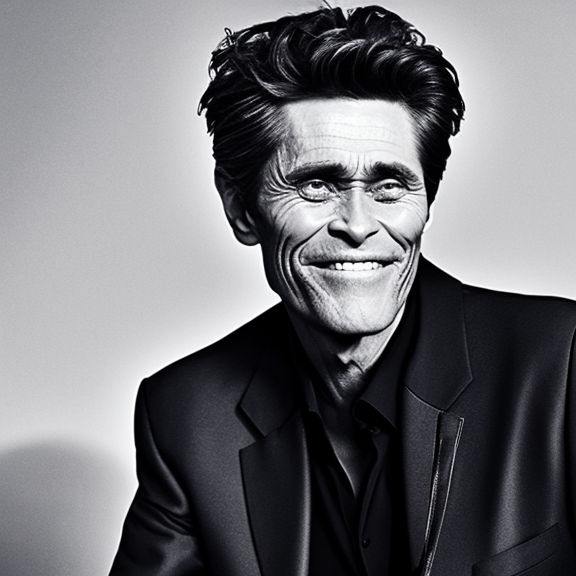

Ben


It might be the new text encoder training option that changed things, but the results are actually better. Keep the steps as low as 1500 and then play with the positive-negative...

I will train more models and see if I can reproduce the issue.

I tried a learning rate of 1e-6 and I'm getting great results. It is imperative to cap the training steps below 1600; the new text encoder method greatly improves the training and...

It's because of the `--train_text_encoder` feature: it gives much better results with much less effort, but it is susceptible to overtraining and overfitting if you keep the old method's habit of running many steps.
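As a rough illustration of the settings discussed above (text encoder training on, learning rate 1e-6, steps kept around 1500 and below 1600), here is a minimal sketch that assembles arguments for the diffusers example script `train_dreambooth.py`. This is an assumption: the thread does not show the notebook's actual command, and the model path, image folder, output folder and prompt are hypothetical placeholders. Only standard flags from that example script are used.

```python
import shlex

# Step ceiling taken from the thread: with text encoder training, stay below 1600 steps.
MAX_SAFE_STEPS = 1600


def build_dreambooth_args(model_dir, instance_dir, output_dir, instance_prompt,
                          steps=1500, learning_rate=1e-6):
    """Build an argument list for diffusers' train_dreambooth.py (assumed script).

    All paths and the prompt are hypothetical placeholders, not the notebook's values.
    """
    # Refuse step counts at or above the ceiling to avoid overtraining/overfitting.
    if steps >= MAX_SAFE_STEPS:
        raise ValueError(
            f"{steps} steps risks overtraining with --train_text_encoder; "
            f"stay below {MAX_SAFE_STEPS}"
        )
    return [
        "train_dreambooth.py",
        f"--pretrained_model_name_or_path={model_dir}",
        f"--instance_data_dir={instance_dir}",
        f"--output_dir={output_dir}",
        f"--instance_prompt={instance_prompt}",
        "--train_text_encoder",                 # the option discussed in the thread
        f"--learning_rate={learning_rate}",     # 1e-6 renders as "1e-06"
        f"--max_train_steps={steps}",
    ]


if __name__ == "__main__":
    args = build_dreambooth_args(
        "/content/model", "/content/instance_images", "/content/output",
        "photo of sks person",
    )
    # Print a command line suitable for `accelerate launch`.
    print("accelerate launch " + shlex.join(args))
```

The guard raises instead of silently clamping, so an old 4000-step habit fails loudly rather than producing an overtrained model.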

I was working on an initial version of a Paperspace notebook, but I noticed that installing the dependencies is very slow and starting the webui is almost impossible. Anyway, here...

The text encoder training doesn't make things worse, it makes training more efficient: you don't need 4000 steps anymore, only 1600. If you go over that, you...

@nfrhtp Overtraining will lead to the model generating exactly the images used for training.

New, better, faster method incoming; it will be ready in a few hours.

Use the new method.

I'm saying it's good because I tried it: the first two are the default ones and the last two are trained with 600 steps. ![600s...