crawl_google_results.py update, modularize, documentation and doctest

Added large changes to web_programming/crawl_google_results.py with documentations and doctests. Modularized the script and made it more customizable. Fixed formatting using black and flake8.

- [x] Add an algorithm?

- [x] Fix a bug or typo in an existing algorithm?

- [x] Documentation change?

Checklist:

- [x] I have read CONTRIBUTING.md.

- [x] This pull request is all my own work -- I have not plagiarized.

- [x] I know that pull requests will not be merged if they fail the automated tests.

- [x] This PR only changes one algorithm file. To ease review, please open separate PRs for separate algorithms.

- [x] All new Python files are placed inside an existing directory.

- [x] All filenames are in all lowercase characters with no spaces or dashes.

- [x] All functions and variable names follow Python naming conventions.

- [x] All function parameters and return values are annotated with Python type hints.

- [x] All functions have doctests that pass the automated testing.

- [ ] All new algorithms have a URL in its comments that points to Wikipedia or other similar explanation.

- [] If this pull request resolves one or more open issues then the commit message contains

Fixes: #{$ISSUE_NO}.

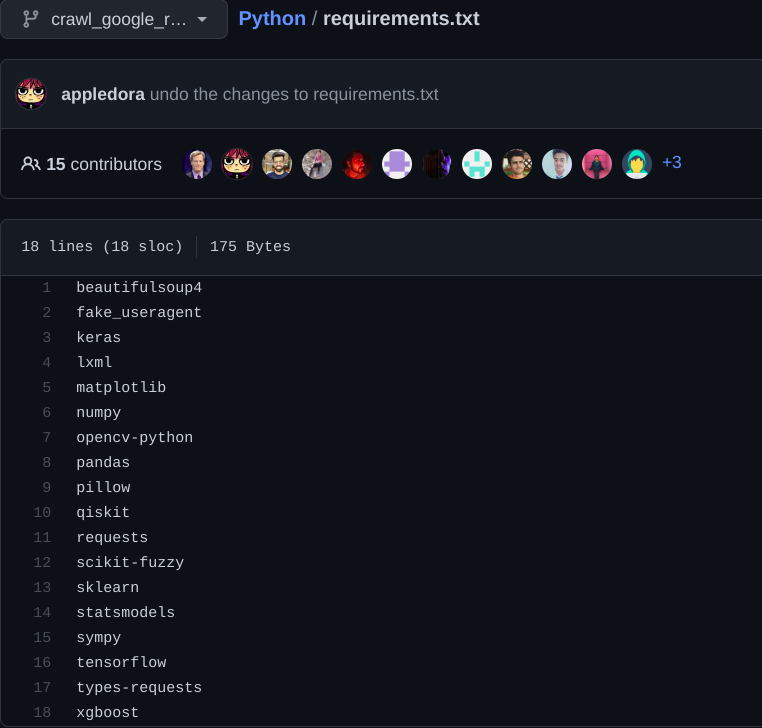

Please undo the changes to requirements.txt.

Please undo the changes to requirements.txt.

Done.

I think that this is a different algorithm than the original so let's keep both. Please rename this file to get_google_search_results.py with an algorithmic function get_google_search_results() which returns a list or tuple of search results. Put the writing of those results to a file in a separate function. Crawling is traversing each search result to go deeper which this algorithm does not do. Please do not print in the get_google_search_results() function.

I think that this is a different algorithm than the original so let's keep both. Please rename this file to

get_google_search_results.pywith an algorithmic functionget_google_search_results()which returns a list or tuple of search results. Put the writing of those results to a file in a separate function. Crawling is traversing each search result to go deeper which this algorithm does not do. Please do not print in theget_google_search_results()function.

On it!

The problem with this pull request is that the file written to disk (when opened with a text editor) does not contain the results of the search. When the file is opened with a web browser, the Google search is run again and the search results are shown. This can be proven by disconnecting from the internet and then reopening the html page in a browser.

The problem with this pull request is that the file written to disk (when opened with a text editor) does not contain the results of the search. When the file is opened with a web browser, the Google search is run again and the search results are shown. This can be proven by disconnecting from the internet and then reopening the html page in a browser.

I also saw that and thought it was bizarre. But as this is what the original script was doing, I thought this was solving some kind of problems for some people? I think I can change it scrape titles, links and preview texts. Should I go for it?

@cclauss , any comments on this one?

@poyea I think I fixed the technical and conventional errors on this one. Could you take a look?

So if we add

import doctest

doctest.testmod()

Then every single time we run the tests, it fires requests to google. And that those .txt files will be generated.

Please see https://github.com/TheAlgorithms/Python/blob/master/web_programming/instagram_crawler.py for what's current handling... Ideally we would have to mock the requests, but in the case we may further factor out the processing functions, and let's not test the request part.

@poyea, for the sake of clarification are you suggesting I follow the coding pattern in the https://github.com/TheAlgorithms/Python/blob/master/web_programming/instagram_crawler.py ?

@poyea, for the sake of clarification are you suggesting I follow the coding pattern in the https://github.com/TheAlgorithms/Python/blob/master/web_programming/instagram_crawler.py ?

Not necessarily. Now it's just a matter of how we write our test suite

@poyea, forgive me, but I believe I am still a little confused about the testing requirements. :|

What I "think" you ask for is, to only test the write_google_search_results() method, without actually calling the parse_results() method which sends out the actual REQUEST to google. And hence, I could write a mock test method like test_instagram_user() as given in this script . And by utilizing the doctest library, I will be calling this mock method?

Am I going in the right direction here?

web_programming/get_google_search_results.py:30: error: Name "headers" is not defined

@poyea, forgive me, but I believe I am still a little confused about the testing requirements. :| What I "think" you ask for is, to only test the

write_google_search_results()method, without actually calling theparse_results()method which sends out the actualREQUESTto google. And hence, I could write a mock test method liketest_instagram_user()as given in this script . And by utilizing the doctest library, I will be calling this mock method? Am I going in the right direction here?

@appledora You may try to run the test locally

This pull request has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

Hi