Test designs for sky130

Adding a suite of test designs for sky130:

- bp_fe (BlackParrot front-end)

- bsg_manycore_tile_compute_mesh_real (design using real SRAMs)

- bsg_manycore_tile_compute_mesh_fakeram (design using fakerams)

- bsg_manycore_tile_compute_mesh_synth_512 (design using SRAM synthesized using OpenROAD)

- bsg_manycore_proc_vanilla_real (design with a smaller I/O count, using real SRAMs)

- bsg_manycore_proc_vanilla_fakeram (same design using fakerams)

- bsg_manycore_proc_vanilla_synth_512 (using SRAM synthesized using OpenROAD - 512x8)

- bsg_manycore_proc_vanilla_synth_256 (using SRAM synthesized using OpenROAD - 256x8)

- bsg_manycore_proc_vanilla_synth_128 (using SRAM synthesized using OpenROAD - 128x8)

- bsg_manycore_proc_vanilla_synth_64 (using SRAM synthesized using OpenROAD - 64x8)

- tinyRocket

These are a small subset of the designs that were failing before the recent fix (#173). I collected them together because they could make a good suite for testing the flow as new changes are made.

Some variations are incorporated across the designs in this test suite:

- The manycore_tile_compute_mesh designs have a much higher I/O count compared to proc_vanilla designs

- There are three versions - real, fakeram and synthram (uses synthesized SRAMs) for the bsg_manycore_tile_compute_mesh and bsg_manycore_proc_vanilla designs.

- bsg_manycore_proc_vanilla's synthram version has 4 variants each having a different SRAM depth

- tinyRocket is comparatively much smaller than the other designs.

- tinyRocket has the smallest macro count (2), while bp_fe is in the middle (4) and bsg_manycore designs have the largest macro count (10)

- tinyRocket is a design that passed the flow (before the recent fix #173) upon adjusting the MACRO_PLACE_CHANNEL. The other designs could not pass the flow (before the fix) despite trying several different things.

- bsg_manycore_tile_compute_mesh_synth_512 and bsg_manycore_proc_vanilla_synth_512 have a much larger area compared to the other designs.

- bp_fe and tinyRocket each use several different SRAM configurations, as their designs require. The other designs replicate a single configuration to meet the design requirement (e.g., four 512x8 macros to make 512x32).

The motivation is that these variations would assist in the detection/identification of issues as they arise.

These designs are added to the CI setup:

- Designs bp_fe and tinyRocket are added to 'public_tests_small.Jenkinsfile'.

- Together with them, bsg_manycore_tile_compute_mesh_real/fakeram and bsg_manycore_proc_vanilla_real/fakeram are added to 'public_tests_all.Jenkinsfile'

- Including the previous designs, bsg_manycore_tile_compute_mesh_synth_512 and the bsg_manycore_proc_vanilla_synth_512, _256, _128 and _64 variants are added to 'public_nightly.Jenkinsfile'

Would this serve as a good test suite? Could any changes make it more useful?

@vvbandeira @taylor-bsg

The designs seem to be getting marked as 'failed' due to a metrics-file-related error after the flow completes. Would regenerating the metrics file solve this?

It runs without giving this error when I try locally (ORFS commit - 99889aac8cfbe6c96da2001e43f71d4522f144e2)

Does this supersede PR #178 ?

How did you generate the fake RAMs? We have had trouble in the past with unrealistically weak drive strengths. If you have real rams why use fake ones too?

What is the runtime of these designs?

What is synthram ?

Thanks for the questions!

Yes, this supersedes #178.

> How did you generate the fake RAMs? We have had trouble in the past with unrealistically weak drive strengths. If you have real rams why use fake ones too?

The fakerams are generated using bsg_fakeram

For bsg_manycore_tile_compute_mesh and bsg_manycore_proc_vanilla, the required configurations are 1024x46 and 1024x32. Both are built by replicating the 1024x8 real SRAM (the available real RAM that is suitable).

That means four 1024x8 macros for 1024x32 and six 1024x8 macros for 1024x46, a total of ten 1024x8 SRAMs. This is not ideal in terms of area and complexity, but it serves as a hack for meeting the design's memory requirement with the available standard real SRAM.
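The macro count above follows from rounding each word width up to a multiple of 8 bits. A minimal Python sketch of that arithmetic (the function name `macros_needed` is only illustrative, not something from the flow):

```python
import math

MACRO_WIDTH = 8  # width in bits of the 1024x8 real SRAM macro


def macros_needed(word_width: int, macro_width: int = MACRO_WIDTH) -> int:
    """Number of macros placed side by side to cover word_width bits."""
    return math.ceil(word_width / macro_width)


# The two 1024-deep configurations the bsg_manycore designs require.
required_widths = [32, 46]

counts = {w: macros_needed(w) for w in required_widths}
total = sum(counts.values())
# Bits provided by the replicated macros that the design does not use.
unused_bits = {w: counts[w] * MACRO_WIDTH - w for w in required_widths}

print(counts)       # {32: 4, 46: 6}
print(total)        # 10
print(unused_bits)  # {32: 0, 46: 2}
```

The 1024x46 memory leaves 2 of its 48 bits unused, which is part of the area cost mentioned above.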

Both the real SRAM and fakeram versions are kept for the bsg_manycore designs so that a bug or issue affecting only one of them (i.e., occurring only with fakerams or only with real SRAMs) can be detected and identified quickly.

> What is synthram ?

The synthrams are RAMs generated by running Verilog for basic RAM logic through the OpenROAD flow. They were originally generated to test whether using them could avoid the congestion issue #173. With synthrams, the routing of interconnects across the macro was not blocked (as seen in the congestion map), unlike what was observed with fakerams and real SRAMs.

> What is the runtime of these designs?

On a portable system with a 6th-generation i7 and 16GB RAM, the bsg_manycore_tile_compute_mesh_synth_512 design takes about 3/4hrs. The designs with 512x8 synthrams take the most time, followed by the bsg_manycore designs with real SRAM, fakeram, and the BlackParrot front-end design. The tinyRocket design finishes earliest.

The idea of keeping different designs that incorporate various differences (for example, versions using real SRAM, fakeram and synthram respectively) is to enable debugging and identification of issues that occur in each of these specific cases. For instance, if an issue occurs for bsg_manycore_proc_vanilla_fakeram and not for bsg_manycore_proc_vanilla_real, we could investigate whether it arises from something specific to fakerams, and so on.
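As a rough illustration of that triage, here is a Python sketch that groups designs by base name and reports the SRAM styles that fail while a sibling variant passes. The pass/fail values are made up for the example, and the helper names are hypothetical; nothing here is part of the flow or the CI.

```python
from collections import defaultdict

STYLES = ("real", "fakeram", "synth")


def split_name(design):
    """Split e.g. 'bsg_manycore_proc_vanilla_fakeram' into (base, style)."""
    for style in STYLES:
        idx = design.rfind("_" + style)
        if idx != -1:
            return design[:idx], design[idx + 1:]
    return design, None  # no SRAM-style suffix, e.g. bp_fe


def style_specific_failures(results):
    """results maps design name -> True (passed) or False (failed)."""
    by_base = defaultdict(dict)
    for design, passed in results.items():
        base, style = split_name(design)
        if style is not None:
            by_base[base][style] = passed
    suspects = {}
    for base, styles in by_base.items():
        failed = sorted(s for s, ok in styles.items() if not ok)
        # Only flag a style when at least one sibling variant still passes.
        if failed and len(failed) < len(styles):
            suspects[base] = failed
    return suspects


# Hypothetical CI outcome, for illustration only.
example = {
    "bsg_manycore_proc_vanilla_real": True,
    "bsg_manycore_proc_vanilla_fakeram": False,
    "bsg_manycore_proc_vanilla_synth_512": True,
}
print(style_specific_failures(example))
# {'bsg_manycore_proc_vanilla': ['fakeram']}
```

If every variant of a design fails, nothing is flagged, since the problem is then more likely broad-based than tied to one SRAM style.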

What do you think?

Might you have any suggestions?

@maliberty Specifically, we are adding these tests to address a lack of testing of SRAM integration in the OpenROAD flow scripts for Sky130. A number of SRAM integration styles are tested. "Fakeram" is more representative of commercial SRAMs than the "real rams". These are not some random files we are checking in; Lakshmi has worked for several months designing these to give a comprehensive suite of designs with different properties, so that it is easy to tell whether a problem is broad-based or specific to a particular design or SRAM style.

The CI failures need fixing. The conflicts need resolving. We already have a version of tinyRocket in our tests. What does this version add? It would be good if they could be consolidated. If synthrams were a workaround, and most people will prefer real rams, is it worth the runtime to test with them?

@lakshmi-sathi (@maliberty and @vvbandeira) I think the idea behind this addition is really good, but rather than adding these tests to the openroad-flow-scripts repo, I think we should instead get the designs from the setup in openlane and add them to our testing via openlane. Since that is where it is really being used in production, we would be testing what the users are really doing. Are these designs already in OpenLane? If so, I think @vvbandeira should be able to call whatever openlane script is needed to run the tests via openlane. Thanks for pushing testing, especially where coverage is missing like this.

@maliberty I synced it with OpenROAD master. I also made some changes to improve it. The number of designs has been pruned to streamline the test cycle. The designs now included are:

- bp_fe (BlackParrot front-end)

- bsg_manycore_tile_compute_mesh_real (design using real SRAMs)

- bsg_manycore_tile_compute_mesh_fakeram (design using fakerams)

- bsg_manycore_proc_vanilla_real (design with a smaller I/O count, using real SRAMs)

- bsg_manycore_proc_vanilla_fakeram (same design using fakerams)

- bsg_manycore_proc_vanilla_synth_512 (using SRAM synthesized using OpenROAD - 512x8)

- bsg_manycore_proc_vanilla_synth_128 (using SRAM synthesized using OpenROAD - 128x8)

Again, the idea is to include fakerams, realrams and synthrams (SRAM Verilog synthesized into an SRAM macro using OpenROAD), along with different RAM sizes and designs of different I/O count, complexity and size. With all these variations across the test designs, it becomes much easier to narrow down a particular issue when it happens.

Arrangement in CI files

public_nightly jenkins file: all designs

public_tests_all jenkins file:

- bp_fe

- bsg_manycore_tile_compute_mesh_real

- bsg_manycore_tile_compute_mesh_fakeram

- bsg_manycore_proc_vanilla_real

- bsg_manycore_proc_vanilla_fakeram

public_tests_small jenkins file: bp_fe

(The design with the shortest runtime is kept in public_tests_small; the short and moderate runtime designs are kept in public_tests_all.)
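The arrangement above can be summarized as three nested tiers. A small Python sketch of that layout follows; the design names come from the lists above, while the dictionary and the `tiers_for` helper are only an illustration and not how the Jenkinsfiles are actually written.

```python
# Each tier contains everything in the tier before it.
SMALL = ["bp_fe"]

ALL = SMALL + [
    "bsg_manycore_tile_compute_mesh_real",
    "bsg_manycore_tile_compute_mesh_fakeram",
    "bsg_manycore_proc_vanilla_real",
    "bsg_manycore_proc_vanilla_fakeram",
]

NIGHTLY = ALL + [
    "bsg_manycore_proc_vanilla_synth_512",
    "bsg_manycore_proc_vanilla_synth_128",
]

TIERS = {
    "public_tests_small": SMALL,
    "public_tests_all": ALL,
    "public_nightly": NIGHTLY,
}


def tiers_for(design):
    """Return the names of the CI tiers that run the given design."""
    return [name for name, designs in TIERS.items() if design in designs]


print(tiers_for("bp_fe"))
# ['public_tests_small', 'public_tests_all', 'public_nightly']
print(tiers_for("bsg_manycore_proc_vanilla_synth_128"))
# ['public_nightly']
```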

Do let me know ideas to make it even more useful.

@tspyrou Thanks for the pointer. I am currently trying out these designs on OpenLane. Once I have them prepared as per the OpenLane setup, I will open a PR there.

@vvbandeira

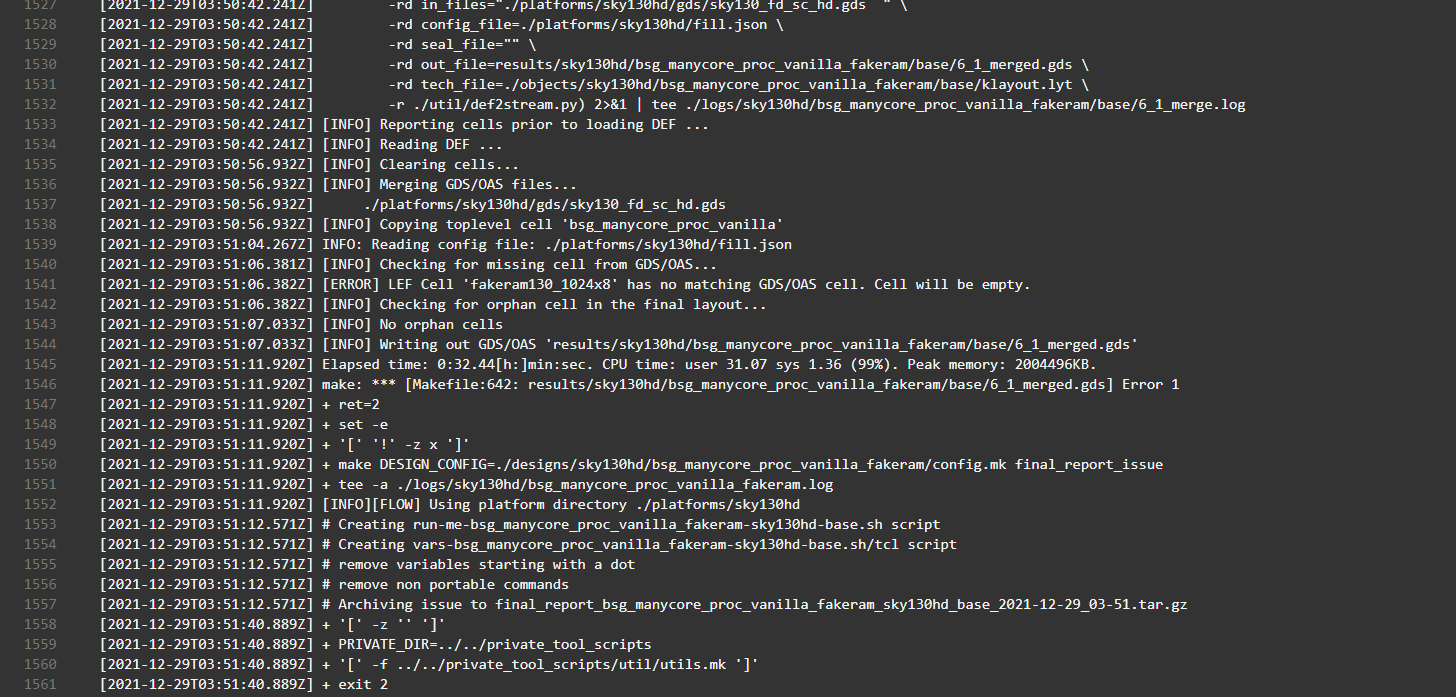

On jenkins, the build is indicated as having failed.

This is the error seen: [ERROR] LEF Cell 'fakeram130_1024x8' has no matching GDS/OAS cell. Cell will be empty.

Is it the lack of GDS files that is causing this failure indication?

Yes, we check for missing GDS. In ng45, see:

export GDS_ALLOW_EMPTY = fakeram.*
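To illustrate why a pattern like `fakeram.*` would cover the cell named in the error above, here is a minimal Python sketch. It assumes the value is treated as a regular expression matched against the whole cell name; how the flow actually applies the pattern is not confirmed here, and `example_real_sram_1024x8` is a hypothetical name used only for contrast.

```python
import re

# Pattern taken from the export line above.
GDS_ALLOW_EMPTY = "fakeram.*"


def is_allowed_empty(cell_name, pattern=GDS_ALLOW_EMPTY):
    """True if a cell with no GDS is tolerated under the given pattern.

    Assumption: the pattern must match the entire cell name.
    """
    return re.fullmatch(pattern, cell_name) is not None


print(is_allowed_empty("fakeram130_1024x8"))         # True
print(is_allowed_empty("example_real_sram_1024x8"))  # False
```

Under that assumption, fakeram macros would be allowed to be empty in the merged GDS, while a real SRAM with a missing GDS would still be reported as an error.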

@lakshmi-sathi could you also rebase your changes? You added some large files and then removed them, but they will still count toward the repo size. Also, ORFS supports compressed GDS files -- see here

@lakshmi-sathi Any updates on this PR?