Pretrained model folder?

Hi, I'm trying to load the pretrained model from base to train on VQA dataset, but I'm unable to find the indicated model data files. It states " The data has been saved in NAS folder: human-ai-dialog/vilbert/data2. The data folder and pretrained_models folder are organized as shown below:". Is there any place that we can find the directory "human-ai-dialog/vilbert/data2"?

Also for the pretrained model here: --from_pretrained=/nas-data/vilbert/data2/kilbert_base_model/pytorch_model_9.bin

Thanks!

Hello,

vilbert/data2 are folder and subfolder where the dataset are located.

You can download them here:

- VQA2.0 dataset: https://visualqa.org/download.html

- OKVQA dataset: https://okvqa.allenai.org/download.html

- ConceptualCaptions dataset: https://ai.google.com/research/ConceptualCaptions/download

Our team is working to share the files requested in the issues and as soon as the files are ready we will share them with you (this includes the file model_9.bin)

Hi, thank you very much for the update! Will wait for the updates for the new files. Thanks.

Hello, thank you for your patience and sorry for the late reply, the pre-trained model (model_9.bin) is available on the kaggle of the project: https://www.kaggle.com/thalesgroup/conceptbert/

DO you need external knowledge for OK-VQA?

DO you need external knowledge for OK-VQA?

You don't need external knowledge for OK-VQA, since it would be possible to deduce some knowledge from the examples in the dataset. For example, using the bias in the dataset between the Wimbledon tournament and tennis, the model can learn what to answer. But these predictions would be heavily influenced by bias in the dataset, which we don't want.

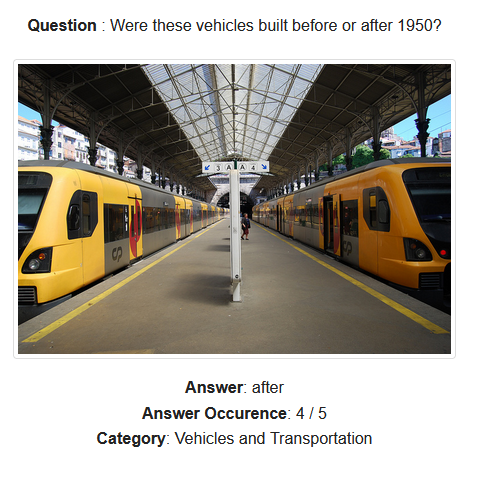

Furthermore, some questions are "not enough" to learn information and be able to answer similar questions. For example, consider the following one (image 132412 in the training split):

By looking at the answer from this question, the model will guess that the vehicles were built after 1950, but it will not be able to answer another similar question: "Were these vehicles built before or after 1960?".

By looking at the answer from this question, the model will guess that the vehicles were built after 1950, but it will not be able to answer another similar question: "Were these vehicles built before or after 1960?".

This is why having external knowledge is interesting for the OK-VQA dataset: instead of using biases in the dataset to approximate facts, the model can directly extract relevant information from another source.

Ooo, I see that. However, in your work, you did utilize external knowledge. And you have also said " the model can directly extract relevant information from another source.". Then, if we just use the dataset you have provided on the website, the model may be not different with VQA-CP.

Thanks.

| | Shandong University | | @.*** | 签名由网易邮箱大师定制 On 11/25/2021 21:21,François @.***> wrote:

You don't need external knowledge for OK-VQA, since it would be possible to deduce some knowledge from the examples in the dataset. For example, using the bias in the dataset between the Wimbledon tournament and tennis, the model can learn what to answer. But these predictions would be heavily influenced by bias in the dataset, which we don't want.

Furthermore, some questions are "not enough" to learn information and be able to answer similar questions. For example, consider the following one (image 132412 in the training split):

By looking at the answer from this question, the model will guess that the vehicles were built after 1950, but it will not be able to answer another similar question: "Were these vehicles built before or after 1960?".

This is why having external knowledge is interesting for the OK-VQA dataset: instead of using biases in the dataset to approximate facts, the model can directly extract relevant information from another source.

— You are receiving this because you commented. Reply to this email directly, view it on GitHub, or unsubscribe. Triage notifications on the go with GitHub Mobile for iOS or Android.