GFPGAN


Are the decoders fine-tuned?

From the training script, I don't believe the decoders are being fine-tuned, but when I play with the Colab code I get weird results.

In the Colab code, if I make `conditions` empty, the decoder should return results without SFT. However, the results are bad:

```python
# Decode the style code with an empty conditions list to bypass SFT
image, _ = self.stylegan_decoder(
    [style_code],
    [],  # conditions: left empty on purpose
    return_latents=return_latents,
    input_is_latent=True,
    randomize_noise=randomize_noise)
```
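The expectation above rests on how SFT (spatial feature transform) conditions enter the decoder: each condition acts as an element-wise affine modulation of the decoder features, and when no condition is supplied for a level the features pass through as plain StyleGAN2 features. Below is a minimal NumPy sketch of that idea. The function name `apply_sft` and the flat `[scale_0, shift_0, scale_1, shift_1, ...]` list layout are hypothetical; the actual GFPGAN code may index the conditions list differently or modulate only part of the channels.

```python
import numpy as np


def apply_sft(features, conditions, level):
    """Illustrative SFT step at one decoder level (hypothetical helper).

    Assumes `conditions` is a flat list [scale_0, shift_0, scale_1, shift_1, ...],
    so level k uses conditions[2k] as scale and conditions[2k + 1] as shift.
    """
    if 2 * level + 1 >= len(conditions):
        # No condition for this level: features are returned unmodulated,
        # i.e. the plain StyleGAN2 path.
        return features
    scale = conditions[2 * level]
    shift = conditions[2 * level + 1]
    # Element-wise affine modulation (arrays must broadcast to features' shape).
    return features * scale + shift
```

With an empty `conditions` list, every level returns the features unchanged. So under this reading, any degradation seen in that case would have to come from the decoder weights or the style code itself rather than from SFT.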

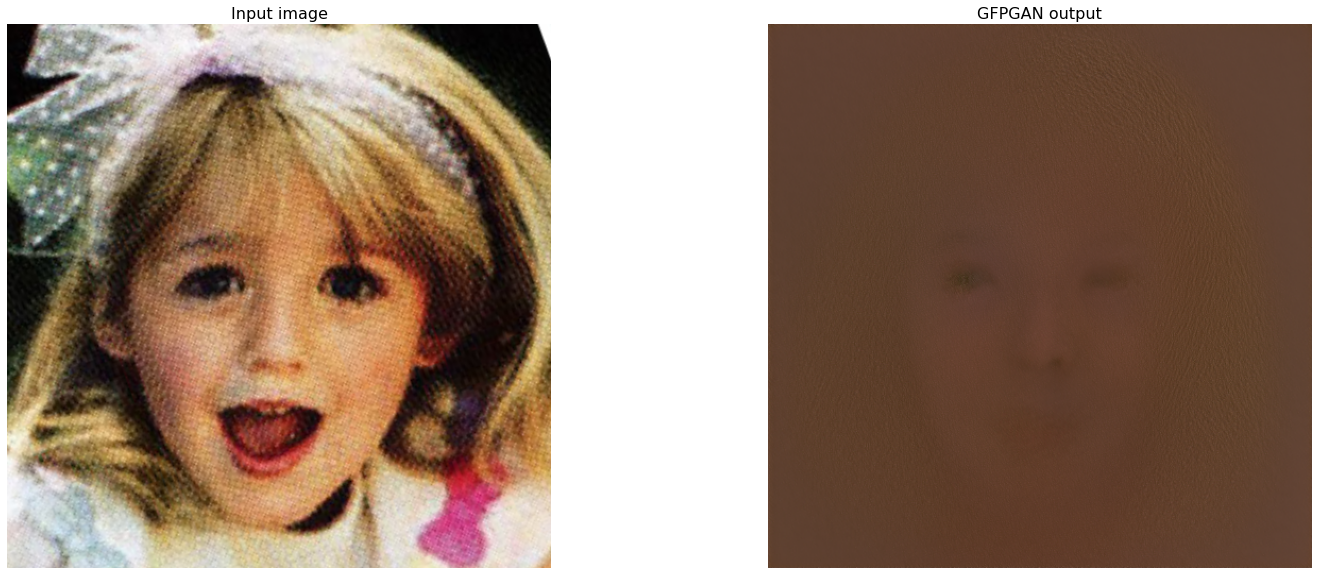

This is the result of setting `conditions` to empty on the test images. If the decoders are not fine-tuned, this should produce proper faces.


@mchong6 Have you solved this problem?