PocketFlow


The loss becomes NaN when fine-tuning with selected channels

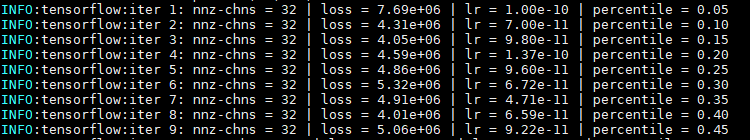

Hello! I am using ChannelPrunedGpuLearner to compress a regression model that predicts monocular depth images. The loss becomes NaN. Is this normal, or is it a problem with my model?

Thanks!

This is abnormal. Is the loss always NaN, or does it become NaN while a particular layer is being pruned?

@jiaxiang-wu It grows larger and larger and eventually becomes NaN after several layers.

At first, the loss is not NaN.

Could you please post the complete log file?

@jiaxiang-wu It is too big to post (37213 lines), so I have uploaded the file: nohup.txt

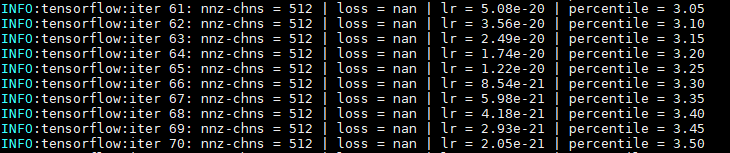

The Inf/NaN issue starts here:

INFO:tensorflow:layer #15: pr = 0.50 (target)

INFO:tensorflow:mask.shape = (3, 3, 1024, 512)

INFO:tensorflow:iter 1: nnz-chns = 1024 | loss = 4.50e+14 | lr = 1.00e-10 | percentile = 0.05

INFO:tensorflow:iter 2: nnz-chns = 1024 | loss = 4.60e+20 | lr = 7.00e-11 | percentile = 0.10

INFO:tensorflow:iter 3: nnz-chns = 1024 | loss = 2.81e+23 | lr = 4.90e-11 | percentile = 0.15

INFO:tensorflow:iter 4: nnz-chns = 1024 | loss = 1.90e+18 | lr = 3.43e-11 | percentile = 0.20

INFO:tensorflow:iter 5: nnz-chns = 1024 | loss = 1.87e+18 | lr = 4.80e-11 | percentile = 0.25

INFO:tensorflow:iter 6: nnz-chns = 1024 | loss = 1.82e+18 | lr = 6.72e-11 | percentile = 0.30

INFO:tensorflow:iter 7: nnz-chns = 1024 | loss = 1.76e+18 | lr = 9.41e-11 | percentile = 0.35

INFO:tensorflow:iter 8: nnz-chns = 1024 | loss = 1.67e+18 | lr = 1.32e-10 | percentile = 0.40

INFO:tensorflow:iter 9: nnz-chns = 1024 | loss = 1.56e+18 | lr = 1.84e-10 | percentile = 0.45

INFO:tensorflow:iter 10: nnz-chns = 1024 | loss = 1.42e+18 | lr = 2.58e-10 | percentile = 0.50

INFO:tensorflow:iter 11: nnz-chns = 1024 | loss = 3.79e+28 | lr = 3.62e-10 | percentile = 0.55

INFO:tensorflow:iter 12: nnz-chns = 1024 | loss = 3.61e+31 | lr = 2.53e-10 | percentile = 0.60

INFO:tensorflow:iter 13: nnz-chns = 1024 | loss = inf | lr = 1.77e-10 | percentile = 0.65

INFO:tensorflow:iter 14: nnz-chns = 1024 | loss = inf | lr = 1.24e-10 | percentile = 0.70

INFO:tensorflow:iter 15: nnz-chns = 1024 | loss = inf | lr = 8.68e-11 | percentile = 0.75

INFO:tensorflow:iter 16: nnz-chns = 1024 | loss = inf | lr = 6.08e-11 | percentile = 0.80

INFO:tensorflow:iter 17: nnz-chns = 1024 | loss = inf | lr = 4.25e-11 | percentile = 0.85

INFO:tensorflow:iter 18: nnz-chns = 1024 | loss = nan | lr = 2.98e-11 | percentile = 0.90

INFO:tensorflow:iter 19: nnz-chns = 1024 | loss = nan | lr = 2.08e-11 | percentile = 0.95
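With a 37213-line log, a small script can locate the first non-finite loss instead of scrolling through it by hand. The sketch below is a hypothetical helper, not part of PocketFlow; it assumes only the `layer #N:` and `iter N: ... loss = X` line formats visible in the excerpt above.

```python
import math
import re

# Assumed line formats, taken from the log excerpt above:
#   INFO:tensorflow:layer #15: pr = 0.50 (target)
#   INFO:tensorflow:iter 13: nnz-chns = 1024 | loss = inf | lr = 1.77e-10 | percentile = 0.65
LAYER_RE = re.compile(r"layer #(\d+):")
ITER_RE = re.compile(r"iter (\d+): .*?loss = (\S+)")


def first_non_finite_loss(log_lines):
    """Return (layer, iteration, loss) for the first Inf/NaN loss, or None if all are finite."""
    layer = None  # stays None if no "layer #N" line precedes the iteration
    for line in log_lines:
        m_layer = LAYER_RE.search(line)
        if m_layer:
            layer = int(m_layer.group(1))
            continue
        m_iter = ITER_RE.search(line)
        if m_iter is None:
            continue
        loss = float(m_iter.group(2))  # float() also parses "inf" and "nan"
        if not math.isfinite(loss):
            return layer, int(m_iter.group(1)), loss
    return None


# Usage (hypothetical): first_non_finite_loss(open("nohup.txt").read().splitlines())
```

Iteration counters restart for every layer in this log, so the helper also tracks the most recent `layer #N` line to report which layer was being pruned.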

According to your log:

INFO:tensorflow:creating a pruning mask for pruned_model/model/encoder/Conv/weights:0 of size (7, 7, 3, 32)

INFO:tensorflow:creating a pruning mask for pruned_model/model/encoder/Conv_1/weights:0 of size (7, 7, 32, 32)

INFO:tensorflow:creating a pruning mask for pruned_model/model/encoder/Conv_2/weights:0 of size (5, 5, 32, 64)

INFO:tensorflow:creating a pruning mask for pruned_model/model/encoder/Conv_3/weights:0 of size (5, 5, 64, 64)

INFO:tensorflow:creating a pruning mask for pruned_model/model/encoder/Conv_4/weights:0 of size (3, 3, 64, 128)

INFO:tensorflow:creating a pruning mask for pruned_model/model/encoder/Conv_5/weights:0 of size (3, 3, 128, 128)

INFO:tensorflow:creating a pruning mask for pruned_model/model/encoder/Conv_6/weights:0 of size (3, 3, 128, 256)

INFO:tensorflow:creating a pruning mask for pruned_model/model/encoder/Conv_7/weights:0 of size (3, 3, 256, 256)

INFO:tensorflow:creating a pruning mask for pruned_model/model/encoder/Conv_8/weights:0 of size (3, 3, 256, 512)

INFO:tensorflow:creating a pruning mask for pruned_model/model/encoder/Conv_9/weights:0 of size (3, 3, 512, 512)

INFO:tensorflow:creating a pruning mask for pruned_model/model/encoder/Conv_10/weights:0 of size (3, 3, 512, 512)

INFO:tensorflow:creating a pruning mask for pruned_model/model/encoder/Conv_11/weights:0 of size (3, 3, 512, 512)

INFO:tensorflow:creating a pruning mask for pruned_model/model/encoder/Conv_12/weights:0 of size (3, 3, 512, 512)

INFO:tensorflow:creating a pruning mask for pruned_model/model/encoder/Conv_13/weights:0 of size (3, 3, 512, 512)

INFO:tensorflow:creating a pruning mask for pruned_model/model/decoder/Conv/weights:0 of size (3, 3, 512, 512)

INFO:tensorflow:creating a pruning mask for pruned_model/model/decoder/Conv_1/weights:0 of size (3, 3, 1024, 512)

INFO:tensorflow:creating a pruning mask for pruned_model/model/decoder/Conv_2/weights:0 of size (3, 3, 512, 512)

INFO:tensorflow:creating a pruning mask for pruned_model/model/decoder/Conv_3/weights:0 of size (3, 3, 1024, 512)

INFO:tensorflow:creating a pruning mask for pruned_model/model/decoder/Conv_4/weights:0 of size (3, 3, 512, 256)

INFO:tensorflow:creating a pruning mask for pruned_model/model/decoder/Conv_5/weights:0 of size (3, 3, 512, 256)

INFO:tensorflow:creating a pruning mask for pruned_model/model/decoder/Conv_6/weights:0 of size (3, 3, 256, 128)

INFO:tensorflow:creating a pruning mask for pruned_model/model/decoder/Conv_7/weights:0 of size (3, 3, 256, 128)

INFO:tensorflow:creating a pruning mask for pruned_model/model/decoder/Conv_8/weights:0 of size (3, 3, 128, 2)

INFO:tensorflow:creating a pruning mask for pruned_model/model/decoder/Conv_9/weights:0 of size (3, 3, 128, 64)

INFO:tensorflow:creating a pruning mask for pruned_model/model/decoder/Conv_10/weights:0 of size (3, 3, 130, 64)

INFO:tensorflow:creating a pruning mask for pruned_model/model/decoder/Conv_11/weights:0 of size (3, 3, 64, 2)

INFO:tensorflow:creating a pruning mask for pruned_model/model/decoder/Conv_12/weights:0 of size (3, 3, 64, 32)

INFO:tensorflow:creating a pruning mask for pruned_model/model/decoder/Conv_13/weights:0 of size (3, 3, 66, 32)

INFO:tensorflow:creating a pruning mask for pruned_model/model/decoder/Conv_14/weights:0 of size (3, 3, 32, 2)

INFO:tensorflow:creating a pruning mask for pruned_model/model/decoder/Conv_15/weights:0 of size (3, 3, 32, 16)

INFO:tensorflow:creating a pruning mask for pruned_model/model/decoder/Conv_16/weights:0 of size (3, 3, 18, 16)

INFO:tensorflow:creating a pruning mask for pruned_model/model/decoder/Conv_17/weights:0 of size (3, 3, 16, 2)

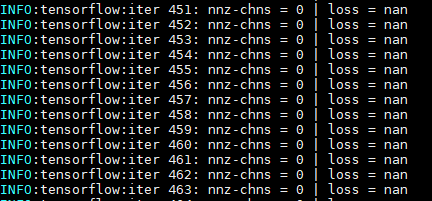

It seems that this layer, pruned_model/model/decoder/Conv_1/weights:0, has 1024 input channels. Does it take the outputs of two layers (instead of one) as its input? Our current implementation does not support such a structure, so a hotfix is needed.
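This diagnosis can be checked mechanically. A TensorFlow conv kernel has shape (kh, kw, in_channels, out_channels), so a layer whose in_channels differs from the previous layer's out_channels probably does not consume that layer's output directly. The helper below is a hypothetical heuristic, not PocketFlow code. It assumes the masks are logged in graph order. A mismatch can also indicate a branch rather than a concatenation, so flagged layers are only candidates. On the log above it flags decoder/Conv_1 (1024 inputs after a 512-output layer), among others.

```python
import re

# Assumed line format, taken from the log above:
#   ...creating a pruning mask for pruned_model/.../Conv_1/weights:0 of size (3, 3, 1024, 512)
MASK_RE = re.compile(
    r"creating a pruning mask for (\S+) of size \((\d+), (\d+), (\d+), (\d+)\)")


def find_non_sequential_layers(log_lines):
    """Return (name, in_channels, prev_out_channels) for layers whose input width
    does not match the previous logged layer's output width."""
    flagged = []
    prev_out = None
    for line in log_lines:
        m = MASK_RE.search(line)
        if m is None:
            continue
        name = m.group(1)
        c_in, c_out = int(m.group(4)), int(m.group(5))  # kernel is (kh, kw, c_in, c_out)
        if prev_out is not None and c_in != prev_out:
            flagged.append((name, c_in, prev_out))
        prev_out = c_out
    return flagged
```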

@jiaxiang-wu Yes, it is a ResNet-like structure: the outputs of two other layers are concatenated to form the input.
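To see why a concatenated input breaks single-predecessor channel pruning: if the 1024 input channels of decoder/Conv_1 are two outputs concatenated along the channel axis, each pruned input channel corresponds to an output channel of one of the two producer layers, so the keep-mask has to be split and propagated to both. A minimal sketch of that index mapping, assuming a 512 + 512 split concatenated in producer order; the function name is hypothetical and not part of PocketFlow's API:

```python
def split_concat_mask(mask, producer_channels):
    """Split a keep-mask over a channel-concatenated input into one mask per producer.

    mask: list of bools, one per input channel of the consuming conv layer.
    producer_channels: output-channel counts of the producers, in concat order.
    """
    if len(mask) != sum(producer_channels):
        raise ValueError("mask length must equal the total number of concatenated channels")
    masks, start = [], 0
    for n in producer_channels:
        masks.append(mask[start:start + n])  # channels [start, start + n) came from this producer
        start += n
    return masks


# Usage: pruning input channel 600 of the consumer removes channel 600 - 512 = 88 of producer 2.
keep = [True] * 1024
keep[600] = False
first, second = split_concat_mask(keep, [512, 512])
```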

Got it.

Bug: ChannelPrunedGpuLearner cannot compress conv layers with more than one preceding layer.