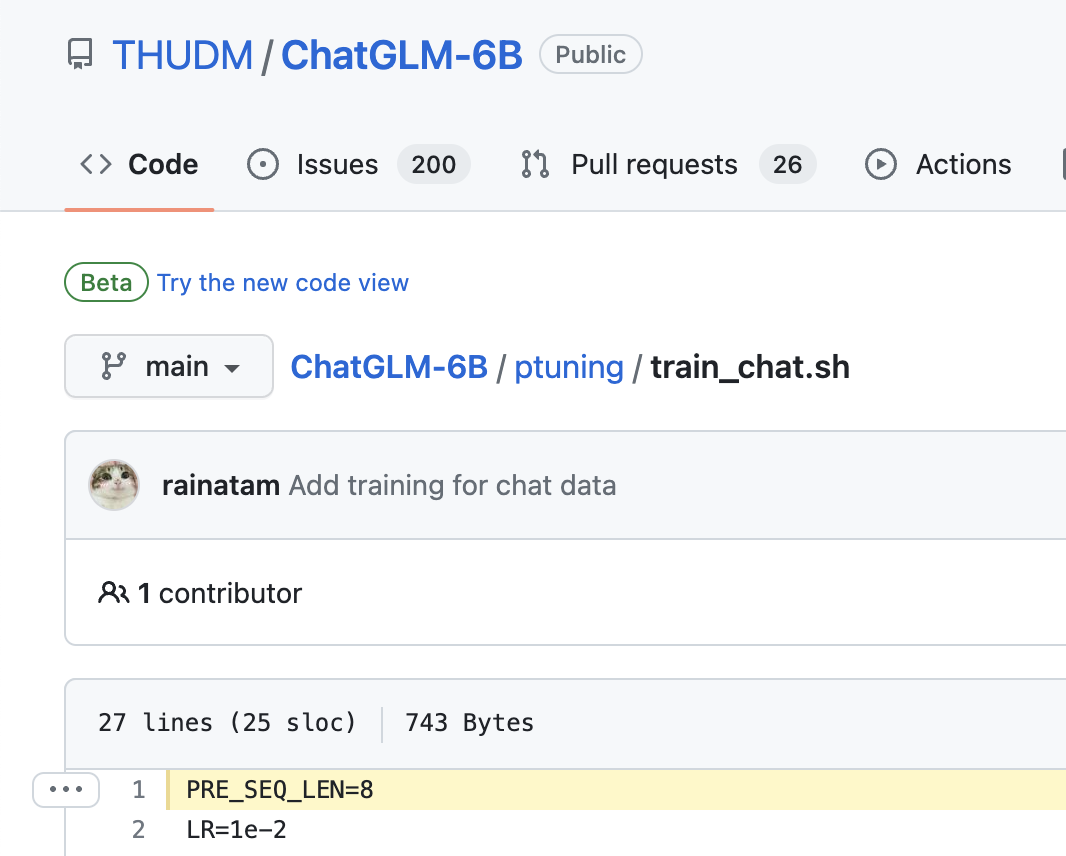

[BUG/Help] PRE_SEQ_LEN: 8 or 128?

Is there an existing issue for this?

- [X] I have searched the existing issues

Current Behavior

Hi, `pre_seq_len` is 8 for training but 128 for inference:

- https://github.com/THUDM/ChatGLM-6B/blob/main/ptuning/train_chat.sh#L1 sets `PRE_SEQ_LEN=8`
- https://github.com/THUDM/ChatGLM-6B/blob/main/ptuning/web_demo.sh#L1 sets `PRE_SEQ_LEN=128`

Why?
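The prefix length used at inference has to match the one the checkpoint was trained with, so one way to catch this kind of drift early is to compare the values in the two scripts before launching the demo. This is a minimal sketch, not part of the repository: the helpers `read_pre_seq_len` and `check_consistent` are hypothetical, and the sketch assumes each script sets the variable with a plain assignment of the form `PRE_SEQ_LEN=<int>`.

```python
import re
from typing import Optional

# Hypothetical helper: matches a plain assignment such as "PRE_SEQ_LEN=8"
# on its own line (no quotes, no variable expansion).
_ASSIGN = re.compile(r"^\s*PRE_SEQ_LEN=(\d+)\s*$", re.MULTILINE)


def read_pre_seq_len(script_text: str) -> Optional[int]:
    """Return the integer assigned to PRE_SEQ_LEN, or None if absent."""
    match = _ASSIGN.search(script_text)
    return int(match.group(1)) if match else None


def check_consistent(train_script: str, infer_script: str) -> None:
    """Raise ValueError if the two scripts disagree on PRE_SEQ_LEN."""
    train_len = read_pre_seq_len(train_script)
    infer_len = read_pre_seq_len(infer_script)
    if train_len is None or infer_len is None:
        raise ValueError("PRE_SEQ_LEN not found in one of the scripts")
    if train_len != infer_len:
        raise ValueError(
            f"PRE_SEQ_LEN mismatch: training uses {train_len}, "
            f"inference uses {infer_len}"
        )


if __name__ == "__main__":
    # Script contents reduced to the first line of each, as quoted in the issue.
    train_sh = "PRE_SEQ_LEN=8\n"
    demo_sh = "PRE_SEQ_LEN=128\n"
    try:
        check_consistent(train_sh, demo_sh)
    except ValueError as err:
        print(err)  # PRE_SEQ_LEN mismatch: training uses 8, inference uses 128
```

Reading the scripts as text keeps the check independent of how the shell would expand them; a script that computes the value dynamically would need a different approach.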

Expected Behavior

No response

Steps To Reproduce

no

Environment

- OS:

- Python:

- Transformers:

- PyTorch:

- CUDA Support (`python -c "import torch; print(torch.cuda.is_available())"`) :

Anything else?

No response

```
raise RuntimeError('Error(s) in loading state_dict for {}:\n\t{}'.format(
RuntimeError: Error(s) in loading state_dict for PrefixEncoder: size mismatch for embedding.weight: copying a param with shape torch.Size([8, 229376]) from checkpoint, the shape in current model is torch.Size([128, 229376]).
```
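In the error above the second dimension is identical on both sides; only the first dimension (the prefix length) differs. Assuming the ChatGLM-6B configuration of 28 layers and hidden size 4096, and a prefix encoder without projection whose embedding table has `pre_seq_len` rows of `num_layers * 2 * hidden_size` columns (a key and a value vector per layer), 28 × 2 × 4096 = 229376, which matches the message. The sketch below reproduces that arithmetic and checks a checkpoint shape before loading; `expected_prefix_shape` and `check_prefix_checkpoint` are hypothetical helpers, not functions from the repository.

```python
from typing import Tuple

# Assumed ChatGLM-6B configuration values (not stated in the issue itself).
NUM_LAYERS = 28
HIDDEN_SIZE = 4096


def expected_prefix_shape(pre_seq_len: int,
                          num_layers: int = NUM_LAYERS,
                          hidden_size: int = HIDDEN_SIZE) -> Tuple[int, int]:
    """Shape of the prefix embedding table under the assumptions above:
    one row per prefix token, each row holding a key and a value vector
    for every layer."""
    return (pre_seq_len, num_layers * 2 * hidden_size)


def check_prefix_checkpoint(ckpt_shape: Tuple[int, int], pre_seq_len: int) -> None:
    """Raise ValueError if a checkpoint's embedding.weight shape does not
    match the shape implied by pre_seq_len."""
    expected = expected_prefix_shape(pre_seq_len)
    if tuple(ckpt_shape) != expected:
        raise ValueError(
            f"checkpoint embedding.weight has shape {tuple(ckpt_shape)}, "
            f"but PRE_SEQ_LEN={pre_seq_len} expects {expected}; "
            f"the checkpoint was trained with PRE_SEQ_LEN={ckpt_shape[0]}"
        )


if __name__ == "__main__":
    print(expected_prefix_shape(8))    # (8, 229376)
    print(expected_prefix_shape(128))  # (128, 229376)
    check_prefix_checkpoint((8, 229376), pre_seq_len=8)  # passes silently
```

Since the row count of the saved table equals the training-time prefix length, the checkpoint itself tells you which `PRE_SEQ_LEN` inference has to use.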

This is a big bug!

I'm also confused about why `PRE_SEQ_LEN` has two different values in the two shell scripts.

Thanks for pointing this out. Already fixed.