ChatGLM-6B

[BUG/Help] <title>

Is there an existing issue for this?

- [X] I have searched the existing issues

Current Behavior

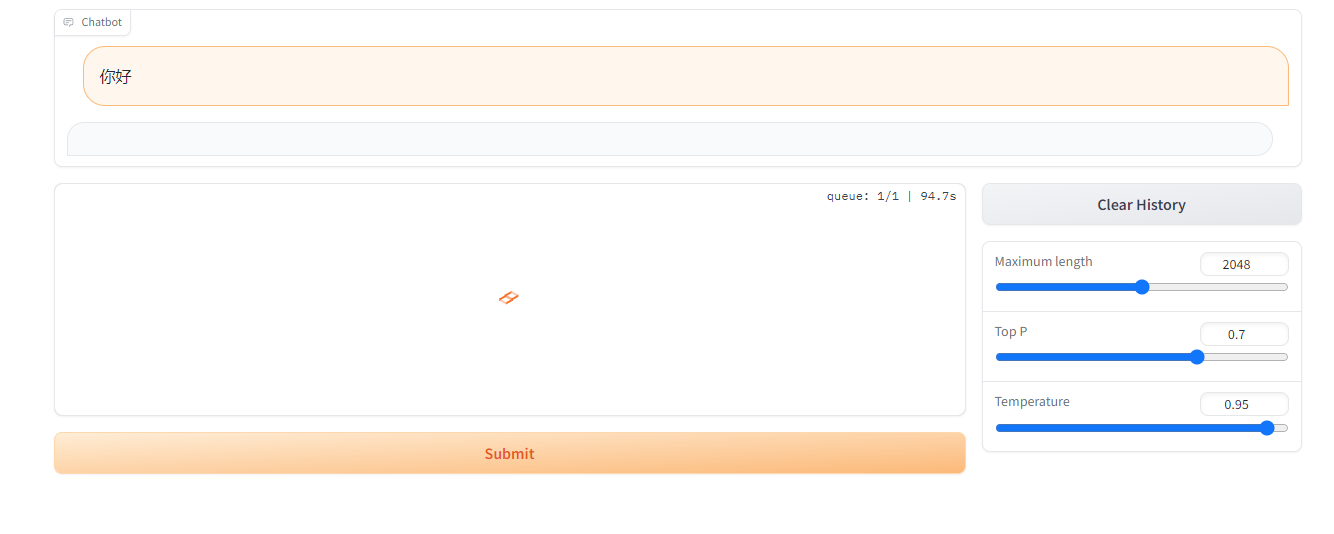

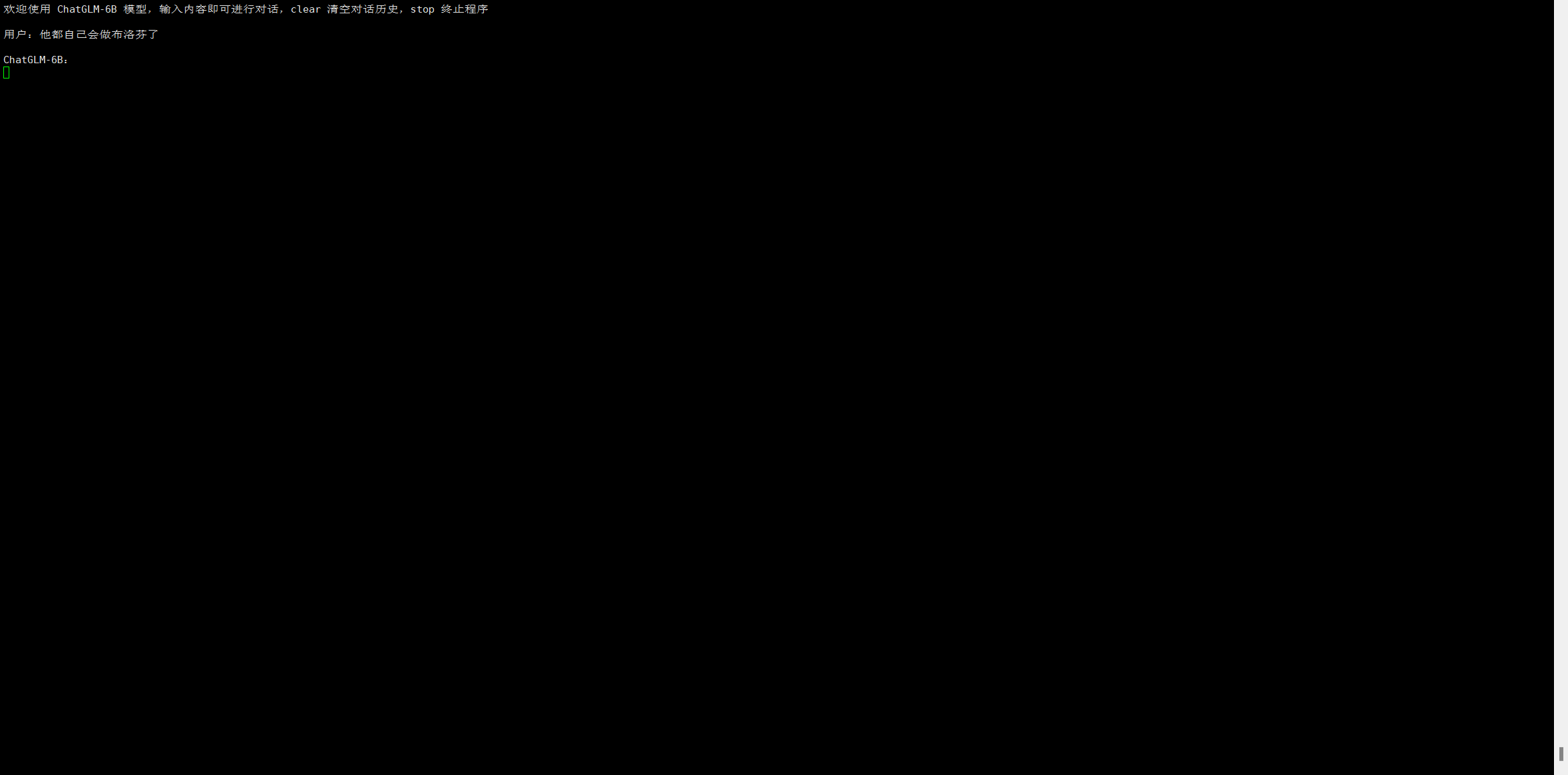

After training, the model starts up and runs, but it never returns a response.

Expected Behavior

The model returns a response.

Steps To Reproduce

On an A6000 GPU, after P-Tuning on my own data, I ran the trained model in `webdemo.py`.

Environment

- OS: Ubuntu

- Python:

- Transformers:

- PyTorch:

- CUDA Support (`python -c "import torch; print(torch.cuda.is_available())"`) :

true

Anything else?

No response

Please deploy the model as described in https://github.com/THUDM/ChatGLM-6B/tree/main/ptuning#%E6%A8%A1%E5%9E%8B%E9%83%A8%E7%BD%B2. The information provided so far is too limited for us to offer further help.

    import os
    import platform
    import signal
    from transformers import AutoTokenizer, AutoModel
    import torch

    tokenizer = AutoTokenizer.from_pretrained("ptuning/output/adgen-chatglm-6b-pt-128-2e-2/checkpoint-1000", trust_remote_code=True)
    model = AutoModel.from_pretrained("ptuning/output/adgen-chatglm-6b-pt-128-2e-2/checkpoint-1000", trust_remote_code=True)
    prefix_state_dict = torch.load(os.path.join("ptuning/output/adgen-chatglm-6b-pt-128-2e-2/checkpoint-1000", "pytorch_model.bin"))
    new_prefix_state_dict = {}
    for k, v in prefix_state_dict.items():
        new_prefix_state_dict[k[len("transformer.prefix_encoder."):]] = v
    model.transformer.prefix_encoder.load_state_dict(new_prefix_state_dict)
    model = model.half().cuda()
    model.transformer.prefix_encoder.float()
    model = model.eval()

Still no response. I have tried both the P-Tuning model and the fine-tuned model, and neither generates any output.

    import os
    import platform
    import signal
    from transformers import AutoTokenizer, AutoModel, AutoConfig
    import torch

    tokenizer = AutoTokenizer.from_pretrained("ptuning/output/adgen-chatglm-6b-pt-128-2e-2/checkpoint-1000", trust_remote_code=True)
    config = AutoConfig.from_pretrained("ptuning/output/adgen-chatglm-6b-pt-128-2e-2/checkpoint-1000", trust_remote_code=True, pre_seq_len=128)
    model = AutoModel.from_pretrained("ptuning/output/adgen-chatglm-6b-pt-128-2e-2/checkpoint-1000", config=config, trust_remote_code=True)
    prefix_state_dict = torch.load(os.path.join("ptuning/output/adgen-chatglm-6b-pt-128-2e-2/checkpoint-1000", "pytorch_model.bin"))
    new_prefix_state_dict = {}
    for k, v in prefix_state_dict.items():
        new_prefix_state_dict[k[len("transformer.prefix_encoder."):]] = v
    model.transformer.prefix_encoder.load_state_dict(new_prefix_state_dict)
    model = model.half().cuda()
    model.transformer.prefix_encoder.float()
    model = model.eval()

This does not work either.

    config = AutoConfig.from_pretrained("THUDM/chatglm-6b", trust_remote_code=True, pre_seq_len=128)
    model = AutoModel.from_pretrained("THUDM/chatglm-6b", config=config, trust_remote_code=True)

Alternatively, replace THUDM/chatglm-6b with the path to your local copy of the model. It must not be the checkpoint path, though: the checkpoint directory only contains the prefix encoder.

    import os
    import platform
    import signal
    from transformers import AutoTokenizer, AutoModel, AutoConfig
    import torch

    tokenizer = AutoTokenizer.from_pretrained("THUDM/chatglm-6b", trust_remote_code=True)
    config = AutoConfig.from_pretrained("THUDM/chatglm-6b", trust_remote_code=True, pre_seq_len=128)
    model = AutoModel.from_pretrained("THUDM/chatglm-6b", config=config, trust_remote_code=True)
    prefix_state_dict = torch.load(os.path.join("ptuning/output/adgen-chatglm-6b-pt-128-2e-2/checkpoint-1000", "pytorch_model.bin"))
    new_prefix_state_dict = {}
    for k, v in prefix_state_dict.items():
        new_prefix_state_dict[k[len("transformer.prefix_encoder."):]] = v
    model.transformer.prefix_encoder.load_state_dict(new_prefix_state_dict)
    model = model.quantize(4)
    model = model.half().cuda()
    model.transformer.prefix_encoder.float()
    model = model.eval()

    import os
    import torch
    from transformers import AutoConfig, AutoModel, AutoTokenizer

    CHECKPOINT_PATH = "./output/adgen-chatglm-6b-pt-8-1e-2-dev/checkpoint-3000"
    tokenizer = AutoTokenizer.from_pretrained("THUDM/chatglm-6b", trust_remote_code=True)
    config = AutoConfig.from_pretrained("THUDM/chatglm-6b", trust_remote_code=True, pre_seq_len=128)
    model = AutoModel.from_pretrained("THUDM/chatglm-6b", config=config, trust_remote_code=True)
    prefix_state_dict = torch.load(os.path.join(CHECKPOINT_PATH, "pytorch_model.bin"))
    new_prefix_state_dict = {}
    for k, v in prefix_state_dict.items():
        new_prefix_state_dict[k[len("transformer.prefix_encoder."):]] = v
    model.transformer.prefix_encoder.load_state_dict(new_prefix_state_dict)

The last step raises an error:

    raise RuntimeError('Error(s) in loading state_dict for {}:\n\t{}'.format(
    RuntimeError: Error(s) in loading state_dict for PrefixEncoder:
        Unexpected key(s) in state_dict: ".weight", "layernorm.weight", "layernorm.bias", "ion.rotary_emb.inv_freq", "ion.query_key_value.bias", "ion.query_key_value.weight", "ion.query_key_value.weight_scale", "ion.dense.bias", "ion.dense.weight", "ion.dense.weight_scale", "ttention_layernorm.weight", "ttention_layernorm.bias", "nse_h_to_4h.bias", "nse_h_to_4h.weight", "nse_h_to_4h.weight_scale", "nse_4h_to_h.bias", "nse_4h_to_h.weight", "nse_4h_to_h.weight_scale", "_layernorm.weight", "_layernorm.bias", "tion.rotary_emb.inv_freq", "tion.query_key_value.bias", "tion.query_key_value.weight", "tion.query_key_value.weight_scale", "tion.dense.bias", "tion.dense.weight", "tion.dense.weight_scale", "attention_layernorm.weight", "attention_layernorm.bias", "ense_h_to_4h.bias", "ense_h_to_4h.weight", "ense_h_to_4h.weight_scale", "ense_4h_to_h.bias", "ense_4h_to_h.weight", "ense_4h_to_h.weight_scale", ".bias", "".
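The truncated key names in this traceback (".weight", "layernorm.weight", and an empty string) are what one would expect if the loaded file holds the whole model rather than only the prefix encoder: cutting a fixed number of characters off every key slices unrelated names in the middle. The sketch below uses plain dictionaries in place of real state dicts to illustrate this. The helper names `strip_blindly` and `extract_prefix_encoder_keys` and the sample keys are hypothetical, chosen only for illustration.

```python
PREFIX = "transformer.prefix_encoder."


def strip_blindly(state_dict):
    """Mimic the loop from the thread: cut len(PREFIX) characters off every key."""
    return {k[len(PREFIX):]: v for k, v in state_dict.items()}


def extract_prefix_encoder_keys(state_dict):
    """Keep only keys that really start with PREFIX, then strip the prefix."""
    return {
        k[len(PREFIX):]: v
        for k, v in state_dict.items()
        if k.startswith(PREFIX)
    }


# Illustrative key names (assumed); the integer values stand in for tensors.
full_checkpoint = {
    "transformer.word_embeddings.weight": 0,
    "transformer.prefix_encoder.embedding.weight": 1,
    "lm_head.weight": 2,
}

print(strip_blindly(full_checkpoint))
print(extract_prefix_encoder_keys(full_checkpoint))
```

Filtering with `startswith` only hides the symptom. If the file really contains the full model, it may be simpler to load the checkpoint as a whole instead of extracting the prefix encoder from it.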

Old-version checkpoints should be loaded following option (2) in https://github.com/THUDM/ChatGLM-6B/tree/main/ptuning#%E6%A8%A1%E5%9E%8B%E9%83%A8%E7%BD%B2

I made that change and still get an error.

The checkpoint was generated by train.sh. How do I tell an old-version checkpoint from a new-version one?

By file size: a checkpoint of 3 GB or more is the old version.
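The size rule can be turned into a quick check before choosing a loading path. This is only a sketch: `looks_like_old_checkpoint` is a hypothetical helper, and reading "3 GB" as 3 * 1024**3 bytes is an assumption, since the thread gives no exact figure.

```python
import os

# Assumption: "3 GB" is taken as 3 * 1024**3 bytes.
OLD_CHECKPOINT_MIN_BYTES = 3 * 1024**3


def looks_like_old_checkpoint(checkpoint_dir, size_bytes=None):
    """Return True if pytorch_model.bin is at least 3 GB.

    size_bytes may be passed directly to skip the disk lookup,
    which is convenient for a dry run.
    """
    if size_bytes is None:
        weights_path = os.path.join(checkpoint_dir, "pytorch_model.bin")
        size_bytes = os.path.getsize(weights_path)
    return size_bytes >= OLD_CHECKPOINT_MIN_BYTES
```

A checkpoint flagged as old would then follow option (2) of the deployment guide linked earlier in the thread. A smaller one holds only the prefix encoder, so the base model has to be loaded first.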

The following call now raises a new error:

    model.transformer.prefix_encoder.load_state_dict(new_prefix_state_dict)

    AttributeError: 'ChatGLMModel' object has no attribute 'prefix_encoder'

For this problem, try switching to a different GPU. I used to get this error on a 1080, and it went away on a V100.
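Another possible cause is worth ruling out before changing hardware. Assuming the ChatGLM-6B modeling code only creates `prefix_encoder` when the config carries a `pre_seq_len` value, the attribute would be missing whenever the model was built without that setting or from an older copy of the modeling code. The sketch below is a hypothetical helper, `load_prefix_encoder`, that checks for the attribute first and fails with a more descriptive message. It uses only `getattr` and `load_state_dict`, and the error wording is my own.

```python
def load_prefix_encoder(model, prefix_state_dict):
    """Load prefix-encoder weights, failing early if the model has no prefix encoder."""
    prefix_encoder = getattr(model.transformer, "prefix_encoder", None)
    if prefix_encoder is None:
        # Assumed cause: the config passed to the model had no pre_seq_len.
        raise ValueError(
            "model.transformer has no prefix_encoder; check that the model "
            "was built from a config with pre_seq_len set"
        )
    prefix_encoder.load_state_dict(prefix_state_dict)
    return model
```

Called as `load_prefix_encoder(model, new_prefix_state_dict)`, this replaces the bare `load_state_dict` line and makes the missing-attribute case easier to diagnose.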

Same here. I tried the same inference approaches you did, and every result came back empty. Did you manage to solve it?