OutOfMemory error due to high memory usage of io.aleph.dirigiste.Pool (again)

Hi,

This is a follow-up of #394. I'm still experiencing random OOM errors while leaving Aleph running for a few hours.

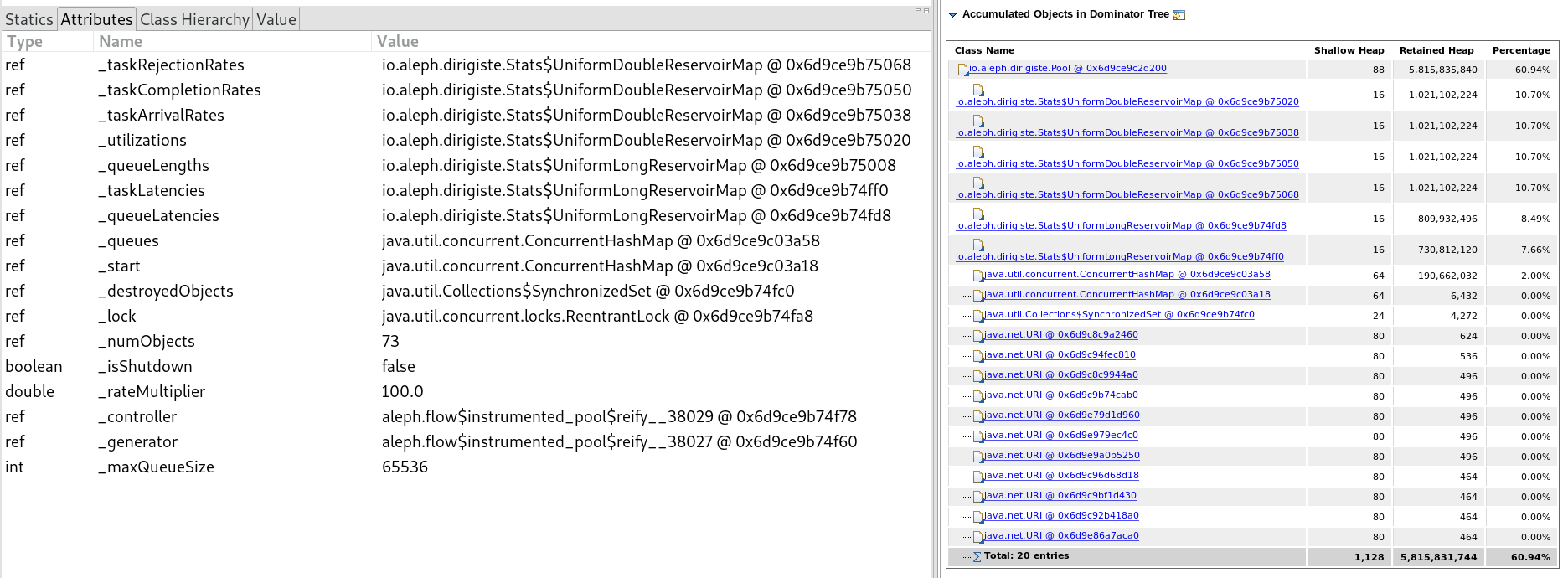

Here is what MAT shows:

Looking at dirigiste _queues map size, it contains 49'152 items, which seems quite a lot to me! So it seems like clearing of unused queues somehow doesn't happens.

I believe I have pinpointed the issue to the following piece of code in dirigiste: https://github.com/ztellman/dirigiste/blob/f5b035094fa947f9779d22d6d08a88f39f4a6e88/src/io/aleph/dirigiste/Pool.java#L329-L343.

More specifically line 331: entry.getValue().getUtilization(1) == 0

Here, the code gets the 1 percentile to decide if the queue is unused. By looking at how this percentile gets computed in dirigiste we have the following piece of code:

if (t == 1.0) {

return (double) vals[cnt-1];

}

So for a percentile of 1, it gets the last value of the reservoir of stats and assume it's the latest added metric.

This is fine as long as the reservoir contains 4'096 values or less. As soon as the reservoir has more values, every newly added metric is placed at a random location.

How can this happens? By default, aleph configures dirigiste with the following settings:

1. a control-period of 60'000

https://github.com/ztellman/aleph/blob/be1ee04c130660ce69ee7c6603132b36be79ec1f/src/aleph/http.clj#L146

2. a sample-period of 10

https://github.com/ztellman/aleph/blob/be1ee04c130660ce69ee7c6603132b36be79ec1f/src/aleph/flow.clj#L48

As such, dirigiste control loop attempts to clean unused queues only every 6'000 cycles, which is above the 4'096 reservoir size. So there is a very high probability that entry.getValue().getUtilization(1) does not represent the 1 percentile anymore.

I'll move modify control-period on my side to validate my analysis. In the mean time, a second thought about this is more than welcome since aleph & dirigiste integration remains pretty tricky to me :wink:

Another thing I had in mind when initially looking at this issue is that we may be tempted to use SoftReference for some of the internal dirigiste statistics.

This would mean that, under high memory pressure, we could tolerate loosing some of the metrics for the sake of the process survival.

Big question is to determine which stats we can soft references...

Quick update: I lowered control-period to 30'000 and although I can see more sites "recycled" (I tweaked dirigiste to log this information), that doesn't seems to change anything to the issue.

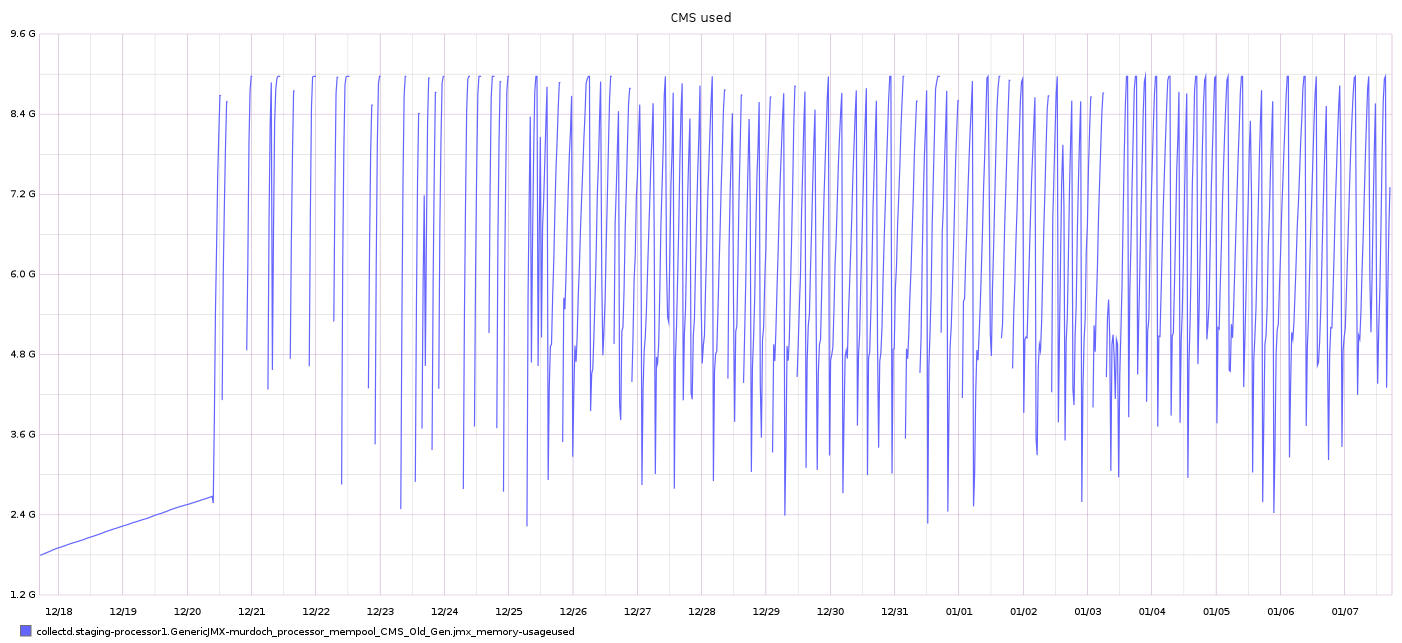

Here is a (barely readable) graph of the CMS old generation heap memory:

The strange thing is that it started to happen when I switched from aleph 0.4.7-alpha3 to 0.4.7-alph4... :thinking:

@rborer I think the most recent version of Aleph links the latest version of Dirigisty (upgraded). Maybe that's the reason?

@kachayev In fact, I think it comes from manifold. I've tried multiple combinations of aleph, manifold & dirigiste, and even with previous versions the issue remains.

The only common factor that I've just found out since the issue started to happens is that it's using manifold 0.1.9-alpha3 (yes, I have a somewhat complex set of dependencies). I'll attempt to downgrade to 0.1.9-alpha2 to validate my claim.

If it's true, then it means that there is something broken between manifold 0.1.9-alpha2 and 0.1.9-alpha3. I fear my PR https://github.com/ztellman/manifold/pull/157 may be the culprit...

@rborer I see. There also was a change with a class loader instance for manifold threads... but I don't see how it could be possibly connected to Dirigiste pool 🤔

Wrong guess it seems, the OOM still happens with manifold downgraded to 0.1.9-alpha2...

I'll continue my investigations, I still haven't been able to find the root cause of those OOMs and it's bugging me...

In the mean time, what do you think about my analysis on the control-period and sample-period default values in Aleph @kachayev @ztellman ? Is my understanding about dirigiste reservoirs correct?

Sorry for not responding sooner to this, I'm just getting back onto local time. The lerp method you mention assumes the array is in sorted order, which should be true due to https://github.com/ztellman/dirigiste/blob/master/src/io/aleph/dirigiste/Stats.java#L54. Let me know if I've missed something.

Absolutely. Thanks @ztellman , so I was completely wrong here...

Also, in case something comes to your mind while reading about this issue let me know @ztellman ; currently I'm out of ideas (and of time too sadly...)

You mention ~50k queues, which in Aleph corresponds to ~50k distinct hostnames. Is that something that might happen over the course of a minute or two in your use case?

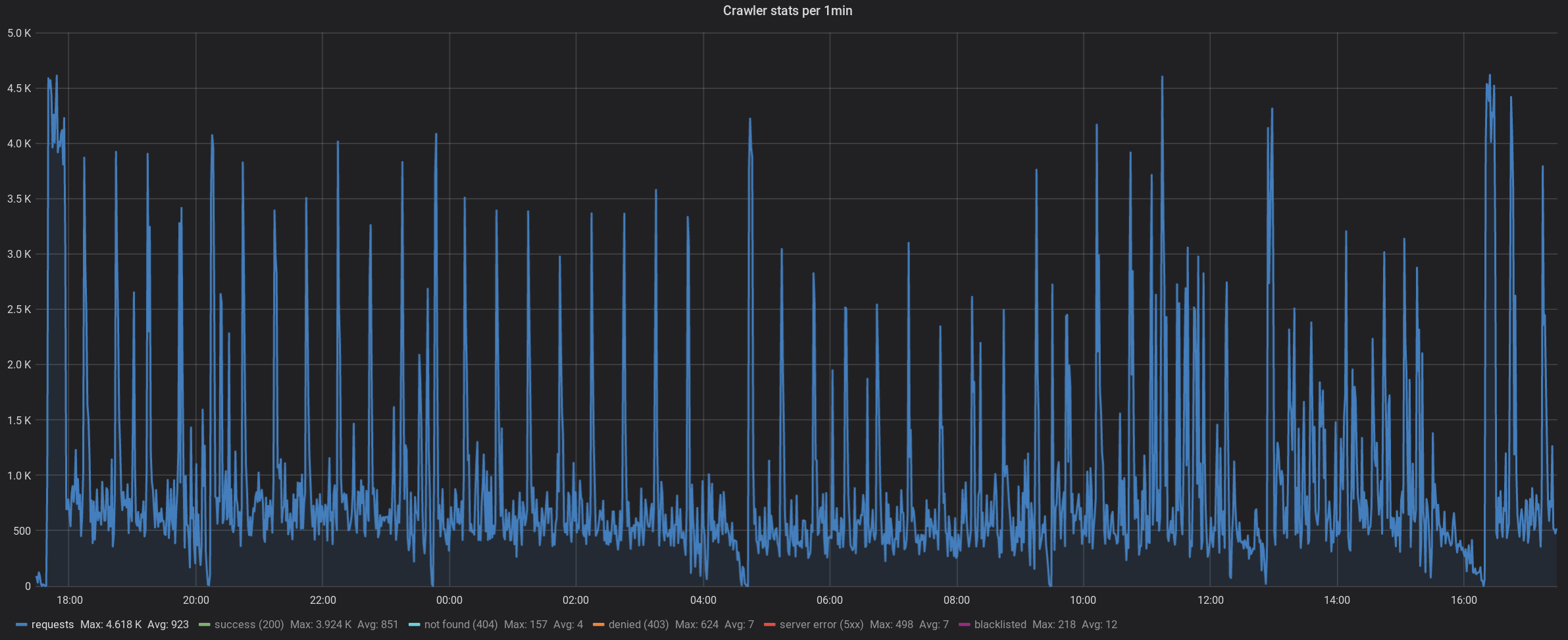

At first glance no, the biggest number of requests it receives is 4.6k / minute. Here is a plot of the last 24 hours:

I'll try to gather & plot more stats about the pool sizing to see if it is indeed growing through time or if there is a sudden spike that would lead to an OOM.

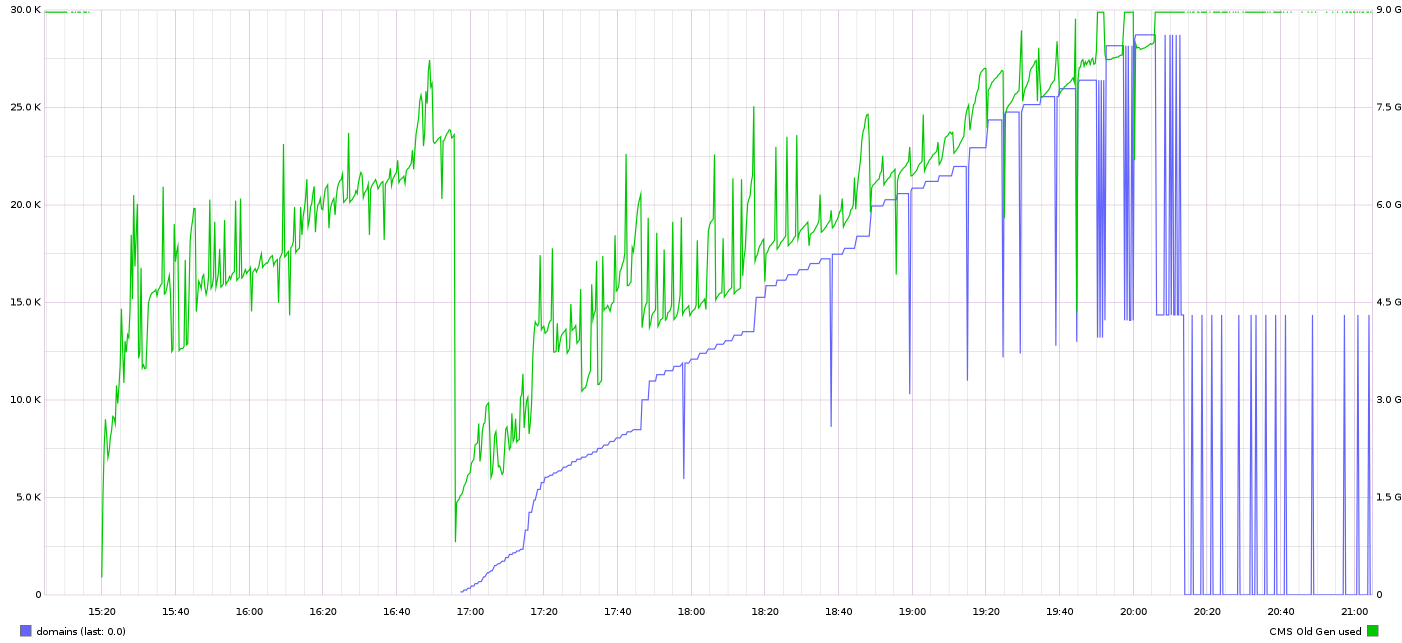

Here is a plot showing the number of domains (blue) and the old gen memory usage (green). Obviously continuously increasing...

Hi @rborer Did you ever progress further on this issue or conclude with something? I've recently had an OOM issue with aleph, and thanks to your previous issues I've upgraded to the latest dirigiste version. Thanks!

@rborer @ivarref any updates?

@Yneth For my case it turned out that dirigiste, but some other problem that caused the OOM. I switched away from dirigiste anyway. I described what/how I did that here: https://github.com/juxt/yada/issues/324

@ivarref that helped, thanks!

My bad to have remained silent such a long time. Unfortunately back in the days when this issue happened I took the decision to not continue to use Aleph. The project was dormant so the risk was too important to use it... So I cannot tell if the issue remains or has finally been fixed.

I anyway see that some of my dirigiste patches that may help in my scenario are still open. I am referring to the followings:

- https://github.com/clj-commons/dirigiste/pull/24

- https://github.com/clj-commons/dirigiste/pull/26

- https://github.com/clj-commons/dirigiste/pull/27

If others encounter the same kind of issues it may be worth trying to merge those 3 (the latest one is probably the most important). My assumption (hard to verify) is that under important load the queue length may vary and leads to incomplete cleanups.

Hope this helps!

This is tricky to know how to proceed. There were some memory leaks in Manifold and Aleph that were fixed which could very well have been the cause, plus your own OOM PR @rborer, clj-commons/dirigiste#19. So it's not even clear to me this is still an issue.

@ivarref is this is still a problem for you? @Yneth you bumped this conversation up and said you were having OOM issues with Dirigiste, do you have a test case or profiling dump I could look at?

For anyone who's having an OOM, does applying clj-commons/dirigiste#27 fix it? I'm not sure it's the cause, but it's sort of in the right place, as the computed utilization is used as a test to see whether a queue can be removed.

@KingMob It's not a problem for me anymore. The problem was some Datomic query for me, that was what caused the OOM. I did switch to a standard Java threadpool nevertheless though.