spikeinterface

spikeinterface copied to clipboard

spikeinterface copied to clipboard

export_to_phy runs out of memory and/or is slow

My test recording is 32 channels, 1 hr long. Kilosort2 finds 29 units with normal firing rates (all but one less than 20 Hz, one 100 Hz). Running Win10 with 24 GB RAM.

When I run:

# Get waveforms

waveform_folder = results_path/(sorter_name+'_waveforms')

ms_before = 1.5 # default 3

ms_after = 2.5 # default 4

we = si.extract_waveforms(recording_cmr, sorting, waveform_folder,ms_before=ms_before, ms_after=ms_after,

n_jobs=1, chunk_duration="10s", max_spikes_per_unit=500, return_scaled=True, overwrite=True)

# Write to Phy

phy_folder = results_path/(sorter_name+'_phy')

si.export_to_phy(we,output_folder=phy_folder,copy_binary=False, max_channels_per_template=8)

I get the error:

Output exceeds the size limit. Open the full output data in a text editor

---------------------------------------------------------------------------

MemoryError Traceback (most recent call last)

Cell In [23], line 18

16 phy_folder = results_path/(sorter_name+'_phy')

17 print(phy_folder)

---> 18 si.export_to_phy(we, output_folder=phy_folder, verbose=False, remove_if_exists=True)

File d:\hannah\dropbox\code\spikesort\spikeinterface\spikeinterface\exporters\to_phy.py:113, in export_to_phy(waveform_extractor, output_folder, compute_pc_features, compute_amplitudes, sparsity_dict, copy_binary, max_channels_per_template, remove_if_exists, peak_sign, template_mode, dtype, verbose, **job_kwargs)

111 if copy_binary:

112 rec_path = output_folder / 'recording.dat'

--> 113 write_binary_recording(recording, file_paths=rec_path, verbose=verbose, dtype=dtype, **job_kwargs)

114 elif isinstance(recording, BinaryRecordingExtractor):

115 rec_path = recording._kwargs['file_paths'][0]

File d:\hannah\dropbox\code\spikesort\spikeinterface\spikeinterface\core\core_tools.py:285, in write_binary_recording(recording, file_paths, dtype, add_file_extension, verbose, byte_offset, auto_cast_uint, **job_kwargs)

282 init_args = (recording.to_dict(), rec_memmaps_dict, dtype, cast_unsigned)

283 executor = ChunkRecordingExecutor(recording, func, init_func, init_args, verbose=verbose,

284 job_name='write_binary_recording', **job_kwargs)

--> 285 executor.run()

File d:\hannah\dropbox\code\spikesort\spikeinterface\spikeinterface\core\job_tools.py:287, in ChunkRecordingExecutor.run(self)

285 worker_ctx = self.init_func(*self.init_args)

286 for segment_index, frame_start, frame_stop in all_chunks:

--> 287 res = self.func(segment_index, frame_start, frame_stop, worker_ctx)

288 if self.handle_returns:

289 returns.append(res)

...

-> 4244 x = np.array(x, dtype, order='C') # make a copy, can modify in place

4245 zi = np.ascontiguousarray(np.reshape(zi, (-1, n_sections, 2)))

4246 sos = sos.astype(dtype, copy=False)

MemoryError: Unable to allocate 26.2 GiB for an array with shape (32, 109745986) and data type float64

This is similar to https://github.com/SpikeInterface/spikeinterface/issues/648#issue-1252688905. Based on that I tried chunking:

si.export_to_phy(we, output_folder=phy_folder, remove_if_exists=True,

copy_binary=False, n_jobs=1,max_channels_per_template=8, compute_amplitudes=True,chunk_duration='100s')

and it now finishes but is pretty slow (17 min)

I played around more extensively with a shorter example file (6 min long, 64channels) it also completes successfully but but slowly: it takes longer to export to phy than it does to sort -- 2min 53s to sort with Kilosort2, 2min 17s to extract waveforms, and 4min 47s to export to Phy -- so ~10 min total. Maybe not a fair comparison, but running Kilosort2 on the same file and exporting to Phy in Matlab is 2min 40s total -- exporting to Phy is almost instantaneous.

I'm curious if there is a reason for this or any way to speed up the phy export step (maybe adding code to directly export from Matlab to the matlab call, although that would only help with this sorter)

This comment mentions caching the recording extractor to speed things up:

https://github.com/SpikeInterface/spikeinterface/issues/72#issuecomment-680077923

I believe the update to the recommended code is this:

recording_saved = recording_cmr.save(folder=results_path/'spikeinterface')

I don't notice any improvement, and it adds ~1min of processing to save the recording_cmr. I run this after performing the filtering to create recording_cmr and before the code above, please correct me if I need to do anything else for this to be beneficial

Two other small issues relating to export_to_phy:

1. Despite setting max_channels_per_template=8, I see more than 8 channels active per unit in Phy. Is this expected?

2. params.py does not set the dat_path to the raw binary, so the trace data and waveforms are not loaded (just the template).

I think this occurs because the filtered recording extractor doesn't have a filepath associated with it: recording_cmr._kwargs['file_paths'] gives an error

Is is possible to add a parameter to export_to_phy to set the dat_path in params.py to a specific string? OR, to keep the file_paths information associated with the extractor after filtering?

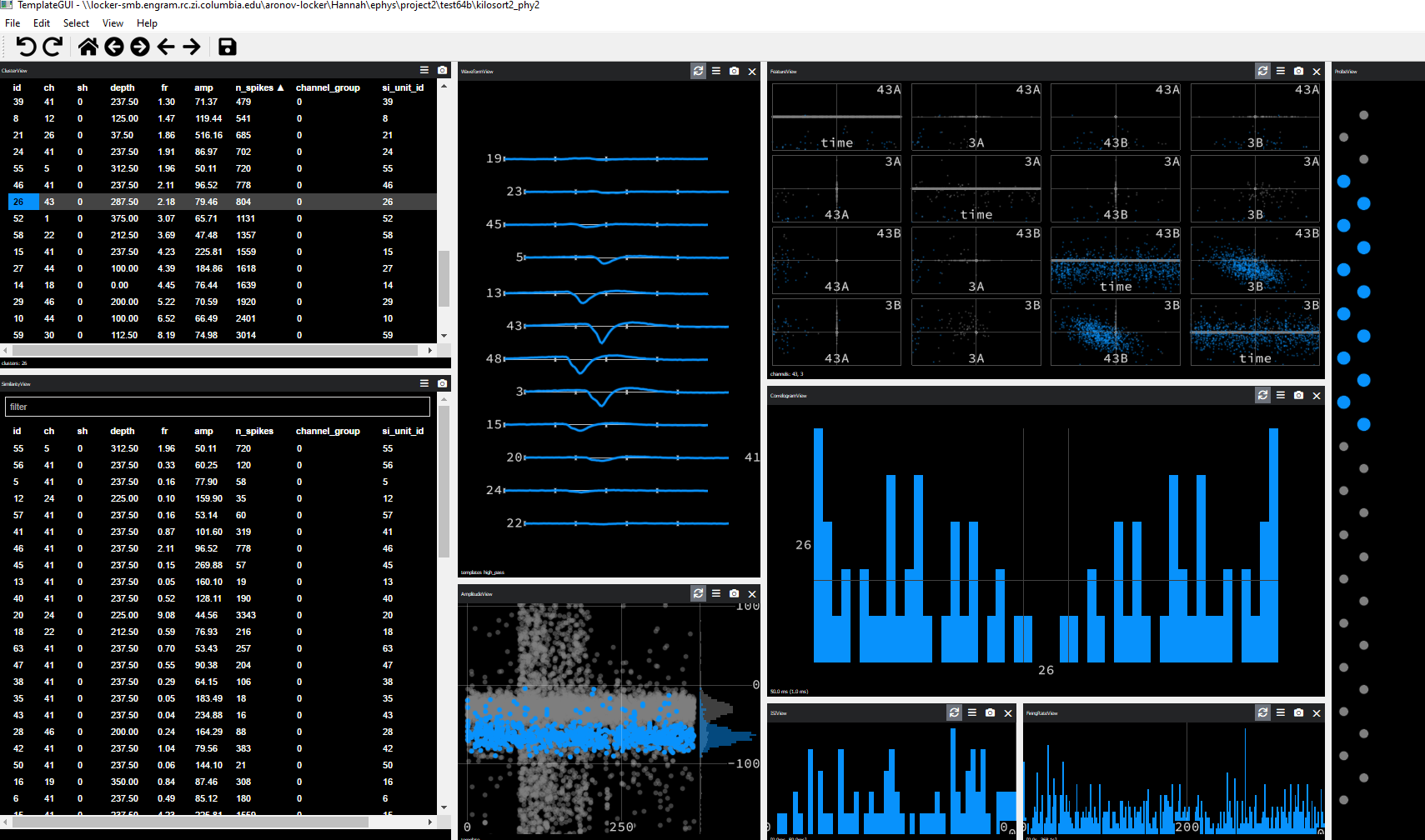

See example of both of these issues:

Quick update, I changed n_jobs=-1 and that sped it up a bit. (From the docs, "n_jobs: Number of cores to use in parallel. Uses all available if -1")

To work around my small issue 2 in Phy

- params.py does not set the dat_path to the raw binary, so the trace data and waveforms are not loaded (just the template)

I did:

# Export to Phy

phy_folder = results_path/'clean_phy'

we.recording = recording_raw # Just do this so that the path to the raw .dat file is stored in params.py

si.export_to_phy(we, output_folder=phy_folder,copy_binary=False,compute_amplitudes=True,

max_channels_per_template=8, chunk_duration='100s', n_jobs=-1)

I'd still be curious about this

- Despite setting max_channels_per_template=8, I see more than 8 channels active per unit in Phy. Is this expected?

and if there's anything else obvious I should do to speed things up further. But not urgent since it is workable as is.

p.s. Thanks for making & maintaining this! So far using a consensus of two sorters (either 1. primary sorting with kilo2, but only keep units also found by ironclust using si.compare_two_sorters, and then .hungarian_match_12, or 2. take consensus using si.compare_multiple_sorters with spiketrain_mode='intersection') seems very promising for my data, I think that it'll greatly speed up final manual curation

Hi @hpay

Thanks for the report. Indeed the easiest way to speed it up is to use more jobs, but I'd recommend using a much smaller chunk_duration. I usually use 1s and it seems to give a great compromise between speed and memory usage.

I'll take a look at the max_channels_per_template mismatch, probably it's a small bug on our side!

Indeed that seems to only control the number of channels on which PC projections are computed.

For a nice visualization, I would recommend using the sparsity_dict argument:

# example 1: select channels within a radius of 50um

sparsity_dict=dict(method="radius", radius_um=50)

# example 2: select 8 channels with largest amplitude

sparsity_dict=dict(method="best_channels", num_channels=8)

Cheers Alessio

I think also that we should change the behavior of exporters in general :

export only what is already computed (PCS, amplitudes, ...) and not compute this stuff inside export_report() and export_to_phy().

So the end user would have more control on everything for computation (sparsity, n_jobs, chunking).

And so only what-is-computed would be exported.

Mmm we can discuss about it!

@hpay what do you think? Pre-computing all the required data would take some extra lines of code, but in general you can control all of these aspects much better.

Thanks for the clarification on max_channels_per_template and sparsity_dict!

I did a quick test of export_to_phy, assuming that if max_channels_per_template controls how many channels go into the PC computation, reducing that might also speed things up. On a small dataset, max_channels_per_template = 1 took 1 min 22s, and max_channels_per_template = 20 took 1 min 34s. Not a huge effect but something I guess.

Re: pre-computing, would it be any faster overall? I have only ever used "extract_waveforms" and "export_to_phy" together as a unit, one after the other. My use case is NOT doing any real spike sorter evaluations, just to streamline curation of my data and then move onto analysis :)

Are the main bottlenecks of export_to_phy are 1. computing waveform amplitudes and 2. calculating PCs?

Yes those are the main bottlenecks. Computing amplitudes is usually pretty fast, but PC can be very slow. In order to speed them up, we added an option to use sparsity, i.e, for each unit, to compute PCs only on sparse channels. I don't think that is correctly propagated to Phy yet. I'll double check and let you know

fixed here https://github.com/SpikeInterface/spikeinterface/pull/1133