Luban

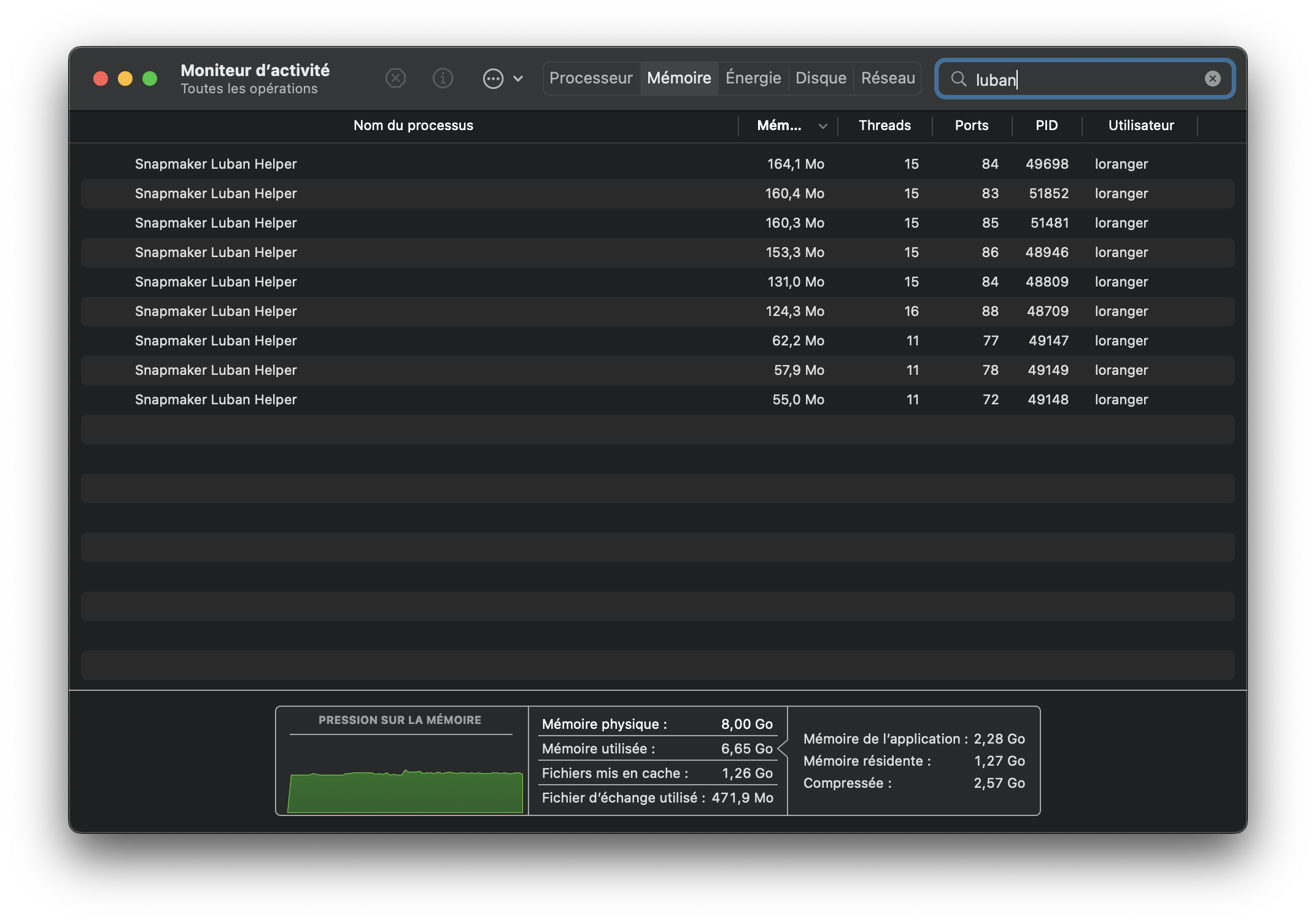

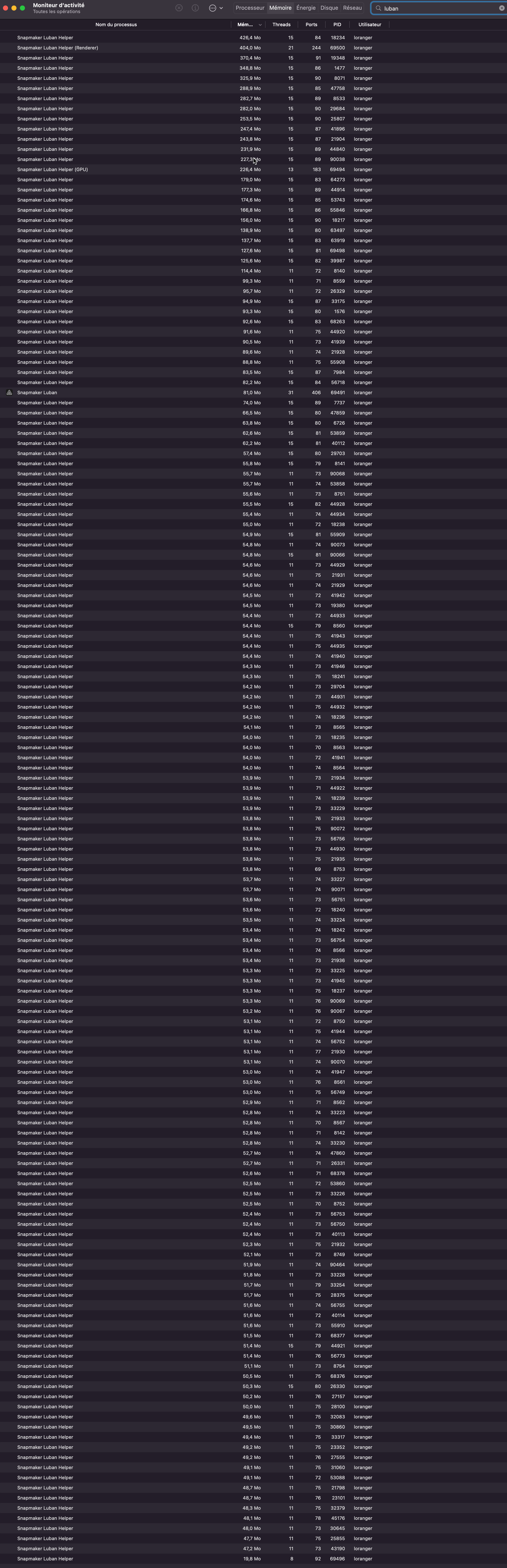

Bug: Helper never quits (and stacks up)

🐞 bug report

Affected Version(s)

The issue is found in version 4 (4.3 and 4.4)

Is this a regression? (optional)

Yes, the previous version I used (4.3.2) had the same behaviour

To Reproduce

Steps to reproduce the behavior:

- Start Luban

- Create a project

- Quit (with or without saving, and whether or not the project was started on the Snapmaker; it does not matter)

- Monitor your running processes matching Luban
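The monitoring step above could be scripted roughly as follows. This is only a sketch: `list_luban` and `count_luban` are hypothetical helper names, not part of Luban, and the filter simply assumes that every relevant process has "Luban" somewhere in its command line, as in the `ps` output in this report.

```shell
#!/bin/sh
# list_luban (hypothetical helper): read `ps` output on stdin and keep only
# lines that mention Luban. Lines that also contain "grep" are dropped, which
# hides the search command itself (including this awk filter).
list_luban() {
  awk '/Luban/ && !/grep/'
}

# count_luban (hypothetical helper): print how many such lines were seen.
count_luban() {
  list_luban | wc -l | tr -d ' '
}

# Typical use, run once before starting Luban and again after quitting it:
#   ps -ax | list_luban
#   ps -ax | count_luban
```

If the count grows after each start-and-quit cycle, helpers are being left behind.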

Expected behavior

There should be no remaining Luban process running once Luban has quit, but a helper (one for each previously started Luban instance) is still running and using memory

~ ツ ps -ax | grep Luban

47100 ?? 83:07.73 /Applications/Snapmaker Luban.app/Contents/Resources/app/node_modules/snapmaker-lunar/engine/MacOS/LunarMP modelsimplify -v -p -j /Users/loranger/Library/Application Support/snapmaker-luban/Config/printing/simplify_model.def.json -l /Users/loranger/Library/Application Support/snapmaker-luban/Tmp/07954960_53798017.stl -o /Users/loranger/Library/Application Support/snapmaker-luban/Tmp/-simplify

47569 ?? 76:06.03 /Applications/Snapmaker Luban.app/Contents/Resources/app/node_modules/snapmaker-lunar/engine/MacOS/LunarMP modelsimplify -v -p -j /Users/loranger/Library/Application Support/snapmaker-luban/Config/printing/simplify_model.def.json -l /Users/loranger/Library/Application Support/snapmaker-luban/Tmp/92905899_00567043.stl -o /Users/loranger/Library/Application Support/snapmaker-luban/Tmp/-simplify

47779 ?? 73:42.86 /Applications/Snapmaker Luban.app/Contents/Resources/app/node_modules/snapmaker-lunar/engine/MacOS/LunarMP modelsimplify -v -p -j /Users/loranger/Library/Application Support/snapmaker-luban/Config/printing/simplify_model.def.json -l /Users/loranger/Library/Application Support/snapmaker-luban/Tmp/39923483_02325052.stl -o /Users/loranger/Library/Application Support/snapmaker-luban/Tmp/-simplify

48709 ?? 0:08.67 /Applications/Snapmaker Luban.app/Contents/Frameworks/Snapmaker Luban Helper.app/Contents/MacOS/Snapmaker Luban Helper /Applications/Snapmaker Luban.app/Contents/Resources/app/server-cli.js

48809 ?? 0:08.26 /Applications/Snapmaker Luban.app/Contents/Frameworks/Snapmaker Luban Helper.app/Contents/MacOS/Snapmaker Luban Helper /Applications/Snapmaker Luban.app/Contents/Resources/app/server-cli.js

48946 ?? 0:21.99 /Applications/Snapmaker Luban.app/Contents/Frameworks/Snapmaker Luban Helper.app/Contents/MacOS/Snapmaker Luban Helper /Applications/Snapmaker Luban.app/Contents/Resources/app/server-cli.js

49147 ?? 0:03.61 /Applications/Snapmaker Luban.app/Contents/Frameworks/Snapmaker Luban Helper.app/Contents/MacOS/Snapmaker Luban Helper ./Pool.worker.js

49148 ?? 0:02.84 /Applications/Snapmaker Luban.app/Contents/Frameworks/Snapmaker Luban Helper.app/Contents/MacOS/Snapmaker Luban Helper ./Pool.worker.js

49149 ?? 0:02.56 /Applications/Snapmaker Luban.app/Contents/Frameworks/Snapmaker Luban Helper.app/Contents/MacOS/Snapmaker Luban Helper ./Pool.worker.js

49698 ?? 0:17.79 /Applications/Snapmaker Luban.app/Contents/Frameworks/Snapmaker Luban Helper.app/Contents/MacOS/Snapmaker Luban Helper /Applications/Snapmaker Luban.app/Contents/Resources/app/server-cli.js

51481 ?? 0:15.26 /Applications/Snapmaker Luban.app/Contents/Frameworks/Snapmaker Luban Helper.app/Contents/MacOS/Snapmaker Luban Helper /Applications/Snapmaker Luban.app/Contents/Resources/app/server-cli.js

51852 ?? 0:08.87 /Applications/Snapmaker Luban.app/Contents/Frameworks/Snapmaker Luban Helper.app/Contents/MacOS/Snapmaker Luban Helper /Applications/Snapmaker Luban.app/Contents/Resources/app/server-cli.js

52728 ttys000 0:00.00 grep --color=auto Luban

🌍 Your Environment

Platform:

- Operating System: macOS Monterey 12.6

- Printer: Snapmaker 2.0 A350

Sometimes it gets totally mad

We have confirmed this bug, and it will be fixed in the next version.

I see the same behaviour on Linux. Does the fix cover all versions?

Is there a way to clear out the cache or something so that we don't have this memory problem? Thanks
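One possible manual workaround, not something confirmed by the maintainers, is to end the leftover processes by hand. The sketch below uses a hypothetical helper, `luban_pids`, and assumes that leftover processes can be recognised by "Luban" in their command line, as in the `ps` output in the report; on Linux the process names may differ.

```shell
#!/bin/sh
# luban_pids (hypothetical helper): read `ps -ax` output on stdin and print the
# PID (first column) of every line that mentions Luban but not grep.
luban_pids() {
  awk '/Luban/ && !/grep/ { print $1 }'
}

# Assumed usage, only when no Luban window is open, since this would also
# stop a running instance:
#   ps -ax | luban_pids | xargs kill
```

Reviewing the PID list before piping it to `kill` is the safer habit, because the pattern matches anything with "Luban" in its command line.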

The issue has been fixed in v4.6.X.

If you find Helper processes stacking up again, please re-open this issue and provide steps to reproduce.