DefaultCPUAllocator: not enough memory

I ran python -u run_models.py --h_dim 300 --mb_size 32 --n_epoch 20 --gpu --lr 0.0001 until I got:

RuntimeError: [enforce fail at ..\c10\core\CPUAllocator.cpp:62] data. DefaultCPUAllocator: not enough memory: you tried to allocate %dGB. Buy new RAM!208096001

I have 48GB of RAM and a GTX 1060 GPU (6GB). Is that not enough?

What should I do? Thanks.

The model keeps the pre-processed data in memory, so I don't think decreasing the batch size will help. Can you post the full error stack trace?
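A side note on the message itself: the %dGB is an unformatted placeholder, and the trailing number looks like the requested size in bytes. That reading is an assumption based on how the message is printed, not something confirmed in this thread. Under that assumption, a quick conversion shows how large the failed request was:

```python
# Assumption: the number after "Buy new RAM!" is the size of the failed
# allocation in bytes, not gigabytes.
requested_bytes = 208096001

requested_mib = requested_bytes / 2**20  # bytes -> MiB
requested_gib = requested_bytes / 2**30  # bytes -> GiB

print(f"{requested_mib:.1f} MiB ({requested_gib:.2f} GiB)")
```

If that reading is right, the request that failed was only about 198 MiB, which would fit the point that the data already held in memory, rather than the batch size, is what uses up the RAM.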

I reran pre-processed.py and then ran run_models.py, and I got:

Finished loading dataset!

D:\chen_ya\AK-DE-biGRU-master\models.py:484: UserWarning: nn.init.xavier_normal is now deprecated in favor of nn.init.xavier_normal_.

nn.init.xavier_normal(self.M)

Epoch-0

Training: 0it [00:00, ?it/s]Traceback (most recent call last):

File "D:/chen_ya/AK-DE-biGRU-master/run_models.py", line 140, in

Is this a problem with my PyTorch version? I am using PyTorch 1.1.0.

No, it looks like you are running it on the CPU. Did you pass the argument for running it on the GPU?

Yes. I tried it again and it shows RuntimeError: CuDNN error: CUDNN_STATUS_SUCCESS

I checked my CUDA and cuDNN, and their versions are 9.1 and 7.1.2. Does that mean I have the wrong versions?

Maybe check your PyTorch GPU configuration and version with this?

In [1]: import torch

In [2]: torch.cuda.current_device()
Out[2]: 0

In [3]: torch.cuda.device(0)
Out[3]: <torch.cuda.device at 0x7efce0b03be0>

In [4]: torch.cuda.device_count()
Out[4]: 1

In [5]: torch.cuda.get_device_name(0)
Out[5]: 'GeForce GTX 950M'

In [6]: torch.cuda.is_available()
Out[6]: True
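The same checks can be collected in a small script, which is easier to paste into a reply. This is only a sketch: cuda_report is a made-up helper name, and it also prints the CUDA and cuDNN versions that PyTorch itself reports, since those can differ from the versions installed system-wide.

```python
import importlib.util


def cuda_report():
    """Collect what PyTorch reports about its CUDA setup (hypothetical helper)."""
    if importlib.util.find_spec("torch") is None:
        # PyTorch is not installed in this environment.
        return {"torch_installed": False}

    import torch

    report = {
        "torch_installed": True,
        "torch_version": torch.__version__,
        # CUDA version PyTorch was built with; None for CPU-only builds.
        "built_with_cuda": torch.version.cuda,
        "cuda_available": torch.cuda.is_available(),
    }
    if report["cuda_available"]:
        report["cudnn_version"] = torch.backends.cudnn.version()
        report["device_count"] = torch.cuda.device_count()
        report["device_name"] = torch.cuda.get_device_name(0)
    return report


if __name__ == "__main__":
    for key, value in cuda_report().items():
        print(f"{key}: {value}")
```

If cuda_available comes back False, the --gpu flag most likely cannot take effect and the run would fall back to the CPU.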

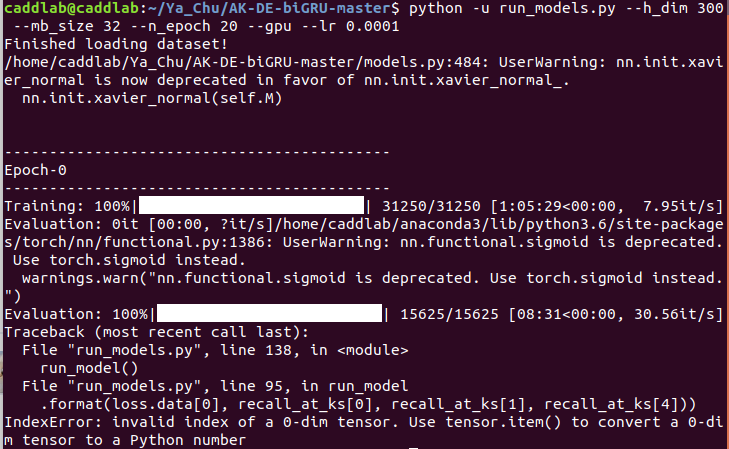

I finally got it to run successfully, but when the run finished it showed this.

How should I solve it? Thanks.

Cool that you could run it. Can you please change loss.data[0] to loss.item() and post the results?
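For context, and assuming the message is the usual 0-dim tensor indexing error: since PyTorch 0.4 a scalar loss is a 0-dim tensor, so it has to be read with .item() instead of being indexed. A minimal sketch of a helper that works with both styles is below; loss_to_float is a hypothetical name, not something in run_models.py.

```python
def loss_to_float(loss):
    """Return a scalar loss as a plain Python float (hypothetical helper)."""
    if hasattr(loss, "item"):
        # PyTorch 0.4 and later: .item() extracts the Python number.
        return float(loss.item())
    # Older PyTorch: the loss wraps a 1-element tensor that can be indexed.
    return float(loss.data[0])
```

Wherever the training loop reads loss.data[0], it could call loss_to_float(loss) instead, although simply writing loss.item() is enough on PyTorch 1.1.0.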

Thanks very much. I finished training on the data, but how do I chat with the model and test it on my computer?

Provide the model with a question utterance and a set of possible responses; the output is the predicted response.

Is there a script I should run to provide the model with a question utterance?

I don't have anything ready-made. You need to reuse parts of the batcher (data) and run_models to get the predictions.
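The selection step itself can be sketched independently of the model. Everything named here is hypothetical: rank_responses is an illustrative helper, and toy_score is a word-overlap stand-in used only so the example runs. In real use the scoring function would wrap the repository's batching code and the trained model's forward pass, whose interfaces are not described in this thread.

```python
def rank_responses(score_fn, question, candidates):
    """Score each candidate response and return (score, response) pairs, best first.

    score_fn(question, response) -> number; higher means a better match.
    """
    scored = [(float(score_fn(question, c)), c) for c in candidates]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored


def toy_score(question, response):
    # Stand-in for the trained model: fraction of response words
    # that also appear in the question.
    q_words = set(question.lower().split())
    r_words = set(response.lower().split())
    return len(q_words & r_words) / max(len(r_words), 1)


ranked = rank_responses(
    toy_score,
    "how do I install pytorch",
    ["try pip install pytorch", "the weather is nice"],
)
predicted = ranked[0][1]  # the highest-scoring candidate
```

Swapping toy_score for a function that encodes the pair and returns the model's matching score would give the predicted response for a question.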