ImageSharp

ImageSharp copied to clipboard

ImageSharp copied to clipboard

Color conversion with ICC profiles

Prerequisites

- [x] I have written a descriptive pull-request title

- [x] I have verified that there are no overlapping pull-requests open

- [x] I have verified that I am following matches the existing coding patterns and practice as demonstrated in the repository. These follow strict Stylecop rules :cop:.

- [x] I have provided test coverage for my change (where applicable)

Description

Note: This is a replacement for the original PR #273 from @JBildstein that was automatically closed by our Git LFS history rewrite. Individual commits have unfortunately been lost in the process. Help is very much needed to complete the work.

As the title says, this adds methods for converting colors with an ICC profile.

Architecturally, the idea is that the profile is checked once for available and appropriate conversion methods and a then a delegate is stored that only takes the color values to convert and returns the calculated values. The possible performance penalty for using a delegate is far smaller than searching through the profile for every conversion. I'm open for other suggestions though.

There are classes to convert from the profile connection space (=PCS, can be XYZ or Lab) to the data space (RGB, CMYK, etc.) and vice versa. There are also classes to convert from PCS to PCS and Data to Data but they are only used for special profiles and are not important for us now but I still added them for completeness sake.

A challenge here is writing tests for this because of the complexity of the calculations and the big amount of different possible conversion paths. This is a rough list of the paths that exist:

- "A to B" and "B to A" tags

- IccLut8TagDataEntry

- Input IccLut[], Clut, Output IccLut[]

- Matrix(3x3), Input IccLut[], IccClut, Output IccLut[]

- IccLut16TagDataEntry

- Input IccLut[], IccClut, Output IccLut[]

- Matrix(3x3), Input IccLut[], IccClut, Output IccLut[]

- IccLutAToBTagDataEntry/IccLutBToATagDataEntry (Curve types can either be IccCurveTagDataEntry or IccParametricCurveTagDataEntry (which has several curve subtypes))

- CurveA[], Clut, CurveM[], Matrix(3x1), Matrix(3x3), CurveB[]

- CurveA[], Clut, CurveB[]

- CurveM[], Matrix(3x1), Matrix(3x3), CurveB[]

- CurveB[]

- IccLut8TagDataEntry

- "D to B" tags

- IccMultiProcessElementsTagDataEntry that contains an array of any of those types in any order:

- IccCurveSetProcessElement

- IccOneDimensionalCurve[] where each curve can have several curve subtypes

- IccMatrixProcessElement

- Matrix(Nr. of input Channels by Nr. of output Channels), Matrix(Nr. of output channels by 1)

- IccClutProcessElement

- IccClut

- IccCurveSetProcessElement

- IccMultiProcessElementsTagDataEntry that contains an array of any of those types in any order:

- Color Trc

- Matrix(3x3), one curve for R, G and B each (Curve types can either be IccCurveTagDataEntry or IccParametricCurveTagDataEntry (which has several curve subtypes))

- Gray Trc

- Curve (Curve type can either be IccCurveTagDataEntry or IccParametricCurveTagDataEntry (which has several curve subtypes))

The three main approaches in that list are

- A to B/B to A: using a combination of lookup tables, matrices and curves

- D to B: using a chain of multi process elements (curves, matrices or lookup)

- Trc: using curves (and matrices for color but not for gray)

The most used approaches are Color Trc for RGB profiles and LutAToB/LutBToA for CMYK profiles.

Todo list:

- [x] Integrate with the rest of the project

- [x] Write tests that cover all conversion paths

- [x] Review architecture

- [x] Improve speed and accuracy of the calculations

Help and suggestions are very welcome.

I wonder why the test MatrixCalculator_WithMatrix_ReturnsResult only fails with netcoreapp2.1 and not with the other frameworks.

@brianpopow It'll be an accuracy issue most likely. (I hope it's not a JIT issue). It should be possible to inspect the result and see.

Codecov Report

:exclamation: No coverage uploaded for pull request base (

main@ca20c92). Click here to learn what that means. The diff coverage isn/a.

:exclamation: Current head 5834c39 differs from pull request most recent head f60d4b8. Consider uploading reports for the commit f60d4b8 to get more accurate results

@@ Coverage Diff @@

## main #1567 +/- ##

======================================

Coverage ? 87%

======================================

Files ? 1023

Lines ? 55212

Branches ? 7052

======================================

Hits ? 48227

Misses ? 5768

Partials ? 1217

| Flag | Coverage Δ | |

|---|---|---|

| unittests | 87% <0%> (?) |

Flags with carried forward coverage won't be shown. Click here to find out more.

:mega: We’re building smart automated test selection to slash your CI/CD build times. Learn more

@brianpopow It'll be an accuracy issue most likely. (I hope it's not a JIT issue). It should be possible to inspect the result and see.

The issue only happens with a Release build. I think i found the reason, but it seems very weird. Vector3.Zero does not have the expected value (0, 0, 0).

This can be seen with the testoutput:

[xUnit.net 00:00:07.19] MatrixCalculator_WithMatrix_ReturnsResult(matrix2D: { {M11:1 M12:0 M13:0 M14:0} {M21:0 M22:1 M23:0 M24:0} {M31:0 M32:0 M33:1 M34:0} {M41:0 M42:0 M43:0 M44:1} }, matrix1D: <-0,0007887525. 4,590794E-41. 1>, input: <0,5. 0,5. 0,5. 0>, expected: <0,5. 0,5. 0,5. 0>) [FAIL]

matrix1D is supposed to be Vector3.Zero

Vector3.Zero does not have the expected value (0, 0, 0).

@brianpopow Woah! That's bonkers!

Vector3.Zero does not have the expected value (0, 0, 0).

@brianpopow Woah! That's bonkers!

I have reported this issue: https://github.com/dotnet/runtime/issues/55623

They confirmed the issue, but they say its unlikely to be fixed because netcore2.1 is out of support in august.

So long story short: be careful with default values or Vector.Zero in testdata.

@JimBobSquarePants It would be really nice, if we could bring this PR forward. This would be a good addition to ImageSharp. I thought, I may ask you, if you know what the PR needs (besides tests) to be finished?

What would be the right way to apply an embedded color profile? My first attempt was:

var converter = new IccPcsToDataConverter(profile);

for (int y = 0; y < image.Height; y++)

{

for (int x = 0; x < image.Width; x++)

{

var inputVec = image[x, y].ToVector4();

Vector4 converted = converter.Calculate(inputVec);

image[x, y] = new RgbaVector(converted.X, converted.Y, converted.Z);

}

}

Here is an example image with adobe rgb color profile:

This does not seems to work, the colors seem to be wrong. Here are more example images

@brianpopow Honestly.....

I don't know. I was hoping the OP would come back to finish things off. I've just kept things updated over the years and hadn't gotten involved at all in the implementation as yet.

Judging from the old comments in the previous PR I believe the code is based somewhat based on the following

https://github.com/InternationalColorConsortium/DemoIccMAX/tree/master/IccProfLib

As for accuracy. That result looks like it's just spitting out the sRGB values again.

I do agree that it would be an awesome addition to the library and would save a ton of headaches. I hoped we'd get it in V3 but that's a mighty big ask.

I think we definitely need a reference implementation to compare the results against. I tried BABL which gnome is using, but i could not get it to work on windows. I will take a look at DemoIccMAX

Just fixed up the merge conflicts here. Git really struggle to handle the trait attribute in combination with the namespace nesting change.

I am still trying to understand how this is supposed to work. Judging from the explanation from wikipedia ICC-Details I think it should work like this (anyone with more knowledge about color profiles, please correct me, if I am wrong):

If we want to convert the pixel data of an image according to it's color profile, we always need two colorprofiles.

- First the input color profile (the embedded color profile)

- The Target color profile (maybe sRGB is a reasonable default, or the user would need to provide a color profile to convert to)

Each RGB triplet of the image is first converted to the Profile connection space (PCS) using the input/embedded profile and then PCS is converted to the target profile. So we would need two converters. I would assume this would be done like this:

private static void ProfileToProfileConversion(Image<RgbaVector> image, IccProfile inputIccProfile, IccProfile outputIccProfile)

{

IccDataToPcsConverter converterDataToPcs = new(inputIccProfile);

IccPcsToDataConverter converterPcsToData = new(outputIccProfile);

for (int y = 0; y < image.Height; y++)

{

for (int x = 0; x < image.Width; x++)

{

Vector4 inputVec = image[x, y].ToVector4();

Vector4 converted = converterDataToPcs.Calculate(inputVec);

Vector4 converted2 = converterPcsToData.Calculate(converted);

image[x, y] = new RgbaVector(converted2.X, converted2.Y, converted2.Z);

}

}

}

Does that sound reasonable?

Thanks for doing the analysis there @brianpopow yes, I think that is a reasonable summation.

I would also definitely use sRGB as a reasonable default.

I would initially rewrite your sample as the following (assuming the calculators persist alpha component data). We can use scaled vectors by default and avoid all the per-pixel clamping overheads in the individual calculators.

public static void ProfileToProfileConversion<TPixel>(Image<TPixel> image, IccProfile inputIccProfile, IccProfile outputIccProfile)

where TPixel : unmanaged, IPixel<TPixel>

{

IccDataToPcsConverter converterDataToPcs = new(inputIccProfile);

IccPcsToDataConverter converterPcsToData = new(outputIccProfile);

Configuration configuration = image.GetConfiguration();

image.ProcessPixelRows(accessor =>

{

using IMemoryOwner<Vector4> vectors = configuration.MemoryAllocator.Allocate<Vector4>(accessor.Width);

Span<Vector4> vectorsSpan = vectors.GetSpan();

for (int y = 0; y < accessor.Height; y++)

{

Span<TPixel> row = accessor.GetRowSpan(y);

PixelOperations<TPixel>.Instance.ToVector4(configuration, row, vectorsSpan, PixelConversionModifiers.Scale);

for (int x = 0; x < vectorsSpan.Length; x++)

{

Vector4 pcs = converterDataToPcs.Calculate(vectorsSpan[x]);

vectorsSpan[x] = converterPcsToData.Calculate(pcs);

}

PixelOperations<TPixel>.Instance.FromVector4Destructive(configuration, vectorsSpan, row);

}

});

}

However, I think we should improve the IccConverterBase and IVector4Calculator APIs to take a Span<Vector4>. That way we can look to optimize the conversion internally as much as possible using AVX (would need help here).

Unfortunately it does not seem to work. I tried to convert the following image with adobe rgb profile:

to sRgb with the code snipped mentioned above, but the colors look completely wrong.

The sRgb profile I used:

The testimages comes from this very helpful website: http://regex.info/blog/photo-tech/color-spaces-page2

If my code snipped above and assumptions are correct, it seems there is an bug with the color conversion. I tried the last few days to figure out what's wrong, but did not succeed. I think we need someone who has more experience with color profile's for advice. I dont know how to proceed with this.

Can you share your test code? I can use that as a starting point to see if I can figure this out.

Can you share your test code? I can use that as a starting point to see if I can figure this out.

Its the same as before:

private static void ProfileToProfileConversion(Image<RgbaVector> image, IccProfile inputIccProfile, IccProfile outputIccProfile)

{

IccDataToPcsConverter converterDataToPcs = new(inputIccProfile);

IccPcsToDataConverter converterPcsToData = new(outputIccProfile);

for (int y = 0; y < image.Height; y++)

{

for (int x = 0; x < image.Width; x++)

{

Vector4 inputVec = image[x, y].ToVector4();

Vector4 converted = converterDataToPcs.Calculate(inputVec);

Vector4 converted2 = converterPcsToData.Calculate(converted);

image[x, y] = new RgbaVector(converted2.X, converted2.Y, converted2.Z);

}

}

}

image.Metadata.IccProfile = outputIccProfile;

image.SaveAsPng("output.png");

I know your approach will be more performant, but we first need to make it correct before we try to optimize it. The result should look exactly like what any browser would show, but it is completely off. I tried adding the srgb profile to the image and also removing it (assuming most viewers would assume sRgb), same result.

Sorry I meant loading the sRGB profile that you shared. Wasn’t sure how you were going about that.

My first thoughts are likely a wrong assumption internally about the layout of matrices - column vs row major.

I wonder if it'd be worth looking at this project for ideas: https://github.com/mm2/Little-CMS

@brianpopow I don't think it's as bad as it initially seems. I added some test images from the sample page you referenced and a round trip test. The output, though slightly different (likely due to precision) seems to be pretty good.

Here's the roundtrip result for the Adobe image. BeyondCompare ignores the profile during comparison which is useful here.

And here Wide

Here's the Wide compared to Adobe converted to Wide

Some issues I have found:

- IccConverterBase throws for both the sRGB profile and the ProPhoto profile saying that they are invalid for conversion.

I guess we can compare the checks against IccProfLib to see why.

I'm at a loss. I cannot find equivalent checks in what I thought was the referenced library for the port.

@JBildstein if you're still around we'd be very grateful if you could give us a hint. I feel like we're incredibly close to a working solution here.

Sorry I meant loading the sRGB profile that you shared. Wasn’t sure how you were going about that.

@JimBobSquarePants: Nothing special there. I have exported the profiles like this:

Image<RgbaVector> image = Image.Load<RgbaVector>(@"E:\testimages\colorprofile\Momiji-WideRGB-yes.jpg");

File.WriteAllBytes("WideRGB.icc", image.Metadata.IccProfile.ToByteArray());

and then loaded the profile again like this:

byte[] profileDataWide = File.ReadAllBytes(@"E:\testimages\colorprofile\WideRgb.icc");

IccProfile profileWide = new(profileDataWide);

Here I attach all the testimages with the profiles for anyone who wants to try it out: colorprofile_with_images.zip

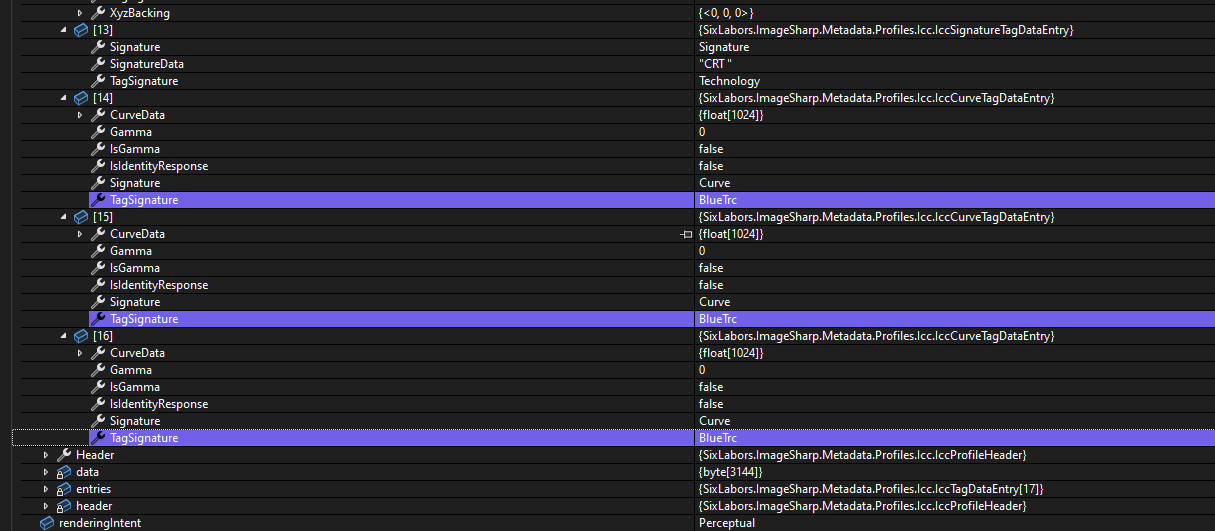

@brianpopow I've made some headway here. The checks appear to be fine; the issue seems to be with our profile reader.

EXIFTools reports our SRGB profile as having all three tone reproduction curves wheras our reader reports the blue curve 3 times.

I'm attempting to parse our reader code no to see if something obvious jumps out. The length of our data does not match the reported byte length

OK. Fixed the reader by removing the reader temp dictionary, it seems multiple tags can share the same data offset (I guess this saves space if the data is the same). I think the dictionary code was trying to handle that but was setting the entire tag.

I also attempted to fix the OOB error that was thrown in the LutCalculator I saw during round-tripping the sRGB profile when the passed value was 1. Not sure if the fix was correct though.

It seems things are working though not as accuratle as I'd like.

My round-trip tests are junk though and the converter method should be updated to check if the profile signatires are the same so will need to fix them up.

I'm at a loss. I cannot find equivalent checks in what I thought was the referenced library for the port.

I believe this is repository the source code is based on: InternationalColorConsortium/DemoIccMAX, as this was mentioned in the original PR. I tried to understand how this works, but in my opinion this repo is not very helpful. I was not even able to figure out how to convert from one profile to another. This post sums the issues with the repo up quite good.

OK. Fixed the reader by removing the reader temp dictionary, it seems multiple tags can share the same data offset (I guess this saves space if the data is the same). I think the dictionary code was trying to handle that but was setting the entire tag.

Nice, good work in finding the issue.

I'm pretty happy with this at the moment.

There's likely scope for performance improvement, and we should consider utilizing double precision in our converters for greater accuracy. (That would require us implementing double precision Vector4 and Matrix4x4 types though which is a lot of work). Visually the images appear to look ok though so not sure the effort is worth it.

Once the conversion calling code is implemented, I can add some additional tests and update the dodgy ones I added before.

Ok. @SixLabors/core I think this is good to review. I have added conversion methods and decoder options to allow converting to sRGB on decode. The converter itself is internal for now.

There are performance opportunities in the new code where we can potentially use a LUT for gamma calculations, but I haven't implemented that and likely will not given time-pressure and growing desire to ship something.

@brianpopow I've found an issue 😔

I've added an image containing a CMYK profile from #129 and it causes an out-of-range exception in ClutCalculator.Interpolate (I've marked where). Clamping the value doesn't appear to correct the issue so there is something else going on in the calculator.

There's some information on the calculator in #273 which I have copied over. This includes a link to the reference source method which unfortunately is written in C++ which I struggle with at the best of times so I'm at a loss as to what the issues is.

For reference here's a quick explanation of the CLUT (Color LookUp Table) interpolation: It's nothing more than an n-dimensional linear interpolation plus finding the nodes. There are three things that need to be done and in the current interpolation method each step is a loop.

1) Finding nodes: This is an example CLUT, two input channels (A, B), three output channels (X, Y, Z) and a grid point count of 3 for A and B (it could be different for each channel but usually isn't). The values of X, Y, Z are nonsense and don't matter for this example.

A B X Y Z 0 0 0.1 0.1 0.1 0 0.5 0.2 0.2 0.2 0 1 0.3 0.3 0.3 0.5 0 0.4 0.4 0.4 0.5 0.5 0.5 0.5 0.5 0.5 1 0.6 0.6 0.6 1 0 0.7 0.7 0.7 1 0.5 0.8 0.8 0.8 1 1 0.9 0.9 0.9 The values of A and B aren't actually stored anywhere, they can be calculated and this is what the inner loop does. The outer loop finds the nodes for the interpolation. To do the interpolation we need every variation of lower and higher values. E.g. if A = 0.3 and B = 0.8 then we need to interpolate the values at

A B X Y Z 0 0.5 A low, B low 0.2 0.2 0.2 0 1 A low, B high 0.3 0.3 0.3 0.5 0.5 A high, B low 0.5 0.5 0.5 0.5 1 A high, B high 0.6 0.6 0.6 The line

if (((i >> j) & 1) == 1)that @antonfirsov pointed out above was the simplest way I could think of to iterate over all variations of high and low (it's the same as an integer in binary). If there's a more vector friendly way I'd be happy to implement that.2) Calculating the factors for interpolation: The actual interpolation is the same as described here for bilinear unit square but done for n channels instead of just two: Wikipedia This part calculates all the factors for the third loop.

3) Interpolation of the output: This loop calculates the final interpolated output values for each output channel using the previously calculated factors.

As a reference, this is code from the official ICC repo: InterpND They also have separate interpolation routines for a channel count of 1 to 6 and it'll likely be a lot faster. I'd like to do the same later even if it won't be pretty. Having a working n-dimensional interpolation is still beneficial for reference/comparison and for potential expansion later.

@brianpopow I've found an issue 😔

I've added an image containing a CMYK profile from #129 and it causes an out-of-range exception in

ClutCalculator.Interpolate(I've marked where). Clamping the value doesn't appear to correct the issue so there is something else going on in the calculator.There's some information on the calculator in #273 which I have copied over. This includes a link to the reference source method which unfortunately is written in C++ which I struggle with at the best of times so I'm at a loss as to what the issues is.

@JimBobSquarePants

I struggle myself to understand the reference implementation. I will have some free time on friday where I can take a closer look.

It would be really beneficial to understand how conversion from one profile to another is done with the reference implementation. Last time I look at it, which is some time ago, I could not understand it. There is a iccRoundTrip_d.exe in DemoIccMAX\Testing. I guess that should do it somehow.

At the risk of muddying the issue a bit, although N-dimensional interpolation can be useful to have as a fallback, in practice it would rarely if ever be used. Real-world profiles will have either 3 or 4 input channels, and for those profiles, tetrahedral interpolation is faster and gives better results.

There's a 3D tetrahedral implementation in the linked reference source (Interp3dTetra) that looks reasonble, though not optimized. This post has some good visuals and an explanation of why it's better. To extend tetrahedral to 4 dimensions, you'd simply do a 3D interpolation for each of the possible values of the 4th dimension and then lerp between those two results. For CMYK, this works best if you use the K channel for the lerp step, although plenty of implementations just use C since that matches the order of the LUT in the profile.

You may have better luck in general looking at something like https://github.com/mm2/Little-CMS as a reference, since it's more focused on real-world use cases. DemoIccMAX is ostensibly written for completeness and ease of readability, not for practicality.

@saucecontrol I was hoping you'd comment here at some point. Tetrahedral interpolation looks like it should be our preferred approach, but I would not be able to implement it nor fix the issue I've found. I really struggle reading all these sources!

Do these help?

https://github.com/mm2/Little-CMS/blob/master/src/cmsintrp.c#L623 https://github.com/mm2/Little-CMS/blob/master/src/cmsintrp.c#L855

First link is 3D tetrahedral, and second extends that to 4D (the float 4D one is macro-heavy so I linked the integer version). That file also has trilinear interpolation, which might help you figure out where your ND has gone wrong.

The one easy improvement to the LCMS tetrahedral implementation is that it works on each output channel separately, which can be combined into a single step that works with Vector3 instead for the common case of 4->3 mapping, as in CMYK->LAB.

Are you clear on the steps involved for CMYK->RGB conversion, and have you checked that your inputs to the CLUT step are correct? I'm not in a good place to review the code in this PR just now, but I have a good handle on the logic and have implemented most of it myself in various places. If there's anything I can help with conceptually, I'm happy to answer any questions.