proxsuite

The effect of eps_rel in the solver's settings is very strange.

I find that even changing eps_rel from 0 to a very small value (e.g. from 0 to 1e-15, with eps_abs kept at 1e-4) has a large impact on the results: some variables differ by more than 50%. If I solve the same problem with OSQP, changing the solver parameters from (eps_abs = 1e-4, eps_rel = 0) to (eps_abs = 1e-4, eps_rel = 1e-15) makes little difference. Am I misunderstanding this parameter, or is there a bug in the relative convergence criterion of the ProxQP solver?

My QP has about 500 variables and about 1000 constraints, more than 70% of which are inequality constraints. The sparsity ratio is about 0.1. Thank you!
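For reference, a mixed absolute/relative stopping test in the style of OSQP's termination criterion has the form ||r||_inf <= eps_abs + eps_rel * scale, where scale is the largest infinity norm among the quantities the residual is compared with (e.g. A*x and b). The sketch below is a minimal illustration under that assumption; it is not ProxQP's code, and `inf_norm`, `mixed_tolerance` and `converged` are hypothetical helpers. It shows why one would expect eps_rel = 1e-15 to behave almost exactly like eps_rel = 0: the relative term only matters when scale is astronomically large.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Infinity norm: max_i |v_i| (0 for an empty vector).
double inf_norm(const std::vector<double>& v) {
  double m = 0.0;
  for (double x : v) m = std::max(m, std::fabs(x));
  return m;
}

// Hypothetical OSQP-style mixed tolerance: eps_abs + eps_rel * scale, where
// scale is the largest infinity norm among the reference vectors.
double mixed_tolerance(double eps_abs, double eps_rel,
                       const std::vector<std::vector<double>>& refs) {
  assert(eps_abs >= 0.0 && eps_rel >= 0.0);
  double scale = 0.0;
  for (const auto& v : refs) scale = std::max(scale, inf_norm(v));
  return eps_abs + eps_rel * scale;
}

// True when the residual passes the mixed absolute/relative test.
bool converged(const std::vector<double>& residual, double eps_abs,
               double eps_rel, const std::vector<std::vector<double>>& refs) {
  return inf_norm(residual) <= mixed_tolerance(eps_abs, eps_rel, refs);
}
```

With reference vectors of order 1, `mixed_tolerance(1e-4, 1e-15, refs)` differs from `mixed_tolerance(1e-4, 0.0, refs)` by roughly 1e-15, so the two settings should accept essentially the same iterates.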

@RobustControl could you provide a tiny example to replicate your issue?

Please try the following code.

```cpp
#include <algorithm> // std::max
#include <cmath>     // std::fabs
#include <iomanip>   // std::setw, std::setprecision
#include <iostream>

#include <proxsuite/proxqp/dense/dense.hpp>
#include <proxsuite/proxqp/utils/random_qp_problems.hpp> // used for generating a random convex QP

using namespace proxsuite::proxqp;
using T = double;

int main() {
  // generate a QP problem
  // case 1
  T sparsity_factor = 0.08; // 0.08 0.07 0.15 0.15
  dense::isize dim = 10;    // 10 10 15 40
  // case 2
  // T sparsity_factor = 0.07;
  // dense::isize dim = 10;
  // case 3
  // T sparsity_factor = 0.15;
  // dense::isize dim = 15;
  // case 4
  // T sparsity_factor = 0.15;
  // dense::isize dim = 40;

  dense::isize n_eq(dim * 3);
  dense::isize n_in(dim * 3);
  T strong_convexity_factor(1.e-2);

  // Generate a random QP with the helper from the proxqp::utils namespace.
  // The model is in dense Eigen format; you can control its sparsity ratio
  // and strong convexity factor.
  dense::Model<T> qp_random = utils::dense_strongly_convex_qp(
    dim, n_eq, n_in, sparsity_factor, strong_convexity_factor);

  std::cout << "H: \n" << qp_random.H << std::endl;
  std::cout << "g \n" << qp_random.g << std::endl;
  std::cout << "A \n" << qp_random.A << std::endl;
  std::cout << "b \n" << qp_random.b << std::endl;
  std::cout << "C \n" << qp_random.C << std::endl;
  std::cout << "u \n" << qp_random.u << std::endl;
  std::cout << "l \n" << qp_random.l << std::endl;

  // load PROXQP solver with dense backend and solve the problem (eps_rel = 0)
  dense::QP<T> qp1(dim, n_eq, n_in);
  qp1.settings.max_iter = 10000;
  qp1.settings.max_iter_in = 1000;
  qp1.settings.eps_abs = 1e-5;
  qp1.settings.eps_rel = 0;
  qp1.init(qp_random.H, qp_random.g, qp_random.A, qp_random.b, qp_random.C,
           qp_random.u, qp_random.l);
  qp1.solve();
  // std::cout << "qp1 status: " << static_cast<int>(qp1.results.info.status)
  //           << std::endl;

  // same problem and settings, except eps_rel = 1e-20
  dense::QP<T> qp2(dim, n_eq, n_in);
  qp2.settings.max_iter = 10000;
  qp2.settings.max_iter_in = 1000;
  qp2.settings.eps_abs = 1e-5;
  qp2.settings.eps_rel = 1e-20;
  qp2.init(qp_random.H, qp_random.g, qp_random.A, qp_random.b, qp_random.C,
           qp_random.u, qp_random.l);
  qp2.solve();
  // std::cout << "qp2 status: " << static_cast<int>(qp2.results.info.status)
  //           << std::endl;

  // Evaluate the difference into a concrete vector instead of keeping a lazy
  // Eigen expression around.
  Eigen::Matrix<T, Eigen::Dynamic, 1> const error =
    qp1.results.x - qp2.results.x;

  // If any component differs by more than 1e-3, print the full comparison
  // table once and stop.
  for (Eigen::Index i = 0; i < error.size(); ++i) {
    if (std::fabs(error[i]) > 1e-3) {
      std::cout << std::setw(11) << "result 1" << std::setw(17) << "result 2"
                << std::setw(15) << "error" << std::setw(15) << "ratio(%)"
                << std::endl;
      for (Eigen::Index j = 0; j < error.size(); ++j) {
        // precision 6 (the default) for the solutions, 2 for error and ratio;
        // setprecision is sticky, so it is reset at the start of every row
        std::cout << std::setprecision(6) << std::setw(10) << qp1.results.x[j]
                  << ", " << std::setw(10) << qp2.results.x[j] << ", "
                  << std::setw(10) << std::setprecision(2) << error[j] << ","
                  << std::setw(10) << std::setprecision(2)
                  << std::fabs(error[j]) /
                       std::max(std::fabs(qp1.results.x[j]),
                                std::fabs(qp2.results.x[j])) *
                       100
                  << "%" << std::endl;
      }
      break;
    }
  }

  return 0;
}
```

The output is

The dense_strongly_convex_qp() function generates the same dense::Model every time this code is executed. If the results differ on your computer, please manually enter the matrices shown in the screenshot.

Alternatively, you can try different values of sparsity_factor and dim until the discrepancy between the two results is large enough (e.g. 20%).

@Bambade Could you have a look to this issue when you have time?

Hi @RobustControl, after discussing with @Bambade we were able to fix this issue. The problem came from the very large bounds on the inequality constraints (here l is filled with -1e+20). I ran your code example and added a std::cout for the error, which is now 0, so your loop does not display anything.

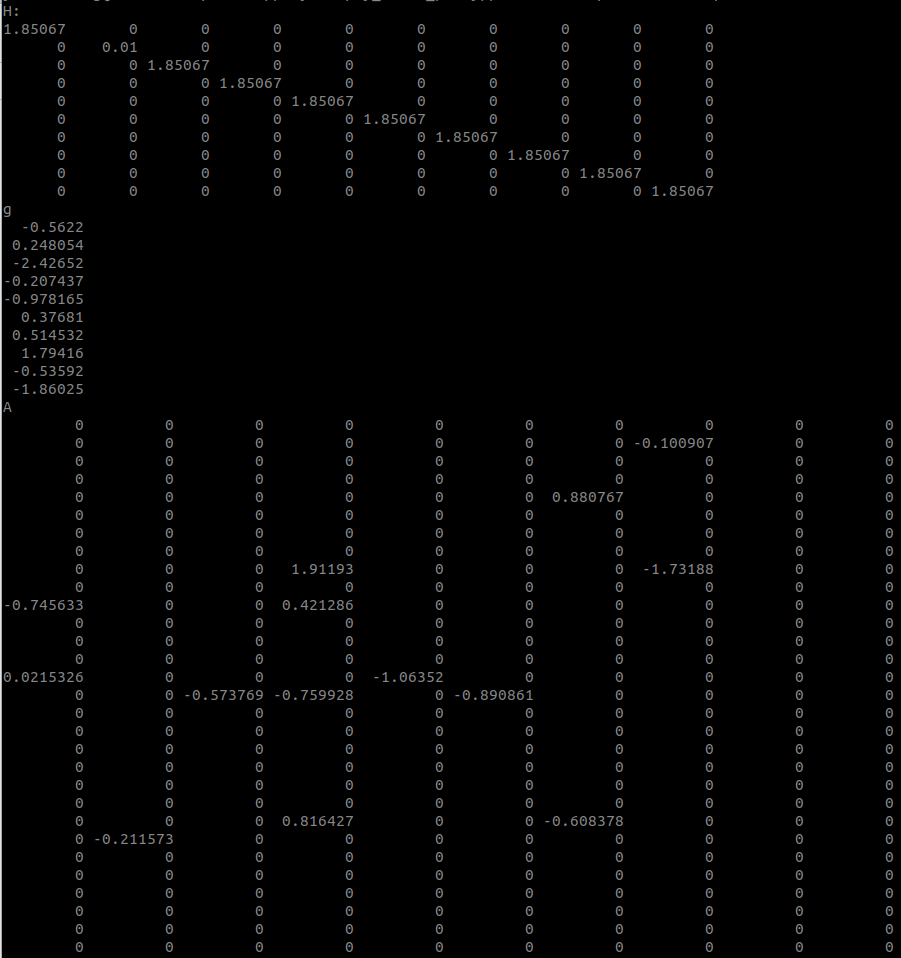

```
H:
1.85067 0 0 0 0 0 0 0 0 0
0 0.01 0 0 0 0 0 0 0 0
0 0 1.85067 0 0 0 0 0 0 0
0 0 0 1.85067 0 0 0 0 0 0
0 0 0 0 1.85067 0 0 0 0 0
0 0 0 0 0 1.85067 0 0 0 0
0 0 0 0 0 0 1.85067 0 0 0
0 0 0 0 0 0 0 1.85067 0 0
0 0 0 0 0 0 0 0 1.85067 0
0 0 0 0 0 0 0 0 0 1.85067
g
-0.5622
0.248054
-2.42652
-0.207437
-0.978165
0.37681
0.514532
1.79416
-0.53592
-1.86025
A
0 0 0 0 0 0 0 0 0 0
0 0 0 0 0 0 0 -0.100907 0 0
0 0 0 0 0 0 0 0 0 0
0 0 0 0 0 0 0 0 0 0
0 0 0 0 0 0 0.880767 0 0 0
0 0 0 0 0 0 0 0 0 0
0 0 0 0 0 0 0 0 0 0
0 0 0 0 0 0 0 0 0 0
0 0 0 1.91193 0 0 0 -1.73188 0 0
0 0 0 0 0 0 0 0 0 0
-0.745633 0 0 0.421286 0 0 0 0 0 0
0 0 0 0 0 0 0 0 0 0
0 0 0 0 0 0 0 0 0 0
0 0 0 0 0 0 0 0 0 0
0.0215326 0 0 0 -1.06352 0 0 0 0 0
0 0 -0.573769 -0.759928 0 -0.890861 0 0 0 0
0 0 0 0 0 0 0 0 0 0
0 0 0 0 0 0 0 0 0 0
0 0 0 0 0 0 0 0 0 0
0 0 0 0 0 0 0 0 0 0
0 0 0 0 0 0 0 0 0 0
0 0 0 0 0 0 0 0 0 0
0 0 0 0.816427 0 0 -0.608378 0 0 0
0 -0.211573 0 0 0 0 0 0 0 0
0 0 0 0 0 0 0 0 0 0
0 0 0 0 0 0 0 0 0 0
0 0 0 0 0 0 0 0 0 0
0 0 0 0 0 0 0 0 0 0
0 0 0 0 0 0 0 0 0 0
0 0 0 0 0 0 0 0 0 0
```

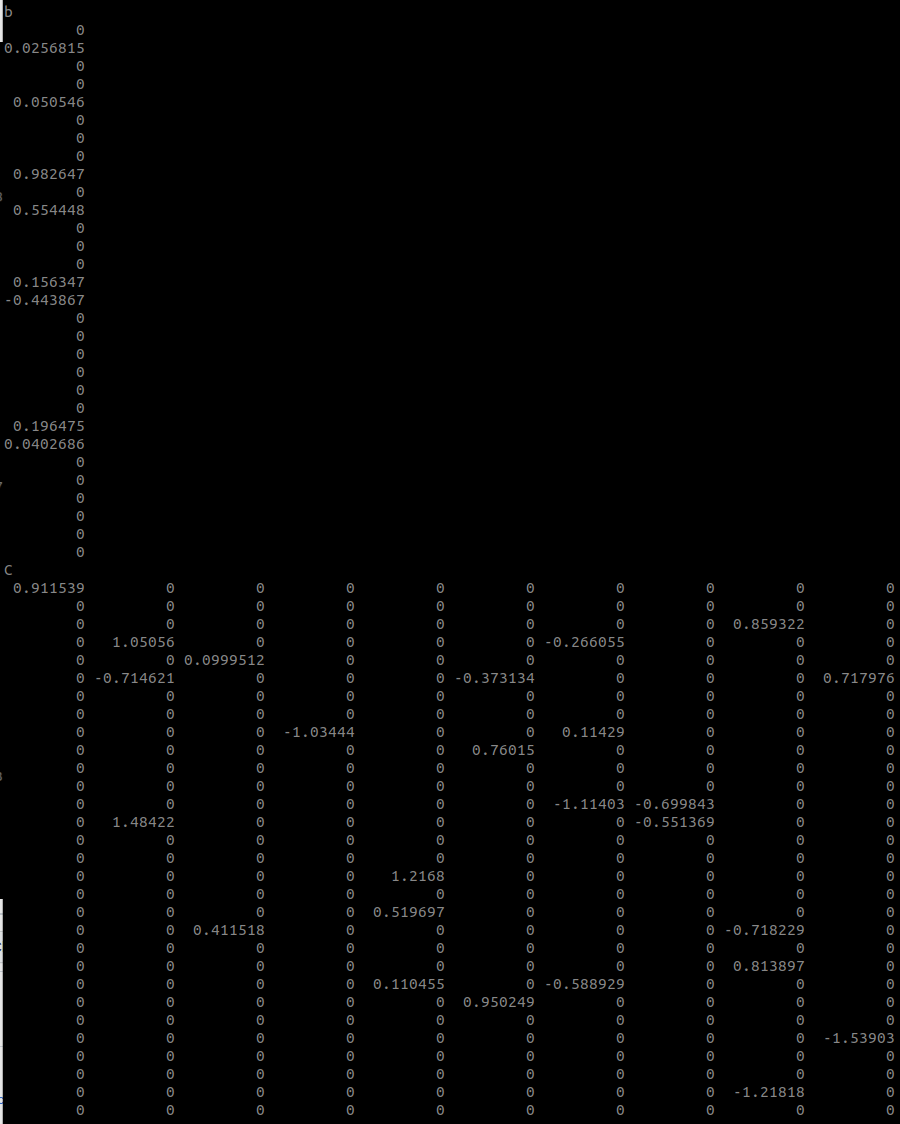

```
b
0
0.0256815
0
0
0.050546
0
0
0
0.982647
0
0.554448
0
0
0
0.156347
-0.443867
0
0
0
0
0
0
0.196475
0.0402686
0
0
0
0
0
0
C
0.911539 0 0 0 0 0 0 0 0 0
0 0 0 0 0 0 0 0 0 0
0 0 0 0 0 0 0 0 0.859322 0
0 1.05056 0 0 0 0 -0.266055 0 0 0
0 0 0.0999512 0 0 0 0 0 0 0
0 -0.714621 0 0 0 -0.373134 0 0 0 0.717976
0 0 0 0 0 0 0 0 0 0
0 0 0 0 0 0 0 0 0 0
0 0 0 -1.03444 0 0 0.11429 0 0 0
0 0 0 0 0 0.76015 0 0 0 0
0 0 0 0 0 0 0 0 0 0
0 0 0 0 0 0 0 0 0 0
0 0 0 0 0 0 -1.11403 -0.699843 0 0
0 1.48422 0 0 0 0 0 -0.551369 0 0
0 0 0 0 0 0 0 0 0 0
0 0 0 0 0 0 0 0 0 0
0 0 0 0 1.2168 0 0 0 0 0
0 0 0 0 0 0 0 0 0 0
0 0 0 0 0.519697 0 0 0 0 0
0 0 0.411518 0 0 0 0 0 -0.718229 0
0 0 0 0 0 0 0 0 0 0
0 0 0 0 0 0 0 0 0.813897 0
0 0 0 0 0.110455 0 -0.588929 0 0 0
0 0 0 0 0 0.950249 0 0 0 0
0 0 0 0 0 0 0 0 0 0
0 0 0 0 0 0 0 0 0 -1.53903
0 0 0 0 0 0 0 0 0 0
0 0 0 0 0 0 0 0 0 0
0 0 0 0 0 0 0 0 -1.21818 0
0 0 0 0 0 0 0 0 0 0
u
0.449803
0.837235
1.30134
0.707384
0.130732
-0.0901918
0.165764
0.327293
0.530621
0.694916
0.126289
0.651918
0.446538
0.00290164
0.744711
0.253963
0.772124
0.144624
0.556128
-0.480807
0.189741
0.87036
0.923525
1.42382
0.829632
0.320807
0.74111
0.822662
-0.26687
0.0533956
l
-1e+20
-1e+20
-1e+20
-1e+20
-1e+20
-1e+20
-1e+20
-1e+20
-1e+20
-1e+20
-1e+20
-1e+20
-1e+20
-1e+20
-1e+20
-1e+20
-1e+20
-1e+20
-1e+20
-1e+20
-1e+20
-1e+20
-1e+20
-1e+20
-1e+20
-1e+20
-1e+20
-1e+20
-1e+20
-1e+20
error: 0
0
0
0
0
0
0
0
0
0
```
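The explanation that the very large inequality bounds caused the discrepancy can be made concrete with a little arithmetic. Assume, for illustration only, that the relative part of the stopping test is scaled by the infinity norm of the constraint bounds (the exact formula ProxQP used before the fix, and what the fix changed, are not shown here). With l filled with -1e+20, that norm is 1e20, so eps_rel = 1e-20 adds about 1 to the tolerance and eps_rel = 1e-15 adds about 1e5, which would let the solver stop long before the residual reaches eps_abs. Skipping entries treated as infinite keeps the scale at the size of the finite bounds (max |u| is 1.42382 in this example). `kInfiniteBound`, `naive_scale`, `finite_scale` and `tolerance` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical cutoff: bounds at or beyond this magnitude are treated as
// "no bound" (the l vector above is filled with -1e+20).
constexpr double kInfiniteBound = 1e20;

// Infinity norm over all entries, including placeholder infinite bounds.
double naive_scale(const std::vector<double>& bounds) {
  double m = 0.0;
  for (double x : bounds) m = std::max(m, std::fabs(x));
  return m;
}

// Infinity norm that skips entries treated as infinite bounds.
double finite_scale(const std::vector<double>& bounds) {
  double m = 0.0;
  for (double x : bounds) {
    if (std::fabs(x) >= kInfiniteBound) continue; // placeholder for "unbounded"
    m = std::max(m, std::fabs(x));
  }
  return m;
}

// Mixed tolerance eps_abs + eps_rel * scale.
double tolerance(double eps_abs, double eps_rel, double scale) {
  assert(eps_abs >= 0.0 && eps_rel >= 0.0);
  return eps_abs + eps_rel * scale;
}
```

For bounds such as {0.449803, 1.42382, -0.26687, -1e20, -1e20}, `tolerance(1e-5, 1e-20, naive_scale(bounds))` is about 1.00001, while `tolerance(1e-5, 1e-20, finite_scale(bounds))` stays at about 1e-5, matching the eps_rel = 0 case.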

Good news. This issue has been fixed by @fabinsch in https://github.com/Simple-Robotics/proxsuite/pull/58.