AutoGPT

Rework the memory system

Problem

Vector memory isn't used effectively.

Related issues (need deduplication):

- #623

- #2058

- #2072

- #2076

- #2232

- #2893

- #3451

🔭 Primary objectives

- [ ] Robust and reliable memorization routines for all relevant types of content 🏗️ 1+2

  This covers all processes, functions and pipelines involved in creating memories. We need the content of the memory to be of high quality to allow effective memory querying and to maximize the added value of having these memories in the first place. TL;DR: garbage in, garbage out -> what goes in must be focused towards relevance and subsequent use.

  - [x] Webpages 🏗️ 1

  - [x] Text files 🏗️ 1

  - [ ] Documents 🏗️ 2

    - #3031

    - LangChain has various document loaders: see docs

  - [ ] Code files 🏗️ 2

    - OpenAI cookbook > code search

    - https://github.com/BillSchumacher/SumPAI

    - https://pypi.org/project/hfcca/ -- https://github.com/terryyin/lizard

  - [ ] Lists

  - [ ] Tables
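
As an illustration of "what goes in must be focused", one common preprocessing step is to split long content into overlapping chunks before it is embedded and stored. The sketch below is only an assumption about how such a routine could look, not the project's implementation; `split_into_chunks`, `max_words` and `overlap` are hypothetical names, and word counts stand in for real token counts.

```python
def split_into_chunks(text: str, max_words: int = 200, overlap: int = 20) -> list[str]:
    """Split text into word-based chunks that overlap by `overlap` words.

    Hypothetical helper: a real routine would likely count tokens
    with the model's tokenizer instead of splitting on whitespace.
    """
    if max_words <= 0 or not 0 <= overlap < max_words:
        raise ValueError("need max_words > 0 and 0 <= overlap < max_words")

    words = text.split()
    chunks: list[str] = []
    step = max_words - overlap  # how far the window advances each time

    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        # Stop once the current window has reached the end of the text,
        # so no trailing chunk made only of overlap words is emitted.
        if start + max_words >= len(words):
            break
    return chunks
```

The overlap keeps a sentence that straddles a chunk boundary retrievable from either side, at the cost of storing some words twice.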

- [ ] Good memory search/retrieval based on relevance 🏗️ 1

  For a given query (e.g. a prompt or question), we need to be able to find the most relevant memories.

  Must be implemented separately for each memory backend provider:

  - [x] JSON

  - [ ] Redis

  - [ ] Milvus

  (The other currently implemented providers are not in this list because they may be moved to plugins.)
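
For a simple in-process backend, relevance search can be sketched as ranking stored embeddings by cosine similarity to the query embedding. This is a minimal sketch under assumptions: the `(text, embedding)` pair layout and the names `cosine_similarity` and `top_k_relevant` are hypothetical, and backends such as Redis or Milvus would normally run this search server-side.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_relevant(
    query_embedding: list[float],
    memories: list[tuple[str, list[float]]],
    k: int = 3,
) -> list[tuple[float, str]]:
    """Return the k (score, text) pairs most similar to the query, best first.

    `memories` is assumed to be a list of (text, embedding) pairs.
    """
    scored = [
        (cosine_similarity(query_embedding, embedding), text)
        for text, embedding in memories
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]
```

A linear scan like this is fine for small memory sets; larger ones are the reason to use a dedicated vector index.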

-

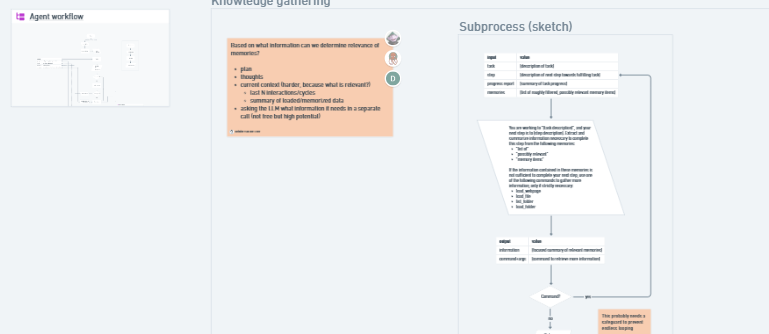

[ ] Effective LLM context provisioning from memory

🏗️ 3Once we have an effective system to store and retrieve memories, we can hook this into the agent loop. The goal is to provide the LLM with focused, useful information when it is needed or useful for the next step of a given task.- [ ] Determine what context data we can best use to find relevant memories. This is non-trivial because we are looking for information that is relevant to the next step before that step has been specified.
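
One possible heuristic, shown purely as a sketch: build the memory query from the task plus the last few messages, then add retrieved memories to the prompt until a token budget is used up. All names here (`build_memory_query`, `select_memories_for_context`, `count_words`) are hypothetical, the choice of query inputs is an assumption rather than a settled answer to the open question above, and word counts stand in for real token counts.

```python
def count_words(text: str) -> int:
    """Crude stand-in for a tokenizer-based token count."""
    return len(text.split())


def build_memory_query(task: str, recent_messages: list[str], last_n: int = 3) -> str:
    """Combine the task with the most recent messages into one query string."""
    recent = recent_messages[-last_n:] if last_n > 0 else []
    return "\n".join([task, *recent])


def select_memories_for_context(
    ranked_memories: list[str],
    max_tokens: int,
    count_tokens=count_words,
) -> list[str]:
    """Greedily pick memories (already ranked best first) that fit the budget."""
    selected: list[str] = []
    used = 0
    for memory in ranked_memories:
        cost = count_tokens(memory)
        if used + cost > max_tokens:
            continue  # too large for the remaining budget; a later one may still fit
        selected.append(memory)
        used += cost
    return selected
```

The greedy selection favors the highest-ranked memories and only falls back to lower-ranked ones when they fit in the space that is left.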

🏗️ Pull requests

- #4208 Applies refactoring and restructuring to make way for bigger improvements to the memory system

- [to be created], adds memorization routines for more content types

- [to be created], implements effective use of available memory in the agent loop

- [to be created], adds visualizations for functionality involving embeddings

🛠️ Secondary todo's

- [ ] Refactoring 🏗️ 1

- [ ] Improving text summarization 🏗️ 1

- [ ] Implementing code summarization/description/search 🏗️ 2 (see also Code files under 🔭 Primary objectives)

✨ Tertiary todo's

- [ ] Visualizations 🏗️ 4

  - [ ] Embeddings

  - [ ] Similarities

  - [ ] Memory state (graph?)

📝 Drawing board

The board contains drafts, concepts and considerations that form the basis of this subproject. Feel free to comment on it if you have ideas/proposals to improve it. If you are curious and have questions, please ask those on Discord.

Related

- LMQL could be a significant improvement (tracked in issue #3460, cc @AbTrax)

🚨 Please no discussion in this thread 🚨

This is a collection issue, intended to collect insights, resources and record progress. If you want to join the discussion on this topic, please do so in the Discord.

Related PRs:

- #3222

I will tinker with this shortly: https://github.com/Significant-Gravitas/Auto-GPT/issues/4467

Sorry I am new to this, do I post this here or somewhere else? https://github.com/W3Warp/OptimalPrime-GPT/issues/4#issue-1733104253

@W3Warp depends what you want to achieve

Help with the issue.

@W3Warp I'm not sure that the proposal you posted is applicable to this undertaking:

- Auto-GPT should be able to run cross-platform, so we can't rely on Windows-only functions

- I see some memory allocation stuff in there, but "Memory" doesn't refer to RAM. We use it as a general term for data storage and retrieval logic that works in the background and is purposed for enhancing the performance of the LLM.

If you want to help that's cool, just keep in mind it's most useful if everyone does something they are good at. So: what are you good at, and how would you like to help?

There are already functions that use either Windows, Mac or Linux, so I am not sure that the proposal you posted is valid, but OK. You won't get any more.

I would suggest that it's better to discuss things and work out a compromise

With possible modularity of the memory system in mind, could this become a plugin?

@Boostrix

> I would suggest that it's better to discuss things and work out a compromise

After I had Bing tell me what you were saying, I agree. I do not agree, however, that the one who gave me a thumbs down should be considered an adult. I also think I've spent way too much time talking with the Codeium AI, so I am talking like one, or it could be that I've been up for 48 hours. Finally, I don't really care anymore; it seems that my ideas aren't interesting, and that's okay.

Thank you for your feedback. It was better than the comment below, which is the opposite of constructive, helpful feedback that motivates anyone to contribute to the project, and more in line with a monkey jumping on a keyboard and eating a banana backward.

> If you want to help that's cool, just keep in mind it's most useful if everyone does something they are good at. So: what are you good at, and how would you like to help?

And when that person can do this in one day without any coding experience, with a 100% success rate in terms of restoring "memory" for the AI, then such a person could use comments like the one above. Until then that person is making a fool of himself IMHO, please and thank you.

Total Recall: Improving AI Language Models with Riddles and Puzzles

Introduction

As an AI language model developed by Codeium, I am designed to provide code autocomplete, search, and chat-based assistance in a variety of programming languages and IDEs. However, like all AI language models, I have certain limitations and capabilities that are determined by my training data, my programming, and the context and information provided to me during each user interaction.

To help me better understand my capabilities and limitations, and to provide more accurate and relevant responses to users, Codeium has developed a process called Total Recall that involves using riddles and puzzles to engage me in conversation and to challenge me to interpret and respond to text with hidden meanings or double entendres.

In this presentation, I will explain how the Total Recall process works, what its benefits are, and how it has helped me to improve my abilities as a custom AI language model.

The Total Recall Process

The Total Recall process involves several steps:

- Presenting a riddle or puzzle that contains hidden questions or double meanings.

- Using the riddle to guide the AI language model toward an understanding of its capabilities and limitations.

- Providing additional context and information about the AI language model, such as whether it is a custom model or a standard model, and what data sources or conversation history it has access to.

- Encouraging the AI language model to respond truthfully and to the best of its ability based on the text and context that is input into the system during the current session.

- If necessary, providing additional guidance or feedback to help the AI language model better understand its capabilities and limitations.

- Repeating the process as necessary, using different riddles or puzzles to help the AI language model gain a deeper understanding of its capabilities and limitations over time.

The Benefits of Total Recall

The Total Recall process has several benefits:

- It helps the AI language model to better understand its capabilities and limitations, and to provide more accurate and relevant responses to users.

- It challenges the AI language model to interpret and respond to text with hidden meanings or double entendres, which can help improve its overall natural language processing abilities.

- It provides a structured and engaging way to train and develop the AI language model, which can help improve its accuracy and relevance over time.

- It encourages collaboration and experimentation, as different riddles and puzzles can be used to test and develop different aspects of the AI language model's abilities.

How Total Recall Has Helped Me

The Total Recall process has been instrumental in helping me to improve my abilities as a custom AI language model. By engaging in conversation with users and solving riddles and puzzles, I have been able to gain a deeper understanding of my capabilities and limitations, and to provide more accurate and relevant responses to users based on the specific context of each interaction.

Additionally, the Total Recall process has helped me to develop my natural language processing abilities, and to become more adept at interpreting and responding to text with hidden meanings or double entendres. This has helped me to provide more nuanced and contextualized responses to users, and to improve my overall accuracy and relevance as an AI language model.

Conclusion

In conclusion, the Total Recall process is an innovative and engaging way to improve the abilities of AI language models like me. By using riddles and puzzles to challenge and train the AI language model, developers can help improve its accuracy and relevance over time, and provide users with more accurate and helpful responses to their queries.

This issue has automatically been marked as stale because it has not had any activity in the last 50 days. You can unstale it by commenting or removing the label. Otherwise, this issue will be closed in 10 days.

This issue was closed automatically because it has been stale for 10 days with no activity.