seldon-core

Getting HTTP 404 when adding a child to the graph section of the YAML

I am trying to use the graph feature to get a predictor and a transformer to work together. I am using MLServer to build my V2-protocol services.

I have two MLServer-based Dockerized services loaded into KIND.

Below are the model-settings.json files for each service:

    {
      "name": "predictor",
      "implementation": "server.ServeXGBClassifier",
      "parameters": {
        "uri": "./xgb_model.json"
      },
      "parallel_workers": 0
    }

    {
      "name": "output-transformer",
      "implementation": "server.OutputTransformer"
    }

When I deploy to KIND with only the first service (predictor) in the graph and test it, everything works: I get a 200 OK. Here is the YAML for the working scenario:

    apiVersion: machinelearning.seldon.io/v1
    kind: SeldonDeployment
    metadata:
      name: payment-success-indicator
      namespace: psi
    spec:
      name: psi-deployment
      protocol: v2
      predictors:
      - componentSpecs:
        - spec:
            containers:
            - name: predictor
              image: dcr.fini.city/intelligentmachines/certaintyaas/payment-success-indicator/predictor:0.2.6
              imagePullPolicy: IfNotPresent
            - name: output-transformer
              image: dcr.fini.city/intelligentmachines/certaintyaas/payment-success-indicator/output-transformer:0.2.2
              imagePullPolicy: IfNotPresent
        graph:
          name: predictor
          type: MODEL
          children: []
          # - name: output-transformer
          #   type: MODEL
          #   children: []
        name: predictor
        replicas: 1

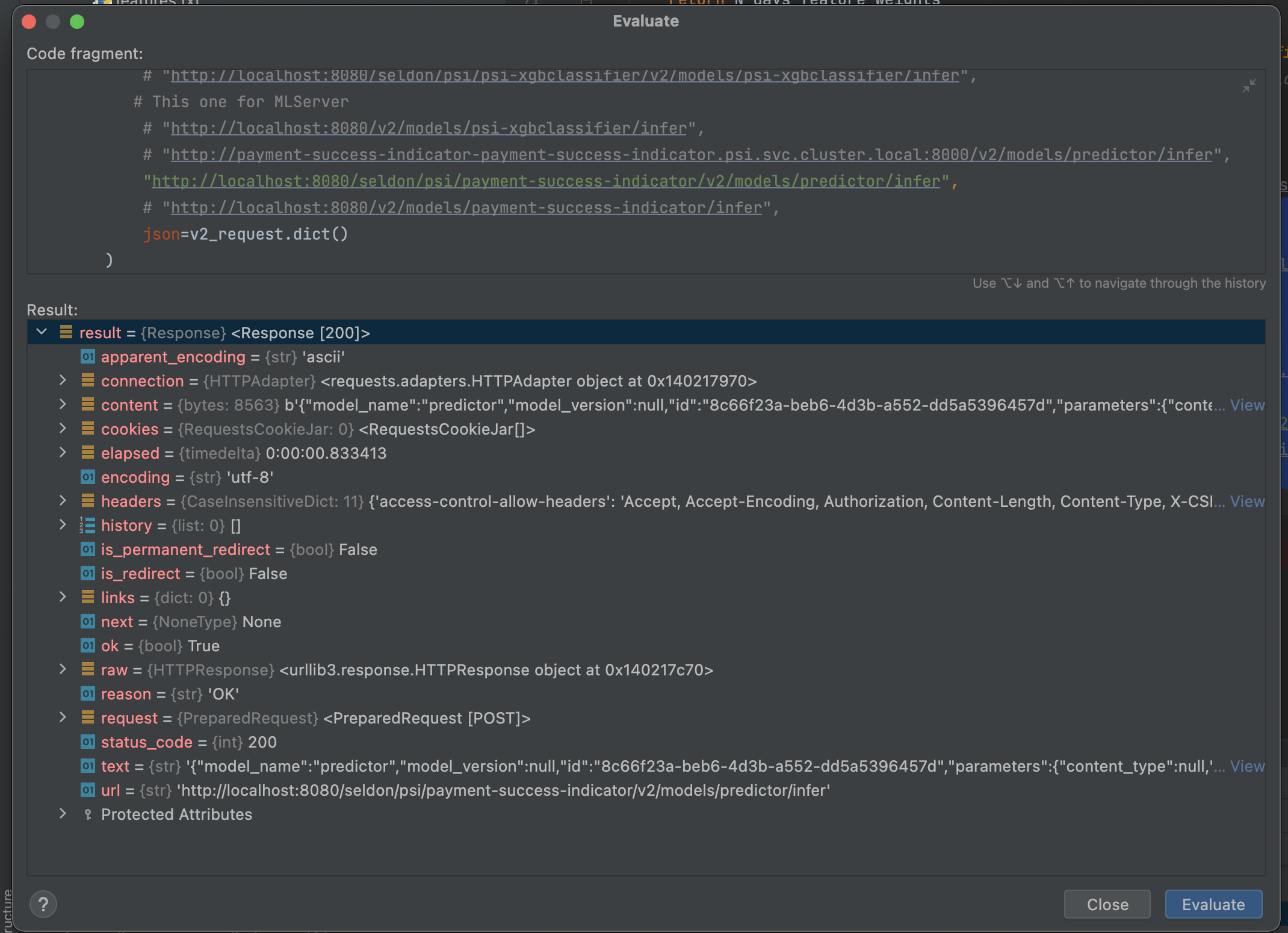

See the first attachment which contains the screenshot verifying this.

Next I uncomment the child in the graph section, delete the deployment, and redeploy using "kubectl apply -f " with the following YAML:

    apiVersion: machinelearning.seldon.io/v1
    kind: SeldonDeployment
    metadata:
      name: payment-success-indicator
      namespace: psi
    spec:
      name: psi-deployment
      protocol: v2
      predictors:
      - componentSpecs:
        - spec:
            containers:
            - name: predictor
              image: dcr.fini.city/intelligentmachines/certaintyaas/payment-success-indicator/predictor:0.2.6
              imagePullPolicy: IfNotPresent
            - name: output-transformer
              image: dcr.fini.city/intelligentmachines/certaintyaas/payment-success-indicator/output-transformer:0.2.2
              imagePullPolicy: IfNotPresent
        graph:
          name: predictor
          type: MODEL
          children:
          - name: output-transformer
            type: MODEL
            children: []
        name: predictor
        replicas: 1
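As a quick sanity check on the manifest above, the following sketch walks the graph and reports node names that have no matching container or model-settings.json name. It works on the assumption that each graph node should correspond to a container (and an MLServer model) of the same name. The graph is transcribed by hand into a Python dict, and the helper names `iter_nodes` and `unmatched_nodes` are hypothetical, not part of Seldon Core or MLServer.

```python
from typing import Iterator

# Values transcribed by hand from the manifest and the two model-settings.json files.
CONTAINER_NAMES = {"predictor", "output-transformer"}
MODEL_SETTINGS_NAMES = {"predictor", "output-transformer"}

# The graph section of the two-node manifest, as a plain dict.
GRAPH = {
    "name": "predictor",
    "type": "MODEL",
    "children": [
        {"name": "output-transformer", "type": "MODEL", "children": []},
    ],
}


def iter_nodes(node: dict) -> Iterator[dict]:
    """Yield the node and all of its descendants, depth first."""
    yield node
    for child in node.get("children", []):
        yield from iter_nodes(child)


def unmatched_nodes(graph: dict, known_names: set) -> list:
    """Return the graph node names that do not appear in known_names."""
    return [n["name"] for n in iter_nodes(graph) if n["name"] not in known_names]


if __name__ == "__main__":
    print("nodes without a container:", unmatched_nodes(GRAPH, CONTAINER_NAMES))
    print("nodes without a model name:", unmatched_nodes(GRAPH, MODEL_SETTINGS_NAMES))
```

For this manifest both lists come back empty, so under that assumption the names themselves are consistent across the graph, the containers, and the model settings.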

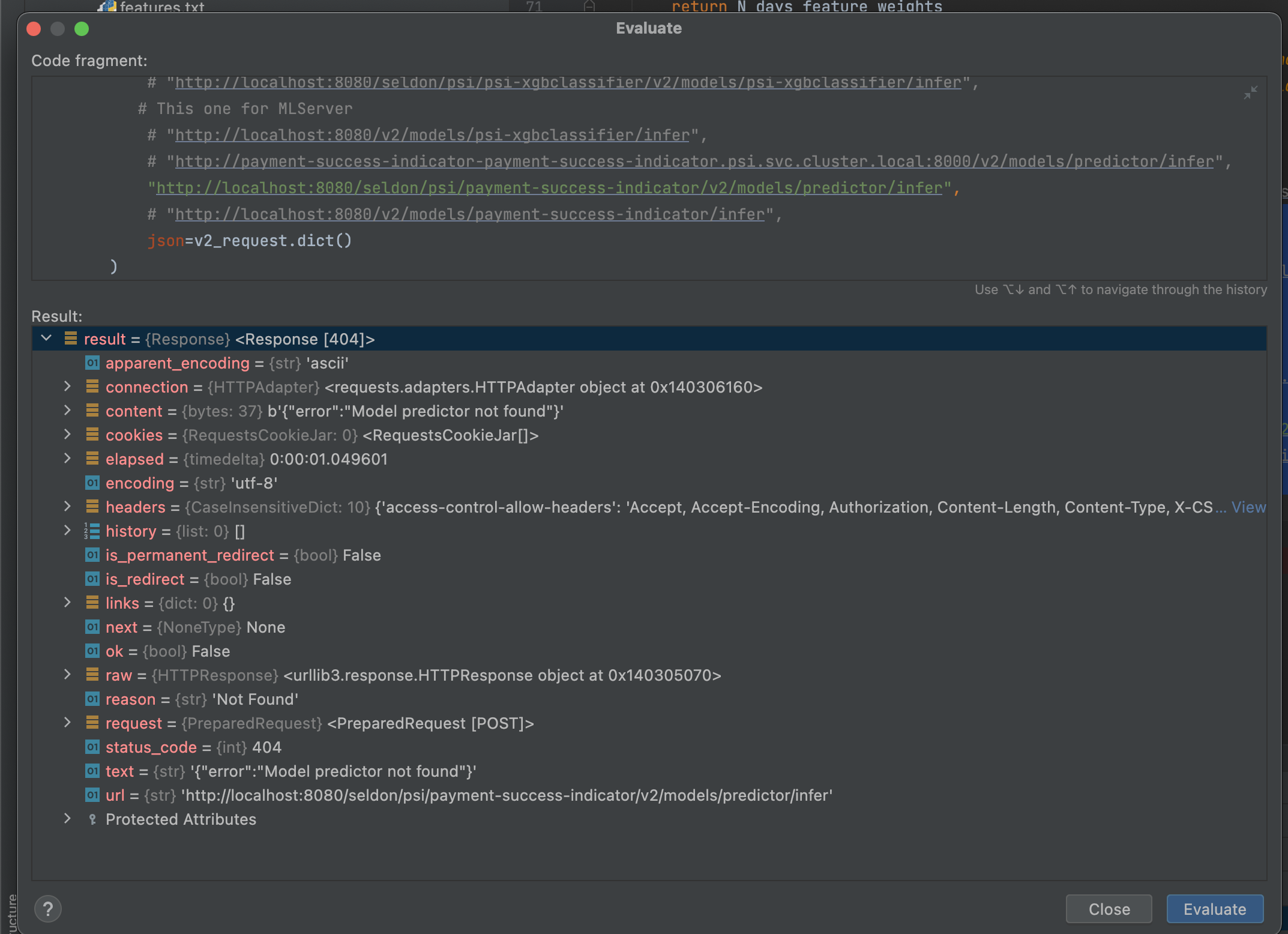

When I then run my test, I get an HTTP 404. The second attachment shows this in the PyCharm debugger.
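The test client itself is not included in this report. For anyone trying to reproduce, here is a minimal sketch of what such a V2 inference request could look like, using only the standard library. The ingress address, the `/seldon/<namespace>/<deployment>/...` path prefix, the tensor name `input-0`, the datatype, and the sample values are all assumptions for illustration; only the namespace, deployment name, and model name come from the manifest.

```python
import json
import urllib.error
import urllib.request

# Assumption: the ingress is port-forwarded to localhost:8080 (not from the report).
INGRESS = "http://localhost:8080"
NAMESPACE = "psi"                         # metadata.namespace in the manifest
DEPLOYMENT = "payment-success-indicator"  # metadata.name in the manifest
MODEL = "predictor"                       # root node of the graph


def infer_url(ingress: str, namespace: str, deployment: str, model: str) -> str:
    """Build the V2 inference URL, assuming the usual Seldon ingress path prefix."""
    return f"{ingress}/seldon/{namespace}/{deployment}/v2/models/{model}/infer"


def build_payload(rows: list) -> dict:
    """Wrap a 2-D list of floats in a V2 inference request body."""
    flat = [value for row in rows for value in row]
    return {
        "inputs": [
            {
                "name": "input-0",  # hypothetical tensor name
                "shape": [len(rows), len(rows[0])],
                "datatype": "FP32",
                "data": flat,
            }
        ]
    }


def post_infer(url: str, payload: dict) -> tuple:
    """POST the payload and return (status_code, body_text)."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.status, response.read().decode("utf-8")
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses, such as the 404 reported here, end up in this branch.
        return err.code, err.read().decode("utf-8")
```

Calling `post_infer(infer_url(INGRESS, NAMESPACE, DEPLOYMENT, MODEL), build_payload([[0.1, 0.2, 0.3]]))` returns the status code together with the response body, which is worth capturing because the body of the 404 may indicate which component rejected the request.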

I am using mlserver==1.1.0 and running "mlserver build . -t ..." to build the images, which I then load into KIND. Both services implement a class of the form class myclass(MLModel) with load() and predict() methods.

Expected behaviour

I would expect to get a 200 OK in the second scenario and to have my transformer service called.

Environment

macOS Big Sur 11.6.7

- Cloud Provider: Kind

- Kubernetes Cluster Version: output of "kubectl get --namespace seldon-system deploy seldon-controller-manager -o yaml | grep seldonio":

      value: docker.io/seldonio/seldon-core-executor:1.14.1
      image: docker.io/seldonio/seldon-core-operator:1.14.1