seiscomp3


fdsnws / fastsds: 'invalid pointers' error when accessing miniSEED with empty records

There is a strange (possibly wrong) behaviour of fdsnws when using the 'fastsds' recordstream (2017.124 + EIDA service pack). It happens when the miniSEED file contains empty records (the result of an archiving error).

As an example, we have this file:

```
$ msi -T NL.HGN.02.BHZ.D.2015.299
Source            Start sample             End sample               Hz  Samples
NL_HGN_02_BHZ     2015,299,00:00:06.694538 2015,300,00:00:04.094538 40  3455897
NL_HGN_02_BHZ     2015,299,09:17:46.319500 2015,299,09:17:46.319500 40  0
NL_HGN_02_BHZ     2015,299,09:18:11.644500 2015,299,09:18:11.644500 40  0
NL_HGN_02_BHZ     2015,299,09:18:37.919500 2015,299,09:18:37.919500 40  0
NL_HGN_02_BHZ     2015,299,09:19:03.919500 2015,299,09:19:03.919500 40  0
NL_HGN_02_BHZ     2015,299,09:19:30.169500 2015,299,09:19:30.169500 40  0
NL_HGN_02_BHZ     2015,299,09:19:55.769500 2015,299,09:19:55.769500 40  0
NL_HGN_02_BHZ     2015,299,09:20:21.619500 2015,299,09:20:21.619500 40  0
NL_HGN_02_BHZ     2015,299,09:20:47.519500 2015,299,09:20:47.519500 40  0
NL_HGN_02_BHZ     2015,299,09:21:14.344500 2015,299,09:21:14.344500 40  0
NL_HGN_02_BHZ     2015,299,09:21:40.394500 2015,299,09:21:40.394500 40  0
NL_HGN_02_BHZ     2015,299,09:22:05.694500 2015,299,09:22:05.694500 40  0
NL_HGN_02_BHZ     2015,299,09:22:32.894500 2015,299,09:22:32.894500 40  0
NL_HGN_02_BHZ     2015,299,09:23:04.744500 2015,299,09:23:04.744500 40  0
NL_HGN_02_BHZ     2015,299,09:23:40.219500 2015,299,09:23:40.219500 40  0
NL_HGN_02_BHZ     2015,299,09:24:18.919500 2015,299,09:24:18.919500 40  0
NL_HGN_02_BHZ     2015,299,13:04:32.344500 2015,299,13:04:32.344500 40  0
NL_HGN_02_BHZ     2015,299,13:30:38.094500 2015,299,13:30:38.094500 40  0
NL_HGN_02_BHZ     2015,299,22:42:01.844500 2015,299,22:42:01.844500 40  0
Total: 1 trace(s) with 19 segment(s)
```
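To spot such zero-sample records without `msi`, a minimal Python sketch can read only the fixed data header of each record. It assumes fixed-length 512-byte records with big-endian headers (the common case) and does not parse blockette 1000, so for real files the record length must come from `msi` or the blockette. `scan_records` and `empty_records` are hypothetical helpers; the fraction is printed in the header's 0.0001 s units, so it has four digits rather than the six `msi` shows.

```python
import struct
from typing import List, Tuple

# Fixed-header fields read here (byte offsets from the record start):
#   20-29  BTIME start: year, day-of-year, hour, minute, second, unused byte, 0.0001 s
#   30-31  number of samples
# Assumption: big-endian headers and a known, fixed record length.
_BTIME_AND_NSAMP = ">HHBBBxHH"


def scan_records(data: bytes, reclen: int = 512) -> List[Tuple[int, str, int]]:
    """Return (byte offset, start time, sample count) for every full record."""
    out = []
    for off in range(0, len(data) - reclen + 1, reclen):
        year, doy, hh, mm, ss, frac, nsamp = struct.unpack_from(
            _BTIME_AND_NSAMP, data, off + 20)
        start = f"{year},{doy:03d},{hh:02d}:{mm:02d}:{ss:02d}.{frac:04d}"
        out.append((off, start, nsamp))
    return out


def empty_records(data: bytes, reclen: int = 512) -> List[Tuple[int, str, int]]:
    """Keep only the records whose header reports zero samples."""
    return [rec for rec in scan_records(data, reclen) if rec[2] == 0]
```

Usage would be along the lines of `empty_records(open(path, "rb").read())` on the SDS day file.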

If I query fdsnws-dataselect with a start/end time window that contains some of these empty records, for example:

`/fdsnws/dataselect/1/query?net=NL&sta=HGN&loc=02&cha=BHZ&start=2015-10-26T09:15:46.0000Z&end=2015-10-26T09:22:46.0000Z`

then the request returns HTTP 204 No Content, even though the first segment in the listing above covers the whole window.
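To reproduce the request from a script, the query URL can be built with Python's standard library. The base URL below is a placeholder (an assumption, not the actual ORFEUS endpoint) and `dataselect_url` is a hypothetical helper. An HTTP 204 response means the service found no data for the request.

```python
from urllib.parse import urlencode

# Placeholder endpoint (assumption): point this at your own fdsnws instance.
BASE_URL = "http://localhost:8080/fdsnws/dataselect/1/query"


def dataselect_url(net: str, sta: str, loc: str, cha: str,
                   start: str, end: str, base: str = BASE_URL) -> str:
    """Build an fdsnws-dataselect query URL (hypothetical helper)."""
    params = {"net": net, "sta": sta, "loc": loc, "cha": cha,
              "start": start, "end": end}
    # safe=":" leaves the colons in the timestamps unescaped, matching the URL
    # in this report; a compliant server decodes the %3A form equally well.
    return base + "?" + urlencode(params, safe=":")
```

The resulting URL can be passed to `curl`, as in the log below.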

See the fdsnws log:

```
2018/06/25 09:35:55 [debug/log] request (127.0.0.1): /fdsnws/dataselect/1/query?net=NL&sta=HGN&loc=02&cha=BHZ&start=2015-10-26T09:15:46.0000Z&end=2015-10-26T09:22:46.0000Z
2018/06/25 09:35:55 [debug/log] adding stream: NL.HGN.02.BHZ 2015-10-26T09:15:46.0000Z - 2015-10-26T09:22:46.0000Z
2018/06/25 09:35:55 [error/log] /data/orfeus/orfeus/SDS/2015/NL/HGN/BHZ.D/NL.HGN.02.BHZ.D.2015.299: invalid pointers
2018/06/25 09:35:55 [info/log] [reactor] 127.0.0.1 - - [25/Jun/2018:09:35:55 +0000] "GET /fdsnws/dataselect/1/query?net=NL&sta=HGN&loc=02&cha=BHZ&start=2015-10-26T09:15:46.0000Z&end=2015-10-26T09:22:46.0000Z HTTP/1.1" 204 - "-" "curl/7.53.1"
```
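The file named in the error follows the SDS layout `<root>/<YEAR>/<NET>/<STA>/<CHA>.<TYPE>/<NET>.<STA>.<LOC>.<CHA>.<TYPE>.<YEAR>.<DOY>`; 2015-10-26 is day 299. A minimal sketch of mapping a request window to day files (hypothetical helper `sds_paths`, not SeisComP code) reproduces that path. A real reader may also need the neighbouring day file, since records can straddle midnight (the first segment above ends at 2015,300,00:00:04).

```python
from datetime import datetime, timedelta
from typing import List


def sds_paths(root: str, net: str, sta: str, loc: str, cha: str,
              start: datetime, end: datetime, dtype: str = "D") -> List[str]:
    """List the SDS day files covering the calendar days of [start, end]."""
    paths = []
    day = start.date()
    while day <= end.date():
        doy = day.timetuple().tm_yday  # day of year, 1-based
        name = f"{net}.{sta}.{loc}.{cha}.{dtype}.{day.year}.{doy:03d}"
        paths.append(f"{root}/{day.year}/{net}/{sta}/{cha}.{dtype}/{name}")
        day += timedelta(days=1)
    return paths
```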

However, this does not happen with the 'sdsarchive' recordstream. In that case, fdsnws returns a miniSEED file with the correct data, including the empty records.
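A zero-sample record has identical start and end times, so a time-window filter has to treat it as a point. The sketch below is a hypothetical illustration, not fastsds or sdsarchive code: it uses a closed-interval overlap test so such records inside the window are kept (as in the sdsarchive output), with an assumed `skip_empty` option to drop them instead.

```python
from datetime import datetime
from typing import List, NamedTuple


class Rec(NamedTuple):
    start: datetime
    end: datetime  # time of the last sample; equals start when nsamples == 0
    nsamples: int


def select(records: List[Rec], win_start: datetime, win_end: datetime,
           skip_empty: bool = False) -> List[Rec]:
    """Return records overlapping [win_start, win_end] (hypothetical helper)."""
    out = []
    for r in records:
        if skip_empty and r.nsamples == 0:
            continue
        # Closed-interval test: a point record (start == end) inside the window matches.
        if r.start <= win_end and r.end >= win_start:
            out.append(r)
    return out
```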

I'm not sure whether this is a bug or intended behaviour, since the original miniSEED file is "not clean".

I leave the comment about the fastsds issue to @andres-h.

Just out of interest: what speed increase do you get with fastsds compared to the sdsarchive path with default configuration or an increased recordBulkSize?

Thanks @gempa-jabe !

> Just out of interest: what speed increase do you get with fastsds compared to the sdsarchive path with default configuration or an increased recordBulkSize?

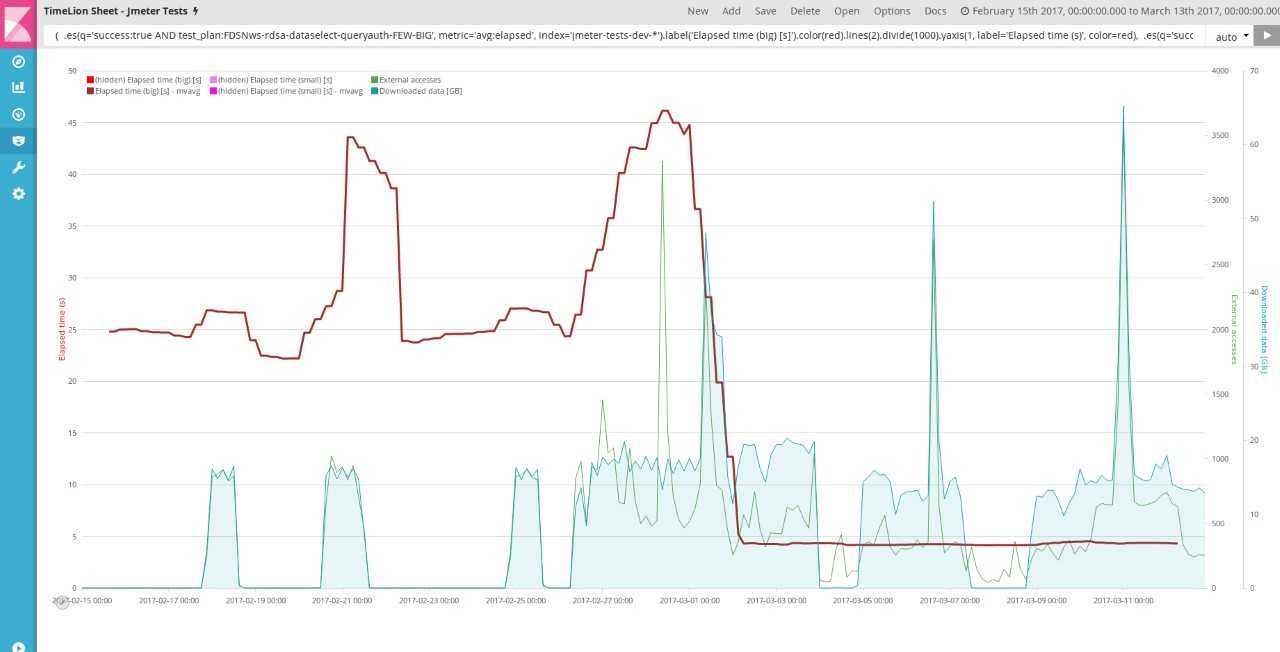

I have not tested it since recordBulkSize was introduced. In the last test I did (March 2017, 2016.333 vs fastsds), the improvement was substantial (see picture), especially for big files.

I will compare them again. What would be the most optimal recordBulkSize value?
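For such a comparison, a small timing harness can report the median wall-clock time per setting. Since recordBulkSize is a server-side setting, `run` is a placeholder you would supply (an assumption), for example a callable that reconfigures fdsnws with the given value and then issues the same dataselect query; `benchmark` is a hypothetical helper.

```python
import statistics
import time
from typing import Callable, Dict, Iterable


def benchmark(run: Callable[[int], None], bulk_sizes: Iterable[int],
              repeats: int = 5) -> Dict[int, float]:
    """Median wall-clock seconds of run(bulk_size) for each bulk size."""
    results = {}
    for size in bulk_sizes:
        timings = []
        for _ in range(repeats):
            t0 = time.perf_counter()
            run(size)
            timings.append(time.perf_counter() - t0)
        results[size] = statistics.median(timings)  # median damps outliers
    return results
```

The first request may be slower because it warms the OS page cache, so a discarded warm-up run before measuring keeps the settings comparable.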

> I will compare them again. What would be the most optimal recordBulkSize value?

That is a good question, and the answer may depend on the setup. The default is 102400 bytes, which should give a pretty good balance between IO and Python overhead. If you could test it again, that would be great. Thank you.
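The trade-off can be illustrated with a sketch of bulk reading: each `read()` call fetches many records at once, so per-call Python overhead is paid less often, at the cost of holding one chunk in memory. With 512-byte records, 102400 bytes is 200 records per read (25 records of 4096 bytes). This is not SeisComP's implementation; `iter_records` is hypothetical and assumes fixed-length records and that `read()` returns full chunks except at end of file, as with regular files.

```python
import io
from typing import BinaryIO, Iterator


def iter_records(stream: BinaryIO, record_length: int = 512,
                 bulk_size: int = 102400) -> Iterator[bytes]:
    """Yield fixed-length records, reading about bulk_size bytes per read() call."""
    # Round the chunk down to whole records (102400 / 512 = 200 records per read).
    chunk = max(record_length, bulk_size - bulk_size % record_length)
    while True:
        buf = stream.read(chunk)
        if not buf:
            break
        # A trailing partial record (shorter than record_length) is dropped.
        for off in range(0, len(buf) - record_length + 1, record_length):
            yield buf[off:off + record_length]
```

For 450 records of 512 bytes this takes 4 `read()` calls (200 + 200 + 50 records, then end of file), whereas a bulk size of one record would take 451.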