SWIFT

Planetary impact simulation super slow with large dead time

Dear SWIFT team, I'm running into a serious slowdown when using swift_mpi to run a planetary impact simulation on our HPC. The problem only appears with particular numbers of nodes and CPUs (in practice, it has happened whenever I used 2 or 4 full nodes). Here are the configurations I've tested:

- HPC1: 10^5 particles, 2 nodes / 56 CPUs: failed
- HPC1: 10^5 particles, 2 nodes / 16 CPUs: good
- HPC1: 10^5 particles, 1 node / 28 CPUs: good
- HPC2: 10^5 particles, 2 nodes / 48 CPUs: good
- HPC2: 10^6 particles, 4 nodes / 96 CPUs: failed
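
The runs listed above can be tabulated with a short Python sketch to compare the average load per core. This is only an illustration: `runs` and `particles_per_cpu` are hypothetical names, and an average per-CPU particle count ignores how SWIFT actually distributes cells across MPI ranks and threads.

```python
# Hypothetical tabulation of the test runs listed above.
# Each entry: (machine, particles, nodes, cpus, outcome).
runs = [
    ("HPC1", 10**5, 2, 56, "failed"),
    ("HPC1", 10**5, 2, 16, "good"),
    ("HPC1", 10**5, 1, 28, "good"),
    ("HPC2", 10**5, 2, 48, "good"),
    ("HPC2", 10**6, 4, 96, "failed"),
]


def particles_per_cpu(particles: int, cpus: int) -> float:
    """Average number of particles per CPU core (ignores decomposition details)."""
    return particles / cpus


if __name__ == "__main__":
    for machine, n_part, nodes, cpus, outcome in runs:
        print(
            f"{machine}: {n_part:.0e} particles, {nodes} node(s), {cpus} CPUs, "
            f"{particles_per_cpu(n_part, cpus):.0f} particles/CPU -> {outcome}"
        )
```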

The timestep plot for the 10^6-particle simulation on HPC2 looks like this:

After some steps, the dead time becomes extremely large, taking up about 98% of the CPU time of each step.

After some steps, the dead time becomes extremely large basically taking 98% CPU time of each step.
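
To track the dead-time fraction per step from the timesteps file, a minimal sketch like the one below could be used. It assumes the file is whitespace-separated with `#`-prefixed header lines, and the column indices passed to the hypothetical `load_columns` helper are placeholders: check the header of your own `timesteps_96.txt` for the wall-clock and dead-time columns.

```python
from typing import List, Sequence, Tuple


def dead_time_fraction(wallclock_ms: Sequence[float],
                       dead_ms: Sequence[float]) -> List[float]:
    """Per-step fraction of wall-clock time reported as dead time."""
    if len(wallclock_ms) != len(dead_ms):
        raise ValueError("wall-clock and dead-time columns differ in length")
    return [d / w if w > 0 else 0.0 for w, d in zip(wallclock_ms, dead_ms)]


def load_columns(path: str, wall_col: int,
                 dead_col: int) -> Tuple[List[float], List[float]]:
    """Read two whitespace-separated columns, skipping blank and '#' lines.

    The column indices are assumptions supplied by the caller; they are not
    taken from SWIFT's documentation.
    """
    wall, dead = [], []
    with open(path) as handle:
        for line in handle:
            if not line.strip() or line.lstrip().startswith("#"):
                continue
            fields = line.split()
            wall.append(float(fields[wall_col]))
            dead.append(float(fields[dead_col]))
    return wall, dead


if __name__ == "__main__":
    # Synthetic numbers, not taken from the attached log files.
    fractions = dead_time_fraction([120.0, 5000.0], [3.0, 4900.0])
    print([f"{f:.1%}" for f in fractions])
```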

Here are my configure options and run commands:

./configure --with-hydro=planetary --with-equation-of-state=planetary --enable-compiler-warnings=yes --enable-ipo CC=icc MPICC=mpiicc --with-tbbmalloc --with-gravity=basic

My submission scripts look like this:

##HPC-2 10^6 particles 4node96cpu

#PBS -l select=4:ncpus=24:mpiprocs=2:ompthreads=12:mem=150gb

time mpirun -np 8 ./swift_mpi -a -v 1 -s -G -t 12 parameters_impact.yml 2>&1 | tee $SCRATCH/output_${PBS_JOBNAME}.log

##HPC-1 10^5 particles 2node56cpu

#SBATCH --cpus-per-task=14

#SBATCH --tasks-per-node=2

#SBATCH --nodes 2

#SBATCH --mem=120G

time mpirun -np 4 ./swift_mpi_intel -a -v 1 -s -G -t 14 parameters_impact.yml 2>&1 | tee $SCRATCH/output_${SLURM_JOB_NAME}.log
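
As a quick sanity check on the two submission scripts, the arithmetic of the rank/thread layout can be written out. This sketch uses a hypothetical `Layout` class; the 24 cores per node on HPC2 come from `ncpus=24` in the PBS script, while the 28 cores per node on HPC1 is an assumption inferred from the 1-node/28-CPU run.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Layout:
    nodes: int
    ranks_per_node: int    # mpiprocs (PBS) or --tasks-per-node (Slurm)
    threads_per_rank: int  # value passed to swift_mpi via -t
    cores_per_node: int    # physical cores available on each node (assumed)

    def total_ranks(self) -> int:
        return self.nodes * self.ranks_per_node

    def total_threads(self) -> int:
        return self.total_ranks() * self.threads_per_rank

    def check(self, mpirun_np: int) -> List[str]:
        """Return a list of inconsistencies (empty if the layout adds up)."""
        problems = []
        if mpirun_np != self.total_ranks():
            problems.append(
                f"mpirun -np {mpirun_np} but layout implies {self.total_ranks()} ranks"
            )
        per_node = self.ranks_per_node * self.threads_per_rank
        if per_node > self.cores_per_node:
            problems.append(
                f"{per_node} threads per node exceed {self.cores_per_node} cores"
            )
        return problems


hpc2 = Layout(nodes=4, ranks_per_node=2, threads_per_rank=12, cores_per_node=24)
hpc1 = Layout(nodes=2, ranks_per_node=2, threads_per_rank=14, cores_per_node=28)

if __name__ == "__main__":
    print("HPC2:", hpc2.total_threads(), "threads", hpc2.check(mpirun_np=8))
    print("HPC1:", hpc1.total_threads(), "threads", hpc1.check(mpirun_np=4))
```

Both scripts add up (8 ranks × 12 threads = 96 and 4 ranks × 14 threads = 56), so at least the requested core counts are consistent with the `mpirun` and `-t` settings.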

Parameter file and initial conditions: parameters yml file, Initial condition

The ParMETIS library is currently unavailable on both HPCs, so I can't use it. HPC2 also lacks a parallel HDF5 library, but the same issue occurs on HPC1, which has parallel HDF5 loaded.

Below are the log files from the 10^6-particle simulation on HPC2: 10^6 output log file, rank_cpu_balance.log, rank_memory_balance.log, task_level_0000_0.txt, timesteps_96.txt
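
A common first check for large idle time in an MPI run is whether the work is evenly spread across ranks. The sketch below computes a simple max/mean imbalance ratio from per-rank timings. The hypothetical `imbalance` helper does not parse `rank_cpu_balance.log` itself, since that file's format is not assumed here, so the per-rank numbers would need to be extracted from it first.

```python
from statistics import mean
from typing import Sequence


def imbalance(per_rank_time: Sequence[float]) -> float:
    """Return max/mean of per-rank busy time; 1.0 means perfectly balanced."""
    if not per_rank_time:
        raise ValueError("need at least one rank")
    avg = mean(per_rank_time)
    if avg == 0:
        return 1.0  # all ranks idle: trivially balanced
    return max(per_rank_time) / avg


if __name__ == "__main__":
    # Synthetic per-rank times for 8 ranks, not read from rank_cpu_balance.log.
    print(imbalance([10.0, 10.5, 9.8, 10.2, 30.0, 10.1, 9.9, 10.0]))
```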

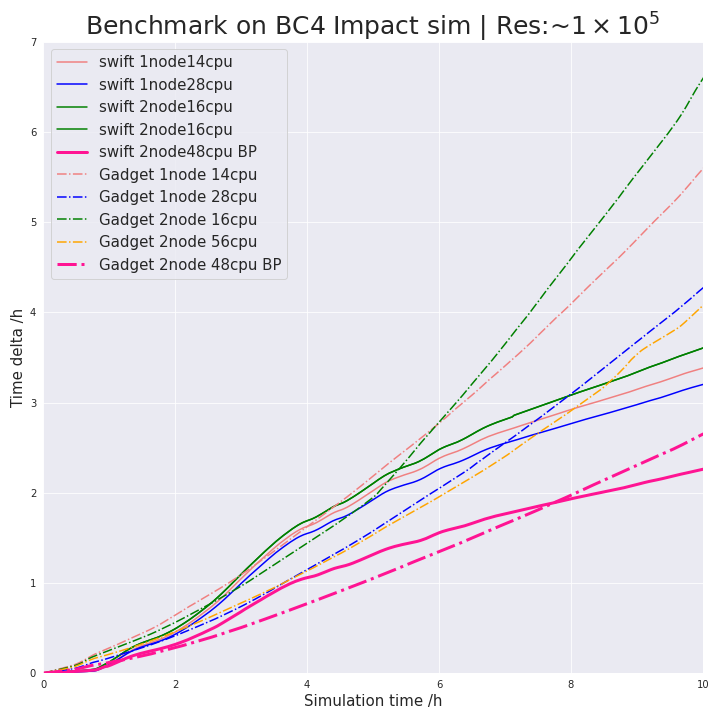

I'm running some informal (not very rigorous) benchmark tests comparing SWIFT and Gadget2 on planetary simulations. The plot below shows some results, which suggest that SWIFT runs consistently a little slower than Gadget2 between roughly 1.5 h and 8 h of simulation time. The GIF shows the period during which this slowdown occurs. It looks like SWIFT slows down when the particle positions change dramatically. After this period, the two planets have merged into a single body and evolve less violently than in the first stage. I was expecting SWIFT to be faster throughout the simulation. Do you have any suggestions on how I could improve the performance of the code?
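
To pin down where SWIFT falls behind, one option is to compare the wall-clock cost of each simulation-time interval rather than the cumulative totals. A minimal sketch follows. It assumes both codes' cumulative wall-clock times were recorded at the same simulation times, and `relative_cost` is a hypothetical helper, not part of either code.

```python
from typing import List, Sequence


def interval_costs(cumulative_wall: Sequence[float]) -> List[float]:
    """Wall-clock time spent between consecutive sample times."""
    return [b - a for a, b in zip(cumulative_wall, cumulative_wall[1:])]


def relative_cost(swift_cum: Sequence[float],
                  gadget_cum: Sequence[float]) -> List[float]:
    """Per-interval ratio SWIFT/Gadget2; values above 1 mean SWIFT was slower."""
    if len(swift_cum) != len(gadget_cum):
        raise ValueError("both codes must be sampled at the same simulation times")
    return [
        s / g if g > 0 else float("inf")
        for s, g in zip(interval_costs(swift_cum), interval_costs(gadget_cum))
    ]


if __name__ == "__main__":
    # Synthetic cumulative wall-clock times (seconds) at matching simulation times.
    swift = [0.0, 100.0, 450.0, 520.0]
    gadget = [0.0, 150.0, 400.0, 560.0]
    print(relative_cost(swift, gadget))
```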