gst-inference

ResNet support

Hi, is ResNet50 v1 supported by the plugin yet? I am interested in using it.

Also, I have a question about performance measurements. Does anyone know how to measure the frame rate of the inference part? Thanks

ResNet50 is not supported yet. We are at a point where adding architectures is fairly trivial. @migueltaylor @GFallasRR can you add support for ResNet50? @gsrujana, it would be helpful and faster for us if you could share the TF model you are testing against, if possible.

We use two techniques to measure framerate:

- GstPerf is a simple element that you place in the branch where you want to measure the frames per second.

- GstShark is a more sophisticated tool that can measure the framerate at multiple points simultaneously. Check the framerate tracer.
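As a rough sketch of the GstShark approach: the framerate tracer logs fps samples per pad periodically, so one way to get a single number for a run is to average the samples of the pad you care about. The function below assumes the tracer output has been saved to a text file and that each entry has the "framerate, pad=(string)PAD, fps=(uint)N" shape seen in the tracer output quoted further down in this thread. The name average_fps is hypothetical and not part of GstShark.

```sh
# Hypothetical helper: average the fps samples that the GstShark framerate
# tracer logged for one pad, e.g. net_src_bypass.
# Usage: average_fps LOG_FILE PAD_NAME
average_fps() {
  log_file=$1
  pad=$2
  # 1) Keep only framerate entries for exactly this pad (fixed-string match,
  #    so "t_src_0" does not also match "t_src_01").
  # 2) Extract the numeric fps value from each matching line.
  # 3) Average the samples, or report that the pad never appeared.
  grep -F "framerate, pad=(string)${pad}, fps=(uint)" "$log_file" |
    sed -n 's/.*fps=(uint)\([0-9][0-9]*\).*/\1/p' |
    awk '{ sum += $1; n++ }
         END { if (n > 0) printf "%.2f\n", sum / n; else print "no samples" }'
}
```

For example, average_fps trace.log net_src_bypass. The first samples may cover pipeline startup, so you may want to discard them before averaging.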

Thank you for the response.

Following is the ResNet model:

- ResNet-50_v1 paper: https://github.com/tensorflow/models/tree/master/official/resnet
- Source URL: https://github.com/tensorflow/models/tree/master/official/resnet
- Download model checkpoint: http://download.tensorflow.org/models/official/20180601_resnet_v1_imagenet_checkpoint.tar.gz
- Frozen model: http://download.tensorflow.org/models/official/20180601_resnet_v1_imagenet_savedmodel.tar.gz
- Dataset: ILSVRC-2012-CLS
- input_layer: input
- output_layer: resnet_v1_50/SpatialSqueeze

I converted this to a frozen graph.

Thanks for the GstShark information. I tried its framerate tracer with tinyyolov2 to get the inference frame rate. This is my pipeline:

```
gst-launch-1.0 \
  tinyyolov2 name=net backend=tensorflow model-location=/sdd/GSTREAMER/models/TinyYoloV2_TensorFlow/graph_tinyyolov2_tensorflow.pb backend::input-layer=input/Placeholder backend::output-layer=add_8 \
  filesrc location=/sdd/GSTREAMER/ForBiggerFun.mp4 ! decodebin ! tee name=t \
  t. ! queue ! videoconvert ! videoscale ! net.sink_model \
  t. ! queue ! videoconvert ! video/x-raw,format=RGB ! net.sink_bypass \
  net.src_bypass ! detectionoverlay ! videoconvert ! queue ! fakesink sync=false
```

I am getting the following in the debug file:

```
0:00:17.351001644 3162 0x1093800 TRACE GST_TRACER :0:: framerate, pad=(string)capsfilter0_src, fps=(uint)17;
0:00:17.351050006 3162 0x1093800 TRACE GST_TRACER :0:: framerate, pad=(string)qtdemux0_video_0, fps=(uint)16;
0:00:17.351060289 3162 0x1093800 TRACE GST_TRACER :0:: framerate, pad=(string)qtdemux0_audio_0, fps=(uint)29;
0:00:17.351068215 3162 0x1093800 TRACE GST_TRACER :0:: framerate, pad=(string)queue1_src, fps=(uint)16;
0:00:17.351076513 3162 0x1093800 TRACE GST_TRACER :0:: framerate, pad=(string)net_src_bypass, fps=(uint)16;
0:00:17.351084355 3162 0x1093800 TRACE GST_TRACER :0:: framerate, pad=(string)multiqueue0_src_0, fps=(uint)16;
0:00:17.351092371 3162 0x1093800 TRACE GST_TRACER :0:: framerate, pad=(string)decodebin0_sink, fps=(uint)45;
0:00:17.351099959 3162 0x1093800 TRACE GST_TRACER :0:: framerate, pad=(string)multiqueue0_src_1, fps=(uint)0;
0:00:17.351107755 3162 0x1093800 TRACE GST_TRACER :0:: framerate, pad=(string)typefind_sink, fps=(uint)45;
0:00:17.351115650 3162 0x1093800 TRACE GST_TRACER :0:: framerate, pad=(string)avdec_h264_0_src, fps=(uint)16;
0:00:17.351139071 3162 0x1093800 TRACE GST_TRACER :0:: framerate, pad=(string)h264parse0_src, fps=(uint)16;
0:00:17.351148728 3162 0x1093800 TRACE GST_TRACER :0:: framerate, pad=(string)detectionoverlay0_src, fps=(uint)16;
0:00:17.351156824 3162 0x1093800 TRACE GST_TRACER :0:: framerate, pad=(string)t_src_0, fps=(uint)16;
0:00:17.351164165 3162 0x1093800 TRACE GST_TRACER :0:: framerate, pad=(string)videoconvert1_src, fps=(uint)17;
0:00:17.351171917 3162 0x1093800 TRACE GST_TRACER :0:: framerate, pad=(string)decodebin0_src_0, fps=(uint)16;
0:00:17.351179735 3162 0x1093800 TRACE GST_TRACER :0:: framerate, pad=(string)capsfilter1_src, fps=(uint)16;
0:00:17.351187113 3162 0x1093800 TRACE GST_TRACER :0:: framerate, pad=(string)t_src_1, fps=(uint)16;
0:00:17.351194215 3162 0x1093800 TRACE GST_TRACER :0:: framerate, pad=(string)queue0_src, fps=(uint)16;
0:00:17.351201816 3162 0x1093800 TRACE GST_TRACER :0:: framerate, pad=(string)queue2_src, fps=(uint)16;
0:00:17.351209199 3162 0x1093800 TRACE GST_TRACER :0:: framerate, pad=(string)qtdemux0_sink, fps=(uint)45;
0:00:17.351216722 3162 0x1093800 TRACE GST_TRACER :0:: framerate, pad=(string)aacparse0_src, fps=(uint)0;
0:00:17.351224160 3162 0x1093800 TRACE GST_TRACER :0:: framerate, pad=(string)videoconvert0_src, fps=(uint)17;
0:00:17.351232347 3162 0x1093800 TRACE GST_TRACER :0:: framerate, pad=(string)videoconvert2_src, fps=(uint)16;
0:00:17.351250382 3162 0x1093800 TRACE GST_TRACER :0:: framerate, pad=(string)avdec_aac0_src, fps=(uint)0;
0:00:17.351258634 3162 0x1093800 TRACE GST_TRACER :0:: framerate, pad=(string)videoscale0_src, fps=(uint)17;
0:00:17.353339895 3162 0x1943190 TRACE GST_TRACER :0:: interlatency, from_pad=(string)filesrc0_src, to_pad=(string)net_src_bypass, time=(string)0:00:01.525158992;
```

Which of these entries corresponds to the actual inference element?

Another question: how can I combine different models? For example, I want to perform classification (ResNet) on the bounding-box outputs from TinyYoloV2.

Thanks for your help.

Hi, any updates on this? Thanks.

Hi @gsrujana89, thank you for your questions. More information about the following will be provided soon:

- ResNet support

- GstShark filtering information

- Combining different models

In the meantime, it is important to mention that GstInference is a live project and therefore has a backlog that includes this user story. The idea is to work on it in the upcoming sprints. We can raise the priority if your company needs this; please send an email to [email protected] with details.

Regards.

2. GstShark question: @gsrujana89, according to the wiki provided by @michaelgruner, you can use environment variables to filter the messages: GST_DEBUG="GST_TRACER:7" GST_TRACERS="framerate"
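A minimal sketch of how these variables might be applied when launching a pipeline, assuming GstShark is installed so that the framerate tracer is available. GStreamer writes its debug output to stderr, so the hypothetical run_traced helper below redirects stderr to a log file. It also sets GST_DEBUG_NO_COLOR=1, a standard GStreamer variable that disables colored output, which should keep ANSI escape sequences like the ones in the debug output above out of the file.

```sh
# Hypothetical helper: run a command with the GstShark framerate tracer
# enabled and save the debug/tracer output to a file.
# Usage: run_traced LOG_FILE COMMAND [ARGS...]
run_traced() {
  log_file=$1
  shift
  # GST_DEBUG and GST_TRACERS select the tracer messages; GST_DEBUG_NO_COLOR=1
  # turns off ANSI color codes. Debug output goes to stderr, so redirect it.
  # The command's exit status is returned unchanged.
  GST_DEBUG="GST_TRACER:7" GST_TRACERS="framerate" GST_DEBUG_NO_COLOR=1 \
    "$@" 2> "$log_file"
}
```

For example: run_traced trace.log gst-launch-1.0 videotestsrc num-buffers=300 ! fakesink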

In the output you provided, you can see FPS measurements for different elements. You can grep (or filter by other means) for the information you need; in this case, the pads related to the tinyyolov2 element. I suggest that you look for:

- net_src_bypass

Currently, the bypass and model pads provide the same FPS. It seems that you are not using the element's model src pad, and GstShark won't provide any measurement for an unconnected pad.
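To pull out only the pads of the inference element, something like the following shell sketch could be used. It assumes tracer pad names follow the ELEMENT_PAD pattern seen in the log above (for example net_src_bypass for the element named net) and prints the most recent fps sample for each matching pad. The name element_fps is hypothetical; the element name is placed inside a sed regular expression, so it should be a plain name without regex metacharacters.

```sh
# Hypothetical helper: print the latest fps sample for every pad of one
# element, assuming tracer pad names of the form <element>_<pad>.
# Usage: element_fps LOG_FILE ELEMENT_NAME
element_fps() {
  log_file=$1
  element=$2
  # Turn each matching framerate entry into "<pad> <fps>", keep the last
  # value seen per pad, and sort the result by pad name. "net_" does not
  # match "net2_", so a second element named net2 is kept separate.
  sed -n "s/.*framerate, pad=(string)\(${element}_[A-Za-z0-9_]*\), fps=(uint)\([0-9][0-9]*\).*/\1 \2/p" "$log_file" |
    awk '{ last[$1] = $2 } END { for (p in last) print p, last[p] }' |
    sort
}
```

For the pipeline above, element_fps trace.log net would list net_src_bypass, plus the model src pad once it is linked.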

Regards.

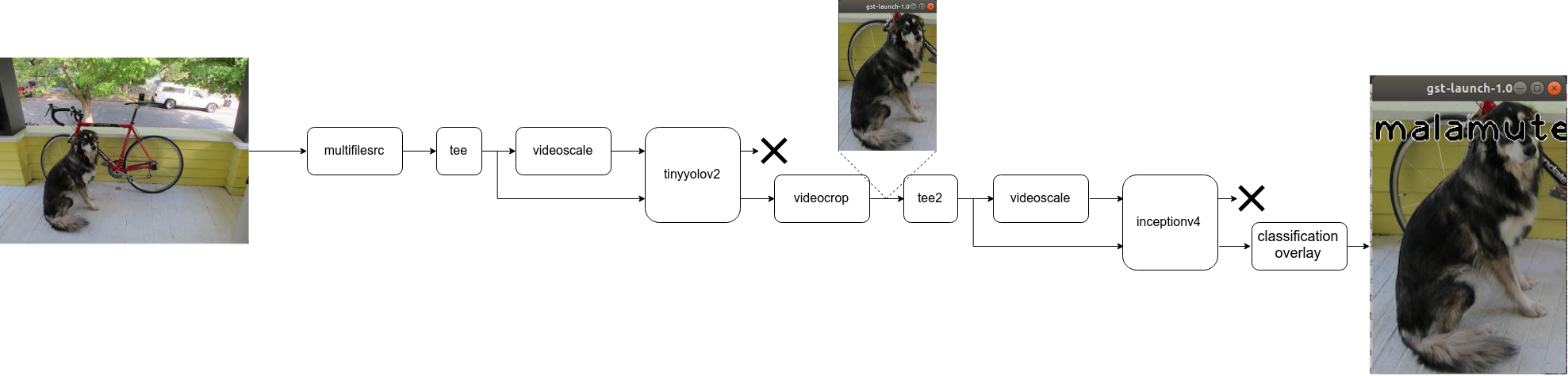

3. Combining different models: It is currently possible to run models in cascade; that's why we output both model and bypass pads. We are planning to add a GstVideoCropMeta so that the videocrop element crops the region automatically. Since this is currently not supported, the only option is to use a videocrop element controlled by an external app. The external app would read the detection meta and set the videocrop parameters. PTZR is a hardware-accelerated alternative to videocrop. Here is an example pipeline:

```
gst-launch-1.0 \
  multifilesrc location=tinyyolo.jpg start-index=0 stop-index=0 loop=true ! jpegparse ! jpegdec ! videoconvert ! "video/x-raw, format=RGBx" ! tee name=t \
  t. ! videoscale ! queue ! net.sink_model \
  t. ! queue ! net.sink_bypass \
  tinyyolov2 name=net model-location='graph_tinyyolov2_tensorflow.pb' backend=tensorflow backend::input-layer='input/Placeholder' backend::output-layer='add_8' \
  net.src_bypass ! videoconvert ! ptzr rectangle='106,222,226,317' ! "video/x-raw, width=(int)226, height=(int)317" ! videoconvert ! tee name=t2 \
  t2. ! videoscale ! queue ! net2.sink_model \
  t2. ! queue ! net2.sink_bypass \
  inceptionv4 name=net2 model-location=graph_inceptionv4_tensorflow.pb backend=tensorflow backend::input-layer=input backend::output-layer=InceptionV4/Logits/Predictions \
  net2.src_bypass ! videoconvert ! classificationoverlay labels="$(cat imagenet_labels.txt)" font-scale=3 thickness=4 ! videoconvert ! xvimagesink sync=false
```
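Until crop metadata is supported, the external control app has to translate each detected box into crop settings. videocrop's left, right, top and bottom properties are the number of pixels removed from each edge, so a box at (x, y) with size w x h in a W x H frame maps to left = x, top = y, right = W - (x + w), bottom = H - (y + h). The shell sketch below only does this arithmetic and prints a static videocrop fragment; the function name and argument order are hypothetical, it assumes integer pixel coordinates (round the network output first), and a real control app would instead update these properties on the running element. The ptzr rectangle above appears to use the same x,y,width,height order, judging from the 226x317 caps that follow it, but that is an inference.

```sh
# Hypothetical helper: map a detection box to videocrop properties.
# Arguments: frame_width frame_height box_x box_y box_width box_height (integers).
# videocrop's left/right/top/bottom are pixels removed from each frame edge.
bbox_to_videocrop() {
  frame_w=$1; frame_h=$2; box_x=$3; box_y=$4; box_w=$5; box_h=$6

  left=$box_x
  top=$box_y
  right=$((frame_w - box_x - box_w))
  bottom=$((frame_h - box_y - box_h))

  # Clamp to zero when the box touches or crosses the frame border.
  if [ "$left" -lt 0 ]; then left=0; fi
  if [ "$top" -lt 0 ]; then top=0; fi
  if [ "$right" -lt 0 ]; then right=0; fi
  if [ "$bottom" -lt 0 ]; then bottom=0; fi

  echo "videocrop left=$left right=$right top=$top bottom=$bottom"
}
```

For example, bbox_to_videocrop 1280 720 106 222 226 317 prints videocrop left=106 right=948 top=222 bottom=181 for a hypothetical 1280x720 frame.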

That is great! I will try 2 and 3. I look forward to the ResNet model.

Hi @gsrujana89, just to let you know that ResNet50V1 is supported in the latest R2Inference and GstInference release. Thanks for your interest in our project.

Regards.

Hi @gsrujana / @gsrujana89, please check out our new hierarchical meta architecture: https://developer.ridgerun.com/wiki/index.php?title=GstInference/Metadatas/GstInferenceMeta. It allows you to run multiple models simultaneously: you can run a classification on the bounding boxes produced by the first detection, with all results kept in a single meta.