ARAX production and kg2beta crashes

Got an alert this morning that ARAX was not working properly. Found two services not running:

* /mnt/data/orangeboard/production/RTX/code/UI/OpenAPI/python-flask-server/RTX_OpenAPI_production.start is not running

* /mnt/data/orangeboard/kg2beta/RTX/code/UI/OpenAPI/python-flask-server/KG2/RTX_OpenAPI_kg2beta.start is not running

The other services were running. The end of the production log was:

2023-01-22T17:02:05.662515 DEBUG: [] Wrote /responses/117236.json in 0.14253541082143784 seconds

2023-01-22T17:02:05.663886 INFO: [] Result was stored with id 117236. It can be viewed at https://arax.ncats.io/?r=117236

2023-01-22T17:02:05.663925 INFO: [] Processing is complete and resulted in 166 results.

2023-01-22T17:02:05.663947 INFO: [] Processing is complete. Transmitting resulting Message back to client.

INFO:werkzeug:127.0.0.1 - - [22/Jan/2023 17:02:06] "POST /api/arax/v1.3/query HTTP/1.1" 200 -

I suspect that was the 9am PST watchdog query (the log timestamps appear to be UTC, so 17:02 is 09:02 PST). By 10am the service was found dead. Strange.
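The watchdog's actual implementation isn't shown here. As a hedged sketch of the same idea, a check can POST a small query to the endpoint seen in the log (`/api/arax/v1.3/query`) and alert if it doesn't get HTTP 200 back in time. The function name `is_healthy`, the timeout, and the payload are assumptions, not the real watchdog.

```python
import http.client
import json
import urllib.request


def is_healthy(url: str, payload: dict, timeout: float = 60.0) -> bool:
    """POST a JSON query and report whether the service answered HTTP 200 in time."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status == 200
    except (OSError, http.client.HTTPException):
        # URLError/HTTPError (both OSError subclasses), refused connections and
        # timeouts all count as "not healthy", i.e. the case that should alert.
        return False
```

Run from cron against each service's `/api/arax/v1.3/query` endpoint with a small TRAPI message, a `False` result is the signal to page someone (or to restart the service).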

For kg2beta, the log ended with:

2023-01-22T17:38:06.324394 INFO: [] Processing action 'return' with parameters {'store': 'false'}

2023-01-22T17:38:06.477808 INFO: [] Processing is complete and resulted in 190856 results.

2023-01-22T17:38:14.380060 DEBUG: [] Results have been ranked and sorted

2023-01-22T17:38:14.380229 INFO: [] Processing action 'return' with parameters {'store': 'false'}

2023-01-22T17:38:14.493286 INFO: [] Processing is complete and resulted in 208803 results.

2023-01-22T17:38:44.709538 DEBUG: [] Results have been ranked and sorted

2023-01-22T17:38:44.709754 INFO: [] Processing action 'return' with parameters {'store': 'false'}

2023-01-22T17:38:44.815243 INFO: [] Processing is complete and resulted in 205945 results.

2023-01-22T17:39:08.345732 DEBUG: [] Results have been ranked and sorted

2023-01-22T17:39:08.345891 INFO: [] Processing action 'return' with parameters {'store': 'false'}

2023-01-22T17:39:08.451154 INFO: [] Processing is complete and resulted in 209010 results.

INFO:root:[query_controller]: child process detected a SIGPIPE; exiting python

INFO:root:[query_controller]: child process detected a SIGPIPE; exiting python

INFO:root:[query_controller]: child process detected a SIGPIPE; exiting python

INFO:root:[query_controller]: child process detected a SIGPIPE; exiting python

INFO:root:[query_controller]: child process detected a SIGPIPE; exiting python

INFO:root:[query_controller]: child process detected a SIGPIPE; exiting python

INFO:root:[query_controller]: child process detected a SIGPIPE; exiting python

INFO:root:[query_controller]: child process detected a SIGPIPE; exiting python

INFO:root:[query_controller]: child process detected a SIGPIPE; exiting python
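SIGPIPE is what the kernel raises when a process writes to a pipe or socket that no longer has a reader, for example when a client gives up and disconnects before a large response has been sent. The message suggests each query runs in a child process that gave up once its output could no longer be delivered, but how `query_controller` wires those children isn't shown here. The sketch below is only a generic demonstration of the condition on Linux. CPython ignores SIGPIPE by default, so the failed write surfaces as `BrokenPipeError` instead of killing the interpreter.

```python
import os


def write_to_closed_pipe() -> str:
    """Close the read end of a pipe, then try to write to the write end."""
    read_fd, write_fd = os.pipe()
    os.close(read_fd)  # the "client" goes away
    try:
        os.write(write_fd, b'{"message": {}}')
        return "write succeeded"
    except BrokenPipeError:
        # The kernel reported EPIPE (and sent SIGPIPE, which CPython ignores),
        # so the failure arrives as a catchable exception.
        return "BrokenPipeError"
    finally:
        os.close(write_fd)


print(write_to_closed_pipe())  # prints "BrokenPipeError"
```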

It looks like there were many simultaneous queries, each returning around 200,000 results. Perhaps that was the cause.
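One way to check that impression is to pull the result counts and timestamps out of the log. A minimal sketch, assuming the line format shown above (ISO timestamp, level, then the message) holds throughout the file; the function names are hypothetical. The excerpt is copied from the kg2beta log above.

```python
import re
from datetime import datetime

# Matches lines such as:
# 2023-01-22T17:38:06.477808 INFO: [] Processing is complete and resulted in 190856 results.
COMPLETE_RE = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2}T[\d:.]+) INFO: \[\] "
    r"Processing is complete and resulted in (?P<n>\d+) results\."
)


def large_results(lines, threshold=100_000):
    """Return (timestamp, result_count) pairs for completed queries at or above threshold."""
    hits = []
    for line in lines:
        match = COMPLETE_RE.match(line)
        if match and int(match["n"]) >= threshold:
            hits.append((datetime.fromisoformat(match["ts"]), int(match["n"])))
    return hits


def count_sigpipes(lines):
    """Count child processes that exited because of SIGPIPE."""
    return sum("child process detected a SIGPIPE" in line for line in lines)


excerpt = [
    "2023-01-22T17:38:06.477808 INFO: [] Processing is complete and resulted in 190856 results.",
    "2023-01-22T17:38:14.493286 INFO: [] Processing is complete and resulted in 208803 results.",
    "2023-01-22T17:38:44.815243 INFO: [] Processing is complete and resulted in 205945 results.",
    "2023-01-22T17:39:08.451154 INFO: [] Processing is complete and resulted in 209010 results.",
    "INFO:root:[query_controller]: child process detected a SIGPIPE; exiting python",
]
hits = large_results(excerpt)
print(len(hits), hits[-1][0] - hits[0][0])  # 4 0:01:01.973346
```

Four responses of roughly 200,000 results finishing within about a minute is consistent with overlapping queries, though completion times alone don't prove they ran concurrently.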

High load may have taken down kg2beta. It is still a mystery why production ARAX went down; perhaps the server ran out of memory.
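If the server did run out of memory, the Linux OOM killer normally records its victim in the kernel log (visible via `dmesg` or `journalctl -k`). A minimal sketch that scans such text for those entries, assuming the kernel's usual "Out of memory: Killed process PID (NAME)" wording (older kernels print "Kill process", and cgroup limits print "Memory cgroup out of memory"); the function name is hypothetical.

```python
import re

# Older kernels: "Out of memory: Kill process 1234 (python3) score ..."
# Newer kernels: "Out of memory: Killed process 1234 (python3) total-vm:..."
# IGNORECASE also catches "Memory cgroup out of memory: Killed process ...".
OOM_RE = re.compile(r"Out of memory: Kill(?:ed)? process (\d+) \(([^)]+)\)", re.IGNORECASE)


def oom_victims(kernel_log: str):
    """Return (pid, process_name) for every OOM-killer entry in the text."""
    return [(int(pid), name) for pid, name in OOM_RE.findall(kernel_log)]
```

Feeding it the output of `journalctl -k --since 2023-01-22` would show whether any python processes were killed around 17:02 UTC; an empty list would point away from the out-of-memory theory.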

I have killed and restarted all services on arax.ncats.io.

At 1pm I got another alert that there were problems. This time the main kg2 service was not running:

* /mnt/data/orangeboard/kg2/RTX/code/UI/OpenAPI/python-flask-server/KG2/RTX_OpenAPI_kg2.start is not running

The log:

2023-01-22T20:17:38.345593 INFO: [] Resultify created 148916 results

2023-01-22T20:17:38.602211 DEBUG: [] Cleaning up the KG to remove nodes not used in the results

2023-01-22T20:17:39.068751 INFO: [] After cleaning, the KG contains 16258 nodes and 256648 edges

2023-01-22T20:17:39.075001 INFO: [] Running experimental reranker on results

2023-01-22T20:17:39.075129 DEBUG: [] Starting to rank results

2023-01-22T20:17:39.075183 DEBUG: [] Assessing the QueryGraph for basic information

2023-01-22T20:17:39.075214 DEBUG: [] Found 2 nodes and 1 edges

2023-01-22T20:17:39.075302 DEBUG: [] The QueryGraph reference template is: n00(ids)-e00()-n01(categories=biolink:NamedThing)

2023-01-22T20:17:44.063494 WARNING: [] No non-infinite value was encountered in any edge attribute in the knowledge graph.

2023-01-22T20:17:44.063586 INFO: [] Summary of available edge metrics: {}

2023-01-22T20:18:01.359465 DEBUG: [] Results have been ranked and sorted

2023-01-22T20:18:01.359596 INFO: [] Processing action 'return' with parameters {'store': 'false'}

2023-01-22T20:18:01.465147 INFO: [] Processing is complete and resulted in 172140 results.

2023-01-22T20:19:20.376227 DEBUG: [] Results have been ranked and sorted

2023-01-22T20:19:20.376363 INFO: [] Processing action 'return' with parameters {'store': 'false'}

2023-01-22T20:19:20.463581 INFO: [] Processing is complete and resulted in 148916 results.

INFO:root:[query_controller]: child process detected a SIGPIPE; exiting python

INFO:root:[query_controller]: child process detected a SIGPIPE; exiting python

INFO:root:[query_controller]: child process detected a SIGPIPE; exiting python

INFO:root:[query_controller]: child process detected a SIGPIPE; exiting python

INFO:root:[query_controller]: child process detected a SIGPIPE; exiting python

INFO:root:[query_controller]: child process detected a SIGPIPE; exiting python

INFO:root:[query_controller]: child process detected a SIGPIPE; exiting python

INFO:root:[query_controller]: child process detected a SIGPIPE; exiting python

INFO:root:[query_controller]: child process detected a SIGPIPE; exiting python

INFO:root:[query_controller]: child process detected a SIGPIPE; exiting python

INFO:root:[query_controller]: child process detected a SIGPIPE; exiting python

INFO:root:[query_controller]: child process detected a SIGPIPE; exiting python

INFO:root:[query_controller]: child process detected a SIGPIPE; exiting python

INFO:root:[query_controller]: child process detected a SIGPIPE; exiting python

INFO:root:[query_controller]: child process detected a SIGPIPE; exiting python
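The reference template logged above, `n00(ids)-e00()-n01(categories=biolink:NamedThing)`, describes a one-hop query: one node pinned to specific identifiers, an edge with no predicate constraint, and a second node allowed to be any `biolink:NamedThing`. That shape is about as open as a query gets, which fits the 150,000 to 170,000 results per response in this log. Expressed as a TRAPI query graph it looks roughly like this; the CURIE is a placeholder, since the identifiers actually sent aren't in the log.

```python
import json

query_graph = {
    "nodes": {
        "n00": {"ids": ["MONDO:0005148"]},              # n00(ids): placeholder CURIE
        "n01": {"categories": ["biolink:NamedThing"]},  # n01: any entity at all
    },
    "edges": {
        "e00": {"subject": "n00", "object": "n01"},     # e00(): no "predicates" key
    },
}

request_body = {"message": {"query_graph": query_graph}}
print(json.dumps(request_body, indent=2))
```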

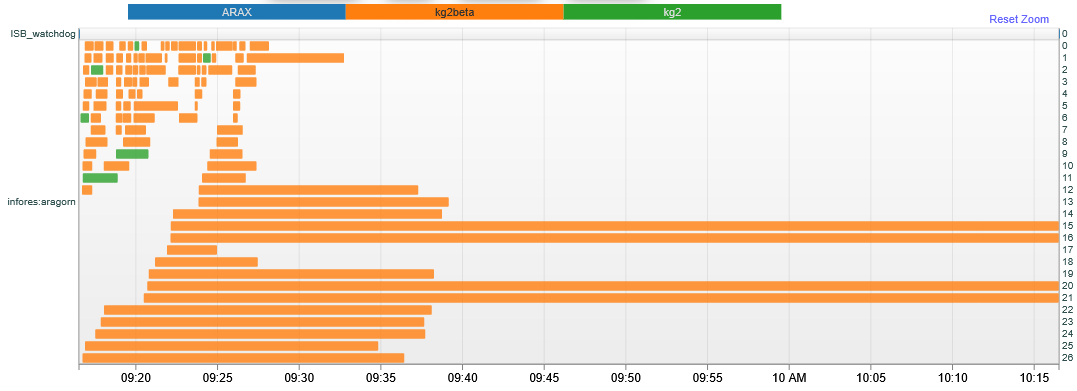

It looks like Aragorn was beating on production kg2 this time:

So it seems pretty clear that heavy load can and does kill it. We should develop a solution, such as limiting the number of concurrent queries or restarting dead services automatically.
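One candidate solution, assuming overload from concurrent large queries is the trigger, is to cap how many queries a service instance runs at once and turn the rest away with HTTP 503 instead of letting them pile up. A minimal sketch with a bounded semaphore; `QueryLimiter`, `handle_query`, and the limit are hypothetical and would need tuning against the server's memory.

```python
import threading


class QueryLimiter:
    """Admit at most `max_concurrent` queries; callers must release when done."""

    def __init__(self, max_concurrent: int):
        self._slots = threading.BoundedSemaphore(max_concurrent)

    def try_acquire(self) -> bool:
        # Non-blocking: returns False immediately if every slot is in use.
        return self._slots.acquire(blocking=False)

    def release(self) -> None:
        self._slots.release()


def handle_query(limiter: QueryLimiter, run_query):
    """Return (status_code, body); reject with 503 when the limiter is full."""
    if not limiter.try_acquire():
        return 503, {"detail": "Server busy, please retry later"}
    try:
        return 200, run_query()
    finally:
        limiter.release()
```

A thread semaphore only counts queries admitted within one process. If queries are accepted by several worker processes, the counter would have to be shared between them (for example in a lock file or a small external store).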

I wonder whether this ever happens on the ITRB instances, and whether they have systems in place to restart services automatically.
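Whatever ITRB does, a simple local safeguard would be to restart a service automatically when its process dies. In practice a systemd unit with `Restart=on-failure` or a process supervisor would handle this; the sketch below only illustrates the idea in Python, and `supervise` is a hypothetical name.

```python
import subprocess
import time


def supervise(cmd, max_restarts=5, backoff_seconds=5.0):
    """Run cmd; if it exits with a non-zero code, restart it (up to max_restarts times).

    Returns the exit codes observed, one per run.
    """
    exit_codes = []
    for attempt in range(max_restarts + 1):
        returncode = subprocess.run(cmd).returncode
        exit_codes.append(returncode)
        if returncode == 0 or attempt == max_restarts:
            break  # clean exit, or restart budget used up
        # Wait a little longer after each crash so a crash loop does not spin.
        time.sleep(backoff_seconds * (attempt + 1))
    return exit_codes
```

For example, `supervise(["/mnt/data/orangeboard/kg2/RTX/code/UI/OpenAPI/python-flask-server/KG2/RTX_OpenAPI_kg2.start"])`, assuming that script stays in the foreground while the service runs. A process killed by a signal reports a negative exit code, so it is restarted too.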

This will be resolved with the migration to PloverDB 2.0 KG2.