idea: down-weight results whose "essence" is too general

This idea came up in the Translator Stand-up Meeting on March 15, 2022. The following query:

https://github.com/NCATSTranslator/testing/blob/main/ars-requests/not-none/1.2/subutexDryMouth2.json

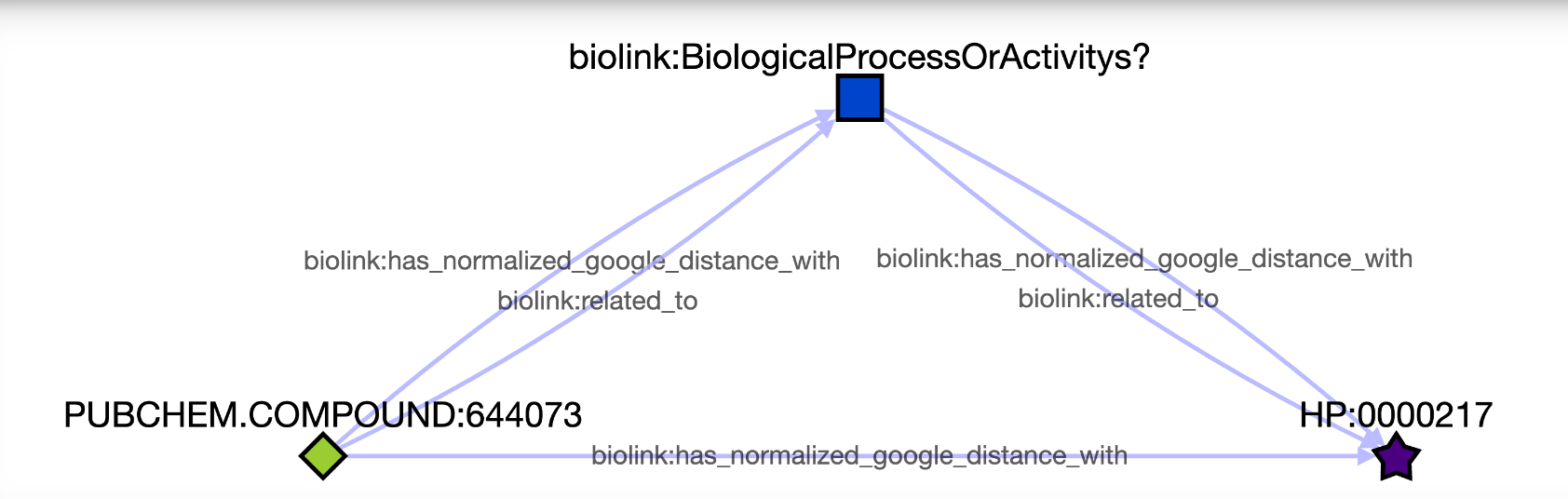

gave some results, like the one in the screenshot above, that are probably too general to be useful. Is there any way to (optionally) filter out results whose "essence" concept is way too general, i.e., has too high a proportion of the relevant semantic-type-specific concepts under it? For example, a significant proportion of biolink:ProcessOrActivity concepts are underneath "Pathogenesis".

Christine Colvis was supportive of some kind of filtering capability. Jennifer Hadlock suggested that it (the filtering) should be optional, and I agree with that.

Sounds like @dkoslicki and @finnagin are already working on a "down-weighting" capability for overly general concepts. See also a technical issue that came up in that work: https://github.com/RTXteam/RTX/issues/1809

Interesting idea. @saramsey, what do you mean by "under them" in this instance? And how would I tell if a node has too many semantic-type-specific concepts under it? Would we need to get that info from KG2, or would it already be in the result set or the returned KG?

If there is an easy way to identify these nodes, I could add a filter method that removes all such nodes. Then we could add an operation so that it can be called through a workflow.
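A rough idea of what such an optional filter method could look like is sketched below. This is only a sketch under assumptions: each result is taken to be a dict with an `"essence"` key holding a node CURIE, and `specificity_index` is a hypothetical precomputed mapping from CURIE to a generality score (higher = more general). The function name and the 0.01 threshold are made up for illustration.

```python
from typing import Dict, List, Optional


def filter_general_results(
    results: List[dict],
    specificity_index: Dict[str, float],
    max_index: float = 0.01,
    enabled: bool = True,
) -> List[dict]:
    """Hypothetical filter: drop results whose essence node looks too general.

    Assumes each result is a dict with an "essence" CURIE, and that
    `specificity_index` maps a CURIE to the fraction of its ontology lying
    under it (higher = more general). Nodes with no stored value are kept,
    since there is nothing to judge them by.
    """
    if not enabled:  # filtering stays optional
        return list(results)
    kept = []
    for result in results:
        index: Optional[float] = specificity_index.get(result["essence"])
        if index is None or index <= max_index:
            kept.append(result)
    return kept
```

Wrapping this in a workflow operation would then mostly be a matter of exposing `max_index` and `enabled` as parameters.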

Good discussion of this issue in the AHM today. Recapping for DMK here.

Jared pointed out that we could down-weight a result if the "essence node" has too high a degree in the query-specific KG (or perhaps in the RTX-KG2 KG). Alternatively, Jared proposed that we could pre-compute (and store in RTX-KG2), for each concept node, some kind of "specificity index", which could, for example, be the proportion of concepts in the node's ontology that are descendants of the node. For example, for the node Parkinson's Disease (MONDO:0005180), there are 27 descendant nodes (including MONDO:0005180 itself) out of 43,953 terms in the ontology, for a specificity index value of 27/43,953 ≈ 0.00061.

We discussed some pros and cons of pre-computing specificity index values. We could probably do it for MONDO, HPO, CHEBI, and GO, which (by virtue of node normalization) would provide specificity indexes for a wide swath of nodes in KG2. For all the other nodes, we could probably just assign an average value of 0.001 or something. There was discussion of whether we should hard-filter results based on the essence node's specificity index or whether we should just (somehow) include the result's specificity index value in the calculation of the result graph's score; the sentiment of the group seemed to favor using the index to weight, rather than hard-filter.

Jared also pointed out that we could generate (or hand-curate) a list of the most ridiculously un-specific nodes that tend to show up in our queries, and filter those out. Obvious caveats apply to any situation where we are hand-curating a list, but it is an option, I suppose. Jared also suggested that we could use a hand-curated list of such super-general concept nodes to test our proposed specificity index, i.e., to see how we should/could weight or filter on it.

Finn pointed out that he and DMK are looking into using FET (Fisher's exact test) to eliminate results that are non-specific (right, Finn?). So that is another potential method to address this issue.
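The specificity index described above (descendants of a node, counting the node itself, divided by the number of terms in its ontology) can be sketched as follows. Assumptions: the ontology is given as a hypothetical parent-to-children dict of subclass links, which may be a DAG (a term can have several parents, so descendants are collected into a set to avoid double counting). `DEFAULT_INDEX` is the "0.001 or something" fallback for nodes outside the precomputed ontologies.

```python
from typing import Dict, List, Set

DEFAULT_INDEX = 0.001  # fallback for nodes outside the precomputed ontologies


def descendants(node: str, children: Dict[str, List[str]]) -> Set[str]:
    """Return `node` plus every term reachable through child (subclass) links."""
    seen = {node}
    stack = [node]
    while stack:
        current = stack.pop()
        for child in children.get(current, []):
            if child not in seen:  # DAG: a term may be reached via several parents
                seen.add(child)
                stack.append(child)
    return seen


def specificity_indexes(children: Dict[str, List[str]]) -> Dict[str, float]:
    """Map each term to |descendants incl. itself| / |terms in the ontology|."""
    terms = set(children)
    for kids in children.values():
        terms.update(kids)
    total = len(terms)
    return {term: len(descendants(term, children)) / total for term in terms}
```

For a toy ontology `{"ROOT": ["A", "B"], "A": ["A1", "A2"], "B": ["A2"]}` (5 terms), `ROOT` gets 1.0, `A` gets 3/5 and `B` gets 2/5; an unknown CURIE would be looked up with `.get(curie, DEFAULT_INDEX)`. The per-term traversal is quadratic in the worst case, which seems acceptable for a one-off precomputation over MONDO-sized ontologies but could be optimized.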

> Interesting idea. @saramsey what do you mean by under them in this instance?

Right, I mean descendant nodes. See the example in my previous post, using MONDO:0005180.

For posterity's sake: IIRC, another way of doing this that was discussed at yesterday's AHM (and not specifically mentioned in @saramsey's recap above) would be to simply chop off the top N levels of parent terms/nodes of whatever ontology/hierarchy is being applied.
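One way to read "chop off the top N" is "ignore every term within N levels of a root", sketched below. Assumptions: the hierarchy is a hypothetical parent-to-children dict, roots are terms with no parent, and a term's depth is its shortest distance from any root (found with a multi-source breadth-first search). Using the shortest path means a term reachable through one shallow path counts as general; taking the longest path instead would be an equally defensible choice.

```python
from collections import deque
from typing import Dict, List, Set


def min_depths(children: Dict[str, List[str]]) -> Dict[str, int]:
    """Breadth-first depth of every term; roots (terms with no parent) are 0."""
    terms = set(children)
    has_parent = set()
    for kids in children.values():
        terms.update(kids)
        has_parent.update(kids)
    roots = terms - has_parent
    depth = {root: 0 for root in roots}
    queue = deque(roots)
    while queue:
        current = queue.popleft()
        for child in children.get(current, []):
            if child not in depth:  # first visit in BFS = shortest path from a root
                depth[child] = depth[current] + 1
                queue.append(child)
    return depth


def top_levels(children: Dict[str, List[str]], n: int) -> Set[str]:
    """Terms within the top `n` levels, i.e. the candidates to chop off."""
    return {term for term, d in min_depths(children).items() if d < n}
```

With the toy hierarchy `{"ROOT": ["A", "B"], "A": ["A1", "A2"], "B": ["A2"]}`, `top_levels(..., 2)` returns `{"ROOT", "A", "B"}`.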

I went over this with @saramsey, and he suggested a rough Python module that precomputes a specificity index based on the ratio of the number of descendants of a node to the number of concepts in its ontology. The values would be stored in a PickleDB. If a query returns a node whose value is not stored, the module should use the SRI Node Normalizer to try to map it to a synonym that is stored; otherwise it should return None. Steve, please add any corrections if I misrepresented anything. Does this sound like something we should move forward on?
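The lookup-with-fallback behavior described above might look roughly like this. Two substitutions are assumptions for the sake of a self-contained sketch: a plain dict stands in for the PickleDB store, and `synonyms_for` is a hypothetical callable that would wrap a call to the SRI Node Normalizer and return equivalent CURIEs. The function name is also made up.

```python
from typing import Callable, Dict, Iterable, Optional


def lookup_specificity(
    curie: str,
    store: Dict[str, float],
    synonyms_for: Callable[[str], Iterable[str]],
) -> Optional[float]:
    """Return the stored index for `curie`, trying its synonyms if needed.

    `store` stands in for the PickleDB file (any mapping works here) and
    `synonyms_for` is a hypothetical wrapper around the SRI Node Normalizer
    that yields equivalent CURIEs. Returns None when nothing matches.
    """
    if curie in store:
        return store[curie]
    # Fall back to synonyms, in whatever order the normalizer returns them.
    for synonym in synonyms_for(curie):
        if synonym in store:
            return store[synonym]
    return None
```

Passing the normalizer in as a function keeps the network call out of the lookup logic, so it can be cached or stubbed out in tests.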

As of the 6/5 meeting with Finn: this is in the KG2 team's court now.

After the values are computed, the reasoning team can incorporate this into the ranker.
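Since the group favored weighting over hard-filtering, one hypothetical way a ranker could fold the specificity index into a result's score is sketched below. The weighting formula and the `floor` parameter are assumptions, not anything agreed in this thread: the index is mapped onto a log scale so that a root term (index 1.0) gets weight 0 and any term at or below `floor` keeps its full score.

```python
import math


def weighted_score(score: float, index: float, floor: float = 1e-5) -> float:
    """Scale a result's score by how specific its essence node is.

    Hypothetical weighting: w = log(index) / log(floor), with the index
    clipped to [floor, 1], so w runs from 0 (root term) to 1 (very specific).
    The log scale spreads out the very small indexes most terms have.
    """
    index = min(max(index, floor), 1.0)
    weight = math.log(index) / math.log(floor)
    return score * weight
```

Under this choice, Parkinson's Disease (index ≈ 0.00061) keeps roughly two thirds of its score, while a node with the 0.001 default keeps 60%; the `floor` value would need tuning against real results.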

After fiddling with this for a while, I think it might be better to use the depth in an appropriate ontology to do this. Some bioentities are specific but have lots of neighbors (e.g., RITUXIMAB, with 3,290 neighbors), while others are non-specific but have fewer neighbors (e.g., therapeutic agent, with 3,023, and Atypical antipsychotic, with 809).

I wonder if a combination of depth in an appropriate ontology (normalized) and some degree-based metric might be useful. For instance, "water" isn't a helpful answer for us, even though it is a very specific concept, but it has a huge degree (102,706).
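A hypothetical combination of normalized depth and a degree-based penalty could look like the sketch below. Everything here is an assumption for illustration: depth is divided by the ontology's maximum depth, and nodes with more than `degree_cap` neighbors are penalized by how many orders of magnitude they exceed the cap. With the made-up cap of 1,000, "water" (degree 102,706) would keep only about a third of its depth score even if it sat deep in its ontology.

```python
import math


def usefulness(depth: int, max_depth: int, degree: int, degree_cap: int = 1000) -> float:
    """Hypothetical 'usefulness' score in [0, 1] mixing specificity and degree.

    - specificity: depth in the ontology divided by the ontology's max depth
      (deeper = more specific)
    - degree: no penalty up to `degree_cap` neighbors, then a penalty that
      grows with log10 of how far the degree exceeds the cap
    """
    specificity = depth / max_depth if max_depth > 0 else 0.0
    excess = max(0.0, math.log10(max(degree, 1) / degree_cap))
    degree_factor = 1.0 / (1.0 + excess)
    return specificity * degree_factor
```

Multiplying the two factors means either a shallow position or an extreme degree is enough to pull a node down, which matches the "water" example; an additive blend would be more forgiving.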

Just following up on this: Amy and I have had fairly extensive discussions on how to rank triples (first in PloverDB, then perhaps later in ARAX) based on a combination of specificity and the "vertex degree" of the non-pinned node in the triple. @amykglen is that right? Anything else I should add here?

yes, that's right, thanks Steve. I neglected to post an update here on our plan/progress.

I think we all decided a few AHMs ago to prioritize the new synonymizer over this project, but work on this is underway.

Steve and I are thinking it makes sense for Plover to output what is essentially a score of 'usefulness' for each node (combining specificity and degree), since Plover has easy access to both node degree and node ontology depth. this score can be returned from Plover by default in node attributes (in KG2 results), but we'll also expose an endpoint on Plover via which ARAX can grab these node scores (for applying to results from other KPs).

- for the degree half of the score: we're thinking of using the degree of nodes involved in (non-semmed) `treats` and `causes` edges (and maybe other predicates) to try to identify a 'sweet spot' of node degree that helps indicate how useful that node is as an answer; I've done initial degree distribution analyses for this and it seems promising. the 'sweet spot' appears to be different for different node categories.
- for the specificity index half of the score: I'm thinking of looking at the depth of nodes within KG2c's subclass_of tree as a whole, rather than looking up the depth of each node in its original ontology, since KG2 should contain the ontologies anyway. I'll be working on this soon along with a larger expansion of Plover's subclass_of reasoning (which currently is still limited to only Drugs/Diseases).
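The category-specific 'sweet spot' idea for the degree half could be sketched as below. The ranges in `SWEET_SPOTS`, the default range, and the decay rule are all hypothetical placeholders; real bounds would come from the degree-distribution analysis. A node scores 1.0 when its `treats`/`causes` degree falls inside its category's range, and the score halves for every order of magnitude it falls outside.

```python
import math
from typing import Dict, Tuple

# Hypothetical per-category (low, high) degree ranges; placeholders only.
SWEET_SPOTS: Dict[str, Tuple[int, int]] = {
    "biolink:Drug": (5, 500),
    "biolink:Disease": (10, 1000),
}


def degree_score(category: str, degree: int, default: Tuple[int, int] = (5, 500)) -> float:
    """1.0 inside the category's sweet spot; halves per order of magnitude outside."""
    low, high = SWEET_SPOTS.get(category, default)
    degree = max(degree, 1)  # avoid division by zero for isolated nodes
    if degree < low:
        distance = math.log10(low / degree)
    elif degree > high:
        distance = math.log10(degree / high)
    else:
        return 1.0
    return 0.5 ** distance
```

Penalizing both ends reflects the 'sweet spot' framing: very low degree may mean a node is obscure, while very high degree suggests it is too general.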

(this project will likely serve as the basis for a thesis chapter of mine)

Thank you @amykglen for the update. Got it. Is there an issue in RTX where work on the new synonymizer is being tracked?

there is not yet - I'll create one soon. I've been keeping my code in a separate repo while it's in the experimental stage

one more note: I'm planning to first start by just implementing the specificity half, since that's more straightforward, and deploying that so that we have something in ARAX quicker. after that I'll work on the degree half.

@amykglen let me know if @kvnthomas98 can help, as this might involve the ranker

yes - will do, thanks!

I just unassigned others since I'll be working on this - I'll let @kvnthomas98 know if I could use help with the Ranker later on

do we still need to tackle this, or do we have sufficient solutions for filtering out overly general results now? (I've been a bit out of the loop here, but it sounds like we have a block list now? @kvnthomas98?)

Hi Amy,

We do have a block list now, and we are going to implement SemMedDB filtering based on novelty score.

ok cool - I at least removed the 'high priority' label for now. maybe a bit down the road the group can assess whether we should keep/close this issue

Adding the "technical debt" flag, as a measure of concept generality would still be quite useful from the reasoning side of things.