pythainlp

Using adversarial texts for training text normalization algorithms

Consider the following example:

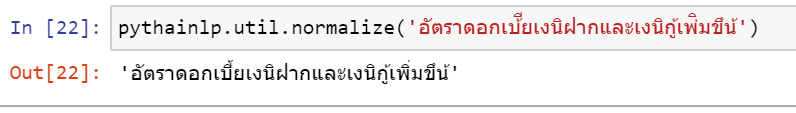

PyThaiNLP's current text normalization relies heavily on rules, which are sufficient in some circumstances. However, it fails badly on input such as the following:

Input: อัตราดอกเบ้ียเงนิฝากและเงนิกู้เพ่ิมขึน้

Expect: อัตราดอกเบี้ยเงินฝากและเงินกู้เพิ่มขึ้น
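To illustrate why rules alone fall short on this input, here is a minimal sketch of one hypothetical reordering rule. It is not PyThaiNLP's actual implementation; the function name `reorder_tone_after_vowel` and the character sets are assumptions made for illustration. The rule moves a tone mark that appears before an above/below vowel back to its place after the vowel.

```python
import re

# Assumed character sets for this sketch (Unicode Thai block).
TONE_MARKS = "\u0e48\u0e49\u0e4a\u0e4b"  # mai ek, mai tho, mai tri, mai chattawa
ABOVE_BELOW_VOWELS = "\u0e31\u0e34\u0e35\u0e36\u0e37\u0e38\u0e39"

# A tone mark immediately followed by an above/below vowel is out of order.
_MISORDERED = re.compile(f"([{TONE_MARKS}])([{ABOVE_BELOW_VOWELS}])")


def reorder_tone_after_vowel(text: str) -> str:
    """Hypothetical rule: put the vowel first, then the tone mark."""
    return _MISORDERED.sub(r"\2\1", text)


# Tone mark and vowel swapped: the rule can repair this.
broken_bia = "\u0e40\u0e1a\u0e49\u0e35\u0e22"  # เบ + mai tho + sara ii + ย
fixed_bia = reorder_tone_after_vowel(broken_bia)

# Vowel drifted past a consonant: the rule leaves this untouched.
broken_ngoen = "\u0e40\u0e07\u0e19\u0e34"  # เ ง น + sara i
still_broken = reorder_tone_after_vowel(broken_ngoen)
```

The second case is the hard one: the vowel now sits on a different consonant, and the character sequence is still locally valid, so a purely character-level rule has nothing to detect.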

Would it be possible to train an ML model for text normalization? I think the approach would be similar to what we did for thai2rom, which is a seq2seq model.

As for training data, we could develop a probabilistic model that perturbs a given word according to some rules, e.g. สระลอย (floating vowels). We could then use it to generate training data for our seq2seq normalization model.
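A minimal sketch of what such a perturbation model could look like, assuming the only noise type is a combining mark (above/below vowel or tone mark) drifting one position to the right, as in the example above. The names `perturb` and `make_pairs`, the probability `p`, and the character set are all hypothetical choices for illustration, not an existing PyThaiNLP API.

```python
import random

# Assumed set of Thai combining marks that may "float" (Unicode Thai block).
ABOVE_BELOW_VOWELS = set("\u0e31\u0e34\u0e35\u0e36\u0e37\u0e38\u0e39\u0e47")
TONE_MARKS = set("\u0e48\u0e49\u0e4a\u0e4b\u0e4c")
COMBINING = ABOVE_BELOW_VOWELS | TONE_MARKS


def perturb(text, p=0.3, rng=None):
    """Swap each combining mark with the next character with probability p."""
    rng = rng or random.Random()
    chars = list(text)
    i = 0
    while i < len(chars) - 1:
        if chars[i] in COMBINING and rng.random() < p:
            chars[i], chars[i + 1] = chars[i + 1], chars[i]
            i += 2  # skip the mark we just moved so it drifts only once
        else:
            i += 1
    return "".join(chars)


def make_pairs(words, n_per_word=5, p=0.3, seed=0):
    """Build (noisy, clean) training pairs for a seq2seq normalizer."""
    rng = random.Random(seed)
    return [(perturb(w, p, rng), w) for w in words for _ in range(n_per_word)]


# With p=1.0 every eligible mark moves: เงิน becomes เงนิ, as in the input above.
noisy = perturb("\u0e40\u0e07\u0e34\u0e19", p=1.0)
```

Because the perturbation only reorders characters, each noisy string is a permutation of its clean target. More rules (dropped marks, duplicated marks, real spelling errors) could be added as further perturbation functions.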

From what I can see, since many official Thai documents are in PDF, this model would be very useful for preprocessing the results of PDF parsing, which is typically not robust to cases such as สระลอย.

@c4n : do you think we can leverage what you've developed for https://github.com/c4n/Thai-Adversarial-Evaluation here?

Hi guys, you can try using this code to generate adversarial texts.

I think the rules I've used are quite simple, and there is room for more rules and adversarial text generation techniques. Also, it would be great if we could collect real spelling errors (for more information on Thai spelling errors, see the article "A Cognitive and Linguistic Analysis of Search Queries of an Online Dictionary: A Case Study of LEXiTRON"). As of now, there isn't much work on Thai spelling errors, but I hope there will be more papers on this subject soon.

You can use either an end-to-end (E2E) model or a two-stage (detection + correction) model for the spelling correction task. You can consult the first half of this lecture to begin with. Spelling correction was a take-home midterm exam (a Kaggle competition) at Chula this semester. Let me ask them whether I can share the link to the competition.

Very interesting, will be very useful.

@wannaphong what do you think about advertising this issue in the Thai NLP group? There might be students who are interested in working on the idea as a course project.

I think it is interesting. We may be able to present based on the problems encountered.

@cstorm125 because I actually took the example from you, can you tell us a bit about your use cases?

@heytitle I used to clean up texts before putting them through embeddings. This might be a good research topic. For practical use, since we are leaning more towards subwords, it might not be as useful.