MONAI

Unexpected segmentation fault encountered in worker

Describe the bug

When running the unit tests in the PyTorch 23.03 container, the run sometimes exits with errors:

[2023-04-12T17:08:04.837Z] .ERROR: Unexpected segmentation fault encountered in worker.

[2023-04-12T17:08:05.092Z] ./runtests.sh: line 653: 2477 Segmentation fault (core dumped) ${cmdPrefix}${cmd} ./tests/runner.py -p "^(?!test_integration).*(?<!_dist)$"

script returned exit code 139

e.g. https://github.com/Project-MONAI/MONAI/actions/runs/4686850278/jobs/8305411337
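For readers unfamiliar with the exit code in the log above: shells conventionally report "killed by signal N" as status 128 + N, so 139 corresponds to signal 11 (SIGSEGV). A minimal sketch of that decoding follows; the helper name `describe_exit_code` is hypothetical and not part of MONAI or `runtests.sh`.

```python
import signal


def describe_exit_code(code: int) -> str:
    """Interpret a shell exit status (hypothetical helper, for illustration only).

    By shell convention, a status above 128 means the process was
    terminated by signal number (code - 128).
    """
    if code > 128:
        signum = code - 128
        try:
            return f"terminated by {signal.Signals(signum).name}"
        except ValueError:
            # The number does not map to a signal known on this platform.
            return f"terminated by unknown signal {signum}"
    return f"exited normally with status {code}"


print(describe_exit_code(139))
```

This only confirms that the worker died from a segmentation fault; it says nothing about which test or library triggered it.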

FYI @mingxin-zheng @Nic-Ma, I haven't found the root cause yet.

Hi @wyli ,

In the test log you shared, I think it stopped at:

https://github.com/Project-MONAI/MONAI/blob/dev/tests/test_flatten_sub_keysd.py#L57

But I personally feel this test is not the root cause.

Do you know whether the "Unexpected segmentation fault" is raised in a different, random test every time?

Thanks.

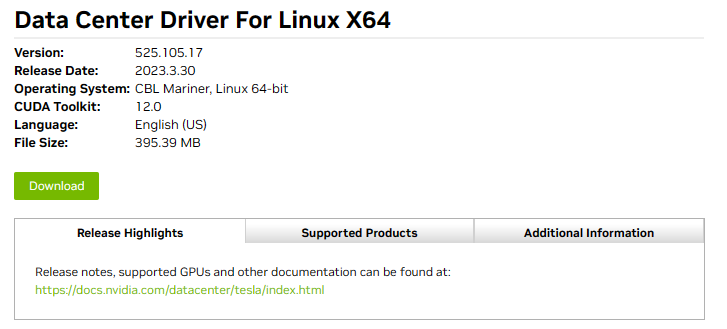

Is the driver version 530.30.02 officially supported?

I did a quick search: https://www.nvidia.com/Download/driverResults.aspx/202370/en-us/

Hi @mingxin-zheng, I saw the same issue with driver 525.85 on blossom.

Also, I think the error is not from test_flatten_sub_keysd, as that test had already finished according to the log; it's probably from the next test case.

@wyli is there a link to the failure incident on the blossom test too?

yes https://blossom.nvidia.com/dlmed-clara-jenkins/blue/organizations/jenkins/MONAI-premerge/detail/MONAI-premerge/2429/pipeline

Looks like it stopped here: https://github.com/Project-MONAI/MONAI/blob/1a55ba5423d04d2ef7ac19356ccabc4c7906f577/tests/test_to_tensor.py#L44 But the behavior looks quite random to me.

I don't think so: test_to_tensor has finished. The test sequence is random because we don't sort the glob results: https://github.com/Project-MONAI/MONAI/blob/1a55ba5423d04d2ef7ac19356ccabc4c7906f577/tests/runner.py#L127
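To illustrate the ordering point: `glob.glob` returns paths in whatever order the filesystem yields them, so the test sequence can differ between machines and runs. A minimal sketch of a deterministic discovery step is below; `discover_tests` and the file names are hypothetical, and this is not the actual code in `tests/runner.py`.

```python
import glob
import os
import tempfile


def discover_tests(path, pattern="test_*.py"):
    """Return test module names in a reproducible (sorted) order.

    Hypothetical helper: glob.glob makes no ordering guarantee, so the
    results are sorted explicitly before use.
    """
    files = glob.glob(os.path.join(path, pattern))
    return sorted(os.path.splitext(os.path.basename(f))[0] for f in files)


def demo():
    # Create a few empty placeholder test files in a temporary directory.
    with tempfile.TemporaryDirectory() as tmp:
        for name in ("test_to_tensor.py", "test_auto3dseg.py", "test_flatten_sub_keysd.py"):
            open(os.path.join(tmp, name), "w").close()
        return discover_tests(tmp)


print(demo())
```

With a fixed order, the test that runs just before a crash is the same on every run, which makes it easier to tell whether the fault follows a specific test.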

this still happens randomly in 23.03

- https://blossom.nvidia.com/dlmed-clara-jenkins/blue/organizations/jenkins/MONAI-premerge/detail/MONAI-premerge/2472/pipeline

- https://blossom.nvidia.com/dlmed-clara-jenkins/blue/organizations/jenkins/Monai-latest-image/detail/Monai-latest-image/783/pipeline/189/

- https://blossom.nvidia.com/dlmed-clara-jenkins/blue/organizations/jenkins/MONAI-premerge/detail/MONAI-premerge/2455/pipeline

~it's always after test_auto3dseg or test_auto3dseg_ensemble, probably from~

https://github.com/Project-MONAI/MONAI/blob/9c9777751ab4f96e059a6597b9aa7ac6e7ca3b92/monai/apps/auto3dseg/data_analyzer.py#L209-L218

Could this lead to a race condition between processes without a lock? @Nic-Ma @wyli

https://github.com/Project-MONAI/MONAI/blob/9c9777751ab4f96e059a6597b9aa7ac6e7ca3b92/monai/apps/auto3dseg/data_analyzer.py#L370

I'm still debugging. According to the logs it's happening on a single GPU, so my previous conclusion is wrong... please ignore it. I can't replicate this issue locally.

another instance with 23.03 https://blossom.nvidia.com/dlmed-clara-jenkins/blue/organizations/jenkins/Monai-latest-image/detail/Monai-latest-image/785/pipeline/139

I'm pretty sure it's triggered by test_auto3dseg_ensemble and/or test_auto3dseg

I see failures after test_auto3dseg without test_auto3dseg_ensemble present.

https://blossom.nvidia.com/dlmed-clara-jenkins/blue/organizations/jenkins/Monai-latest-image/detail/Monai-latest-image/783/pipeline/189/

If it is triggered by test_auto3dseg, it means something in the DataAnalyzer is unsafe.

Agreed, and I have never seen the issue with other container versions; it might be specific to 23.03 (or to PyTorch ~2.0?).

https://blossom.nvidia.com/dlmed-clara-jenkins/blue/organizations/jenkins/Monai-latest-docker/detail/Monai-latest-docker/797/pipeline/

This seems to be an out-of-memory (OOM) problem that occurs when the number of threads is large; it can be addressed by setting OMP_NUM_THREADS=4 MKL_NUM_THREADS=4. Closing this for now.
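For anyone who prefers to apply the same workaround from Python rather than the shell, a minimal sketch follows. It assumes the variables must be set before NumPy or PyTorch are imported, since the threading runtimes typically read them at initialization; `limit_threads` is a hypothetical helper, and the value 4 is simply the one quoted above.

```python
import os


def limit_threads(n=4):
    """Cap OpenMP and MKL thread counts via environment variables.

    Hypothetical helper. Call it before importing numpy/torch, on the
    assumption that the runtimes read these variables when they start.
    """
    names = ("OMP_NUM_THREADS", "MKL_NUM_THREADS")
    for name in names:
        os.environ[name] = str(n)
    return {name: os.environ[name] for name in names}


print(limit_threads(4))
```

Child processes started afterwards (for example data loader workers) inherit these values through the environment.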