Paper

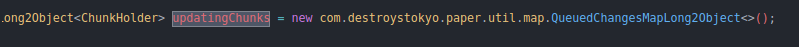

Memory leak at "PlayerChunkMap" "QueuedChangesMapLong2Object"

Timings or Profile link

https://timings.aikar.co/?id=0acd4ff33f15410c9c01bf5e9c628d67

Description of issue

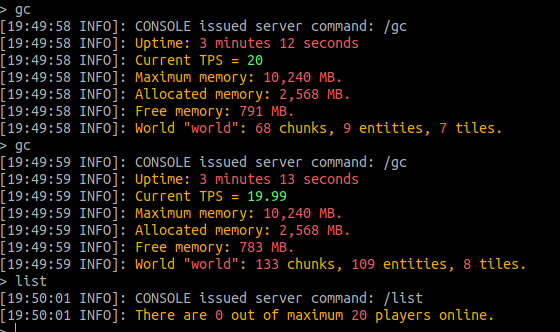

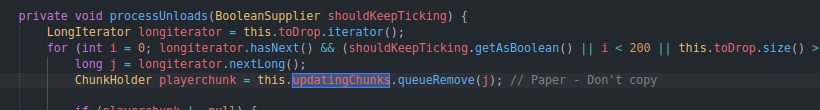

When running Paper 1.18.2 in a big server environment, it seems like some chunks are being kept in this "queue" after they were unloaded from the server.

This might be related to https://github.com/PaperMC/Paper/issues/4816, but I am not sure. I am posting more data here that seems to indicate that some "unloaded chunks" are not being removed from this queue.

I am experiencing this weird behaviour too: with no players connected, chunks start loading and unloading.

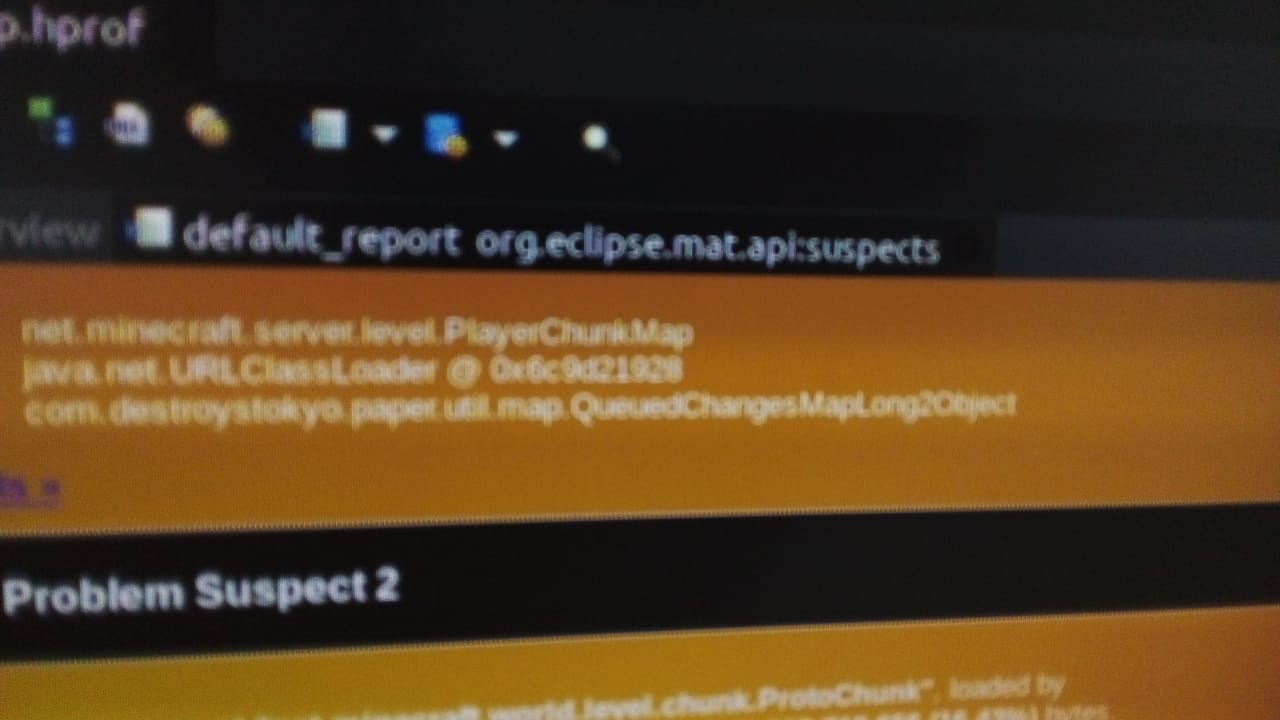

These are images of some information I gathered from the profiler.
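For anyone reading a similar heap dump: the keys of a long-keyed chunk map are most likely packed chunk coordinates. The sketch below assumes the usual Minecraft packing (x in the low 32 bits, z in the high 32 bits). `ChunkKey` is a hypothetical helper written for illustration and is not part of Paper, and whether this particular map uses that key layout is an assumption.

```java
// Hypothetical helper, not part of Paper: packs and unpacks chunk coordinates
// the way Minecraft is assumed to do it (x in the low 32 bits, z in the high 32 bits).
final class ChunkKey {

    // Pack chunk coordinates into a single long key.
    static long pack(int x, int z) {
        return ((long) x & 0xFFFFFFFFL) | (((long) z & 0xFFFFFFFFL) << 32);
    }

    // Recover the chunk x coordinate from the low 32 bits.
    static int x(long key) {
        return (int) key;
    }

    // Recover the chunk z coordinate from the high 32 bits.
    static int z(long key) {
        return (int) (key >>> 32);
    }

    public static void main(String[] args) {
        // Round-trip an example pair; with a real dump, pass the key copied from the profiler instead.
        long key = pack(12, -7);
        System.out.println(key + " -> chunk (" + x(key) + ", " + z(key) + ")");
    }
}
```

Decoding a few keys this way shows which chunks are still referenced, which can then be compared against the chunks the server reports as loaded.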

Plugin and Datapack List

Essentials, LiteCombat, Milks ViaRewind, ViaVersion, WorldBorder

Server config files

https://paste.gg/p/anonymous/8b78d3f3eeee4ea3b02f2046355eb14c

Paper version

This server is running Paper version git-Paper-265 (MC: 1.18.2) (Implementing API version 1.18.2-R0.1-SNAPSHOT) (Git: 993f828)

Other

No response

What profiler software are you using? (2nd picture)

Eclipse Memory Analyzer

Hello @linsaftw, did you find any workaround for this? We have the same problem. MAT produced the same output as yours.

Nope. Minecraft's new chunk system is extremely hard for me to understand.

The same problem exists in the latest Paper version.

I've managed to reproduce something like this once. It wasn't a leak either: it was fluids basically doing stupid stuff like loading chunks. For that case, I'm not sure there is a sane way to deal with it without potentially breaking fluid behaviour.

Currently having this same issue. Has anyone found a fix yet?

I have this issue too. Memory leak at "PlayerChunkMap" "QueuedChangesMapLong2Object"

Leak suspect reports are literally useless. "The chunk system uses a bunch of memory" is generally not inherently a leak, and it's no surprise that a system which stores large transient data in memory has a good chunk of memory in RAM. 2194 chunks loaded on a server is not really all that much, especially considering the new chunk system.

I'm honestly inclined to close these types of issues, especially after the fixes made to the chunk system a while back, such as ensuring that chunks unload more aggressively when the chunk unload queue gets too high. Otherwise, we'd need much more info. There are already discussions elsewhere around this topic, which have generally gotten nowhere too. It generally seems to be an isolated issue rather than something which is blowing up for everybody, and in my own cases where I've monitored stuff blowing up, I've generally always been able to find an environmental cause which is not trivial to solve (and often self-induced).
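One kind of extra info that can help separate "uses a lot of memory" from "leaks" is heap usage measured right after a garbage collection, sampled over time. Below is a minimal sketch using the standard `java.lang.management` API. The class `HeapAfterGc`, the helper `growsSteadily`, and the sampling interval are hypothetical and chosen for illustration. It assumes the code runs inside the JVM being inspected (for example from a plugin) and that explicit GC requests are not disabled.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryMXBean;

// Hypothetical diagnostic sketch, not part of Paper.
final class HeapAfterGc {

    // Request a GC, then report used heap in bytes.
    // Note: the request is a no-op if the JVM runs with -XX:+DisableExplicitGC.
    static long usedAfterGc() {
        MemoryMXBean memory = ManagementFactory.getMemoryMXBean();
        memory.gc();
        return memory.getHeapMemoryUsage().getUsed();
    }

    // True only if every sample is strictly larger than the one before it.
    // A heap that keeps growing after each GC hints at retained objects;
    // a heap that rises and falls is more likely transient data.
    static boolean growsSteadily(long[] samples) {
        if (samples.length < 2) {
            return false;
        }
        for (int i = 1; i < samples.length; i++) {
            if (samples[i] <= samples[i - 1]) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) throws InterruptedException {
        long[] samples = new long[3];
        for (int i = 0; i < samples.length; i++) {
            samples[i] = usedAfterGc();
            System.out.println("used after GC: " + samples[i] + " bytes");
            Thread.sleep(1000); // illustrative interval; minutes would be more telling on a real server
        }
        System.out.println("steady growth: " + growsSteadily(samples));
    }
}
```

A handful of samples is only a hint. A heap dump comparison between two points in time is still needed to see what is actually being retained.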

Sorry for not mentioning that these chunks were never unloaded, even after multiple minutes.

Well, I do have an update for this. It was indeed a garbage collection issue and after tweaking the JVM flags and updating to the new chunk system we've had zero crashes. So, at least for me, consider this solved.

(Replying by email to Shane Freeder's comment of Thu, Sep 1, 2022, 1:08 AM: https://github.com/PaperMC/Paper/issues/7640#issuecomment-1233747251)

Thanks for the update. I'll just close this then, and if something new comes up, we can investigate again (it will be different thanks to the new chunk system anyway).