Memory leak at PlayerChunkMap

What behaviour is expected:

Unused PlayerChunk objects should be removed from PlayerChunkMap.

What behaviour is observed:

PlayerChunk objects stay permanently loaded in the map, causing the server to run out of memory and die within a few hours.

Steps/models to reproduce:

idk

Plugin list:

A list of your plugins

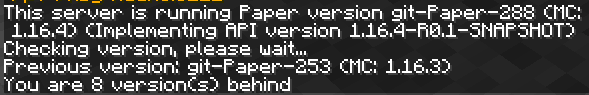

Paper version:

Paste the output of running /version on your server WITH the Minecraft version. latest is not a version; we require the output of /version so we can properly track down the issue.

Anything else:

Anything else you think may help us resolve the problem

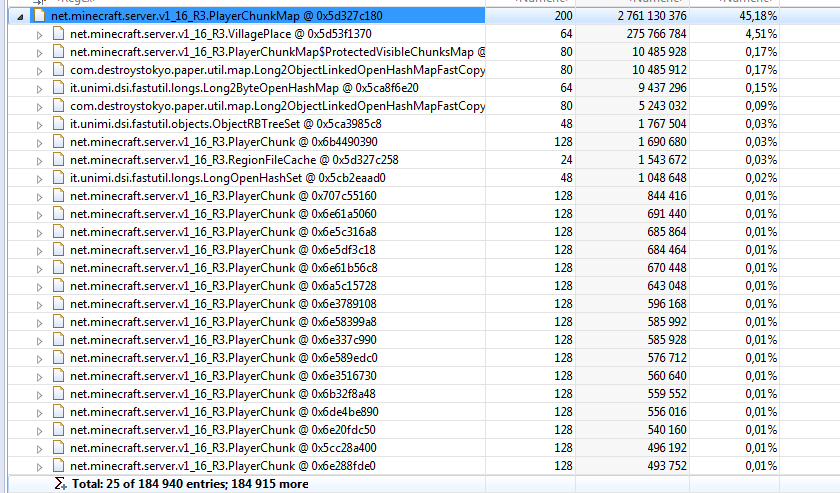

200k objects of PlayerChunk:

Having the same issue with the latest version as of now (1.16.4 #318), any updates yet? My server has become unplayable.

Use Tuinity spigot instead of paper. Works fine

This happens on Tuinity too at high player counts

I can't confirm it; my server is running pretty stable on the latest build

You need a high player count and, to make it easier to reproduce, a high (no-tick) view distance

Any fixes?

same issue

same issue

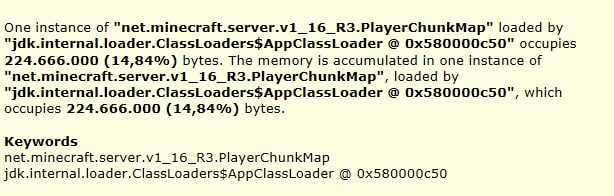

Screenshots of heap reports are essentially useless as they provide very little info. 224 MB is really not that much depending on what's going on; we'd need the actual dump.

If you want, I can send you the dump file. However, I allocate 10 GB to Java and it takes me 20 ... another reason that may actually be the cause.

anyone found a solution for this yet?

No one has provided any dumps as requested yet, not much we can do until then.
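
For anyone unsure how to produce the dump being asked for, here is a minimal sketch using the JDK's `HotSpotDiagnosticMXBean`. It assumes a HotSpot-based JVM; the class name `HeapDumpSketch` and the output file naming are only illustrative, and on a real server you would more likely use an external tool than add code like this.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.io.IOException;
import java.lang.management.ManagementFactory;
import java.nio.file.Path;

// Illustrative helper, not part of Paper or any plugin mentioned in this thread.
final class HeapDumpSketch {

    // Writes an .hprof file into the given directory and returns its path.
    static Path dumpLiveHeap(Path directory) throws IOException {
        // The target file must not exist yet; recent JDKs also expect the .hprof suffix.
        Path target = directory.resolve("heap-" + System.currentTimeMillis() + ".hprof");

        HotSpotDiagnosticMXBean bean =
                ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);

        // live = true dumps only reachable objects, which keeps the file smaller.
        bean.dumpHeap(target.toString(), true);
        return target;
    }
}
```

The same kind of file can be produced from outside the process with `jmap -dump:live,format=b,file=heap.hprof <pid>`. Expect the dump to be roughly as large as the used heap, so zip it before sharing.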

I can give you a repro - my custom pregen logic triggers this (or a related) bug every time. Of course there may be a bug in my plugin itself... but now that I'm experiencing this on my live 1.18.1 Paper server I suspect it might not be in my code.

I've pulled a heap dump from my live 1.18.1 server and I see a couple of overly fat PlayerChunkMap instances. I have not yet pulled a heap dump of the leak as produced by my pregen logic.

The heap dump I have is 3.5GB (630MB zipped). Send me a message and I can supply the dump and/or my pregen plugin. I'll only send either of these to a spigot contributor as the heap dump is for a live SMP server and the pregen logic is part of my much larger custom plugin.

A symptom I've observed when running my pregen plugin (which does let the game tick, but is very aggressive): the CPU heavily uses 2 cores, and if I stop the pregen before MC freezes due to memory problems, the CPU goes to 100% on all cores/threads for some time before idling back down to < 20%. Clearly the background chunk saving isn't able to fully do its job until the server load reduces. I forget whether this next symptom happens when I stop my pregen or when I run /stop, but at one of these points the memory spikes 30-50% again and can cause an OOM freeze.

After digging through the source code trying to fix this, I realise it's because of the noSave variable in the ServerLevel class. You must have auto save on, otherwise this issue will persist.

/save-on or world.setAutoSave(true).

If someone wants to contribute a change to fix this, there's a tick function in PlayerChunkMap, it will check if saving is on, just remove that. AFAIK, PlayerChunkMap doesn't save chunks through this method anyways, although I haven't looked further in.
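
To make the claim above concrete, here is a toy model of the behaviour being described. It is not Paper's code: `ChunkMapSketch`, `Holder`, and every method name are hypothetical, and the only thing taken from the comment is the idea that the unload pass in the tick function is skipped while saving is disabled.

```java
import java.util.HashMap;
import java.util.Map;

// Toy stand-in for a chunk map; all names here are hypothetical.
final class ChunkMapSketch {

    // Stand-in for a per-chunk holder; "wanted" means something still needs the chunk.
    static final class Holder {
        boolean wanted;

        Holder(boolean wanted) {
            this.wanted = wanted;
        }
    }

    private final Map<Long, Holder> holders = new HashMap<>();
    private boolean savingDisabled;

    void setSavingDisabled(boolean savingDisabled) {
        this.savingDisabled = savingDisabled;
    }

    void load(long key, boolean wanted) {
        holders.put(key, new Holder(wanted));
    }

    int size() {
        return holders.size();
    }

    // gateOnSaving = true models the reported behaviour: no unload pass while saving is off.
    // gateOnSaving = false models the suggested patch: unload regardless of the save flag.
    void tick(boolean gateOnSaving) {
        if (gateOnSaving && savingDisabled) {
            return; // nothing is ever removed, so the map only grows
        }
        holders.values().removeIf(holder -> !holder.wanted);
    }
}
```

With saving disabled, `tick(true)` never shrinks the map no matter how many holders are unwanted, while `tick(false)` drops them on the next pass. Whether the real tick function behaves exactly like this would need to be checked against the server source.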

I think I've also been having a problem with this, Eclipse Memory Analyzer suspects PlayerChunkMap and like the fellow above I have auto saving turned off. Here's a heap dump: https://www.dropbox.com/s/x8ewqzmd0nxsrct/heap-dump-2022-01-15_21.41.10.hprof.zip?dl=0

Same here: on a 40-player server it only takes 2 hours for the memory leak to crash the server.

I have the same problem! I have 8 GB of RAM with only 30 plugins and a 10-player base. I would appreciate it if the developers took action on this issue.

I've yet to be able to reproduce this issue, but I also don't run a full term server anymore so, not 100% on what I can do here especially as this doesn't appear to be stupidly widespread

I have auto saving turned off

turning auto saving off prevents chunk unloading, so ofc it's gonna end up running out of memory eventually

Have people tested the builds here? https://github.com/PaperMC/Paper/pull/7368

I was responding to the specific claim there, not in general; disabling auto saving will prevent chunks from unloading so it's no surprise that the chunk map eats a chunk of data if you had that disabled

It's a bit silly that auto saving would determine whether chunks are unloaded or not.

Paper has 0 control over when the GC runs, so that's irrelevant; it will run when the JVM thinks it should run

if memory is pooling up in the chunk manager we'd need to see where, I've not had the time to look into many dumps and the ones I have seen thus far don't have any issues

You had 6k chunks loaded according to that dump, and a few hundred MB, which didn't really seem all that pressing or confirmation of a leak, at least on the surface to me, without having to go deep diving into numbers of view distance and tickets, etc.

Not sure whether this is related, but...

I tried to use squaremap's full render function to render the entire map inside a 10000-radius world border (just a big map) with 0 players online.

Paper 1.18.1 181. And then the server crashed:

Caused by: java.lang.OutOfMemoryError: Java heap space

Rendering the map is a way of loading chunks, so this should be related to this topic. An OutOfMemoryError crash can be caused by a memory leak. My Matrix anti-cheat records memory leaks and gives warnings while chunks are being scanned by the map.

In this case there were no players, only chunk loading, so I conclude that chunk loading is the only factor that could be causing the memory leak.

Here is the crash log: https://mclo.gs/MWIWUaK

Extra info: my server uses Aikar's flags with 16 GB of RAM.

How much memory does your server have and how much is allocated in xmx?

As mentioned at the bottom of my comment, 16 GB. Both Xmx and Xms are 16 GB; I also tried 10 GB for both. The two values are kept the same, as Aikar's guide recommends.

Currently having this same issue. Has anyone found a fix yet?

Either patch the jar like I mention in one of my previous comments or turn on auto-saving. It doesn't appear like it's going to be fixed, as it is expected behaviour or something. I would think disabling "auto-saving" implies that chunks just won't be saved to disk; however, apparently it means they should never unload from memory :/

When I try to turn on autosaving it just tells me it's already enabled. All the configs are set to autosave but it still doesn't unload the chunks. Below is the heapdump: https://drive.google.com/file/d/1rrQMGyPTuwUKyf7Mo2lU4j1P6v6fuIHV/view?usp=sharing

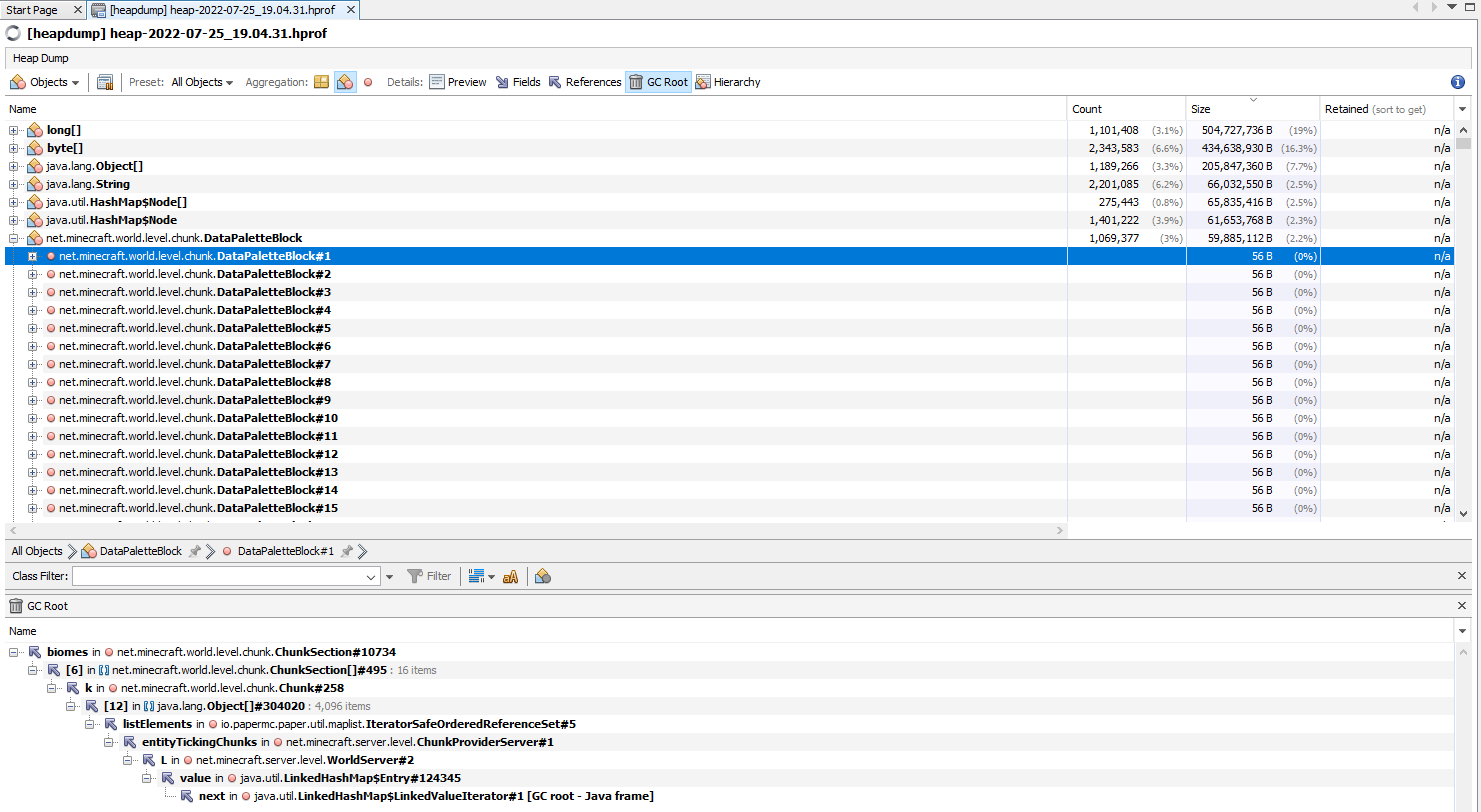

TL;DR: Got a bit bored and decided to have a dive into this - not really sure where the issue really boils down to since the dump provided above was given without any context (such as server specs, player count, etc.) but hopefully this helps provide some insight. Read below for a more detailed walkthrough.

Looking at the above heapdump through VisualVM, it appears that the entityTickingChunks is the primary collection holding Chunk instances in memory.

Here is a screenshot of what I see from my end through the GC Root tab in VisualVM. DataPaletteBlock was the highest NMS class in the list (since it is what holds the most memory inside of ChunkSections), but looking at some other classes shown as well such as Short2LongOpenHashMap or ChunkSection, the GC root brings us back to entityTickingChunks.

Scrolling down a bit farther in the DataPaletteBlock instance list (which there were over 1,000,000 of held in memory), it seems tickingChunks is also holding references. I don't think this is the actual issue, but it appears to be the specific collection(s) exhausting the memory.

Now, searching for ChunkMapDistance in the GC Root (DistanceManager in Mojang mappings), there is a map containing all the tickets registered for chunks in the world. In the same heap dump, it appears there are a few thousand tickets. Most tickets from what I could see in this dump were of level 33, and of the PLAYER type. 33 is a static constant in DistanceManager named BLOCK_TICKING_LEVEL_THRESHOLD. I'm not entirely sure of the specifics around this, but from what I could gather this level should not be keeping chunks loaded in memory indefinitely.

The highest value normally utilized within the chunk system for these levels is 33. Plugin tickets when they call DistanceManager#addRegionTicketAtDistance specify a value of 2 in the level parameter, which is decremented from the 33 default. This means chunk tickets from plugins are always force loaded, and the only instance I can find where chunks would become force loaded outside of plugins is for keeping the spawn chunks loaded, spawning structures through the structure block, or explicitly using the /forceload command. The only force-loaded chunks I could find were with the SPAWN ticket type, so chances are these are from keeping the spawn chunks enabled and plugins don't appear to be erroneously adding tickets and forgetting to unregister them.
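
The level arithmetic described above can be written out as a small sketch. The class and method names are hypothetical and only the numbers 33 and 2 come from the analysis; the comparison helper assumes that a numerically lower level means a more strongly loaded chunk, which is how the description above reads.

```java
// Hypothetical helper that only mirrors the arithmetic described in the comment above.
final class TicketLevelSketch {

    // The base value the analysis says levels are decremented from.
    static final int BASE_LEVEL = 33;

    // A ticket added "at distance d" ends up at BASE_LEVEL - d.
    static int effectiveLevel(int distance) {
        return BASE_LEVEL - distance;
    }

    // Assumption: lower level = more strongly loaded.
    static boolean outranks(int level, int otherLevel) {
        return level < otherLevel;
    }
}
```

Under these assumptions a plugin ticket added with a distance of 2 sits at level 31, which outranks the level 33 PLAYER tickets seen in the dump.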

Unfortunately, I have zero clue what kind of hardware the actual server is running on, how many players were out exploring, how many worlds were loaded or any other valuable information that would help narrow down where this issue is really coming from. I couldn't see any plugins holding chunks in memory or any odd behavior like that, so it could most certainly be a situation where there were just a lot of players online and not enough memory allocated to handle the demand. Again, zero clue for sure without more information.

If anyone else runs into similar issues please provide more information than "im getting this too pls fix". It does nothing to help narrow down the problem and providing info such as heap dumps, server specs, player count, etc. will make this much easier to track.

Hello, and thank you RednedEpic. Our server has 25gb of ram and 3 threads on a AMD Ryzen 7 5800X 8-Core Processor. We get about 20 players or so at peak, and it tends to crash around 12am, despite a daily restart at 4am.

There has been a small update to the situation: using Spark to produce a heapdump seems to flush most of the memory being held up. For example, when it had collected to 24gb, I did /spark heapdump, and it shot all the way down to 10gb, without any gameplay impact other than a lagspike.
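
One possible reading of that drop, offered as an assumption rather than a diagnosis: on HotSpot, a heap dump restricted to live objects forces a full garbage collection first, so memory that falls from 24 GB to 10 GB afterwards was probably collectable garbage rather than objects still held by the chunk map. Whether Spark requests a live-only dump by default is not confirmed here. A rough way to compare used heap before and after a collection request, with hypothetical names:

```java
// Illustrative only; System.gc() is a request that the JVM may ignore.
final class HeapUsageSketch {

    // Used heap = memory currently reserved by the JVM minus the free part of it.
    static long usedHeapBytes() {
        Runtime runtime = Runtime.getRuntime();
        return runtime.totalMemory() - runtime.freeMemory();
    }

    // Returns {usedBefore, usedAfter} around a garbage collection request.
    static long[] usedBeforeAndAfterGc() {
        long before = usedHeapBytes();
        System.gc();
        long after = usedHeapBytes();
        return new long[] {before, after};
    }
}
```

If the "after" figure stays high across repeated collections, the memory is genuinely retained and a dump taken at that point is the one worth analysing.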