A question: what is legacy?

Sorry to interrupt. I found some distributed versions of the PGL examples, but they are in the legacy directory. So what is legacy, and how can I run a demo from the legacy examples, such as

thanks!

Some of the distributed demos in legacy run on PaddlePaddle <= 1.8.5 and PGL <= 1.2. You can still install those package versions to run the distributed demos in the legacy directory.

We are migrating the old distributed demos to PaddlePaddle >= 2.0 and PGL >= 2.1, which use dynamic computation graphs, as PyTorch does. The new distributed demos are coming soon.
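To check whether an existing environment already fits the legacy demos, the version bounds above can be turned into a small script. This is only a sketch: the helper names are made up for illustration, the pip distribution names `paddlepaddle`, `paddlepaddle-gpu` and `pgl` are assumed, and accepting PGL 1.2.x patch releases is an assumption rather than something stated above.

```python
from importlib import metadata

PADDLE_MAX = (1, 8, 5)  # PaddlePaddle <= 1.8.5, taken from the reply above
PGL_MAX = (1, 2)        # PGL <= 1.2, compared on major.minor only (assumption)


def parse_version(text):
    """Turn '1.8.5' or '2.0.0rc1' into a tuple of ints, ignoring any suffix."""
    parts = []
    for piece in text.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def fits_legacy(paddle_version, pgl_version):
    """True if both versions are within the bounds quoted for the legacy demos."""
    return (parse_version(paddle_version) <= PADDLE_MAX
            and parse_version(pgl_version)[:2] <= PGL_MAX)


def installed_version(*names):
    """Return the version of the first installed distribution, or None."""
    for name in names:
        try:
            return metadata.version(name)
        except metadata.PackageNotFoundError:
            continue
    return None


if __name__ == "__main__":
    paddle_v = installed_version("paddlepaddle", "paddlepaddle-gpu")
    pgl_v = installed_version("pgl")
    if paddle_v is None or pgl_v is None:
        print("PaddlePaddle or PGL is not installed in this environment")
    else:
        print("fits legacy demos:", fits_legacy(paddle_v, pgl_v))
```

A separate virtual environment for the legacy versions keeps them from clashing with a 2.x installation.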

Does the new version of PGL support metapath?

Sure, hetergraph has already been migrated. The old distributed random walk demos such as deepwalk/metapath2vec/ges overlap quite a bit, so we are combining them into a more flexible and scalable network embedding tool.
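For readers unfamiliar with what a metapath walk does, here is a minimal pure-Python sketch of a metapath-guided random walk on a toy heterogeneous graph. It does not use the PGL API; the function name, the dict-based graph layout and the author/paper example are all assumptions made for illustration.

```python
import random


def metapath_walk(adj, node_type, start, metapath, walk_len, rng=random):
    """Random walk that only steps to neighbours of the type the metapath expects.

    adj:       dict mapping node -> list of neighbour nodes
    node_type: dict mapping node -> type name
    metapath:  list of type names whose first and last entries match,
               e.g. ["author", "paper", "author"], so it can be repeated
    """
    if metapath[0] != metapath[-1]:
        raise ValueError("metapath must start and end with the same type")
    if node_type[start] != metapath[0]:
        raise ValueError("start node does not match the first metapath type")

    cycle = metapath[:-1]  # the type pattern repeats with this period
    walk = [start]
    while len(walk) < walk_len:
        wanted = cycle[len(walk) % len(cycle)]
        candidates = [n for n in adj.get(walk[-1], []) if node_type[n] == wanted]
        if not candidates:
            break  # dead end: no neighbour of the required type
        walk.append(rng.choice(candidates))
    return walk


if __name__ == "__main__":
    node_type = {"a1": "author", "a2": "author", "p1": "paper", "p2": "paper"}
    adj = {"a1": ["p1", "p2"], "a2": ["p1"], "p1": ["a1", "a2"], "p2": ["a1"]}
    print(metapath_walk(adj, node_type, "a1", ["author", "paper", "author"], 7))
```

The walks produced this way would then be fed to a skip-gram style model, as in deepwalk, to learn the embeddings.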

So can I explore metapath2vec in the newest version of PGL? When will you provide distributed demos for metapath2vec based on 2.0.0+?

thanks

> So can I explore metapath2vec in the newest version of PGL?

Feel free to do it.

> When will you provide distributed demos for metapath2vec based on 2.0.0+?

In the most optimistic case, it will be completed in March.

Hello, I just explored the distributed version of DeepWalk in your examples folder. The GPU-accelerated version of distributed training only supports data parallelism (no distributed embedding, compared with AliGraph), and the CPU version of distributed training cannot use CUDA acceleration (also no distributed embedding). Will you support training on very large graphs in the future?

The CPU version of distributed training already uses a distributed embedding.

https://github.com/PaddlePaddle/PGL/blob/main/examples/deepwalk/train_distributed_cpu.py

In the 2.0.0 example, the embedding does not seem to be distributed.

https://github.com/PaddlePaddle/PGL/blob/387d77cb9940fc3e1ed2a00af0bdb518550f91a2/legacy/examples/distribute_metapath2vec/model.py#L58

However, in the previous version, the embedding is distributed.

So should I use v1.8.5?

In the 2.0.0 example, setting `sparse=True` makes the embedding a sparse, distributed embedding table.
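To make "sparse and distributed embedding table" concrete, here is a toy pure-Python model of the idea: rows are spread across several parameter servers, and a worker only pulls and updates the rows for the node ids in its batch. This is a conceptual sketch, not Paddle's parameter-server implementation; the class, its methods and the modulo sharding rule are all assumptions for illustration. In Paddle 2.x the corresponding switch is the `sparse` argument of `paddle.nn.Embedding`.

```python
import random


class ShardedEmbeddingTable:
    """Toy embedding table whose rows live on several 'servers' (plain dicts)."""

    def __init__(self, num_servers, dim, seed=0):
        self.dim = dim
        self.shards = [dict() for _ in range(num_servers)]
        self._rng = random.Random(seed)

    def _row(self, node_id):
        # Sharding rule (assumed): node id modulo the number of servers.
        shard = self.shards[node_id % len(self.shards)]
        if node_id not in shard:
            # Rows are created lazily, so untouched ids cost no memory.
            shard[node_id] = [self._rng.uniform(-0.5, 0.5) / self.dim
                              for _ in range(self.dim)]
        return shard[node_id]

    def pull(self, node_ids):
        """Fetch copies of only the rows needed for this batch."""
        return {nid: list(self._row(nid)) for nid in set(node_ids)}

    def push(self, grads, lr):
        """Apply a sparse SGD update: only rows that received a gradient change."""
        for nid, grad in grads.items():
            row = self._row(nid)
            for i, g in enumerate(grad):
                row[i] -= lr * g


if __name__ == "__main__":
    table = ShardedEmbeddingTable(num_servers=2, dim=4)
    rows = table.pull([3, 8, 3])
    table.push({3: [1.0, 1.0, 1.0, 1.0]}, lr=0.1)
    print("rows per server:", [len(s) for s in table.shards])
```

Because no single machine holds the whole table, the number of nodes can grow beyond one machine's memory, which is the point of the distributed CPU mode discussed here.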

In the 2.0.0 example, the CPU version of distributed DeepWalk does not work.

    fleetrun --worker_num 1 --server_num 1 train_distributed_cpu.py
    grep: warning: GREP_OPTIONS is deprecated; please use an alias or script
    ----------- Configuration Arguments -----------
    gpus: None
    heter_worker_num: None
    heter_workers:
    http_port: None
    ips: 127.0.0.1
    log_dir: log
    nproc_per_node: None
    server_num: 1
    servers:
    training_script: train_distributed_cpu.py
    training_script_args: []
    worker_num: 1
    workers:

    INFO 2021-02-25 09:45:26,784 launch.py:298] Run parameter-sever mode. pserver arguments:['--worker_num', '--server_num'], cuda count:1
    INFO 2021-02-25 09:45:26,785 launch_utils.py:973] Local server start 1 processes. First process distributed environment info (Only For Debug):
    +=======================================================================================+
    | Distributed Envs                        Value                                         |
    +---------------------------------------------------------------------------------------+
    | PADDLE_PSERVERS_IP_PORT_LIST            127.0.0.1:40657                               |
    | TRAINING_ROLE                           PSERVER                                       |
    | PADDLE_GLOO_FS_PATH                     /tmp/tmpM2D7v4                                |
    | PADDLE_PORT                             40657                                         |
    | PADDLE_WITH_GLOO                        0                                             |
    | PADDLE_TRAINERS_NUM                     1                                             |
    | PADDLE_TRAINER_ENDPOINTS                127.0.0.1:40651                               |
    | POD_IP                                  127.0.0.1                                     |
    | PADDLE_GLOO_RENDEZVOUS                  3                                             |
    | PADDLE_GLOO_HTTP_ENDPOINT               127.0.0.1:42835                               |
    | PADDLE_HETER_TRAINER_IP_PORT_LIST                                                     |
    +=======================================================================================+

    INFO 2021-02-25 09:45:26,791 launch_utils.py:1041] Local worker start 1 processes. First process distributed environment info (Only For Debug):
    +=======================================================================================+
    | Distributed Envs                        Value                                         |
    +---------------------------------------------------------------------------------------+
    | PADDLE_PSERVERS_IP_PORT_LIST            127.0.0.1:40657                               |
    | TRAINING_ROLE                           TRAINER                                       |
    | PADDLE_GLOO_FS_PATH                     /tmp/tmpM2D7v4                                |
    | PADDLE_WITH_GLOO                        0                                             |
    | PADDLE_TRAINERS_NUM                     1                                             |
    | PADDLE_TRAINER_ENDPOINTS                127.0.0.1:40651                               |
    | XPU_VISIBLE_DEVICES                     0                                             |
    | PADDLE_TRAINER_ID                       0                                             |
    | FLAGS_selected_xpus                     0                                             |
    | FLAGS_selected_gpus                     0                                             |
    | CUDA_VISIBLE_DEVICES                    0                                             |
    | PADDLE_GLOO_RENDEZVOUS                  3                                             |
    | PADDLE_GLOO_HTTP_ENDPOINT               127.0.0.1:42835                               |
    | PADDLE_HETER_TRAINER_IP_PORT_LIST                                                     |
    +=======================================================================================+

    INFO 2021-02-25 09:45:26,797 launch_utils.py:903] Please check servers, workers and heter_worker logs in log/workerlog.*, log/serverlog.* and log/heterlog.*
    INFO 2021-02-25 09:45:27,176 launch_utils.py:914] all workers exit, going to finish parameter server and heter_worker.
    INFO 2021-02-25 09:45:27,176 launch_utils.py:926] all parameter server are killed

server

See the ./log dir for more detail.

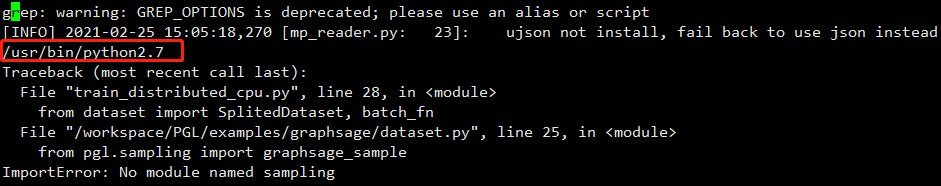

I found the problem, but I don't know how to solve it: `fleetrun` runs train_distributed_cpu.py with Python 2.7. I aliased python to python3.7 in ~/.bashrc and ran `source ~/.bashrc`, but the problem is still not solved.
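One thing that may be worth checking here: a shell alias only affects commands typed into the interactive shell, not the interpreter named on the shebang line of an installed script such as `fleetrun`. The sketch below prints which interpreter the `fleetrun` on PATH points to. It assumes a Unix-like system where `fleetrun` is a text script with a shebang line; the helper name is made up for illustration.

```python
import shutil
import sys


def script_interpreter(script_path):
    """Return the interpreter named on the script's shebang line, or None."""
    with open(script_path, "rb") as handle:
        first_line = handle.readline().decode("utf-8", errors="replace").strip()
    if not first_line.startswith("#!"):
        return None
    return first_line[2:].strip()


if __name__ == "__main__":
    print("this interpreter:", sys.executable)
    path = shutil.which("fleetrun")
    if path is None:
        print("fleetrun is not on PATH")
    else:
        print(path, "->", script_interpreter(path))
```

If the shebang names Python 2.7, then PaddlePaddle was probably installed with that interpreter's pip. Installing it with `python3.7 -m pip install paddlepaddle` and running the `fleetrun` from that installation might help. Another option to try, assuming the installed Paddle version exposes the launcher module under that name, is `python3.7 -m paddle.distributed.fleet.launch --worker_num 1 --server_num 1 train_distributed_cpu.py`.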

Hey, could we add each other on WeChat? luke_lh