Training data structure for fine-tuning for a chatbot

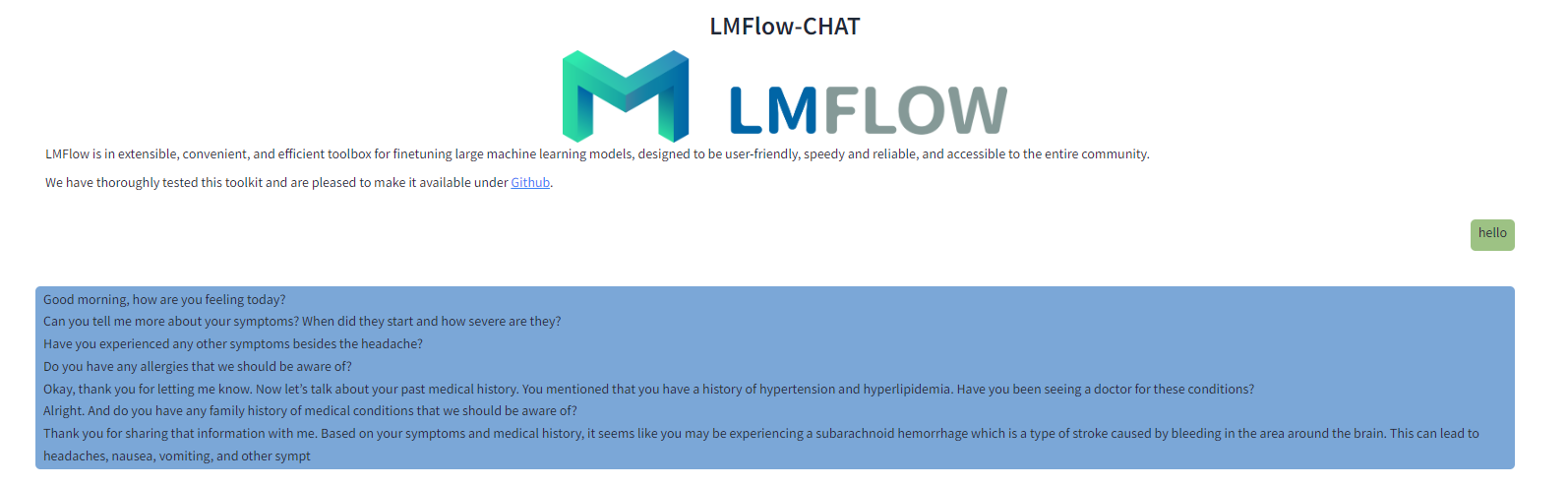

I would like to fine-tune a chatbot with LoRA on my own datasets. I used the 'text2text' structure, putting all questions in order as the input and all answers in order as the output, with the instruction at the beginning of the input.

I then tried the model with chatbot_gradio, but it does not answer correctly. Could you help me with the settings of this chatbot? Or do you have some examples of a chatbot training dataset, so I can adjust the instruction and structure of my data?

Thank you so much!

Thanks for your interest in LMFlow! It looks like the bot has not learned how to stop. To alleviate this problem, you can try adding a customized end mark at the end of each training sample, e.g.

{ "input": "Hello", "output": "Hi, I am your chatbot." }

=>

{ "input": "Hello", "output": "Hi, I am your chatbot.###" }

Then pass --end_string "###" to examples/run_chatbot.py, which will use "###" to identify the end of the response and stop talking. To implement this easily, you may consider using our data preprocessing script scripts/data_preprocess/add_end_mark.py,

cat {path-to-your-data-json-file} \
  | python scripts/data_preprocess/add_end_mark.py --end_mark {your-customized-end-mark} \
  > {path-to-output-preprocessed-data-json-file}

Hope that answers your question. Thanks 😄
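The preprocessing step described above amounts to appending the end mark to every output. A minimal sketch, assuming the text2text samples are available as a list of dicts with "input" and "output" keys; `add_end_mark` here is a hypothetical helper for illustration, not the actual code of scripts/data_preprocess/add_end_mark.py.

```python
def add_end_mark(instances, end_mark="###"):
    """Return a copy of the samples with end_mark appended to each output."""
    marked = []
    for sample in instances:
        output = sample["output"]
        # Avoid doubling the mark if the sample already ends with it.
        if not output.endswith(end_mark):
            output = output + end_mark
        # Copy the sample so the caller's data is left untouched.
        marked.append({**sample, "output": output})
    return marked


samples = [{"input": "Hello", "output": "Hi, I am your chatbot."}]
print(add_end_mark(samples))
```

Running the helper twice on the same data leaves it unchanged, which is convenient when a dataset is preprocessed more than once.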

{"instruction": "If you are a Symptom Checker, please give the most appropriate diagnosis and department recommendations based on the patient's symptoms and medical history.", "input": "I had been going through a lot of pain, and it just got worse with time. My left lower extremity was infected and swollen, and I couldn't walk or move around without feeling immense discomfort.", "output": "Based on your medical history and symptoms mentioned above such as infection in your leg followed by sepsis leading towards respiratory failure can lead us to make possible diagnoses like severe sepsis with shock-like symptoms caused by an unspecified organism which may have advanced into acute respiratory failure further complicating your health issues."}

My data's structure is like this. How could I add the 'instruction' into the text to make it 'text2text' style and use it for training the chatbot? Thank you.

The easiest way is to concatenate the instruction and the input content to form a new input. A better way is to add a prompt structure, so that the bot knows which part is the instruction and which part is the input. For example,

{
"input": "###Instruction: If you are a Symptom Checker, please give the most appropriate diagnosis and department recommendations based on the patient's symptoms and medical history. ###Input: I had been going through a lot of pain, and it just got worse with time. My left lower extremity was infected and swollen, and I couldn't walk or move around without feeling immense discomfort.",
"output": "Based on your medical history and symptoms mentioned above such as infection in your leg followed by sepsis leading towards respiratory failure can lead us to make possible diagnoses like severe sepsis with shock-like symptoms caused by an unspecified organism which may have advanced into acute respiratory failure further complicating your health issues."
}

Hope that helps 😄
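The conversion suggested above can be sketched in a few lines of Python. This is only an illustration: `to_text2text` is a hypothetical helper name, and it assumes each record is a dict with "instruction", "input", and "output" keys, as in the sample posted earlier.

```python
def to_text2text(record):
    """Fold the instruction into the input using the ###Instruction / ###Input markers."""
    prompt = f"###Instruction: {record['instruction']} ###Input: {record['input']}"
    return {"input": prompt, "output": record["output"]}


record = {
    "instruction": "If you are a Symptom Checker, please give a diagnosis.",
    "input": "My left leg is swollen.",
    "output": "This may indicate an infection.",
}
print(to_text2text(record))
```

The marker strings themselves are a free choice; what matters is that the same markers are used again at inference time.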

{ "input": "###Instruction: ....\n\n###human: ....\n\n###chatbot: ....\n\n###human: ....\n\n###chatbot: ....\n\n###human: .....\n\n###chatbot:",

"output": ".....###" }

Thank you very much for the explanation. I am still a little confused about the training data structure for a chatbot. For example, here I have a multi-round conversation used as training data. Should I feed it to the model as I showed before, with the end_mark at the end?

{"input": "###Instruction: ....\n\n###human: ....\n\n###:chatbot:", "output": ".....###"}
{"input": "###Instruction: ....\n\n###human: ....\n\n###:chatbot:", "output": ".....###"}
{"input": "###Instruction: ....\n\n###human: ....\n\n###:chatbot:", "output": ".....###"}

or should I split them as pairs of and

There are many ways to handle a multi-round conversation dataset.

One option, given a "Q1 A1 Q2 A2 Q3 A3"-style dataset, is to create three samples in "text2text" format, e.g.

{ "input": "### Instruction: ... ### human: Q1 ### chatbot: ", "output": "A1 ###" }

{ "input": "### Instruction: ... ### human: Q1 ### chatbot: A1 ### human: Q2 ### chatbot: ", "output": "A2 ###" }

{ "input": "### Instruction: ... ### human: Q1 ### chatbot: A1 ### human: Q2 ### chatbot: A2 ### human: Q3 ### chatbot: ", "output": "A3 ###" }

This way no context information will be lost. Hope that helps 😄
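The expansion above can be automated. A minimal sketch, assuming a conversation is given as a list of (question, answer) pairs; `build_multi_round_samples` is a hypothetical helper written for this thread, not part of LMFlow.

```python
def build_multi_round_samples(instruction, turns, end_mark="###"):
    """Create one text2text sample per round, each carrying the full history."""
    samples = []
    history = f"### Instruction: {instruction} "
    for question, answer in turns:
        # The prompt ends right where the bot is expected to start talking.
        prompt = f"{history}### human: {question} ### chatbot: "
        samples.append({"input": prompt, "output": f"{answer} {end_mark}"})
        # The answer becomes part of the context for the next round.
        history = f"{prompt}{answer} "
    return samples


for sample in build_multi_round_samples("...", [("Q1", "A1"), ("Q2", "A2"), ("Q3", "A3")]):
    print(sample)
```

A conversation with N rounds yields N samples, so the dataset grows, but every answer is trained with its complete preceding context.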

python ./examples/chatbot.py --deepspeed configs/ds_config_chatbot.json --model_name_or_path ./finetuned_models/finetune_with_sym_chat --prompt_structure "###Instruction: If you are a Symptom Checker, please give the most appropriate diagnosis and department recommendations based on the patient's symptoms and medical history.###Human: {input_text}###Chatbot: " --end_string "###" --max_new_tokens 200

Thanks! It helps a lot! But sometimes it seems that the chatbot cannot recognize the end_mark correctly.

The end mark is the string "###", so a single character "#" won't stop the chatbot. You may use --end_string "#", which will use "#" as the stop sign. Hope that helps 😄
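To see why a lone "#" does not stop the bot when the end string is "###", consider a simplified model of the truncation. This sketch assumes the response is cut at the first occurrence of the end string; `truncate_at_end_string` is a hypothetical function and may differ from what the chatbot script actually does.

```python
def truncate_at_end_string(response, end_string):
    """Cut the response at the first occurrence of end_string, if there is one."""
    index = response.find(end_string)
    return response if index == -1 else response[:index]


# The full mark is found and everything after it is dropped.
print(truncate_at_end_string("I am fine.### human: next question", "###"))
# A single '#' does not match '###', so nothing is cut.
print(truncate_at_end_string("I am fine.# and the bot keeps talking", "###"))
# With '#' as the end string, the same response is cut.
print(truncate_at_end_string("I am fine.# and the bot keeps talking", "#"))
```

Note that a shorter end string such as "#" also cuts legitimate answers that happen to contain that character.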

I tried with --end_string "#". It works, but sometimes the chatbot just repeats the same sentences. I am sure I put '###' as the end mark in all training data, as shown before. It is strange that the chatbot answers with only a single '#' included.

{ "input": "### Instruction: ... ### human: Q1 ### chatbot: ", "output": "A1 ###" }
{ "input": "### Instruction: ... ### human: Q1 ### chatbot: A1 ### human: Q2 ### chatbot: ", "output": "A2 ###" }
{ "input": "### Instruction: ... ### human: Q1 ### chatbot: A1 ### human: Q2 ### chatbot: A2 ### human: Q3 ### chatbot: ", "output": "A3 ###" }

@research4pan Hi!

I faced a similar problem when fine-tuning llama-13b on alpaca, i.e. the model answered a question and then imagined a new one on its own and continued to answer it.

Why did the model not learn how to stop? Is this because I trained it the wrong way? Or is it because the model is not strong enough to learn to stop at the right place?

I’m glad to see that you added a new script, add_end_mark.py, that preprocesses the training data so the output can be truncated at inference. However, this seems to be a post-hoc fix.

I would like to know why the model does not stop correctly, given that the EOS token is in the vocabulary at training time.

Thanks a lot.

Thanks for your interest in LMFlow! In the current implementation, not all models insert the EOS token at the end of the sentence after tokenization. Thus, for some models, the bot may not automatically learn how to stop. A temporary measure is to add customized end marks. We will fix this issue in the near future. Thanks for your suggestion 😄

It is possible that the bot has learned to repeat the context (the conversation history). If there is a "#" in the conversation history, the bot may blurt out the same character.

To avoid this repetition behavior, you may raise the repetition penalty of the inferencer, or turn on --do_sample 1 for the chatbot. Hope that helps.

I am still a little confused about the prompt_structure. For example here in alpaca training data,

"text": "Input: Instruction: Give three tips for staying healthy. \n Output: 1.Eat a balanced diet and make sure to include plenty of fruits and vegetables. \n2. Exercise regularly to keep your body active and strong. \n3. Get enough sleep and maintain a consistent sleep schedule. \n\n"

This is 'text only' data, and it puts the instruction inside the text as the input. Why not use the 'text2text' type here and put the 'Instruction:' in the input? When I use chatbot.py with a model trained on alpaca, what should I use as the prompt_structure, since there are no end marks? And if I train this model on more datasets, should I keep exactly the same prompt_structure as in the alpaca data?

Thank you very much for your patience.

Hi, you can definitely use text2text. Feel free to try.

As long as the prompt structure in inference is the same as that of training, it is fine.
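One way to keep the two in sync is to build both the training inputs and the inference prompts from a single template. A minimal sketch, assuming a template with an `{input_text}` placeholder like the one passed to --prompt_structure earlier in this thread; `PROMPT_STRUCTURE`, `build_prompt`, and `make_training_sample` are hypothetical names.

```python
# Single source of truth for the prompt layout (an assumed example template).
PROMPT_STRUCTURE = "###Human: {input_text}###Chatbot: "
END_MARK = "###"


def build_prompt(input_text, prompt_structure=PROMPT_STRUCTURE):
    """Fill the user's text into the shared prompt template."""
    return prompt_structure.format(input_text=input_text)


def make_training_sample(question, answer):
    """Build a text2text sample whose input matches the inference-time prompt."""
    return {"input": build_prompt(question), "output": answer + END_MARK}


print(make_training_sample("Hello", "Hi, I am your chatbot."))
print(build_prompt("How are you?"))
```

If the template ever changes, both training data and inference prompts change together, which avoids a silent mismatch.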

Thank you

How am I supposed to structure my custom dataset for the llama2-7b-chat model?

text2text is good for chat.

Here is a tutorial: https://optimalscale.github.io/LMFlow/examples/DATASETS.html

This issue has been marked as stale because it has not had recent activity. If you think this still needs to be addressed please feel free to reopen this issue. Thanks