OpenCTI Worker performance

Prerequisites

- [x] I read the Deployment and Setup section of the OpenCTI documentation as well as the Troubleshooting page and didn't find anything relevant to my problem.

- [x] I went through old GitHub issues and couldn't find anything relevant

- [x] I googled the issue and didn't find anything relevant

Description

Hi there, I'm running OpenCTI in Kubernetes (doing a writeup about it, will send you a link :)). I use KEDA to scale my workers based on the number of messages in the push_* queues, with a maximum of 10 workers.

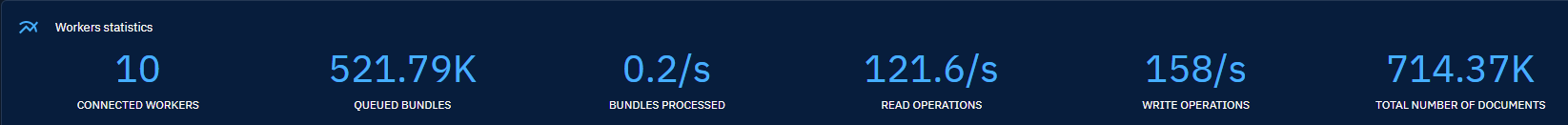

However, scaling up the workers does not seem to affect the bundles processed statistic, so it is not reaching the intended goal. Is there something I am missing here? I provide ample CPU and memory for the workers, but they don't seem to use much of it in general.

Environment

- OS (where OpenCTI server runs): Kubernetes

- OpenCTI version: 5.2.4

Hello @ThisIsNotTheUserYouAreLookingFor,

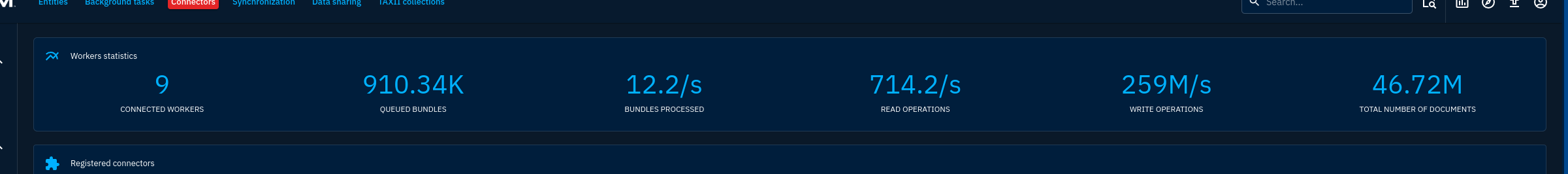

I think you should decrease your number of workers and increase the sizing of your Elastic stack. On simple 3 nodes clusters, we have this kind of performances:

You can also read https://medium.com/luatix/opencti-platform-performances-e3431b03f822?source=collection_home---2------6----------------------- to better understand the way we handle performance issues.

Kind regards, Samuel

Hi @SamuelHassine, I'm using an ES Cloud instance, but I'll check whether that could be the cause and update the issue!

I checked with an on-prem Elasticsearch cluster in a lab, and it is still not handling those levels of processed bundles/s. I lowered the workers to 9, but it seems that each worker processes bundles sequentially and takes up to 3 seconds to write the info to ES. Is there any connection pooling parameter somewhere that I might be forgetting about?
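As a rough sanity check on that observation, here is a back-of-the-envelope sketch. It assumes each worker really handles one bundle at a time and that every bundle takes the worst-case 3 seconds; the function name is only for illustration and is not part of OpenCTI.

```python
def max_bundles_per_second(workers: int, seconds_per_bundle: float) -> float:
    """Upper bound on throughput when each worker handles one bundle at a time."""
    if workers < 0 or seconds_per_bundle <= 0:
        raise ValueError("workers must be >= 0 and seconds_per_bundle must be > 0")
    # Each worker finishes 1 / seconds_per_bundle bundles per second,
    # and the workers run in parallel with each other.
    return workers / seconds_per_bundle


# 9 workers, each taking up to 3 seconds per bundle (numbers from this comment)
print(max_bundles_per_second(9, 3.0))  # 3.0
```

Under those assumptions the ceiling is about 3 bundles/s, and adding workers only raises it if the per-bundle write time does not grow along with them.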

Can you share the OpenCTI config (minus the secrets ofc :)) you are using in that demo setup?

Found the issue! I'm running this setup in a K8s cluster, and I had a CPU limit on the OpenCTI API pod. After removing it, performance jumped to expected levels. Now the following question pops up: I can run 8 workers, but since they all use the OpenCTI API, can I scale the API pods up as well?
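For anyone who lands here with the same symptom: a Kubernetes CPU limit is enforced through the CFS quota, and the container's cgroup `cpu.stat` file counts how often the container was throttled. Below is a minimal sketch for reading that counter. It assumes cgroup v2 (the file lives at a different path on cgroup v1) and that you can run Python somewhere with access to the pod's cgroup; the helper name `throttled_ratio` is made up for this example.

```python
from pathlib import Path


def throttled_ratio(cpu_stat_text: str) -> float:
    """Fraction of CFS enforcement periods in which the cgroup was throttled."""
    stats = {}
    for line in cpu_stat_text.splitlines():
        parts = line.split()
        # cpu.stat lines look like "nr_throttled 50"
        if len(parts) == 2 and parts[1].isdigit():
            stats[parts[0]] = int(parts[1])
    periods = stats.get("nr_periods", 0)
    if periods == 0:
        # No CPU limit set, or no enforcement periods elapsed yet
        return 0.0
    return stats.get("nr_throttled", 0) / periods


if __name__ == "__main__":
    # Assumption: cgroup v2 mount point as seen from inside the container
    path = Path("/sys/fs/cgroup/cpu.stat")
    if path.exists():
        print(f"throttled in {throttled_ratio(path.read_text()):.1%} of periods")
```

A ratio well above zero on the API pod would be consistent with what I saw before removing the limit, although I did not capture that number at the time.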