osmcha-frontend


Missing changes in long open/multi-upload, large bbox changesets

I'm submitting a bug report

Brief Description

Not all changes are shown for changesets with a large bounding box and a long open time span with multiple uploads, e.g. 133929698 (OSM).

What is the current behaviour ?

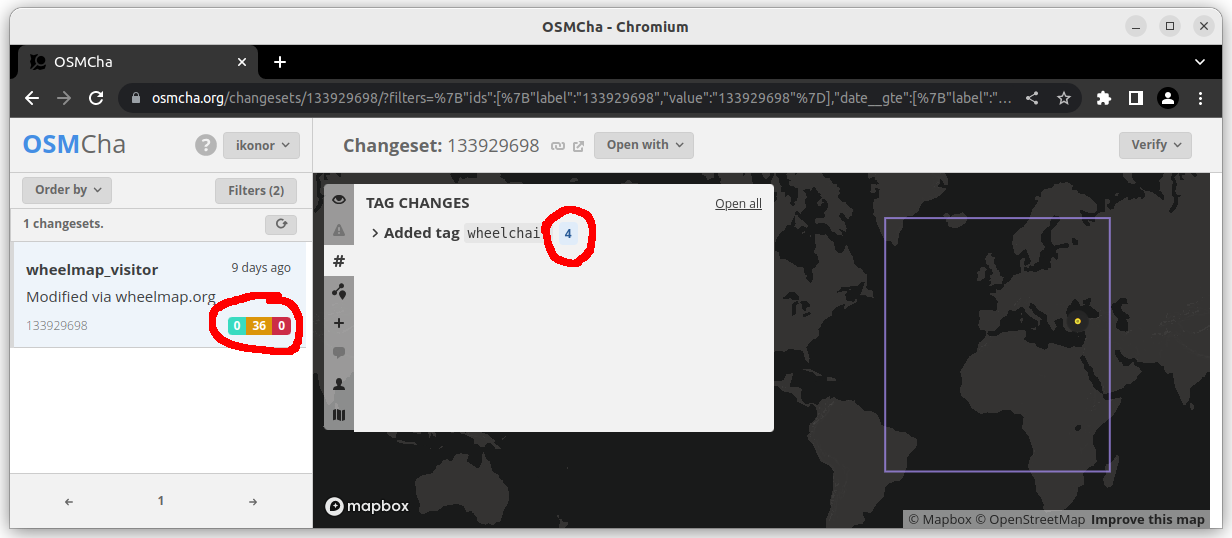

The total changes count for a changeset does not match the number of changes shown on the map and in the changes tabs ("tag changes" only in this case).

Out of 100 changesets in a sample I took from user wheelmap_visitor:

- 37 have missing changes

- 4 are empty (#650)

- 4 with a bbox > 1 deg² are complete

What is the expected behaviour ?

As in #650, I would assume this has worked in the past, but don't really know in this case.

When does this occur ?

Might be the same root cause as in #650. Cases in the sample start with a bbox size larger than about 6 "square degrees" (simple width * height from bbox coordinates).

An additional condition for missing changes seems to be that a changeset is kept open for a long time, > 4500 seconds (75 minutes) in this sample, and/or that there are multiple uploads. The Wheelmap editor rosemary apparently immediately uploads each object change and a changeset stays open until it times out after an hour.
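The two measurements used above can be reproduced with a small sketch. The function names and the sample values are illustrative assumptions; only the "width * height" formula and the idea of measuring how long a changeset stayed open come from this report.

```python
from datetime import datetime

TIME_FORMAT = "%Y-%m-%dT%H:%M:%SZ"  # timestamp style used in this thread


def bbox_size_deg2(min_lon, min_lat, max_lon, max_lat):
    """Simple width * height of a bbox in "square degrees" (no projection)."""
    return (max_lon - min_lon) * (max_lat - min_lat)


def open_seconds(created_at, closed_at):
    """Seconds between two UTC timestamps such as 2023-03-21T08:47:09Z."""
    start = datetime.strptime(created_at, TIME_FORMAT)
    end = datetime.strptime(closed_at, TIME_FORMAT)
    return (end - start).total_seconds()


# Made-up values, not taken from any changeset in the tables.
print(bbox_size_deg2(8.0, 47.0, 11.0, 49.0))  # 3.0 wide * 2.0 high
print(open_seconds("2023-03-21T08:00:00Z", "2023-03-21T09:15:00Z"))
```

Since this is plain degree arithmetic, the same bbox size covers very different ground areas at different latitudes; it only serves as a rough ordering here.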

How do we replicate the issue ?

- Click on the changeset id link in the examples below and compare total changes shown in the changeset list or tab with the number of changes in the "tag changes" tab (wheelmap_visitor only adds/modifies wheelmap tags).

The changes numbers in the table are taken from the S3 JSON: expected from `metadata.changes_count`, and actual by counting elements with the same changeset id.
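The comparison can be sketched roughly as follows. The JSON layout is an assumption (a top-level `elements` list whose items carry a `changeset` field, plus `metadata.id` next to `metadata.changes_count`), and `count_changes` is a hypothetical helper name, so adjust it to the real file structure.

```python
def count_changes(changeset_json):
    """Return (expected, actual) change counts for one changeset document.

    Assumed layout: {"metadata": {"id": ..., "changes_count": ...},
                     "elements": [{"changeset": ...}, ...]}
    """
    metadata = changeset_json["metadata"]
    changeset_id = str(metadata["id"])
    expected = int(metadata["changes_count"])
    # Count only elements attributed to this changeset; ids are compared
    # as strings because they may be stored as either strings or numbers.
    actual = sum(
        1
        for element in changeset_json.get("elements", [])
        if str(element.get("changeset")) == changeset_id
    )
    return expected, actual


# Invented sample document, not real data.
sample = {
    "metadata": {"id": 1, "changes_count": 3},
    "elements": [{"changeset": "1"}, {"changeset": 1}, {"changeset": "2"}],
}
expected, actual = count_changes(sample)
print(f"expected={expected} actual={actual} missing={expected - actual}")
```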

Missing examples:

| changeset | | | changes actual | changes expected | open for (seconds) | bbox size (deg²) | editor |
|---|---|---|---|---|---|---|---|
| 133586098 | OSM | json | 2 | 6 | 6253 | 6 | rosemary v0.4.4 |
| 133875017 | OSM | json | 1 | 2 | 7068 | 8 | rosemary v0.4.4 |
| 133775713 | OSM | json | 7 | 12 | 12099 | 33 | rosemary v0.4.4 |
| 134031519 | OSM | json | 3 | 35 | 8535 | 154 | rosemary v0.4.4 |
| 133803143 | OSM | json | 1 | 5 | 4493 | 1485 | rosemary v0.4.4 |
| 134027190 | OSM | json | 1 | 2 | 5442 | 2877 | rosemary v0.4.4 |
| 134051281 | OSM | json | 4 | 369 | 37203 | 3374 | rosemary v0.4.4 |
| 133545073 | OSM | json | 35 | 88 | 27829 | 4470 | rosemary v0.4.4 |

Complete examples:

| changeset | | | changes actual | changes expected | open for (seconds) | bbox size (deg²) | editor |
|---|---|---|---|---|---|---|---|
| 133657644 | OSM | json | 3 | 3 | 4956 | 6 | rosemary v0.4.4 |
| 133763487 | OSM | json | 13 | 13 | 10199 | 6 | rosemary v0.4.4 |
| 133567836 | OSM | json | 2 | 2 | 4167 | 7 | rosemary v0.4.4 |

Other Information / context:

Related:

- #650

Thanks for the detailed report, @nrenner !

> Not all changes are shown for changesets with a large bounding box and a long open time span with multiple uploads, e.g. 133929698 (OSM).

In that case, the bbox and the time the changeset stayed open are so large that the Overpass query probably timed out, so the S3 file could not be updated when the changeset was closed.

I can see that https://s3.amazonaws.com/mapbox/real-changesets/production/133929698.json has `open: true` yet and the latest feature change timestamp is 2023-03-21T08:47:09Z.

I checked and the overpass query we execute is the same as achavi does, but our overpass server doesn't respond to such a large query.

@willemarcel thanks for the quick response and checking!

> I can see that https://s3.amazonaws.com/mapbox/real-changesets/production/133929698.json has `open: true` yet

Actually, all wheelmap_visitor changesets, even the working ones, are still flagged as `open: true` and don't have a `closed_at` timestamp in the S3 JSON. Examples are this single-object upload with a zero-size bbox that stayed open for one hour, 134041593.json (OSM), and the ones in the second "complete" table above. The `changes_count` is always correct, though.

> I checked and the overpass query we execute is the same as achavi does

Probably not exactly the same? Both achavi and the changeset-map fallback queries use the full created_at to closed_at time range of the changeset. If that query fails, there will be no changes, as in #650. So I wonder why the cases in this issue do have some changes.

The setup described in the geohacker diary uses Augmented Diffs:

> Overpass offers augmented diffs between two timestamps that contains current and previous versions of each feature that changed in that period. We put together an infrastructure that queries Overpass minutely, prepares changeset representation as a JSON, and stashes them on S3.

Those Overpass minutely augmented diffs can be queried either by sequence id (like OSM minutely diffs): http://overpass-api.de/api/augmented_diff?id=5546258 or using a query (see http://overpass-api.de/api/augmented_diff?id=5546258&debug=yes):

    [adiff:"2023-03-30T20:33:00Z","2023-03-30T20:34:00Z"];
    (
      node(changed:"2023-03-30T20:33:00Z","2023-03-30T20:34:00Z");
      way(changed:"2023-03-30T20:33:00Z","2023-03-30T20:34:00Z");
      rel(changed:"2023-03-30T20:33:00Z","2023-03-30T20:34:00Z");
    );
    out meta geom;

So basically also an adiff query like in achavi and changeset-map, but world-wide, without a bbox, and with a time range of one minute.
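For illustration, a query of this shape can be generated for any minute with a few lines of Python. `minutely_adiff_query` is a hypothetical helper name, and the sketch only builds the query text shown above; it does not contact any Overpass server.

```python
from datetime import datetime, timedelta

TIME_FORMAT = "%Y-%m-%dT%H:%M:%SZ"


def minutely_adiff_query(start):
    """Build a world-wide adiff query covering one minute from `start` (UTC)."""
    end = start + timedelta(minutes=1)
    s, e = start.strftime(TIME_FORMAT), end.strftime(TIME_FORMAT)
    lines = [f'[adiff:"{s}","{e}"];', "("]
    # No bbox filter: every node, way and relation changed in the window.
    for kind in ("node", "way", "rel"):
        lines.append(f'  {kind}(changed:"{s}","{e}");')
    lines += [");", "out meta geom;"]
    return "\n".join(lines)


print(minutely_adiff_query(datetime(2023, 3, 30, 20, 33)))
```

The time window is fixed at one minute regardless of how long any individual changeset stays open, which is the property the argument in this comment relies on.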

The osm-adiff-parser would then group all changes within a minute by changeset and update the S3 JSONs of those.
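The grouping step could look roughly like the sketch below. This is not osm-adiff-parser's actual code; the element layout (dictionaries with a `changeset` key) and the function name are assumptions made for the example.

```python
from collections import defaultdict


def group_by_changeset(elements):
    """Group the changed elements of one minutely diff by changeset id."""
    groups = defaultdict(list)
    for element in elements:
        groups[element["changeset"]].append(element)
    return dict(groups)


# Invented elements standing in for one minute of world-wide changes.
minute_of_changes = [
    {"type": "node", "id": 1, "changeset": "100"},
    {"type": "way", "id": 2, "changeset": "200"},
    {"type": "node", "id": 3, "changeset": "100"},
]
for changeset_id, changes in group_by_changeset(minute_of_changes).items():
    # Each group would then be merged into that changeset's S3 JSON.
    print(changeset_id, len(changes))
```

A changeset with several uploads spread over an hour would simply show up in several minutely groups, each appended to the same file.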

In this process, the bbox or duration of individual changesets doesn't matter at all. Which is why I considered this process superior to individual changeset queries. Issues like #650 and this shouldn't occur.

Therefore I wonder if this setup has changed and why?