Performance - OData response serialization is very expensive in CPU and memory

OData response serialization is very expensive in CPU and allocations.

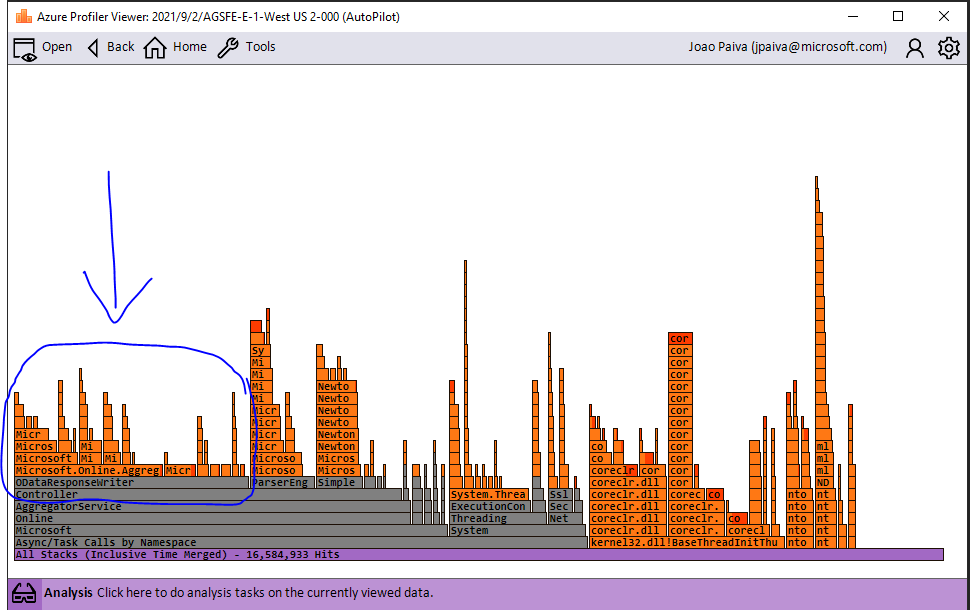

In AGS the cost of CPU to serialize responses is 25%, out of which, approximately 20% is coming from Microsoft.OData.Core.dll. The cost of allocations inside this callstack serializing the response, coming from Microsoft.OData.Core.dll, is approximately 45% (though some of this cost is improved in 7.9.1).

As described in this very thorough experiment that @habbes conducted there is a lot of opportunity for improvement. For example, I think buffering will render significant improvements for latency and CPU. It is important to avoid writing very small chunks to the stream (which in the case of AGS is a network stream) because that adds significant overhead, blocking on IO, etc. Furthermore, AGS currently uses the synchronous flavor of serialization API but it should really be using async to avoid blocking threads on network IO. However, the async overhead is more prominent when buffering is not present, so having buffering will help AGS move to async, hopefully without any major drawbacks.

We also need to try reduce the excessive number of allocations. For example there seems to be an excessive usage of lambdas that capture enclosing context. This leads to a lot of allocations. On this note, I will continue looking into the data to narrow down the most expensive allocations in this path and share.

CPU cost:

Memory cost:

Assemblies affected

Microsoft.OData.Core.dll

Reproduce steps

Exercise OData response serialization API, and compare to System.Text.Json.

Expected result

CPU and allocations should be more approximate to System.Text.Json.

Actual result

Excessive use of CPU and allocations.

I just contributed a PR for this, but only with one half of my changes. Those shouldn't be controversial. The other change I'm suggesting might be.

When I was analyzing the memory usage for a project using Odata, I noticed that the AsyncBufferedStream buffers the entire response before it sends it to the client. Is there a reason for this? From what I gathered from this and other performance related issues, this was done to reduce the number of writes to the underlying stream, because in case of a network stream, this would be very costly.

However, buffering the whole response is very expensive in terms of memory when dealing with large Odata responses (read: megabytes).

I went ahead and used the experiment from @habbes and replaced the AsyncBufferedStream with the BufferedStream which is available in .Net. I was astonished to see that this has not only some impact on memory, but improves the speed of the async implementation significantly (see Benchmark.md for the whole benchmark).

Here is an excerpt from the benchmarks I took:

| Method | Job | UnrollFactor | dataSize | Mean | Error | StdDev | Median | Ratio | RatioSD | Gen 0 | Gen 1 | Gen 2 | Allocated |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Original | Job-CRROQW | 1 | 10000 | 548.185 ms | 6.1334 ms | 5.4371 ms | 546.844 ms | ? | ? | 19000.0000 | 1000.0000 | - | 161366.68 KB |

| Async Improvements | Job-NSTZLY | 1 | 10000 | 414.704 ms | 1.7958 ms | 1.6798 ms | 414.285 ms | ? | ? | 14000.0000 | 1000.0000 | - | 122237.91 KB |

| Improvements+BufferedStream | Job-LXFLUL | 1 | 10000 | 377.662 ms | 8.1253 ms | 23.9577 ms | 388.532 ms | ? | ? | 14000.0000 | 1000.0000 | - | 116504.63 KB |

I used the standard constructor for the stream and ran these tests on .NET 5.

The only real drawback I currently see is that the BufferedStream is not available in .NET Standard 1.1, but that could be worked around.

Any thoughts on this?

Interesting findings @henning-krause!

Writing very small chunks to Network stream is not good either. But caching the entire payload in memory, regardless of the size, it can put a lot of memory pressure because of large payloads. Not good either.

@habbes is AsyncBufferedStream the stream being used for async writes? Looking into the code it seems we cache a queue of DataBuffer and when Flush is invoked it writes all to the underlying stream? I think writing to the underlying stream whenever the DataBuffer is filled, with 80kb, is a better solution/compromise.

The question is, why is the AsyncBufferedStream required at all. The .net BufferedStream is far more optimized and allows a better Async utilization. The AsyncBufferedStream currently lacks optimizations in this area.

The question is, why is the AsyncBufferedStream required at all. The .net BufferedStream is far more optimized and allows a better Async utilization. The AsyncBufferedStream currently lacks optimizations in this area.

For sure. Assuming there are no drawbacks with the BufferedStream in place of this custom implementation, IMO it should be leveraged. And for .NET standard 1.1 we could keep AsyncBufferedStream.

If no one objects, I will draft a PR next weekend...

@habbes Is the new STJ based writer enabled by default? Also, is converting the reader to use STJ on the roadmap? We see a large performance impact when using OData deserialization even compared to JSON.NET.