FIX: Ngnix fails to start if custom location upstream is unavailable/unreachable

:warning: Potentially breaking change: Upstream services with special configurations to compensate for not being hosted at root of domain are affected (e.g. Sonarr & Radarr). See section A potential problem below for an explanation.

Issue:

Currently, if a Proxy Host has a custom location and the host being forwarded to is down, Nginx fails to start. See here, here and here for examples of others who have encountered this issue.

Impact:

In this scenario:

- All other hosts managed by NPM are now also taken out.

- NPM cannot be started in order to disable or edit the host configuration in order to rectify the issue.

Solution:

Declare a variable for forward_host instead of simply injecting it directly into the proxy_pass directive.

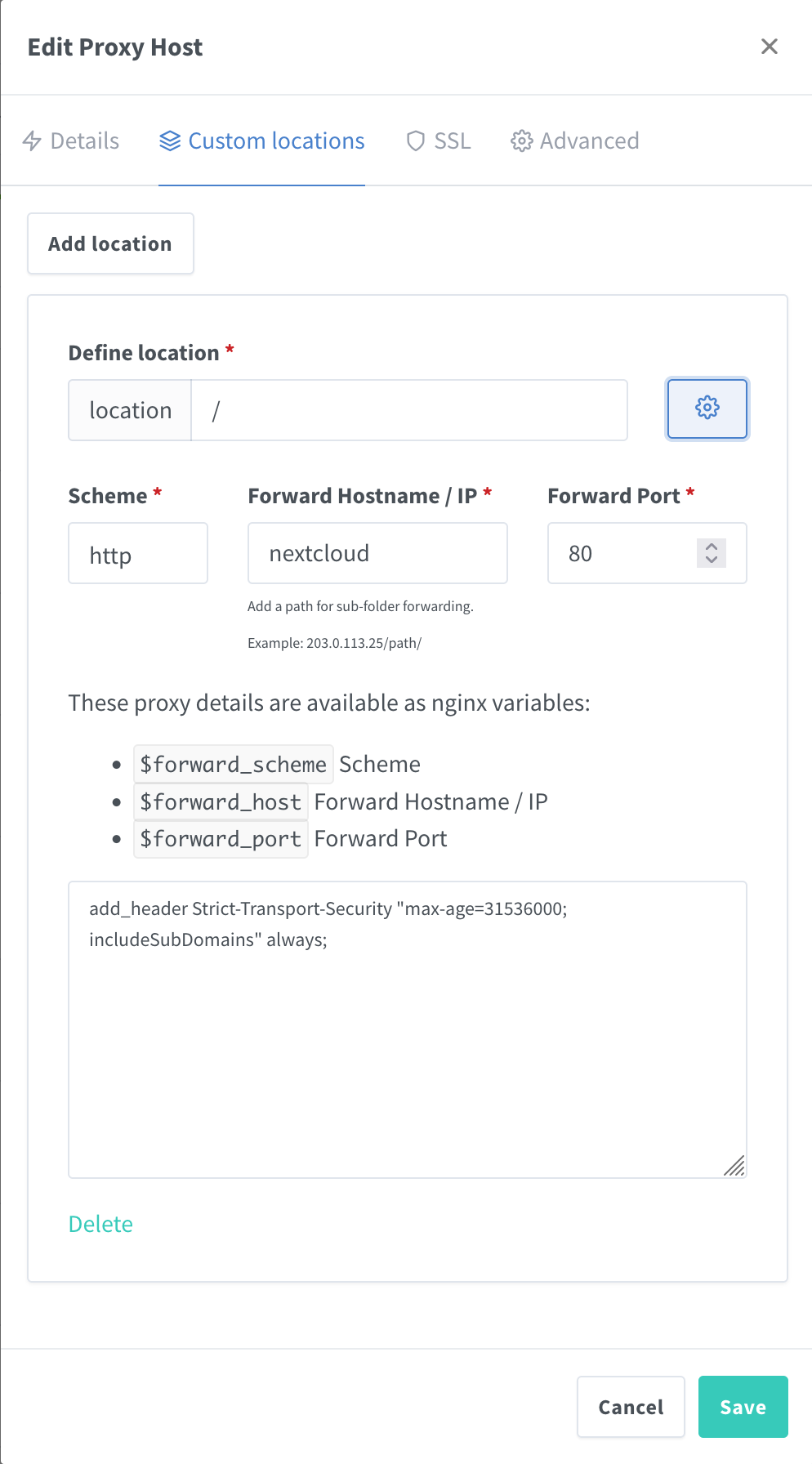

Although not strictly necessary to fix the issue, this PR also adds similar variables for forward_scheme and forward_port as this keeps things consistent and these variables could be useful in any custom elements an end-user might want to add.

Handling paths and GET params properly:

With further testing and reading I discovered that the Nginx proxy_pass directive does not gracefully handle paths when using variables (source):

Changing

proxy_pass https://localhost:5000/api/;to

set $upstream https://localhost:5000; proxy_pass $upstream/api/;you might think should result in exactly the same, you might be surprised. The former will hit your upstream server with /api/foo?bar=baz with our example request to /webapp/foo?bar=baz. The latter, however, will hit your upstream server with /api/. No foo. No bar. And no baz. :-(

I also found that @chaptergy had attempted something similar in the past, but reverted it because of this issue. @chaptergy I believe the approach I have found works well. It certainly works in my testing. I'd appreciate your second opinion & testing though.

Testing:

It can be a bit tricky testing this because it isn't always obvious exactly what request is being passed to the upstream. For my testing, I used http-https-echo as my upstream as it simply shows the details of the request being passed through directly in the browser. Super handy.

A potential problem:

For one of my proxy hosts, I had a custom location: /sonarr with the forward hostname set to sonarr.

It turns out that prior to this change, the proxy_pass directive was automatically including the custom location path /sonarr in the proxied request to the upstream Sonarr.

This meant that in Sonarr's configuration, I had configured the property URL Base to /sonarr to compensate.

With this PR, the proxy_pass directive now actually does what the NPM UI describes and so uses the path specified by the user in the Forward Hostname / IP field instead. If no path is provided there, then no path is used in the upstream request.

This means that with this change in place, I either have to set the URL Base property in Sonarr back to the default / or set the Forward Hostname / IP field in NPM to sonarr/sonarr.

This also affects Radarr in the same way.

Conclusion:

Personally, I think this PR actually makes NPM behave in a way that is more flexible, explicit and in keeping with what the UI actually presents. However, I'm pretty sure that this makes it a potentially breaking change for many users who have 'worked around' this issue by configuring their upstream systems to recognise that they are not hosted at the root of the domain. It also needs some decent testing by the community.

On the flip side, this PR would:

- Make it less likely that less experienced users would get tripped up by NPM silently adding a path to upstream requests and make compensating configuration at the upstream service unnecessary.

- Enable compatibility with upstream services that are not able to be configured to operate at non-root paths.

Having done a bit more reading, I think there may be some issues with this fix. Specifically, I suspect that it will not handle paths and request parameters correctly. Article on the topic here: https://dev.to/danielkun/nginx-everything-about-proxypass-2ona#let-nginx-start-even-when-not-all-upstream-hosts-are-available I'm going to do more testing.

Ok, so I think I have found an approach that works.

I trim the location path off of the nginx $request_uri variable and use logic at the LiquidJS templating layer to determine whether or not slashes needed to be added. There are four different permutations depending on whether or not the user adds a trailing slash to the location path or the forwarding path.

The $request_uri variable includes both the requested path, but also any GET parameters and so this approach handles both.

Currently, if a Proxy Host has a custom location and the host being forwarded to is down, Nginx fails to start.

FYI: nginx also fails to start if the destination of a stream is a hostname. I'm assuming your fix only fixes the startup issue of proxy hosts with a hostname?

FYI: nginx also fails to start if the destination of a stream is a hostname.

I didn't know that. I've never played with the stream function. Perhaps I'll take a look. EDIT: I have now raised a PR which I believe should fix the issue: https://github.com/NginxProxyManager/nginx-proxy-manager/pull/2714

I'm assuming your fix only fixes the startup issue of proxy hosts

That's correct.

Nuts. I've just found an upstream container in my setup where this change causes a redirect loop. Investigating...

EDIT: I found the issue: It's actually not broken. Rather, this PR fixes something else that I didn't even realise was broken and I had worked around. The workaround was causing the redirect loop. See section A potential problem in the PR description (top comment) for an explanation.

Found a bug in the PR:

If both the location path lacks a trailing slash and there is no forward path specified, the regex match doesn't work as desired and so the $uri_remainder variable ends up as the location path.

It's late here and I'm tired, so going to pick this up another time. I have a feeling that there's a neater & more reliable way to trim the location path off the front of the $request_uri variable in Nginx.

Hey, good to see this issue being worked on! I noticed the exact problem for a container that I occasionaly stop. Whats the best manual workaround for now?

Edit: I am seeing your comments on other issues and using the advice there. Thanks for helping with this!

Docker Image for build 7 is available on DockerHub as jc21/nginx-proxy-manager:github-pr-2672

Note: ensure you backup your NPM instance before testing this PR image! Especially if this PR contains database changes.

Would be great if this PR could be considered. It's a pain having 20 proxy targets while some can occasionally die which is ok. During runtime it will be a 503 which is perfectly fine. But on restart npm won't start and all 20 proxy targets are down.

Using IP addresses is not a solution. It won't work good with docker or kubernetes for which npm should be designed for?

Echoing TheTrueRandom, every time there's a broken update, such as in NextCloud, it takes down everything else with it, if NPM cannot start when unable to find an upstream host to proxy.

Would be great to be able to start NPM when some other containers are not on/present anymore.

Hi all, I'm sorry I went quiet on this one. Life has been busy and so I haven't had a chance to pick this back up.

I will try and make some time to look at improving this PR to a point where I am satisfied with it.

@xrh0905 Thanks for approving the change however, this PR still has some bugs so I don't think it's ready to submit for review. Life has been busy recently so I haven't had a chance to work on this PR.

I heard this issue has actually been fixed in Niginx Plus.