metaflow

Underscore in template name of argo-workflows not allowed

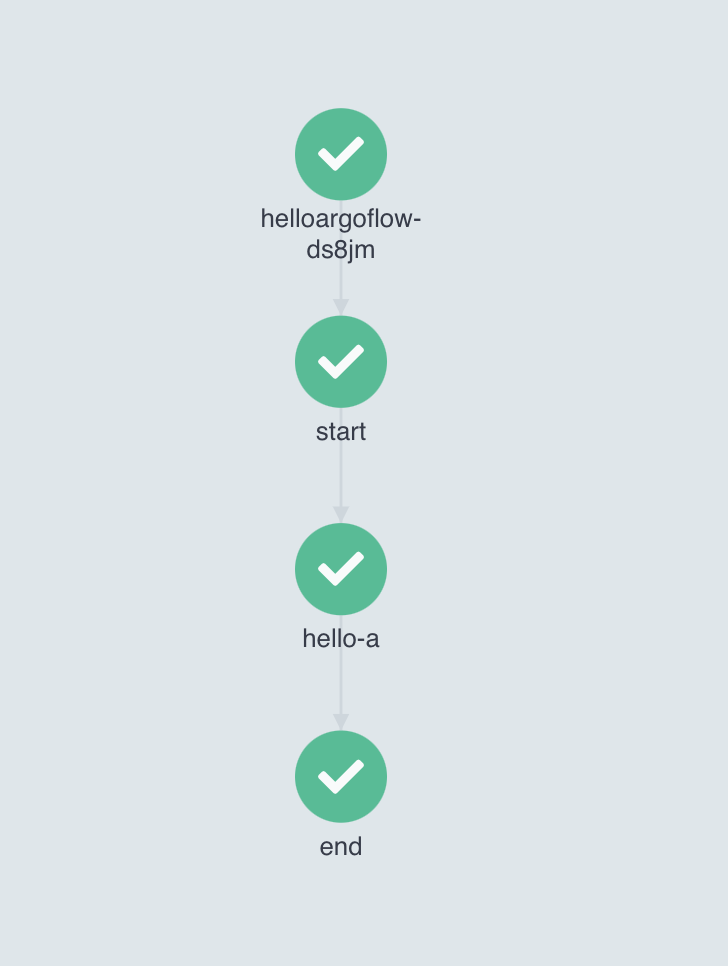

The Argo Workflows UI rejects Metaflow-generated workflows if a step name contains "_". Although a _sanitize method exists in _dag_templates, a step name with an underscore is not sanitized. Example:

    from metaflow import FlowSpec, step


    class HelloArgoFlow(FlowSpec):

        @step
        def start(self):
            print("HelloFlow is starting.")
            self.next(self.hello_a)

        # The underscore in this step name triggers the rejection.
        @step
        def hello_a(self):
            print("HelloFlow says HELLO.")
            self.next(self.end)

        @step
        def end(self):
            print("HelloFlow is all done.")


    if __name__ == '__main__':
        HelloArgoFlow()
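To make the expected behavior concrete, here is a minimal sketch of the kind of sanitization I would expect for step names. It is not Metaflow's actual code: `sanitize` and `is_valid_argo_name` are hypothetical helpers, and the pattern assumes that Argo accepts only alphanumeric characters and "-" in template names, starting with an alphanumeric character.

```python
import re

# Assumed naming rule (not taken from the Argo source): alphanumerics and '-',
# starting with an alphanumeric character.
ARGO_NAME = re.compile(r"[a-zA-Z0-9][-a-zA-Z0-9]*")


def sanitize(name: str) -> str:
    """Hypothetical helper: replace underscores with hyphens."""
    return name.replace("_", "-")


def is_valid_argo_name(name: str) -> bool:
    """Check a name against the assumed rule above."""
    return ARGO_NAME.fullmatch(name) is not None


if __name__ == "__main__":
    # Step names from the example flow above.
    for step_name in ["start", "hello_a", "end"]:
        print(step_name, is_valid_argo_name(step_name), sanitize(step_name))
```

Under that assumption, "hello_a" fails the check and "hello-a" passes, so the template name would need the same treatment as the task name.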

I thought this was handled. Let me take a look.

I was unable to reproduce the error using Metaflow 2.6.0

{

"apiVersion": "argoproj.io/v1alpha1",

"kind": "WorkflowTemplate",

"metadata": {

"name": "helloargoflow",

"namespace": "default",

"labels": {

"app.kubernetes.io/name": "metaflow-flow",

"app.kubernetes.io/part-of": "metaflow"

},

"annotations": {

"metaflow/production_token": "helloargoflow-0-symt",

"metaflow/owner": "savin",

"metaflow/user": "argo-workflows",

"metaflow/flow_name": "HelloArgoFlow"

}

},

"spec": {

"parallelism": 100,

"workflowMetadata": {

"labels": {

"app.kubernetes.io/name": "metaflow-run",

"app.kubernetes.io/part-of": "metaflow"

},

"annotations": {

"metaflow/production_token": "helloargoflow-0-symt",

"metaflow/owner": "savin",

"metaflow/user": "argo-workflows",

"metaflow/flow_name": "HelloArgoFlow",

"metaflow/run_id": "argo-{{workflow.name}}"

}

},

"arguments": {

"parameters": []

},

"podMetadata": {

"labels": {

"app.kubernetes.io/name": "metaflow-task",

"app.kubernetes.io/part-of": "metaflow"

},

"annotations": {

"metaflow/production_token": "helloargoflow-0-symt",

"metaflow/owner": "savin",

"metaflow/user": "argo-workflows",

"metaflow/flow_name": "HelloArgoFlow"

}

},

"entrypoint": "HelloArgoFlow",

"templates": [

{

"name": "HelloArgoFlow",

"dag": {

"failFast": true,

"tasks": [

{

"name": "start",

"template": "start"

},

{

"name": "hello-a",

"dependencies": [

"start"

],

"template": "hello_a",

"arguments": {

"parameters": [

{

"name": "input-paths",

"value": "argo-{{workflow.name}}/start/{{tasks.start.outputs.parameters.task-id}}"

}

]

}

},

{

"name": "end",

"dependencies": [

"hello-a"

],

"template": "end",

"arguments": {

"parameters": [

{

"name": "input-paths",

"value": "argo-{{workflow.name}}/hello_a/{{tasks.hello-a.outputs.parameters.task-id}}"

}

]

}

}

]

}

},

{

"name": "start",

"activeDeadlineSeconds": 432000,

"serviceAccountName": "s3-full-access",

"inputs": {

"parameters": []

},

"outputs": {

"parameters": [

{

"name": "task-id",

"valueFrom": {

"path": "/mnt/out/task_id"

}

}

]

},

"failFast": true,

"metadata": {

"annotations": {

"metaflow/step_name": "start",

"metaflow/attempt": "0"

}

},

"volumes": [

{

"name": "out",

"emptyDir": {}

}

],

"labels": {},

"nodeSelector": {},

"container": {

"args": null,

"command": [

"bash",

"-c",

"mkdir -p $PWD/.logs && export METAFLOW_TASK_ID=(t-$(echo start-{{workflow.creationTimestamp}} | md5sum | cut -d ' ' -f 1 | tail -c 9)) && export PYTHONUNBUFFERED=x MF_PATHSPEC=HelloArgoFlow/argo-{{workflow.name}}/start/$METAFLOW_TASK_ID MF_DATASTORE=s3 MF_ATTEMPT=0 MFLOG_STDOUT=$PWD/.logs/mflog_stdout MFLOG_STDERR=$PWD/.logs/mflog_stderr && mflog(){ T=$(date -u -Ins|tr , .); echo \"[MFLOG|0|${T:0:26}Z|task|$T]$1\" >> $MFLOG_STDOUT; echo $1; } && mflog 'Setting up task environment.' && python -m pip install awscli requests boto3 -qqq && mkdir metaflow && cd metaflow && mkdir .metaflow && i=0; while [ $i -le 5 ]; do mflog 'Downloading code package...'; python -m awscli s3 cp s3://valay-k8s-testing/metaflow/HelloArgoFlow/data/f8/f882d08568f6290d783027b6aa617bb6c336a158 job.tar >/dev/null && mflog 'Code package downloaded.' && break; sleep 10; i=$((i+1)); done && if [ $i -gt 5 ]; then mflog 'Failed to download code package from s3://valay-k8s-testing/metaflow/HelloArgoFlow/data/f8/f882d08568f6290d783027b6aa617bb6c336a158 after 6 tries. Exiting...' && exit 1; fi && TAR_OPTIONS='--warning=no-timestamp' tar xf job.tar && mflog 'Task is starting.' && (if ! 
python hello.py dump --max-value-size=0 argo-{{workflow.name}}/_parameters/$METAFLOW_TASK_ID-params >/dev/null 2>/dev/null; then python hello.py --with kubernetes:cpu=1,memory=4096,disk=10240,image=python:3.9,service_account=s3-full-access,namespace=default,gpu_vendor=nvidia --with 'environment:vars={}' --quiet --metadata=service --environment=local --datastore=s3 --datastore-root=s3://valay-k8s-testing/metaflow --event-logger=nullSidecarLogger --monitor=nullSidecarMonitor --no-pylint --with=argo_workflows_internal init --run-id argo-{{workflow.name}} --task-id $METAFLOW_TASK_ID-params; fi && python hello.py --with kubernetes:cpu=1,memory=4096,disk=10240,image=python:3.9,service_account=s3-full-access,namespace=default,gpu_vendor=nvidia --with 'environment:vars={}' --quiet --metadata=service --environment=local --datastore=s3 --datastore-root=s3://valay-k8s-testing/metaflow --event-logger=nullSidecarLogger --monitor=nullSidecarMonitor --no-pylint --with=argo_workflows_internal step start --run-id argo-{{workflow.name}} --task-id $METAFLOW_TASK_ID --retry-count 0 --max-user-code-retries 0 --input-paths argo-{{workflow.name}}/_parameters/$METAFLOW_TASK_ID-params) 1>> >(python -m metaflow.mflog.tee task $MFLOG_STDOUT) 2>> >(python -m metaflow.mflog.tee task $MFLOG_STDERR >&2); c=$?; python -m metaflow.mflog.save_logs; exit $c"

],

"env": [

{

"name": "METAFLOW_CODE_URL",

"value": "s3://valay-k8s-testing/metaflow/HelloArgoFlow/data/f8/f882d08568f6290d783027b6aa617bb6c336a158",

"valueFrom": null

},

{

"name": "METAFLOW_CODE_SHA",

"value": "f882d08568f6290d783027b6aa617bb6c336a158",

"valueFrom": null

},

{

"name": "METAFLOW_CODE_DS",

"value": "s3",

"valueFrom": null

},

{

"name": "METAFLOW_SERVICE_URL",

"value": "https://x3kwb6qyc6.execute-api.us-west-2.amazonaws.com/api/",

"valueFrom": null

},

{

"name": "METAFLOW_USER",

"value": "argo-workflows",

"valueFrom": null

},

{

"name": "METAFLOW_DATASTORE_SYSROOT_S3",

"value": "s3://valay-k8s-testing/metaflow",

"valueFrom": null

},

{

"name": "METAFLOW_DATATOOLS_S3ROOT",

"value": "s3://oleg-stepfn-test-metaflows3bucket-1s3wkux8ddr1/data",

"valueFrom": null

},

{

"name": "METAFLOW_DEFAULT_DATASTORE",

"value": "s3",

"valueFrom": null

},

{

"name": "METAFLOW_DEFAULT_METADATA",

"value": "service",

"valueFrom": null

},

{

"name": "METAFLOW_CARD_S3ROOT",

"value": "s3://valay-k8s-testing/metaflow/mf.cards",

"valueFrom": null

},

{

"name": "METAFLOW_KUBERNETES_WORKLOAD",

"value": "1",

"valueFrom": null

},

{

"name": "METAFLOW_RUNTIME_ENVIRONMENT",

"value": "kubernetes",

"valueFrom": null

},

{

"name": "METAFLOW_OWNER",

"value": "savin",

"valueFrom": null

},

{

"name": "METAFLOW_FLOW_NAME",

"value": "HelloArgoFlow",

"valueFrom": null

},

{

"name": "METAFLOW_STEP_NAME",

"value": "start",

"valueFrom": null

},

{

"name": "METAFLOW_RUN_ID",

"value": "argo-{{workflow.name}}",

"valueFrom": null

},

{

"name": "METAFLOW_RETRY_COUNT",

"value": "0",

"valueFrom": null

},

{

"name": "METAFLOW_PRODUCTION_TOKEN",

"value": "helloargoflow-0-symt",

"valueFrom": null

},

{

"name": "ARGO_WORKFLOW_TEMPLATE",

"value": "helloargoflow",

"valueFrom": null

},

{

"name": "ARGO_WORKFLOW_NAME",

"value": "{{workflow.name}}",

"valueFrom": null

},

{

"name": "ARGO_WORKFLOW_NAMESPACE",

"value": "default",

"valueFrom": null

},

{

"name": "METAFLOW_RUNTIME_NAME",

"value": "argo-workflows",

"valueFrom": null

},

{

"name": "USER",

"value": "savin",

"valueFrom": null

},

{

"name": "METAFLOW_VERSION",

"value": "{\"platform\": \"Darwin\", \"username\": \"savin\", \"production_token\": \"helloargoflow-0-symt\", \"runtime\": \"dev\", \"app\": null, \"environment_type\": \"local\", \"use_r\": false, \"python_version\": \"3.9.1 (default, Dec 11 2020, 06:28:49) \\n[Clang 10.0.0 ]\", \"python_version_code\": \"3.9.1\", \"metaflow_version\": \"2.6.0\", \"script\": \"hello.py\", \"ext_info\": [{}, {}], \"flow_name\": \"HelloArgoFlow\"}",

"valueFrom": null

},

{

"name": "METAFLOW_KUBERNETES_POD_NAMESPACE",

"value": null,

"valueFrom": {

"configMapKeyRef": null,

"fieldRef": {

"apiVersion": null,

"fieldPath": "metadata.namespace"

},

"resourceFieldRef": null,

"secretKeyRef": null

}

},

{

"name": "METAFLOW_KUBERNETES_POD_NAME",

"value": null,

"valueFrom": {

"configMapKeyRef": null,

"fieldRef": {

"apiVersion": null,

"fieldPath": "metadata.name"

},

"resourceFieldRef": null,

"secretKeyRef": null

}

},

{

"name": "METAFLOW_KUBERNETES_POD_ID",

"value": null,

"valueFrom": {

"configMapKeyRef": null,

"fieldRef": {

"apiVersion": null,

"fieldPath": "metadata.uid"

},

"resourceFieldRef": null,

"secretKeyRef": null

}

},

{

"name": "METAFLOW_KUBERNETES_SERVICE_ACCOUNT_NAME",

"value": null,

"valueFrom": {

"configMapKeyRef": null,

"fieldRef": {

"apiVersion": null,

"fieldPath": "spec.serviceAccountName"

},

"resourceFieldRef": null,

"secretKeyRef": null

}

}

],

"envFrom": [],

"image": "python:3.9",

"imagePullPolicy": null,

"lifecycle": null,

"livenessProbe": null,

"name": "start",

"ports": null,

"readinessProbe": null,

"resources": {

"limits": {},

"requests": {

"cpu": "1",

"memory": "4096M",

"ephemeral-storage": "10240M"

}

},

"securityContext": null,

"startupProbe": null,

"stdin": null,

"stdinOnce": null,

"terminationMessagePath": null,

"terminationMessagePolicy": null,

"tty": null,

"volumeDevices": null,

"volumeMounts": [

{

"mountPath": "/mnt/out",

"mountPropagation": null,

"name": "out",

"readOnly": null,

"subPath": null,

"subPathExpr": null

}

],

"workingDir": null

}

},

{

"name": "hello_a",

"activeDeadlineSeconds": 432000,

"serviceAccountName": "s3-full-access",

"inputs": {

"parameters": [

{

"name": "input-paths"

}

]

},

"outputs": {

"parameters": [

{

"name": "task-id",

"valueFrom": {

"path": "/mnt/out/task_id"

}

}

]

},

"failFast": true,

"metadata": {

"annotations": {

"metaflow/step_name": "hello_a",

"metaflow/attempt": "0"

}

},

"volumes": [

{

"name": "out",

"emptyDir": {}

}

],

"labels": {},

"nodeSelector": {},

"container": {

"args": null,

"command": [

"bash",

"-c",

"mkdir -p $PWD/.logs && export METAFLOW_TASK_ID=(t-$(echo hello_a-{{workflow.creationTimestamp}}-{{inputs.parameters.input-paths}} | md5sum | cut -d ' ' -f 1 | tail -c 9)) && export PYTHONUNBUFFERED=x MF_PATHSPEC=HelloArgoFlow/argo-{{workflow.name}}/hello_a/$METAFLOW_TASK_ID MF_DATASTORE=s3 MF_ATTEMPT=0 MFLOG_STDOUT=$PWD/.logs/mflog_stdout MFLOG_STDERR=$PWD/.logs/mflog_stderr && mflog(){ T=$(date -u -Ins|tr , .); echo \"[MFLOG|0|${T:0:26}Z|task|$T]$1\" >> $MFLOG_STDOUT; echo $1; } && mflog 'Setting up task environment.' && python -m pip install awscli requests boto3 -qqq && mkdir metaflow && cd metaflow && mkdir .metaflow && i=0; while [ $i -le 5 ]; do mflog 'Downloading code package...'; python -m awscli s3 cp s3://valay-k8s-testing/metaflow/HelloArgoFlow/data/f8/f882d08568f6290d783027b6aa617bb6c336a158 job.tar >/dev/null && mflog 'Code package downloaded.' && break; sleep 10; i=$((i+1)); done && if [ $i -gt 5 ]; then mflog 'Failed to download code package from s3://valay-k8s-testing/metaflow/HelloArgoFlow/data/f8/f882d08568f6290d783027b6aa617bb6c336a158 after 6 tries. Exiting...' && exit 1; fi && TAR_OPTIONS='--warning=no-timestamp' tar xf job.tar && mflog 'Task is starting.' && (python hello.py --with kubernetes:cpu=1,memory=4096,disk=10240,image=python:3.9,service_account=s3-full-access,namespace=default,gpu_vendor=nvidia --with 'environment:vars={}' --quiet --metadata=service --environment=local --datastore=s3 --datastore-root=s3://valay-k8s-testing/metaflow --event-logger=nullSidecarLogger --monitor=nullSidecarMonitor --no-pylint --with=argo_workflows_internal step hello_a --run-id argo-{{workflow.name}} --task-id $METAFLOW_TASK_ID --retry-count 0 --max-user-code-retries 0 --input-paths {{inputs.parameters.input-paths}}) 1>> >(python -m metaflow.mflog.tee task $MFLOG_STDOUT) 2>> >(python -m metaflow.mflog.tee task $MFLOG_STDERR >&2); c=$?; python -m metaflow.mflog.save_logs; exit $c"

],

"env": [

{

"name": "METAFLOW_CODE_URL",

"value": "s3://valay-k8s-testing/metaflow/HelloArgoFlow/data/f8/f882d08568f6290d783027b6aa617bb6c336a158",

"valueFrom": null

},

{

"name": "METAFLOW_CODE_SHA",

"value": "f882d08568f6290d783027b6aa617bb6c336a158",

"valueFrom": null

},

{

"name": "METAFLOW_CODE_DS",

"value": "s3",

"valueFrom": null

},

{

"name": "METAFLOW_SERVICE_URL",

"value": "https://x3kwb6qyc6.execute-api.us-west-2.amazonaws.com/api/",

"valueFrom": null

},

{

"name": "METAFLOW_USER",

"value": "argo-workflows",

"valueFrom": null

},

{

"name": "METAFLOW_DATASTORE_SYSROOT_S3",

"value": "s3://valay-k8s-testing/metaflow",

"valueFrom": null

},

{

"name": "METAFLOW_DATATOOLS_S3ROOT",

"value": "s3://oleg-stepfn-test-metaflows3bucket-1s3wkux8ddr1/data",

"valueFrom": null

},

{

"name": "METAFLOW_DEFAULT_DATASTORE",

"value": "s3",

"valueFrom": null

},

{

"name": "METAFLOW_DEFAULT_METADATA",

"value": "service",

"valueFrom": null

},

{

"name": "METAFLOW_CARD_S3ROOT",

"value": "s3://valay-k8s-testing/metaflow/mf.cards",

"valueFrom": null

},

{

"name": "METAFLOW_KUBERNETES_WORKLOAD",

"value": "1",

"valueFrom": null

},

{

"name": "METAFLOW_RUNTIME_ENVIRONMENT",

"value": "kubernetes",

"valueFrom": null

},

{

"name": "METAFLOW_OWNER",

"value": "savin",

"valueFrom": null

},

{

"name": "METAFLOW_FLOW_NAME",

"value": "HelloArgoFlow",

"valueFrom": null

},

{

"name": "METAFLOW_STEP_NAME",

"value": "hello_a",

"valueFrom": null

},

{

"name": "METAFLOW_RUN_ID",

"value": "argo-{{workflow.name}}",

"valueFrom": null

},

{

"name": "METAFLOW_RETRY_COUNT",

"value": "0",

"valueFrom": null

},

{

"name": "METAFLOW_PRODUCTION_TOKEN",

"value": "helloargoflow-0-symt",

"valueFrom": null

},

{

"name": "ARGO_WORKFLOW_TEMPLATE",

"value": "helloargoflow",

"valueFrom": null

},

{

"name": "ARGO_WORKFLOW_NAME",

"value": "{{workflow.name}}",

"valueFrom": null

},

{

"name": "ARGO_WORKFLOW_NAMESPACE",

"value": "default",

"valueFrom": null

},

{

"name": "METAFLOW_RUNTIME_NAME",

"value": "argo-workflows",

"valueFrom": null

},

{

"name": "USER",

"value": "savin",

"valueFrom": null

},

{

"name": "METAFLOW_VERSION",

"value": "{\"platform\": \"Darwin\", \"username\": \"savin\", \"production_token\": \"helloargoflow-0-symt\", \"runtime\": \"dev\", \"app\": null, \"environment_type\": \"local\", \"use_r\": false, \"python_version\": \"3.9.1 (default, Dec 11 2020, 06:28:49) \\n[Clang 10.0.0 ]\", \"python_version_code\": \"3.9.1\", \"metaflow_version\": \"2.6.0\", \"script\": \"hello.py\", \"ext_info\": [{}, {}], \"flow_name\": \"HelloArgoFlow\"}",

"valueFrom": null

},

{

"name": "METAFLOW_KUBERNETES_POD_NAMESPACE",

"value": null,

"valueFrom": {

"configMapKeyRef": null,

"fieldRef": {

"apiVersion": null,

"fieldPath": "metadata.namespace"

},

"resourceFieldRef": null,

"secretKeyRef": null

}

},

{

"name": "METAFLOW_KUBERNETES_POD_NAME",

"value": null,

"valueFrom": {

"configMapKeyRef": null,

"fieldRef": {

"apiVersion": null,

"fieldPath": "metadata.name"

},

"resourceFieldRef": null,

"secretKeyRef": null

}

},

{

"name": "METAFLOW_KUBERNETES_POD_ID",

"value": null,

"valueFrom": {

"configMapKeyRef": null,

"fieldRef": {

"apiVersion": null,

"fieldPath": "metadata.uid"

},

"resourceFieldRef": null,

"secretKeyRef": null

}

},

{

"name": "METAFLOW_KUBERNETES_SERVICE_ACCOUNT_NAME",

"value": null,

"valueFrom": {

"configMapKeyRef": null,

"fieldRef": {

"apiVersion": null,

"fieldPath": "spec.serviceAccountName"

},

"resourceFieldRef": null,

"secretKeyRef": null

}

}

],

"envFrom": [],

"image": "python:3.9",

"imagePullPolicy": null,

"lifecycle": null,

"livenessProbe": null,

"name": "hello_a",

"ports": null,

"readinessProbe": null,

"resources": {

"limits": {},

"requests": {

"cpu": "1",

"memory": "4096M",

"ephemeral-storage": "10240M"

}

},

"securityContext": null,

"startupProbe": null,

"stdin": null,

"stdinOnce": null,

"terminationMessagePath": null,

"terminationMessagePolicy": null,

"tty": null,

"volumeDevices": null,

"volumeMounts": [

{

"mountPath": "/mnt/out",

"mountPropagation": null,

"name": "out",

"readOnly": null,

"subPath": null,

"subPathExpr": null

}

],

"workingDir": null

}

},

{

"name": "end",

"activeDeadlineSeconds": 432000,

"serviceAccountName": "s3-full-access",

"inputs": {

"parameters": [

{

"name": "input-paths"

}

]

},

"outputs": {

"parameters": []

},

"failFast": true,

"metadata": {

"annotations": {

"metaflow/step_name": "end",

"metaflow/attempt": "0"

}

},

"volumes": [

{

"name": "out",

"emptyDir": {}

}

],

"labels": {},

"nodeSelector": {},

"container": {

"args": null,

"command": [

"bash",

"-c",

"mkdir -p $PWD/.logs && export METAFLOW_TASK_ID=(t-$(echo end-{{workflow.creationTimestamp}}-{{inputs.parameters.input-paths}} | md5sum | cut -d ' ' -f 1 | tail -c 9)) && export PYTHONUNBUFFERED=x MF_PATHSPEC=HelloArgoFlow/argo-{{workflow.name}}/end/$METAFLOW_TASK_ID MF_DATASTORE=s3 MF_ATTEMPT=0 MFLOG_STDOUT=$PWD/.logs/mflog_stdout MFLOG_STDERR=$PWD/.logs/mflog_stderr && mflog(){ T=$(date -u -Ins|tr , .); echo \"[MFLOG|0|${T:0:26}Z|task|$T]$1\" >> $MFLOG_STDOUT; echo $1; } && mflog 'Setting up task environment.' && python -m pip install awscli requests boto3 -qqq && mkdir metaflow && cd metaflow && mkdir .metaflow && i=0; while [ $i -le 5 ]; do mflog 'Downloading code package...'; python -m awscli s3 cp s3://valay-k8s-testing/metaflow/HelloArgoFlow/data/f8/f882d08568f6290d783027b6aa617bb6c336a158 job.tar >/dev/null && mflog 'Code package downloaded.' && break; sleep 10; i=$((i+1)); done && if [ $i -gt 5 ]; then mflog 'Failed to download code package from s3://valay-k8s-testing/metaflow/HelloArgoFlow/data/f8/f882d08568f6290d783027b6aa617bb6c336a158 after 6 tries. Exiting...' && exit 1; fi && TAR_OPTIONS='--warning=no-timestamp' tar xf job.tar && mflog 'Task is starting.' && (python hello.py --with kubernetes:cpu=1,memory=4096,disk=10240,image=python:3.9,service_account=s3-full-access,namespace=default,gpu_vendor=nvidia --with 'environment:vars={}' --quiet --metadata=service --environment=local --datastore=s3 --datastore-root=s3://valay-k8s-testing/metaflow --event-logger=nullSidecarLogger --monitor=nullSidecarMonitor --no-pylint --with=argo_workflows_internal step end --run-id argo-{{workflow.name}} --task-id $METAFLOW_TASK_ID --retry-count 0 --max-user-code-retries 0 --input-paths {{inputs.parameters.input-paths}}) 1>> >(python -m metaflow.mflog.tee task $MFLOG_STDOUT) 2>> >(python -m metaflow.mflog.tee task $MFLOG_STDERR >&2); c=$?; python -m metaflow.mflog.save_logs; exit $c"

],

"env": [

{

"name": "METAFLOW_CODE_URL",

"value": "s3://valay-k8s-testing/metaflow/HelloArgoFlow/data/f8/f882d08568f6290d783027b6aa617bb6c336a158",

"valueFrom": null

},

{

"name": "METAFLOW_CODE_SHA",

"value": "f882d08568f6290d783027b6aa617bb6c336a158",

"valueFrom": null

},

{

"name": "METAFLOW_CODE_DS",

"value": "s3",

"valueFrom": null

},

{

"name": "METAFLOW_SERVICE_URL",

"value": "https://x3kwb6qyc6.execute-api.us-west-2.amazonaws.com/api/",

"valueFrom": null

},

{

"name": "METAFLOW_USER",

"value": "argo-workflows",

"valueFrom": null

},

{

"name": "METAFLOW_DATASTORE_SYSROOT_S3",

"value": "s3://valay-k8s-testing/metaflow",

"valueFrom": null

},

{

"name": "METAFLOW_DATATOOLS_S3ROOT",

"value": "s3://oleg-stepfn-test-metaflows3bucket-1s3wkux8ddr1/data",

"valueFrom": null

},

{

"name": "METAFLOW_DEFAULT_DATASTORE",

"value": "s3",

"valueFrom": null

},

{

"name": "METAFLOW_DEFAULT_METADATA",

"value": "service",

"valueFrom": null

},

{

"name": "METAFLOW_CARD_S3ROOT",

"value": "s3://valay-k8s-testing/metaflow/mf.cards",

"valueFrom": null

},

{

"name": "METAFLOW_KUBERNETES_WORKLOAD",

"value": "1",

"valueFrom": null

},

{

"name": "METAFLOW_RUNTIME_ENVIRONMENT",

"value": "kubernetes",

"valueFrom": null

},

{

"name": "METAFLOW_OWNER",

"value": "savin",

"valueFrom": null

},

{

"name": "METAFLOW_FLOW_NAME",

"value": "HelloArgoFlow",

"valueFrom": null

},

{

"name": "METAFLOW_STEP_NAME",

"value": "end",

"valueFrom": null

},

{

"name": "METAFLOW_RUN_ID",

"value": "argo-{{workflow.name}}",

"valueFrom": null

},

{

"name": "METAFLOW_RETRY_COUNT",

"value": "0",

"valueFrom": null

},

{

"name": "METAFLOW_PRODUCTION_TOKEN",

"value": "helloargoflow-0-symt",

"valueFrom": null

},

{

"name": "ARGO_WORKFLOW_TEMPLATE",

"value": "helloargoflow",

"valueFrom": null

},

{

"name": "ARGO_WORKFLOW_NAME",

"value": "{{workflow.name}}",

"valueFrom": null

},

{

"name": "ARGO_WORKFLOW_NAMESPACE",

"value": "default",

"valueFrom": null

},

{

"name": "METAFLOW_RUNTIME_NAME",

"value": "argo-workflows",

"valueFrom": null

},

{

"name": "USER",

"value": "savin",

"valueFrom": null

},

{

"name": "METAFLOW_VERSION",

"value": "{\"platform\": \"Darwin\", \"username\": \"savin\", \"production_token\": \"helloargoflow-0-symt\", \"runtime\": \"dev\", \"app\": null, \"environment_type\": \"local\", \"use_r\": false, \"python_version\": \"3.9.1 (default, Dec 11 2020, 06:28:49) \\n[Clang 10.0.0 ]\", \"python_version_code\": \"3.9.1\", \"metaflow_version\": \"2.6.0\", \"script\": \"hello.py\", \"ext_info\": [{}, {}], \"flow_name\": \"HelloArgoFlow\"}",

"valueFrom": null

},

{

"name": "METAFLOW_KUBERNETES_POD_NAMESPACE",

"value": null,

"valueFrom": {

"configMapKeyRef": null,

"fieldRef": {

"apiVersion": null,

"fieldPath": "metadata.namespace"

},

"resourceFieldRef": null,

"secretKeyRef": null

}

},

{

"name": "METAFLOW_KUBERNETES_POD_NAME",

"value": null,

"valueFrom": {

"configMapKeyRef": null,

"fieldRef": {

"apiVersion": null,

"fieldPath": "metadata.name"

},

"resourceFieldRef": null,

"secretKeyRef": null

}

},

{

"name": "METAFLOW_KUBERNETES_POD_ID",

"value": null,

"valueFrom": {

"configMapKeyRef": null,

"fieldRef": {

"apiVersion": null,

"fieldPath": "metadata.uid"

},

"resourceFieldRef": null,

"secretKeyRef": null

}

},

{

"name": "METAFLOW_KUBERNETES_SERVICE_ACCOUNT_NAME",

"value": null,

"valueFrom": {

"configMapKeyRef": null,

"fieldRef": {

"apiVersion": null,

"fieldPath": "spec.serviceAccountName"

},

"resourceFieldRef": null,

"secretKeyRef": null

}

}

],

"envFrom": [],

"image": "python:3.9",

"imagePullPolicy": null,

"lifecycle": null,

"livenessProbe": null,

"name": "end",

"ports": null,

"readinessProbe": null,

"resources": {

"limits": {},

"requests": {

"cpu": "1",

"memory": "4096M",

"ephemeral-storage": "10240M"

}

},

"securityContext": null,

"startupProbe": null,

"stdin": null,

"stdinOnce": null,

"terminationMessagePath": null,

"terminationMessagePolicy": null,

"tty": null,

"volumeDevices": null,

"volumeMounts": [

{

"mountPath": "/mnt/out",

"mountPropagation": null,

"name": "out",

"readOnly": null,

"subPath": null,

"subPathExpr": null

}

],

"workingDir": null

}

}

]

}

}

What is the version of Argo Workflows you are on?

Aha - the UI has an additional check that prevents uploading the JSON by hand.

I have started a thread in the CNCF Slack to check whether this is a regression in the Argo Workflows UI.

Thanks for your quick reply, Savin! I'm on Metaflow 2.6.0.post4+gitd977cd4. I created the Argo template using this command:

helloworld.py argo-workflows --name=h5 create --only-json > h5.json

> Aha - the UI has an additional check that prevents uploading the JSON by hand.

I saw this a while ago. The Argo UI validates the workflow against the schema. Funnily enough, submitting the same workflow via the Argo API does the sanitization under the hood :)
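For anyone who wants to check a generated template before pasting it into the UI, here is a rough sketch that lists template names and DAG task references containing "_". The function name `find_underscore_names` is made up for illustration, and the sample is a trimmed copy of the JSON posted earlier in this thread, where the task is named "hello-a" but its template is still "hello_a".

```python
import json


def find_underscore_names(workflow_template: dict) -> list:
    """Return (location, value) pairs for names that contain an underscore."""
    problems = []
    for template in workflow_template.get("spec", {}).get("templates", []):
        name = template.get("name", "")
        if "_" in name:
            problems.append(("template.name", name))
        # Only the DAG template carries tasks; step templates have no "dag" key.
        for task in template.get("dag", {}).get("tasks", []):
            for key in ("name", "template"):
                value = task.get(key, "")
                if "_" in value:
                    problems.append((f"dag.task.{key}", value))
    return problems


# Trimmed copy of the WorkflowTemplate from this thread.
sample = json.loads("""
{
  "kind": "WorkflowTemplate",
  "spec": {
    "entrypoint": "HelloArgoFlow",
    "templates": [
      {
        "name": "HelloArgoFlow",
        "dag": {
          "tasks": [
            {"name": "start", "template": "start"},
            {"name": "hello-a", "dependencies": ["start"], "template": "hello_a"},
            {"name": "end", "dependencies": ["hello-a"], "template": "end"}
          ]
        }
      },
      {"name": "start"},
      {"name": "hello_a"},
      {"name": "end"}
    ]
  }
}
""")

if __name__ == "__main__":
    for location, value in find_underscore_names(sample):
        print(location, value)
```

To run it against a real file such as the h5.json mentioned above, load the file with `json.load` and pass the result to the function instead of `sample`.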

Is this issue solved? @savingoyal @karimmohraz

I also have the same problem with Metaflow 2.6.3 and Argo 3.3.5.

I created the workflow template with the following command:

python helloworld.py --with kubernetes:secrets=s3-secret argo-workflows create

@ZiminPark Are you running into this issue when you submit using the UI?

I tried both the Metaflow CLI and the Argo UI. Submitting via the Metaflow CLI (argo-workflows create) creates a workflow template, but it fails at run time. Submitting via the Argo UI raises the same error on submission.

https://cloud-native.slack.com/archives/C01QW9QSSSK/p1652298821097089