Collecting Prometheus Histogram Stats with Harvest

Is your feature request related to a problem? Please describe.

We have a requirement to monitor some stats of type histogram. However, after enabling them in Harvest, we noticed that the stat is not emitted in the Prometheus histogram format, so the Prometheus function "histogram_quantile" does not work. As far as we can tell, histogram stats are unusable in their current format.

Describe the solution you'd like

Format the histograms collected by Harvest in the Prometheus histogram format. A Prometheus histogram exposes three stats for each NetApp histogram collected:

- <stat_name>_bucket{le="<bucket_name>"}

- <stat_name>_sum

- <stat_name>_count

Details about each can be found here: Prometheus Histogram
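For context on why the cumulative "le" layout matters, here is a simplified Python sketch of the kind of linear interpolation that histogram_quantile performs over cumulative buckets. It is an illustration only, not Prometheus's implementation: the function name and input shape are assumptions, and the counts are a truncated subset of the desired-output sample shown later in this issue.

```python
import math


def histogram_quantile(q, buckets):
    """Estimate the q-quantile from cumulative (upper_bound, count) pairs.

    Assumes `buckets` is sorted by upper bound, has at least two entries,
    and ends with (math.inf, total_count).
    """
    if not 0 < q <= 1:
        raise ValueError("q must be in (0, 1]")
    total = buckets[-1][1]
    if total == 0:
        return math.nan
    rank = q * total
    # First bucket whose cumulative count reaches the requested rank.
    idx = next(i for i, (_, c) in enumerate(buckets) if c >= rank)
    upper, count = buckets[idx]
    if math.isinf(upper):
        # Rank falls in the +Inf bucket: report the highest finite bound.
        return buckets[-2][0]
    lower, below = (0.0, 0) if idx == 0 else buckets[idx - 1]
    # Linear interpolation inside the selected bucket.
    return lower + (upper - lower) * (rank - below) / (count - below)


# Truncated subset of the cumulative sample (bounds in ms).
sample = [(0.02, 1439), (0.04, 2579), (0.06, 2607), (math.inf, 3265)]
median = histogram_quantile(0.5, sample)
p99 = histogram_quantile(0.99, sample)
```

The interpolation only makes sense when each bucket already includes the counts of all smaller buckets, which is why per-bucket frequencies under a "metric" label cannot be fed to it directly.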

Currently, histograms get formatted like this:

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<90s"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<10s"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<200us"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<600ms"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<14ms"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<100ms"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<8s"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<1s"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<20s"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<4ms"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<100us"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<60us"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<2ms"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<1ms"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<6s"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<30s"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<6ms"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<60ms"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<600us"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<4s"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<80us"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric=">=120s"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<200ms"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<800us"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<2s"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<800ms"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<20ms"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<400ms"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<18ms"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<120s"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<8ms"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<60s"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<40us"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<16ms"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<12ms"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<80ms"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<400us"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<10ms"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<40ms"} 0

svm_cifs_cifs_latency_hist{datacenter="DC1",cluster="cluster1",svm="svm1",metric="<20us"} 0

This same stat would get formatted like this to match the Prometheus scheme:

# HELP netapp_perf_cifs_vserver_cifs_latency_hist netapp_perf_cifs_vserver_cifs_latency_hist cifs_latency_hist

# TYPE netapp_perf_cifs_vserver_cifs_latency_hist histogram

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="0.02"} 1439

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="0.04"} 2579

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="0.06"} 2607

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="0.08"} 2608

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="0.1"} 2611

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="0.2"} 2759

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="0.4"} 2908

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="0.6"} 3108

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="0.8"} 3119

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="1"} 3126

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="2"} 3143

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="4"} 3172

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="6"} 3179

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="8"} 3215

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="10"} 3220

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="12"} 3228

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="14"} 3232

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="16"} 3235

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="18"} 3238

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="20"} 3242

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="40"} 3253

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="60"} 3256

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="80"} 3256

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="100"} 3257

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="200"} 3262

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="400"} 3265

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="600"} 3265

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="800"} 3265

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="1000"} 3265

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="2000"} 3265

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="4000"} 3265

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="6000"} 3265

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="8000"} 3265

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="10000"} 3265

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="20000"} 3265

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="30000"} 3265

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="60000"} 3265

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="90000"} 3265

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="120000"} 3265

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="240000"} 3265

netapp_perf_cifs_vserver_cifs_latency_hist_bucket{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1",le="+Inf"} 3265

netapp_perf_cifs_vserver_cifs_latency_hist_sum{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1"} 4085.44

netapp_perf_cifs_vserver_cifs_latency_hist_count{cluster_name="cluster1",instance="collector:25035",instance_name="svm1",vserver_name="svm1"} 3265

To make this work, the "metric" label that Harvest collects would need to be converted to a numeric value and emitted as the bucket label "le".

Example: metric="<20us" => le="0.02" (standardizing all buckets to ms)
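A minimal Python sketch of that label conversion, assuming the only label shapes are the ones in the sample above ("<N" followed by us, ms, or s, plus a single ">=" overflow label). The function name `metric_to_le` is hypothetical and this is not Harvest's code. It also treats ONTAP's strict "<" bound as the Prometheus "le" bound, which is an approximation.

```python
import re

# Microseconds per unit, so the arithmetic stays in exact integers.
_US_PER_UNIT = {"us": 1, "ms": 1_000, "s": 1_000_000}


def metric_to_le(metric: str) -> str:
    """Convert a label such as '<20us' into an 'le' value in milliseconds."""
    if metric.startswith(">="):
        # The overflow bucket has no finite upper bound.
        return "+Inf"
    match = re.fullmatch(r"<(\d+)(us|ms|s)", metric)
    if match is None:
        raise ValueError(f"unrecognized bucket label: {metric!r}")
    micros = int(match.group(1)) * _US_PER_UNIT[match.group(2)]
    whole_ms, frac_us = divmod(micros, 1000)
    if frac_us == 0:
        return str(whole_ms)
    # Render the sub-millisecond part without trailing zeros, e.g. 0.020 -> 0.02.
    return f"{whole_ms}.{frac_us:03d}".rstrip("0")


les = [metric_to_le(m) for m in ("<20us", "<600us", "<1ms", "<120s", ">=120s")]
```

Working in integer microseconds avoids floating-point noise in the label text, which matters because "le" values are compared as strings when series are matched.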

Describe alternatives you've considered

We currently can collect these histograms in an internal perf collection tool, but we would like to transfer all collections to Harvest since it is way better in so many ways.

Thanks for the detailed report and doc references @rodenj1

You're probably the first person trying to use ONTAP histograms converted to Prometheus histograms via Harvest. None of the current dashboards use them.

All of that is to say, they've probably been incorrectly formatted since day one.

We'll fix this

hi @rodenj1 this change is in nightly. If you want to try it out before the next release, you can grab the latest nightly build.

Rest support is pending

Sorry, hit the wrong button

Hi Chris, Thank you for working on this. I have one of my harvester collectors running the nightly update, and I noticed a couple of things.

- As the buckets increase, it should include the count from all previous values.

Currently the output looks like this

... consider that all other bucket values are 0

waflremote_fc_retrieve_hist_bucket{datacenter="DC01",cluster="cluster1",node="node-01",process_name="",le="20000"} 0

waflremote_fc_retrieve_hist_bucket{datacenter="DC01",cluster="cluster1",node="node-01",process_name="",le="40000"} 0

waflremote_fc_retrieve_hist_bucket{datacenter="DC01",cluster="cluster1",node="node-01",process_name="",le="60000"} 3070

waflremote_fc_retrieve_hist_bucket{datacenter="DC01",cluster="cluster1",node="node-01",process_name="",le="80000"} 1114

waflremote_fc_retrieve_hist_bucket{datacenter="DC01",cluster="cluster1",node="node-01",process_name="",le="100000"} 0

waflremote_fc_retrieve_hist_bucket{datacenter="DC01",cluster="cluster1",node="node-01",process_name="",le="200000"} 4

waflremote_fc_retrieve_hist_bucket{datacenter="DC01",cluster="cluster1",node="node-01",process_name="",le="400000"} 1

... consider that all other bucket values are 0

waflremote_fc_retrieve_hist_bucket{datacenter="DC01",cluster="cluster1",node="node-01",process_name="",le="+Inf"} 0

It should become this...

... There were Zero observations for each bucket before this...

waflremote_fc_retrieve_hist_bucket{datacenter="DC01",cluster="cluster1",node="node-01",process_name="",le="20000"} 0

waflremote_fc_retrieve_hist_bucket{datacenter="DC01",cluster="cluster1",node="node-01",process_name="",le="40000"} 0

waflremote_fc_retrieve_hist_bucket{datacenter="DC01",cluster="cluster1",node="node-01",process_name="",le="60000"} 3070

waflremote_fc_retrieve_hist_bucket{datacenter="DC01",cluster="cluster1",node="node-01",process_name="",le="80000"} 4184

waflremote_fc_retrieve_hist_bucket{datacenter="DC01",cluster="cluster1",node="node-01",process_name="",le="100000"} 4184

waflremote_fc_retrieve_hist_bucket{datacenter="DC01",cluster="cluster1",node="node-01",process_name="",le="200000"} 4188

waflremote_fc_retrieve_hist_bucket{datacenter="DC01",cluster="cluster1",node="node-01",process_name="",le="400000"} 4189

... There were Zero observations for each bucket after this...

waflremote_fc_retrieve_hist_bucket{datacenter="DC01",cluster="cluster1",node="node-01",process_name="",le="+Inf"} 4189

- _count is missing. This is just the count of observations

waflremote_fc_retrieve_hist_count{datacenter="DC01",cluster="cluster1",node="node-01",process_name=""} 4189

- _sum is missing. This is the sum total of the values of each observation. This one ends up being a bit of a math game since we don't get the actual values. ...(60000 * 3070) + (80000 * 1114) + (100000 * 0) + (200000 * 4) + (400000 * 1)... = 274520000

waflremote_fc_retrieve_hist_sum{datacenter="DC01",cluster="cluster1",node="node-01",process_name=""} 274520000
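The three points above can be sketched together in Python. This is an illustrative sketch, not Harvest's implementation: the function name `to_prometheus` and the input shape are assumptions, and the data is the per-bucket sample quoted above (all other buckets are zero).

```python
from itertools import accumulate

# (upper bound, per-bucket frequency) from the sample above.
per_bucket = [
    (20000, 0), (40000, 0), (60000, 3070), (80000, 1114),
    (100000, 0), (200000, 4), (400000, 1),
]
overflow = 0  # observations above the largest finite bound


def to_prometheus(per_bucket, overflow):
    """Derive cumulative buckets, _count, and an approximate _sum."""
    bounds = [bound for bound, _ in per_bucket]
    freqs = [freq for _, freq in per_bucket]
    # Each Prometheus bucket includes every observation at or below its bound.
    cumulative = list(accumulate(freqs))
    count = cumulative[-1] + overflow
    buckets = [(str(b), c) for b, c in zip(bounds, cumulative)]
    buckets.append(("+Inf", count))
    # The raw values are not available, so weight each bucket by its upper bound.
    approx_sum = sum(bound * freq for bound, freq in per_bucket)
    return buckets, count, approx_sum


buckets, count, approx_sum = to_prometheus(per_bucket, overflow)
```

The "+Inf" bucket and _count carry the same value by construction, and the _sum here is the sum-of-products approximation described above rather than a true sum.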

hi @rodenj1 thanks for giving it a try and sharing your findings. I'll reply in order to your points.

- Got it, that makes sense. Convert ONTAP's per-bucket frequencies into Prometheus's cumulative buckets.

- _count is missing, that's true. I was under the impression that it wasn't required if the +Inf bucket was present. Perhaps I misunderstood. Either way, if it's essential to what you're trying to do, we can add it.

- _sum, yep that one ONTAP doesn't give us. I thought about the sum of products, but that's a fairly gross approximation since we've "lost" the original values. We can do the sum of products if you think that approximation is better than nothing.
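To get a feel for how rough the sum of products is, one can bracket the true sum between a lower and an upper estimate. The Python sketch below is only an illustration: the helper name `sum_bounds` is made up, it assumes each bucket's lower edge is the previous bucket's upper bound (0 for the first bucket), and it ignores any overflow observations.

```python
# (upper bound, per-bucket frequency) from the waflremote sample in this thread.
per_bucket = [
    (20000, 0), (40000, 0), (60000, 3070), (80000, 1114),
    (100000, 0), (200000, 4), (400000, 1),
]


def sum_bounds(per_bucket):
    """Return (lowest possible sum, highest possible sum) for the observations."""
    lower_total = upper_total = 0
    previous_bound = 0
    for upper_bound, freq in per_bucket:
        # Every observation in this bucket lies between the two edges.
        lower_total += previous_bound * freq
        upper_total += upper_bound * freq
        previous_bound = upper_bound
    return lower_total, upper_total


low, high = sum_bounds(per_bucket)
```

For this sample the sum of products using upper bounds (274520000) is the top of the bracket, and the bottom is 190240000, so the true sum could be roughly 30% lower than the reported value.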

@rodenj1 you can try the latest nightly build for these issues

Just got a chance to validate... Looks great!

Awesome, glad to hear you're all set. I'll leave this issue open until the REST changes are submitted.

@rodenj1 if there are histogram panels that you think would be broadly useful to Harvest, feel free to share and we can try adding them

@cgrinds I am using histogram stats for some FlexCache tracking, so they are probably not applicable to the greater audience. However, I was playing with NFSv3 latency and have a few histogram charts I can send over. I did notice a bug though; I think it is pretty minor. If I add this line to the collection config, the counter is not collected.

- nfsv3_latency_hist => latency_hist

However, if I don't rename it, it works fine.

- nfsv3_latency_hist

I think this is because of all the _bucket, _count, and _sum stats that need to get created. The bummer is that the stat name is "svm_nfs_nfsv3_latency_hist_bucket" rather than "svm_nfs_latency_hist_bucket".
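To make the expected naming concrete, here is a small Python sketch of how a "name => display" line could map to the three histogram series names. It is purely hypothetical and says nothing about how Harvest parses templates: the function `series_names` is made up, and the "svm_nfs" prefix is taken from the stat names quoted above.

```python
def series_names(object_prefix: str, counter_line: str) -> list[str]:
    """Build the _bucket/_sum/_count names for one template counter line."""
    # Drop the leading list marker, then split on the rename arrow if present.
    raw, _, display = counter_line.lstrip("- ").partition("=>")
    # Prefer the display name when a rename was given.
    base = (display or raw).strip()
    return [f"{object_prefix}_{base}_{suffix}" for suffix in ("bucket", "sum", "count")]


renamed = series_names("svm_nfs", "- nfsv3_latency_hist => latency_hist")
plain = series_names("svm_nfs", "- nfsv3_latency_hist")
```

With the rename honored, the first series would be svm_nfs_latency_hist_bucket; without it, svm_nfs_nfsv3_latency_hist_bucket, which is the name currently produced.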

Here is a screenshot of the NFSv3 latency histograms. Let me know if this is interesting.

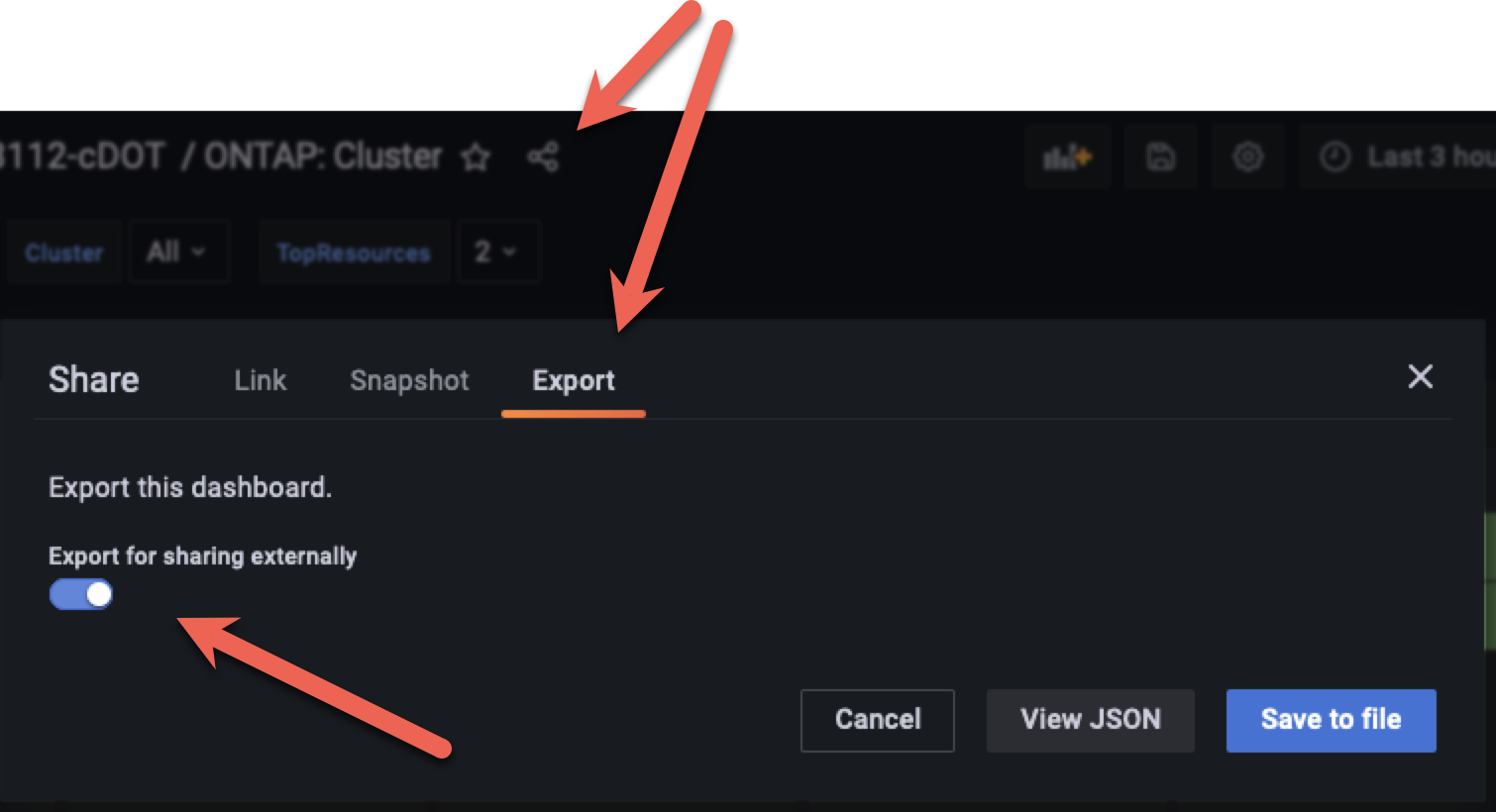

Nice! I think those are worth adding. Could you export for sharing externally and email [email protected] or attach them to this issue?

And yes, the name problem is a bug we'll fix.

hi @rodenj1 #1403 is fixed in nightly

RestPerf changes are also checked-in.

hi @rodenj1 reminder to send the histogram panels or dashboards. Feel free to email [email protected] or attach them to this issue. Thanks!

Sorry for the delay. I sent over the dashboard to the email and also verified that the rename now works. Thank you so much!

Thanks @rodenj1. I have opened a feature request to look into the shared dashboard. #1462

I verified with the 22.11 build, commit 6d5f6a48, and rodenj1 verified in an earlier nightly.