TensorRT

TRT plugin: can it support multiple outputs?

Description

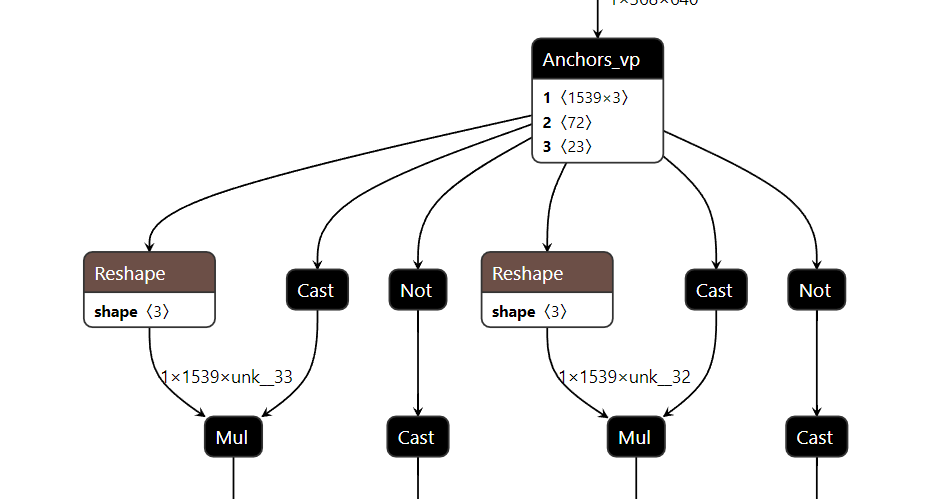

Can a TRT plugin support multiple outputs? I have implemented a multi-output plugin myself, but an error is reported at the next op node.

    -------------------------SgnetAnchorsVpDynamic::getOutputDimensions-----------0
    outputIndex:0
    ret.d[0]:1539 ret.d[1]:77
    ret.d[0]:0x2b5267c0 ret.d[1]:0x2b526a00
    -------------------------SgnetAnchorsVpDynamic::getOutputDimensions-----------1
    index:0
    index_type:0
    [08/22/2022-22:03:19] [V] [TRT] Registering tensor: onnx::Reshape_571 for ONNX tensor: onnx::Reshape_571
    terminate called after throwing an instance of 'std::out_of_range'
      what(): vector::_M_range_check: __n (which is 1) >= this->size() (which is 1)
    Aborted (core dumped)

Environment

**TensorRT Version**: 8.4
**GPU Type**: V100
**Nvidia Driver Version**: 515
**CUDA Version**: 11.4
**CUDNN Version**: 8.2

Relevant Files

Steps To Reproduce

TRT supports multiple plugin outputs, please check your code or refer to our OSS plugin implementation.

Can multiple outputs of different types also be supported? I only see reference code where all outputs have the same type.

I referred to the split plugin, but there is still an error:

    -------------------------SgnetAnchorsVpDynamic::getOutputDimensions-----------0
    outputIndex:0
    ret.d[0]:1539 ret.d[1]:77
    ret.d[0]:0x2b5267c0 ret.d[1]:0x2b526a00
    -------------------------SgnetAnchorsVpDynamic::getOutputDimensions-----------1
    index:0
    index_type:0
    [08/22/2022-22:03:19] [V] [TRT] Registering tensor: onnx::Reshape_571 for ONNX tensor: onnx::Reshape_571
    terminate called after throwing an instance of 'std::out_of_range'
      what(): vector::_M_range_check: __n (which is 1) >= this->size() (which is 1)
    Aborted (core dumped)

I don't know what the problem is. @zerollzeng

> Can different types of multiple outputs also be supported? I only see the same type of reference code

Yes, it's supported for IPluginV2IOExt and IPluginV2DynamicExt.
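For illustration, a mixed-type plugin typically returns a different type per output index from `getOutputDataType`. The sketch below is not TensorRT code: it uses a local stand-in enum instead of `nvinfer1::DataType` so that it compiles without the TensorRT headers. The helper name `outputTypeFor` is hypothetical. The three-output layout (two floating-point outputs and one bool output) is taken from the plugin posted later in this thread.

```cpp
#include <array>
#include <cstddef>
#include <stdexcept>

// Stand-in for nvinfer1::DataType so the sketch builds without TensorRT headers.
enum class DataType { kFLOAT, kHALF, kINT8, kINT32, kBOOL };

constexpr std::size_t kNbOutputs = 3;  // assumed output count for this sketch

// Hypothetical helper: returns the type of output `index`.
// Outputs 0 and 1 follow the precision of the driving input; output 2 is bool.
inline DataType outputTypeFor(std::size_t index, DataType drivingInput) {
    if (index >= kNbOutputs) {
        throw std::out_of_range("output index exceeds kNbOutputs");
    }
    const DataType fp =
        (drivingInput == DataType::kFLOAT) ? DataType::kFLOAT : DataType::kHALF;
    const std::array<DataType, kNbOutputs> types{fp, fp, DataType::kBOOL};
    return types[index];
}
```

In a real plugin, `getOutputDataType` would return the equivalent value for each index, `getNbOutputs` would return the same count, and `supportsFormatCombination` would need to accept the matching type at each output position.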

> don't know what's the problem @zerollzeng

I can't see the root cause with only those logs, can you share your plugin code here?

> Registering tensor: onnx::Reshape_571 for ONNX tensor: onnx::Reshape_571

Is your plugin's output tensor the input of a Reshape node?

@zerollzeng

    nvinfer1::DimsExprs SgnetAnchorsVpDynamic::getOutputDimensions(
        int outputIndex, const nvinfer1::DimsExprs *inputs, int nbInputs,
        nvinfer1::IExprBuilder &exprBuilder) noexcept {
      nvinfer1::DimsExprs ret(inputs[0]);
      // ret.nbDims = 3;
      cout << "ret.nbDims:" << ret.nbDims << endl;
      cout << "-------------------------SgnetAnchorsVpDynamic::"
              "getOutputDimensions-----------0"
           << endl;
      cout << "outputIndex:" << outputIndex << endl;
      // ret.d[0] = exprBuilder.constant(1);
      // ret.d[1] = exprBuilder.constant(1);
      // ret.d[2] = exprBuilder.constant(1);

      if (outputIndex == 0) {
        ret.d[1] = inputs[1].d[0]; // vp_base_anchors_dims[0]
        ret.d[2] = exprBuilder.constant(2 + 2 + 1 + n_offsets);
      } else if (outputIndex == 1) {
        ret.d[1] = inputs[1].d[0]; // vp_base_anchors_dims[0]
        ret.d[1] = exprBuilder.constant(2 + 2 + 1 + fmap_hw);
      } else {
        ret.d[1] = exprBuilder.constant(1);
        ret.d[2] = exprBuilder.constant(1);
      }
      cout << "-------------------------SgnetAnchorsVpDynamic::"
              "getOutputDimensions-----------1"
           << endl;
      return ret;
    }

    nvinfer1::DataType SgnetAnchorsVpDynamic::getOutputDataType(
        int index, const nvinfer1::DataType *inputTypes,
        int nbInputs) const noexcept {
      nvinfer1::DataType type0[] = {nvinfer1::DataType::kFLOAT,
                                    nvinfer1::DataType::kFLOAT,
                                    nvinfer1::DataType::kBOOL};
      nvinfer1::DataType type1[] = {nvinfer1::DataType::kHALF,
                                    nvinfer1::DataType::kHALF,
                                    nvinfer1::DataType::kBOOL};
      nvinfer1::DataType index_type =
          (inputTypes[1] == nvinfer1::DataType::kFLOAT) ? type0[index]
                                                        : type1[index];
      cout << "index:" << index << endl;
      cout << "index_type:" << (int)(index_type) << endl;
      return index_type;
      // return inputTypes[1];
    }

    class SgnetAnchorsVpDynamic : public nvinfer1::IPluginV2DynamicExt {
    public:
      SgnetAnchorsVpDynamic(const std::string &name, const nvinfer1::Dims img_hw,
                            int32_t fmap_hw, int32_t n_offsets);
      SgnetAnchorsVpDynamic(const std::string name, const void *data,
                            size_t length);
      SgnetAnchorsVpDynamic() = delete;

      // IPluginV2DynamicExt Methods
      nvinfer1::IPluginV2DynamicExt *clone() const noexcept override;
      nvinfer1::DimsExprs getOutputDimensions(
          int outputIndex, const nvinfer1::DimsExprs *inputs, int nbInputs,
          nvinfer1::IExprBuilder &exprBuilder) noexcept override;
      bool supportsFormatCombination(int pos,
                                     const nvinfer1::PluginTensorDesc *inOut,
                                     int nbInputs,
                                     int nbOutputs) noexcept override;
      void configurePlugin(const nvinfer1::DynamicPluginTensorDesc *in,
                           int nbInputs,
                           const nvinfer1::DynamicPluginTensorDesc *out,
                           int nbOutputs) noexcept override;
      size_t getWorkspaceSize(const nvinfer1::PluginTensorDesc *inputs,
                              int nbInputs,
                              const nvinfer1::PluginTensorDesc *outputs,
                              int nbOutputs) const noexcept override;
      int enqueue(const nvinfer1::PluginTensorDesc *inputDesc,
                  const nvinfer1::PluginTensorDesc *outputDesc,
                  const void *const *inputs, void *const *outputs,
                  void *workspace, cudaStream_t stream) noexcept override;
      void attachToContext(cudnnContext *cudnnContext,
                           cublasContext *cublasContext,
                           nvinfer1::IGpuAllocator *gpuAllocator) noexcept override;
      void detachFromContext() noexcept override;

      // IPluginV2Ext Methods
      nvinfer1::DataType getOutputDataType(int index,
                                           const nvinfer1::DataType *inputTypes,
                                           int nbInputs) const noexcept override;

      // IPluginV2 Methods
      const char *getPluginType() const noexcept override;
      const char *getPluginVersion() const noexcept override;
      int getNbOutputs() const noexcept override;
      int initialize() noexcept override;
      void terminate() noexcept override;
      size_t getSerializationSize() const noexcept override;
      void serialize(void *buffer) const noexcept override;
      void destroy() noexcept override;
      void setPluginNamespace(const char *pluginNamespace) noexcept override;
      const char *getPluginNamespace() const noexcept override;

    private:
      const std::string mLayerName;
      std::string mNamespace;
      nvinfer1::Dims img_hw;
      int32_t fmap_hw;
      int32_t n_offsets;
      cublasHandle_t m_cublas_handle; // To prevent compiler warnings.
    };
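As a side note on the posted `getOutputDimensions`: the `outputIndex == 1` branch assigns `ret.d[1]` twice, so the second assignment overwrites the first and `ret.d[2]` keeps the value copied from input 0. The sketch below shows the per-output shape rule under the assumption that the second assignment was meant for `d[2]`. It uses a plain `std::vector` as a stand-in for `nvinfer1::DimsExprs` so that it compiles without TensorRT. It also assumes that input 0 has rank 3. The name `outputShapeFor` is hypothetical.

```cpp
#include <cstdint>
#include <vector>

using Shape = std::vector<std::int64_t>;  // stand-in for nvinfer1::DimsExprs

// Hypothetical shape rule mirroring the posted getOutputDimensions.
// Assumes input 0 has rank 3 and that the second branch meant to set d[2].
inline Shape outputShapeFor(int outputIndex, const Shape& input0,
                            const Shape& input1, std::int64_t fmapHw,
                            std::int64_t nOffsets) {
    Shape ret = input0;  // start from input 0's dimensions, as the original does
    if (outputIndex == 0) {
        ret.at(1) = input1.at(0);
        ret.at(2) = 2 + 2 + 1 + nOffsets;
    } else if (outputIndex == 1) {
        ret.at(1) = input1.at(0);
        ret.at(2) = 2 + 2 + 1 + fmapHw;  // the posted code wrote ret.d[1] here
    } else {
        ret.at(1) = 1;
        ret.at(2) = 1;
    }
    return ret;
}
```

Whether this matches the intended shapes depends on the model; it only illustrates returning a distinct shape for each `outputIndex`.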

> Is your plugin's output tensor is the input of a reshape node?

Yes, structures like the one above.

> I can't see the root cause with only those logs, can you share your plugin code here?

@zerollzeng Thank you so much. I think I know what the problem is: getNbOutputs() was probably not implemented. For reference:

    int getNbOutputs() const noexcept override { return _output_lengths.size(); }
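The message `vector::_M_range_check: __n (which is 1) >= this->size() (which is 1)` is what libstdc++ reports when `vector::at(1)` is called on a one-element vector. One plausible reading, consistent with the `getNbOutputs()` diagnosis above, is that the plugin reported a single output while the ONNX node declared more. The sketch below illustrates that mismatch on its own and adds a simple guard. It is not the ONNX parser's code, and `FakePlugin`, `bindOutputs`, and `outputCountMatches` are hypothetical names.

```cpp
#include <cstddef>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical stand-in for a plugin; only the output count matters here.
struct FakePlugin {
    int nbOutputs;
    int getNbOutputs() const noexcept { return nbOutputs; }
};

// Binds each output name declared by the graph node to a plugin output slot.
// vector::at throws std::out_of_range when the node declares more outputs
// than the plugin reports.
inline std::vector<std::string> bindOutputs(
    const FakePlugin& plugin, const std::vector<std::string>& nodeOutputNames) {
    std::vector<std::string> slots(
        static_cast<std::size_t>(plugin.getNbOutputs()));
    for (std::size_t i = 0; i < nodeOutputNames.size(); ++i) {
        slots.at(i) = nodeOutputNames[i];  // at() range-checks the index
    }
    return slots;
}

// Guard a caller could run first to get a clearer diagnostic.
inline bool outputCountMatches(const FakePlugin& plugin,
                               std::size_t nodeOutputCount) {
    return plugin.getNbOutputs() >= 0 &&
           static_cast<std::size_t>(plugin.getNbOutputs()) == nodeOutputCount;
}
```

With a plugin that reports one output and a node that declares three, `bindOutputs` throws on index 1, which matches the shape of the error in the log. Returning the real output count from `getNbOutputs()` avoids the mismatch.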

Could you also provide the ONNX file? It looks like a failure in the ONNX parser.

I think the user has solved the problem, so I'm closing this. Feel free to reopen it if needed.