Evaluation Metrics

❓ Questions & Help

Details

For the next-item prediction task, when we evaluate on the test dataset, I noticed that the label for each sequence is the last item. How can we compute recall@k with only one target?

Thank you for the question @liguo88! Could you clarify what you mean by "get the recall@k with only 1 target"?

Does it mean your input sequence has only one element?

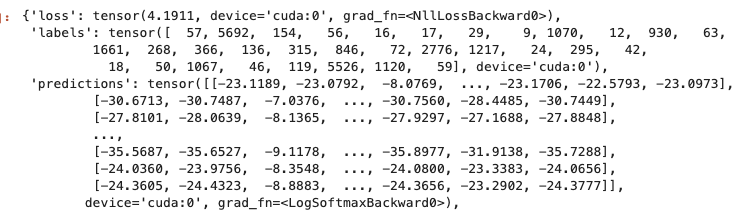

Thanks a lot for your reply @sararb. When I evaluate the model, I use the following script, and the screenshot shows `response`.


```python
model.eval()
response = model(batch, training=False)
```

So with batch_size = 32, the labels are a tensor of shape [32]. That is, for each sequence in the batch, the target is a single item: exactly the last item of that sequence.
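To make the setup concrete, here is a minimal sketch of how a single next-item target can be split off from each sequence. It assumes right-padded item-id sequences with 0 as the padding id; the helper `split_last_item` and the padding convention are illustrative assumptions, not the Transformers4Rec API.

```python
from typing import List, Tuple

PAD_ID = 0  # assumption: 0 is the padding item id

def split_last_item(batch: List[List[int]]) -> Tuple[List[List[int]], List[int]]:
    """Hypothetical helper: for each right-padded sequence, use the last
    non-padding item as the target and the items before it as the input."""
    inputs, targets = [], []
    for seq in batch:
        items = [i for i in seq if i != PAD_ID]  # drop padding ids
        inputs.append(items[:-1])   # every item except the last one
        targets.append(items[-1])   # exactly one target per sequence
    return inputs, targets

batch = [[12, 7, 57, 0, 0], [3, 9, 4, 21, 8]]
inputs, targets = split_last_item(batch)
print(inputs)   # [[12, 7], [3, 9, 4, 21]]
print(targets)  # [57, 8]
```

With a batch of 32 sequences this yields 32 targets, one per sequence, which matches a labels tensor of shape [32].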

When we calculate a metric such as recall for the first sequence, the target is only 57, not a sequence, so recall will be either 0 or 1. Is this a problem, or am I misunderstanding how recall is calculated?
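For reference, here are the standard per-example top-k definitions written out for the single-target case. This assumes the library uses these textbook definitions, which the thread does not confirm. Let $y$ be the target item and $T_k$ the set of the $k$ highest-scoring item ids, so the set of relevant items is $\{y\}$:

$$
\mathrm{Recall@}k = \frac{|\{y\} \cap T_k|}{|\{y\}|} = \mathbb{1}[y \in T_k] \in \{0, 1\},
\qquad
\mathrm{Precision@}k = \frac{|\{y\} \cap T_k|}{k} = \frac{\mathbb{1}[y \in T_k]}{k} \in \left\{0, \tfrac{1}{k}\right\}
$$

Averaged over a batch, recall@k is then the fraction of examples whose target lands in the top-k, and precision@k is that fraction divided by $k$, so it can be at most $1/k$.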

@liguo88 thank you for the clarification! In your example, we have 32 targets and a predictions tensor of shape [32, vocab_size]. This means that for each target (57, for example) the model returns scores over all items in the catalog (shape: [1, vocab_size]).

So to compute the recall@k for the target "57", we first extract the top-k ids with the highest prediction scores. Then we use this list of ids to compute the recall, i.e. we check whether "57" is in this list of ids.
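A minimal pure-Python sketch of that computation, assuming `scores` holds one row of length vocab_size per example and `targets` holds one item id per row. The function names are illustrative, not the library's metric implementation; on real tensors the same logic would typically use a vectorized top-k operation such as `torch.topk`.

```python
from typing import List, Sequence

def top_k_ids(scores: Sequence[float], k: int) -> List[int]:
    """Return the ids (column indices) of the k highest scores."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]

def recall_at_k(scores: Sequence[Sequence[float]], targets: Sequence[int], k: int) -> float:
    """Mean recall@k when each example has exactly one target item.

    Per example, recall is 1 if the target is among the top-k ids and 0
    otherwise; the batch value is the mean of these hits.
    """
    hits = [1.0 if t in top_k_ids(row, k) else 0.0 for row, t in zip(scores, targets)]
    return sum(hits) / len(hits)

# Toy example: 2 examples, catalog of 5 items (vocab_size = 5)
scores = [
    [0.1, 0.5, 0.2, 0.9, 0.0],  # top-2 ids: [3, 1]
    [0.3, 0.1, 0.8, 0.2, 0.4],  # top-2 ids: [2, 4]
]
targets = [1, 0]
print(recall_at_k(scores, targets, k=2))  # 0.5
```

The first target (1) is in its top-2 list and the second (0) is not, so the per-example recalls are 1 and 0 and their mean is 0.5.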

I hope this answers your question!

@sararb Thanks a lot for your answer. Yes, I understand. Just to confirm, for a single example:

- The recall will be either 0 or 1 since there is only one target, correct?
- Also, precision@10 will be either 0 or 1/10, so the highest possible precision is 0.1, and even the average should be at most 0.1. But what I got is `avg_precision_at_10 = 0.3691472113132477`. How is this possible?
- In the prediction step, do `model.eval()` and `response = model(batch, training=False)` also mask the last item? We should not mask the last item for prediction, right?
- For next-item prediction, the output has one score per item in the catalog, and the top-k recommendations are taken from the softmax. Is it possible to make it a generative model, where each step predicts one item and then uses that item to generate the next one? I am not sure which approach is better: if we want to recommend a sequence of items, I am not sure how good the recommendations will be if we simply pick the items with the highest softmax scores.
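The bound in the second question can be checked with a small sketch, assuming the textbook per-example definition of precision@k with one relevant item per example. The helper names are illustrative and this is not the library's implementation; it only shows that, under this definition, the mean cannot exceed 1/k, so a reported value above 0.1 at k=10 means the reported metric is not computed exactly as sketched here.

```python
from typing import List, Sequence

def top_k_ids(scores: Sequence[float], k: int) -> List[int]:
    """Return the ids (column indices) of the k highest scores."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]

def precision_at_k(scores: Sequence[Sequence[float]], targets: Sequence[int], k: int) -> float:
    """Mean precision@k with one relevant item per example.

    Each example contributes 1/k on a hit and 0 on a miss, so the mean is
    (number of hits) / (k * number of examples) and is at most 1/k.
    """
    hits = sum(1 for row, t in zip(scores, targets) if t in top_k_ids(row, k))
    return hits / (k * len(targets))

# Best case: every target is the top-scoring item in a catalog of 20 items
vocab_size, k = 20, 10
scores = [[float(v == t) for v in range(vocab_size)] for t in range(4)]
targets = list(range(4))
print(precision_at_k(scores, targets, k))  # 0.1
```

Even with a perfect ranking, each example has only one relevant item to place in its 10 slots, which is why 0.1 is the ceiling under this definition.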
