Reinforcement-learning-with-tensorflow

Reinforcement-learning-with-tensorflow copied to clipboard

Reinforcement-learning-with-tensorflow copied to clipboard

simply_PPO中与环境交互时为什么不使用old_pi而是pi

with tf.variable_scope('sample_action'): self.sample_op = tf.squeeze(pi.sample(1), axis=0) # choosing action

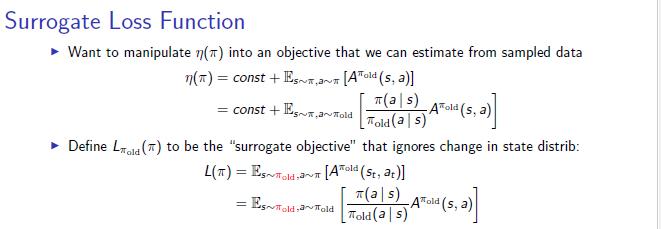

代码中与环境交互使用的是new_pi, 但是根据surrogate loss 公式的话,

不应该都用old pi 交互吗

不应该都用old pi 交互吗

Check algorithm 3 on page 12 in this paper. Gradients should be computed with respect to pi & these gradients are used for global & local updates.

EDIT: See algorithm 1 (line 2) on page 3 in the same paper for details on trajectory sampling.

Check algorithm 3 on page 12 in this paper. Gradients should be computed with respect to pi & these gradients are used for global & local updates.

Can you read Chinese?

#为什么不是用old_pi去选动作,而是用pi? 这个和后面的代码安排顺序有关。 #后面代码的大致执行流程是这样的:先拿pi去选出一回合的(s1,a1,s2,a2...),然后把pi复制到old_pi,然后固定old_pi不变、更新多次pi。可以看到其实就等价于是用的old_pi选动作。