sd-webui-controlnet

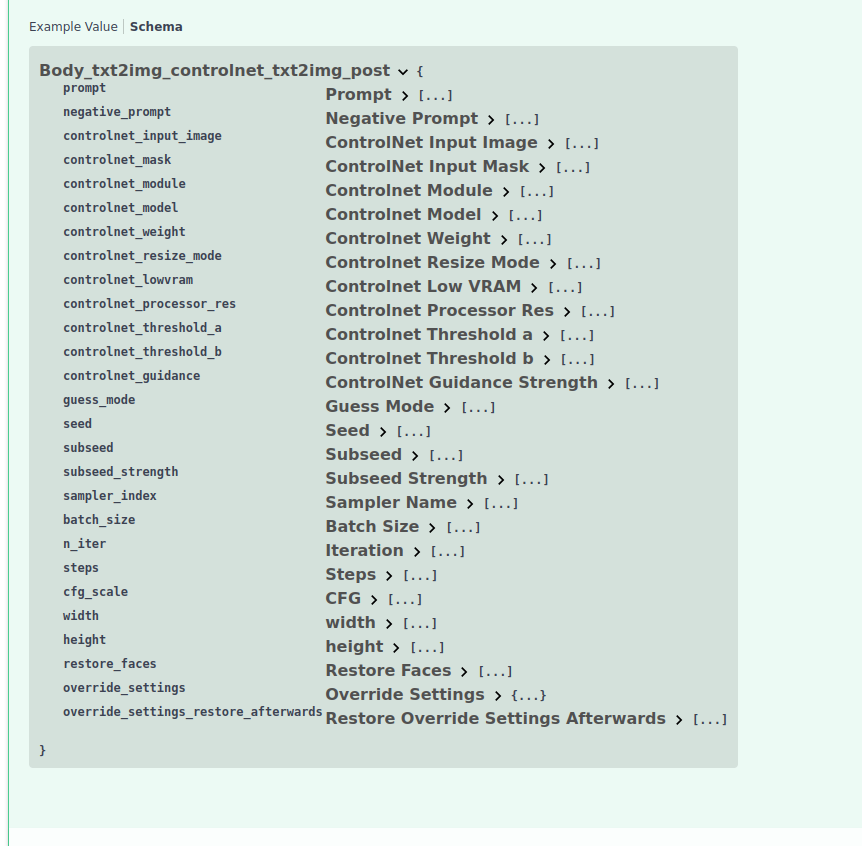

added an API layer

This API layer replicates SD-WebUI's txt2img and img2img pipeline, with added script parameters for ControlNet.

It has two caveats that need to be addressed, but it is currently in a working state with full support for SD-WebUI + ControlNet features.

- I still don't know what the first arg of p.script_args is. I observed it to be 0. It would help if someone could validate this!

- This API layer will soon need to automatically pull in the parameters of other AlwaysVisible scripts and only update those for ControlNet. Any advice is appreciated!

Can anyone confirm that Text-To-Image works? I'm getting bad outputs that seem like normal Text-To-Image. Everything loads and there are no errors though. And could you also return the generated control image at either the first or last image index if the pre-processor was used?

"controlnet_input_image": [img], "controlnet_module": 'openpose', "controlnet_model": 'control_sd15_openpose [fef5e48e]',

This is how the JSON objects are formatted, and these are the fields that are necessary. My setup works fine this way with depth, pose and scribble. Img is a base64 string for the image that goes into the ControlNet UI on the SD WebUI.

EDIT: The control image can be added, but I'm procrastinating on it. If you're interested in doing so, you just need to encode the image at the second index of the output, which is in numpy array format.
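
For anyone assembling the request body by hand, here is a minimal sketch of how the three fields above could be built in Python. The helper name `build_controlnet_fields`, the placeholder image bytes and the example `prompt` value are my own assumptions for illustration; only the three `controlnet_*` keys and their example values come from the post above.

```python
import base64
import json


def build_controlnet_fields(image_bytes, module, model):
    """Return the ControlNet-related JSON fields described above.

    image_bytes is the raw content of the control image file.
    """
    # The post above describes a base64 string wrapped in a list.
    img = base64.b64encode(image_bytes).decode("ascii")
    return {
        "controlnet_input_image": [img],
        "controlnet_module": module,
        "controlnet_model": model,
    }


# Placeholder bytes stand in for a real image read with open(path, "rb").
fields = build_controlnet_fields(
    b"not-a-real-image",
    module="openpose",
    model="control_sd15_openpose [fef5e48e]",
)
payload = {"prompt": "a person dancing", **fields}  # prompt value is illustrative
body = json.dumps(payload)
```

The resulting `body` string is what would be sent as the JSON request body; the endpoint path is left out here because it depends on the branch you are running.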

Good. Small advice:

- Move API stuff to another file, like api.py

- Maybe we should add some examples in the readme.

I see the issue now. Doesn't work with Euler apparently? Switched to DDIM and I'm getting proper results now.

Edit: Just tried with DPM++ 2M and the output was bad as well. Using canny btw

Edit: Just tested with depth and the same thing happens. It works with DDIM and PLMS but with Euler, LMS, Heun or DPM++ 2M the results become random. I've tried most of the samplers now and ControlNet appears to only be applying to DDIM and PLMS. They all work in the webui though. It could just be an issue with my installation.

I've dropped this change in, but it seems to break when visiting the docs: http://127.0.0.1:7860/docs#/

For those for whom this doesn't work: I forgot to highlight that it only works when ControlNet is installed as a script in img2img and txt2img. Try it without other scripts installed in the extension tab.

Good. Small advice:

- Move API stuff to another file, like api.py

- Maybe we should add some examples in the readme.

Will do when I have time. Quick Q:

I see in your script, that you arrange script args based on script index. Any tips in populating script args for multiple alwayson_scripts properly? Thanks!
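
One way to think about the question above, as a rough sketch only: if each script owns a contiguous slice of one flat argument list, the API layer could fill every slice with that script's defaults and overwrite only the ControlNet slice. The `ToyScript` class, the `args_from`/`args_to` attribute names as used here, and the default values are assumptions for illustration; please check them against your own checkout before relying on them.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Sequence


@dataclass
class ToyScript:
    """Stand-in for a script that owns args[args_from:args_to]."""
    name: str
    args_from: int
    args_to: int
    defaults: List[Any] = field(default_factory=list)


def assemble_script_args(scripts: Sequence[ToyScript],
                         overrides: Dict[str, List[Any]],
                         leading_index: int = 0) -> tuple:
    """Build one flat tuple: slot 0 is the leading index, then each slice."""
    total = max((s.args_to for s in scripts), default=1)
    flat: List[Any] = [None] * total
    flat[0] = leading_index
    for s in scripts:
        # Use the caller's values for this script if given, else its defaults.
        values = overrides.get(s.name, s.defaults)
        if len(values) != s.args_to - s.args_from:
            raise ValueError(f"wrong number of args for {s.name}")
        flat[s.args_from:s.args_to] = values
    return tuple(flat)


scripts = [
    ToyScript("other", 1, 3, ["a", "b"]),
    ToyScript("controlnet", 3, 5, [False, "none"]),
]
args = assemble_script_args(scripts, {"controlnet": [True, "canny"]})
```

Here the "other" script keeps its defaults and only the ControlNet slice changes, which is the behaviour asked about in the first post.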

For those for whom this doesn't work: I forgot to highlight that it only works when ControlNet is installed as a script in img2img and txt2img. Try it without other scripts installed in the extension tab.

@kft334 this might be the issue

Creating a new API was a wonderful idea, even if it's 'makeshift'. It makes things so much easier to edit.

One thing though, I pass in the controlnet_input_image as an array of base64 strings, and it throws an error ("Incorrect Padding").

Replacing

cn_image = Image.open(io.BytesIO(base64.b64decode(controlnet_input_image[0])))

with

cn_image = decode_base64_to_image(controlnet_input_image[0])

Fixed that error.
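
I have not checked what decode_base64_to_image does internally, so the following is only a sketch of two plausible causes of a padding error: a leading data-URI header such as "data:image/png;base64," and missing "=" padding. The helper name `decode_base64_payload` is hypothetical, and it returns raw bytes rather than an image so that it runs with the standard library alone.

```python
import base64


def decode_base64_payload(encoding: str) -> bytes:
    """Decode a base64 string that may carry a data-URI prefix."""
    # Drop a leading "data:image/png;base64," style header if present.
    if encoding.startswith("data:") and "," in encoding:
        encoding = encoding.split(",", 1)[1]
    # Restore any '=' padding that was stripped before the string arrived.
    encoding += "=" * (-len(encoding) % 4)
    return base64.b64decode(encoding)
```

The returned bytes can then be wrapped in io.BytesIO and passed to Image.open, as in the original line.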

Hello! I'm the author of the post on Reddit. I have a couple of suggestions to make your api layer better.

- You should add GET methods to retrieve the available Preprocessors and Models, just like in the standard A1111 api.

- It's better to make it in a different file. It will be easier to maintain.

I still don't know what the best solution would be from an architectural standpoint. You cloned the whole A1111 API for txt2img and img2img. I don't think that's a good idea, as it's hard to maintain: you'll have to track changes both in the A1111 API and in the ControlNet extension. My solution is not great either. I made the API only to retrieve the models, and created an A1111 script to control the ControlNet extension, so a user only has to add the script name and arguments to the existing API call. It's hacky too (and adds an extra script with UI), but at least you don't have to worry about maintaining the cloned API. I don't know if there is another way to do this apart from adding ControlNet to the core of A1111. Any suggestions would be appreciated.
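
As a rough illustration of the first suggestion, this is what the data behind a model-listing GET method could look like, independent of any web framework. The function name, the `model_list` key, the directory argument and the set of file extensions are all assumptions of mine, not the extension's actual layout.

```python
from pathlib import Path
from typing import Dict, List

# Assumed model file extensions; adjust to whatever your install uses.
MODEL_SUFFIXES = {".pth", ".ckpt", ".safetensors"}


def list_controlnet_models(models_dir: str) -> Dict[str, List[str]]:
    """Return the model file names found directly in models_dir, sorted."""
    root = Path(models_dir)
    if not root.is_dir():
        # A missing directory yields an empty list rather than an error.
        return {"model_list": []}
    names = sorted(
        p.name for p in root.iterdir()
        if p.is_file() and p.suffix.lower() in MODEL_SUFFIXES
    )
    return {"model_list": names}
```

A GET route would simply return this dictionary as JSON; a matching function for preprocessors could follow the same shape.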

Hey, I really loved your work! And great suggestions : )

Yeah, this is definitely a super makeshift effort : ) I just needed something quick and dirty for the moment, but as you said, maintaining it could become a headache. I think 1) adding the GET methods, 2) moving to a separate script (done locally on my system), and 3) returning control results, as you and others suggested, would be good for the moment. I'm also a fan of exploring the right implementation model going forward, which is why I wanted to start this thread.

@sangww - I'm having problems running this: the fallback case at line 350 is always True, so ControlNet is not used? Am I missing something?

It shouldn't be true. I have updated the commit, which now also includes an example API call from a Python notebook. This could be helpful for comparing with your setup. There are a few things to check, given how makeshift this is:

- Do you have other scripts installed on txt2img and img2img? There should be none for this to work correctly in the current implementation. You could simply disable those (such as additional network).

- Do you see any error message? I wonder if the input image for ControlNet is correctly encoded; a bad encoding could lead to failed processing.

No error message, no extra modules, and I installed your branch on a fresh install. I use the same encoding for the ControlNet input as for the initImage (without the "data:image/png;base64," substring), and the flag still evaluates to True.

Could you double-check whether this is occurring for you too? There is no exception clause in the chain of execution afterward, hence no error is displayed.

That is strange indeed. I suspect it could be the extension's own setting. Could you try this: go to the Settings tab -> ControlNet -> ensure "Allow other script to control this extension" is set to true? Edit: tested with my config and it worked as expected.

I have the same issue still, with the settings changes.

    # todo: extend to include other alwaysvisible scripts
    processed = scripts.scripts_img2img.run(p, *(p.script_args))
    if processed is None: # fall back
        processed = process_images(p)

Processed is always None here.

The new version of ControlNet adds Guidance strength, but there is no such parameter in the API. The default value of Guidance strength is zero when generating through the API, which results in serious errors in the output. Could you add this parameter to the API, or tell me how to add it? Please refer to the three pictures for the difference in results.

Adding more entries to script_args should fix it. (PR welcome.) https://github.com/Mikubill/sd-webui-controlnet/blob/5fed282f93ef28952dc517b0bb2e8dc3a1cca278/scripts/api.py#L170-L180

@Mikubill I'm confused: when I add the following code in the same way as the other parameters, it doesn't work.

    controlnet_guidance: float = Body(1.0, title='ControlNet Guidance Strength'),

    cn_args = {..., "guidance": controlnet_guidance,}

    p.script_args = (..., cn_args["guidance"],)

I see the issue now. Doesn't work with Euler apparently? Switched to DDIM and I'm getting proper results now.

Edit: Just tried with DPM++ 2M and the output was bad as well. Using canny btw

Edit: Just tested with depth and the same thing happens. It works with DDIM and PLMS but with Euler, LMS, Heun or DPM++ 2M the results become random. I've tried most of the samplers now and ControlNet appears to only be applying to DDIM and PLMS. They all work in the webui though. It could just be an issue with my installation.

This is why I wait like 1-2 weeks after a commit to update anything. Thanks for the comment. I'll wait a while longer before updating.

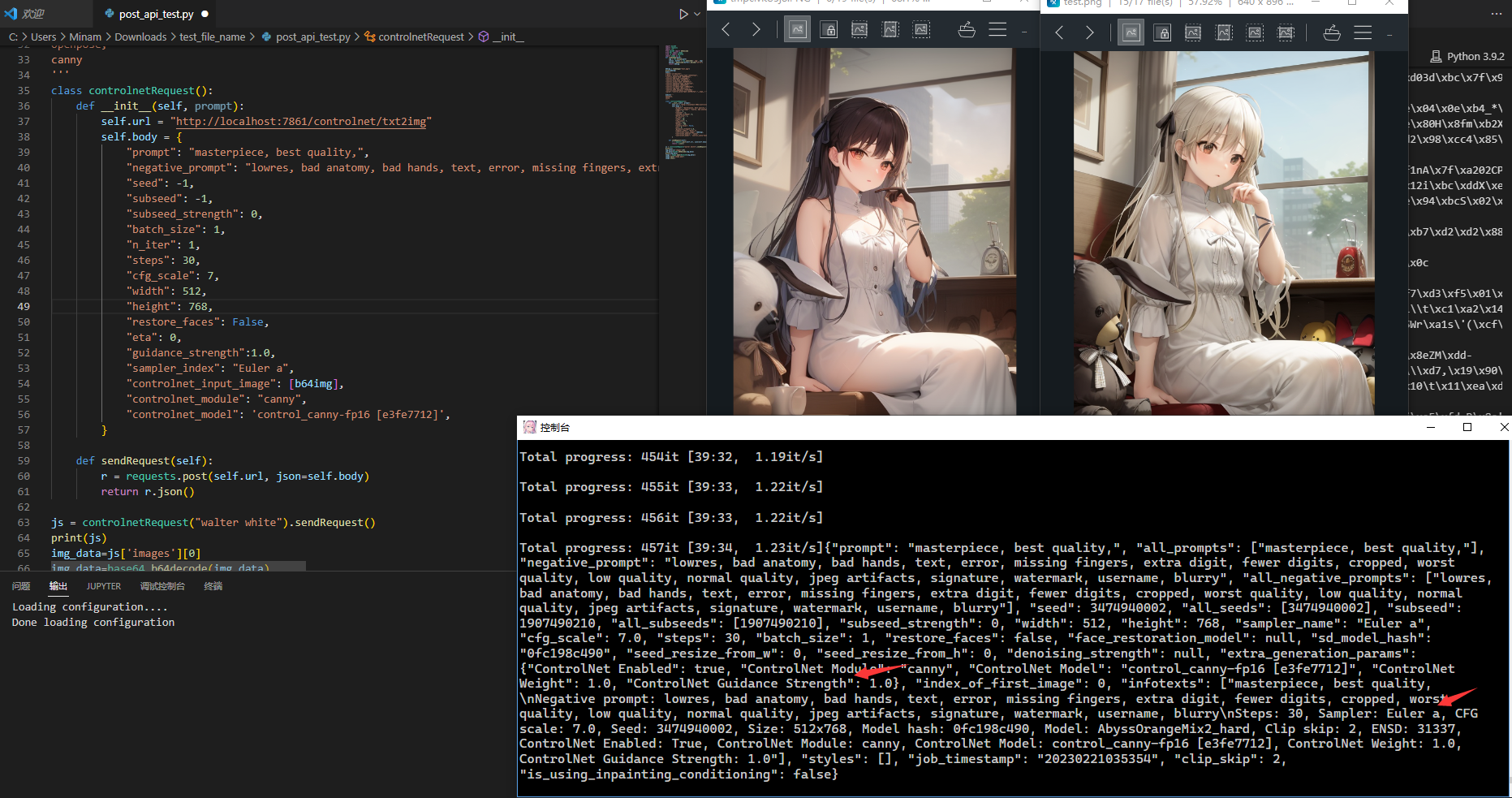

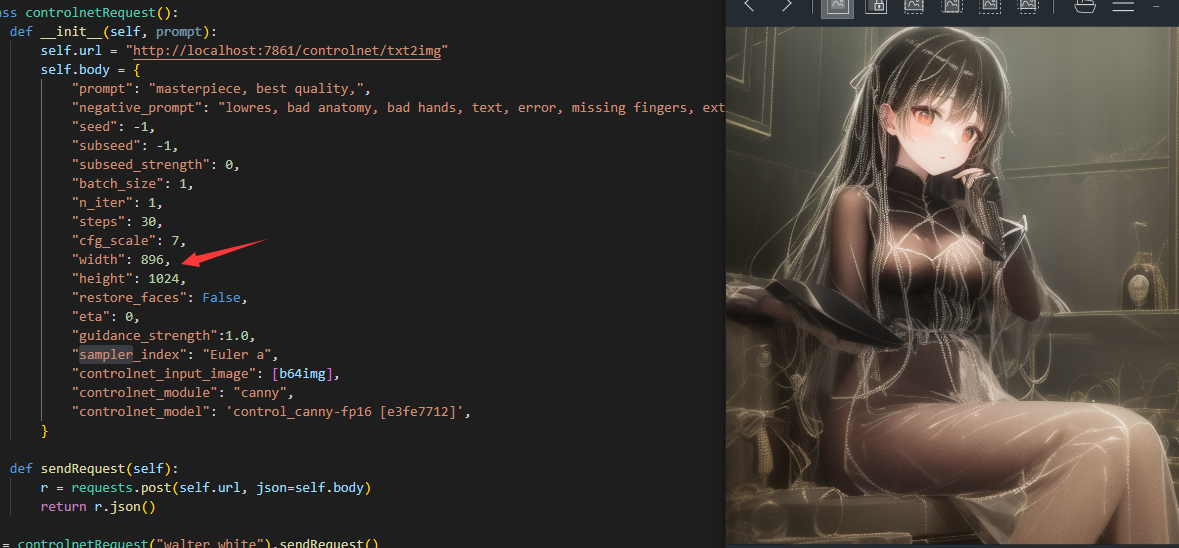

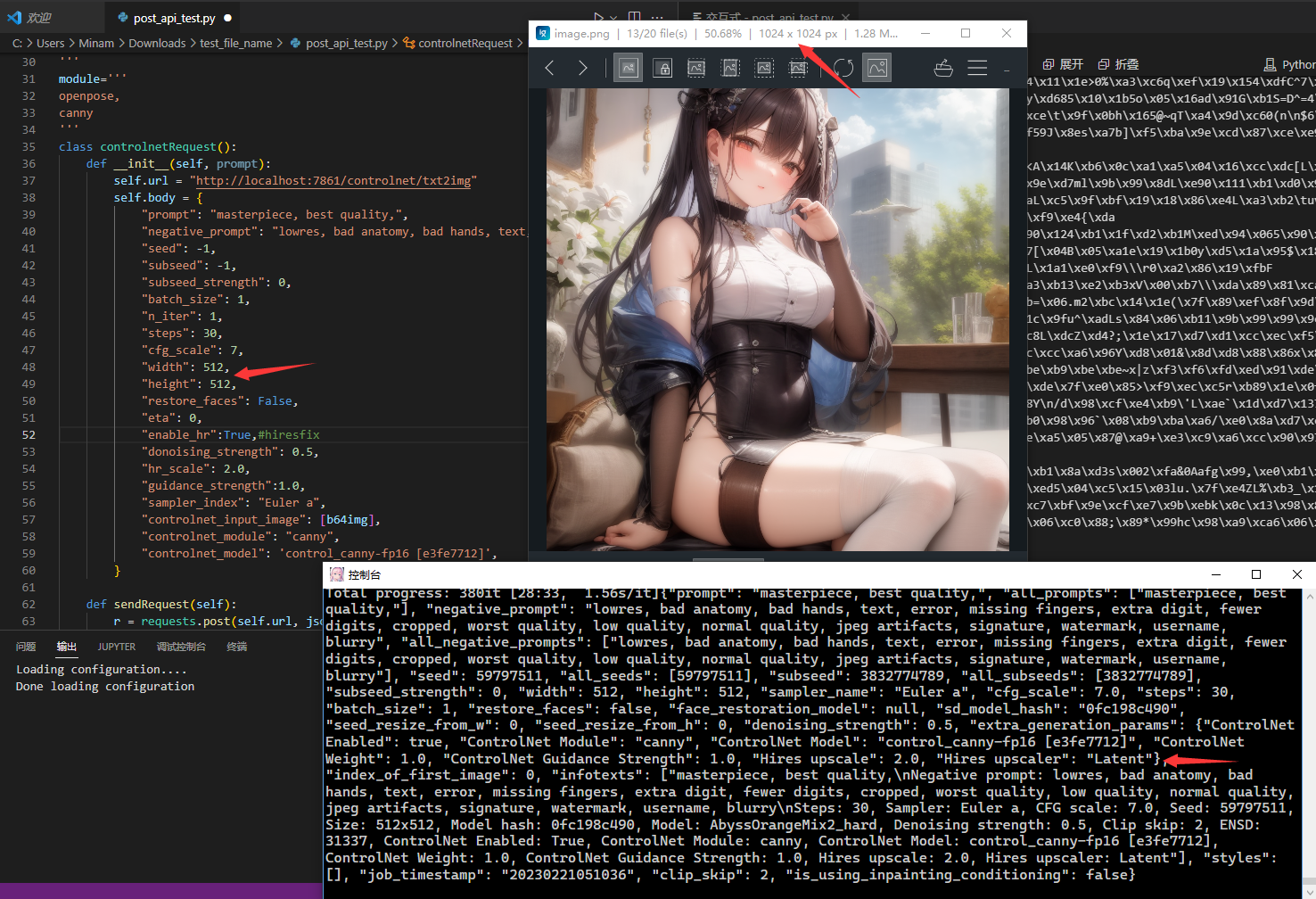

Note that the fourteenth position in Figure 1 is the guidance_strength parameter. A value of 0 causes serious errors in the results of Euler a and other samplers (you can modify its value in the webui to see its impact on each sampler; it has little effect on DDIM). In addition, modifications to api.py under sd-webui\extensions\sd-webui-controlnet\scripts do not take effect; the file needs to be placed under sd-webui\scripts. Figure 2 is the result of Euler a after setting guidance_strength=1.0. Here is a new question: I don't know why there are some color errors in the picture when the resolution is 896x1024 (see Figure 3).

I think I now have a general understanding of how this api.py works. I added a guidance_strength parameter to fix the problem of some samplers (such as Euler a) not working. For the problem of wrong colors at high resolutions, I added Hires. fix related parameters. I have submitted the relevant changes.
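
Since script_args is positional, a new field such as guidance_strength only helps if it lands in the slot the extension reads it from. Below is a small sketch of building the tuple from one ordered list of (name, default) pairs, so that a field the caller omits falls back to a sensible default instead of 0. The field order shown is hypothetical and much shorter than the real one; the names are illustrative only.

```python
from typing import Any, Dict, Sequence, Tuple

# Hypothetical, shortened field order. The real order must be read from the
# extension's source; the posts above place guidance strength in slot 14.
FIELD_ORDER: Sequence[Tuple[str, Any]] = (
    ("enabled", True),
    ("module", "none"),
    ("model", "None"),
    ("weight", 1.0),
    ("guidance_strength", 1.0),
)


def build_script_args(cn_args: Dict[str, Any],
                      field_order: Sequence[Tuple[str, Any]] = FIELD_ORDER,
                      leading_index: int = 0) -> tuple:
    """Lay out cn_args positionally, using defaults for missing fields."""
    known = {name for name, _ in field_order}
    unknown = set(cn_args) - known
    if unknown:
        # Fail loudly instead of silently dropping a misspelled field.
        raise KeyError(f"unexpected ControlNet fields: {sorted(unknown)}")
    values = [cn_args.get(name, default) for name, default in field_order]
    return (leading_index, *values)
```

Keeping the order in one place means adding a parameter is a one-line change, and the request handler and the tuple cannot drift apart.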

I have the same issue still, with the settings changes.

    # todo: extend to include other alwaysvisible scripts
    processed = scripts.scripts_img2img.run(p, *(p.script_args))
    if processed is None: # fall back
        processed = process_images(p)

Processed is always None here.

I am also facing the same issue. After a quick look, it seems like processed is None because script_index == 0 in script.run(). Launching with --nowebui btw.
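
To make the observation above concrete, here is a toy stand-in (not the real script runner) that returns None whenever the first argument is 0, followed by the same fallback pattern as in the quoted snippet. All class and function names here are made up for illustration.

```python
class ToyScriptRunner:
    """Toy model of a runner whose first argument selects a script."""

    def __init__(self, selectable_scripts):
        self.selectable_scripts = selectable_scripts

    def run(self, p, *args):
        script_index = args[0]
        if script_index == 0:
            # Index 0 means "no script selected", so nothing is run.
            return None
        script = self.selectable_scripts[script_index - 1]
        return script(p, *args[1:])


def process_images(p):
    """Placeholder for the regular processing path."""
    return f"processed:{p}"


def generate(runner, p, script_args):
    processed = runner.run(p, *script_args)
    if processed is None:  # fall back
        processed = process_images(p)
    return processed
```

If that reading is right, a None from run() is expected whenever the first entry of script_args is 0, and the fallback is the normal path rather than a failure. Whether ControlNet is then applied inside process_images depends on the extension and is not something this sketch shows.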

+1 on GET request for retrieving all preprocessors and controlnet models

Processed is always None here. What should I do?

My problem is as follows:

    ERROR: Exception in ASGI application
    Traceback (most recent call last):
      File "/home/xxxx/xx/stable-diffusion-webui/venv/lib/python3.8/site-packages/anyio/streams/memory.py", line 94, in receive
        return self.receive_nowait()
      File "/home/xxxx/xx/stable-diffusion-webui/venv/lib/python3.8/site-packages/anyio/streams/memory.py", line 89, in receive_nowait
        raise WouldBlock
    anyio.WouldBlock

    During handling of the above exception, another exception occurred:

    Traceback (most recent call last):
      File "/home/xxxx/xx/stable-diffusion-webui/venv/lib/python3.8/site-packages/starlette/middleware/base.py", line 77, in call_next
        message = await recv_stream.receive()
      File "/home/xxxx/xx/stable-diffusion-webui/venv/lib/python3.8/site-packages/anyio/streams/memory.py", line 114, in receive
        raise EndOfStream
    anyio.EndOfStream

    During handling of the above exception, another exception occurred:

    Traceback (most recent call last):
      File "/home/xxxx/xx/stable-diffusion-webui/venv/lib/python3.8/site-packages/uvicorn/protocols/http/h11_impl.py", line 407, in run_asgi
        result = await app( # type: ignore[func-returns-value]
      File "/home/xxxx/xx/stable-diffusion-webui/venv/lib/python3.8/site-packages/uvicorn/middleware/proxy_headers.py", line 78, in __call__
        return await self.app(scope, receive, send)
      File "/home/xxxx/xx/stable-diffusion-webui/venv/lib/python3.8/site-packages/fastapi/applications.py", line 271, in __call__
        await super().__call__(scope, receive, send)
      File "/home/xxxx/xx/stable-diffusion-webui/venv/lib/python3.8/site-packages/starlette/applications.py", line 125, in __call__
        await self.middleware_stack(scope, receive, send)
      File "/home/xxxx/xx/stable-diffusion-webui/venv/lib/python3.8/site-packages/starlette/middleware/errors.py", line 184, in __call__
        raise exc
      File "/home/xxxx/xx/stable-diffusion-webui/venv/lib/python3.8/site-packages/starlette/middleware/errors.py", line 162, in __call__
        await self.app(scope, receive, _send)
      File "/home/xxxx/xx/stable-diffusion-webui/venv/lib/python3.8/site-packages/starlette/middleware/base.py", line 104, in __call__
        response = await self.dispatch_func(request, call_next)
      File "/home/xxxx/xx/stable-diffusion-webui/modules/api/api.py", line 96, in log_and_time
        res: Response = await call_next(req)
      File "/home/xxxx/xx/stable-diffusion-webui/venv/lib/python3.8/site-packages/starlette/middleware/base.py", line 80, in call_next
        raise app_exc
      File "/home/xxxx/xx/stable-diffusion-webui/venv/lib/python3.8/site-packages/starlette/middleware/base.py", line 69, in coro
        await self.app(scope, receive_or_disconnect, send_no_error)
      File "/home/xxxx/xx/stable-diffusion-webui/venv/lib/python3.8/site-packages/starlette/middleware/gzip.py", line 24, in __call__
        await responder(scope, receive, send)
      File "/home/xxxx/xx/stable-diffusion-webui/venv/lib/python3.8/site-packages/starlette/middleware/gzip.py", line 44, in __call__
        await self.app(scope, receive, self.send_with_gzip)
      File "/home/xxxx/xx/stable-diffusion-webui/venv/lib/python3.8/site-packages/starlette/middleware/exceptions.py", line 79, in __call__
        raise exc
      File "/home/xxxx/xx/stable-diffusion-webui/venv/lib/python3.8/site-packages/starlette/middleware/exceptions.py", line 68, in __call__
        await self.app(scope, receive, sender)
      File "/home/xxxx/xx/stable-diffusion-webui/venv/lib/python3.8/site-packages/fastapi/middleware/asyncexitstack.py", line 21, in __call__
        raise e
      File "/home/xxxx/xx/stable-diffusion-webui/venv/lib/python3.8/site-packages/fastapi/middleware/asyncexitstack.py", line 18, in __call__
        await self.app(scope, receive, send)
      File "/home/xxxx/xx/stable-diffusion-webui/venv/lib/python3.8/site-packages/starlette/routing.py", line 706, in __call__
        await route.handle(scope, receive, send)
      File "/home/xxxx/xx/stable-diffusion-webui/venv/lib/python3.8/site-packages/starlette/routing.py", line 276, in handle
        await self.app(scope, receive, send)
      File "/home/xxxx/xx/stable-diffusion-webui/venv/lib/python3.8/site-packages/starlette/routing.py", line 66, in app
        response = await func(request)
      File "/home/xxxx/xx/stable-diffusion-webui/venv/lib/python3.8/site-packages/fastapi/routing.py", line 237, in app
        raw_response = await run_endpoint_function(
      File "/home/xxxx/xx/stable-diffusion-webui/venv/lib/python3.8/site-packages/fastapi/routing.py", line 163, in run_endpoint_function
        return await dependant.call(**values)
      File "/home/xxxx/xx/stable-diffusion-webui/extensions/sd-webui-controlnet/scripts/api.py", line 220, in txt2img
        processed = process_images(p)
      File "/home/xxxx/xx/stable-diffusion-webui/modules/processing.py", line 486, in process_images
        res = process_images_inner(p)
      File "/home/xxxx/xx/stable-diffusion-webui/modules/processing.py", line 657, in process_images_inner
        x_sample = modules.face_restoration.restore_faces(x_sample)
      File "/home/xxxx/xx/stable-diffusion-webui/modules/face_restoration.py", line 19, in restore_faces
        return face_restorer.restore(np_image)
      File "/home/xxxx/xx/stable-diffusion-webui/modules/codeformer_model.py", line 88, in restore
        self.create_models()
      File "/home/xxxx/xx/stable-diffusion-webui/modules/codeformer_model.py", line 65, in create_models
        checkpoint = torch.load(ckpt_path)['params_ema']
      File "/home/xxxx/xx/stable-diffusion-webui/modules/safe.py", line 106, in load
        return load_with_extra(filename, extra_handler=global_extra_handler, *args, **kwargs)
      File "/home/xxxx/xx/stable-diffusion-webui/modules/safe.py", line 151, in load_with_extra
        return unsafe_torch_load(filename, *args, **kwargs)
      File "/home/xxxx/xx/stable-diffusion-webui/venv/lib/python3.8/site-packages/torch/serialization.py", line 777, in load
        with _open_zipfile_reader(opened_file) as opened_zipfile:
      File "/home/xxxx/xx/stable-diffusion-webui/venv/lib/python3.8/site-packages/torch/serialization.py", line 282, in __init__
        super(_open_zipfile_reader, self).__init__(torch._C.PyTorchFileReader(name_or_buffer))
    RuntimeError: PytorchStreamReader failed reading zip archive: failed finding central directory

What's wrong? I run with --disable-safe-unpickle --nowebui.

No option to set SD model?

ah okay, I think I have to call /sdapi/v1/options first to set the model, thanks!
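
For reference, a minimal sketch of preparing that call with the standard library. The base URL is the local address mentioned earlier in the thread; the `sd_model_checkpoint` key and the checkpoint name are assumptions on my side, so check them against the /docs page of your own install. The request is only built here, not sent.

```python
import json
import urllib.request

BASE_URL = "http://127.0.0.1:7860"  # local address used earlier in this thread


def build_set_model_request(checkpoint_name: str, base_url: str = BASE_URL):
    """Prepare (but do not send) a POST to /sdapi/v1/options."""
    # Assumed option key for the active checkpoint.
    body = json.dumps({"sd_model_checkpoint": checkpoint_name}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/sdapi/v1/options",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_set_model_request("my-model.safetensors")  # hypothetical name
# To actually send it: urllib.request.urlopen(request)
```

Sending this once before the generation call should be enough, since the option stays set until it is changed again.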

https://github.com/Mikubill/sd-webui-controlnet/blob/main/example/test_api.ipynb In fact, you could use the sampler index item to set the model at run time. It works the same way as sampler_name.

--nowebui

It is hard to tell from the log what the issue is. It looks roughly like a .pth/.ckpt file issue. Does it run when you use ControlNet via the GUI?
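
One quick check, offered as a sketch rather than a diagnosis: the traceback ends in the CodeFormer checkpoint load used for face restoration, and "failed finding central directory" is the kind of message I would expect from a zip-format file that was cut short, for example by an interrupted download. The helper below only tests whether a file looks like a complete zip archive; its name and return labels are made up here.

```python
import zipfile

ZIP_MAGIC = b"PK\x03\x04"  # signature at the start of a zip local file header


def diagnose_checkpoint(path: str) -> str:
    """Classify a file as 'zip-ok', 'truncated-zip', or 'not-zip'."""
    with open(path, "rb") as fh:
        starts_like_zip = fh.read(4) == ZIP_MAGIC
    # is_zipfile looks for the end-of-central-directory record.
    if zipfile.is_zipfile(path):
        return "zip-ok"
    return "truncated-zip" if starts_like_zip else "not-zip"
```

A result of 'truncated-zip' for the file named by ckpt_path would suggest deleting it and downloading it again. 'zip-ok' only means the archive structure is complete, not that the weights inside are valid.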