windows-powershell-docs

New-VirtualDisk WriteCache mechanism

I find the WriteCache-related parameters description not detailed enough:

- At first, I even struggled to understand whether it stores the data in the performance tier or in the system RAM cache. Or is it trying to account for the on-disk RAM cache? (AFAIU, after some googling, it stores data on the performance-tier devices, but via some different mechanism. That wasn't easy to figure out, and IMHO the docs could be significantly improved here.)

- How does it distribute the cached data across the higher-performance-tier devices if there's more than one? What does

  > If the number or size of the solid-state drives (SSDs) or journal disks in the storage pool is not sufficient

  mean? How many SSDs would I need? Does it work like a 2-way mirror and thus require at least 2 devices? Or maybe it works like a 3-way mirror? Or maybe it just stores the data inside the performance tier, like the normal data that was moved there based on the heatmap? If not, does the result depend on what my higher-performance tier is?

  - During my experiments (mostly based on this nice article) with 3 SSDs in the performance tier, I found that I have to subtract `3*WriteCacheSize` from `SSDTierSizes`, which looks like a 3-way mirror, even though my SSD tier `ResiliencySettingName` is `Simple`. This behavior seems to be undocumented.

- This article mentions that at least "shorter-than-cluster-size" writes do utilize the higher-performance tier somehow:

  > If a 4K write is made to a range currently in the capacity tier, ReFS must read the entire cluster from the capacity tier into the performance tier before making the write

  - I was unable to find such details in any more official doc. It looks like there's just no official description of how write caching works and what to expect from it.

  - ~This article mentions that in "long-write" cases the write cache is bypassed (they mostly discuss Parity spaces though).~

    > since Windows 10 build 1809, if you do that, Windows will completely bypass the cache and do full stripe writes

    ~Is that true for all scenarios (e.g. "simple" spaces)? Is Storage Spaces fundamentally limited by the capacity tier performance for sequential burst writes, even if my "burst" size is smaller than the `WriteCacheSize`?~

  - ~When would the result of such a write operation be flushed back to the capacity tier? After the `WriteCache` capacity is exceeded? As soon as possible, regardless of the existing IOPS load on the capacity tier?~

  - After a couple of diskspd runs with 16KB and 64KB random writes to a 32GB file on a filesystem with 64KB cluster size and 33GB WriteCache, it is evident that WriteCache does not help smaller-than-cluster-size writes (i.e. there's probably no attempt to copy everything to the WriteCache), but 64KB writes are notably faster than they would be on the plain pair of HDDs that I have in the capacity tier (thus, it looks like Storage Spaces is not trying to flush them to the HDDs too early).

    - `diskspd64.exe -d200 -Z100M -w100 -c32G -b16K -o32 -t1 -r -S M:\temp\diskspd.junk`: ~1 MB/s
    - `diskspd64.exe -d200 -Z100M -w100 -c32G -b64K -o32 -t1 -r -S M:\temp\diskspd.junk`: ~1500 MB/s

    OK, that could be a somewhat too performance-specific point for the doc in question, so I'll cross it out. Meanwhile, IMHO, it would still be nice to see some simple benchmarks like this (i.e. not complex multi-node setups) somewhere in the official docs.

- Why do you recommend such a tiny write cache size (1GB)? What could go wrong if I set `WriteCacheSize` to a few TB? CPU use? RAM use? Latency? I've found pretty old statements that it should be kept below 16GB, but they still do not explain why. Is there any simple reproducible experiment that demonstrates the disadvantages of a larger WBC?
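To make the sizing observation from the 3-SSD experiment above concrete, here is a minimal sketch of the arithmetic. It only encodes what was observed (the SSD tier had to shrink by `3*WriteCacheSize`). The factor of 3 is an empirical, undocumented value, and the function name, the `cache_copies` parameter, and the 300 GiB tier capacity are hypothetical and used only for illustration.

```python
GIB = 1024 ** 3


def max_ssd_tier_size(supported_tier_bytes: int,
                      write_cache_bytes: int,
                      cache_copies: int = 3) -> int:
    """Return the largest SSD tier size that still leaves room for the cache.

    cache_copies=3 reflects the behavior observed in the experiment
    (3 SSDs, Simple resiliency); it is an assumption, not a documented rule.
    """
    reserved = cache_copies * write_cache_bytes
    if reserved > supported_tier_bytes:
        raise ValueError("write cache reservation exceeds SSD tier capacity")
    return supported_tier_bytes - reserved


# Hypothetical 300 GiB of supported SSD tier space with the 33 GiB cache
# used in the experiment: 300 - 3 * 33 GiB remain for the tier itself.
print(max_ssd_tier_size(300 * GIB, 33 * GIB) // GIB, "GiB left for the SSD tier")
```

If the docs stated the actual number of cache copies per resiliency setting, `cache_copies` could be replaced by a documented value instead of a guess.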

Some background: https://superuser.com/q/1098636/135260
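For comparing the two diskspd runs quoted above, it can help to convert throughput into IOPS, since the block sizes differ. This is a rough sketch that assumes the reported "MB/s" figures are binary megabytes per second (MiB/s) and that the values are the approximate ones from this issue. The helper name is hypothetical.

```python
KIB = 1024
MIB = 1024 * KIB


def iops(throughput_mib_s: float, block_kib: int) -> float:
    """Convert throughput (MiB/s) at a fixed block size (KiB) into IOPS."""
    return throughput_mib_s * MIB / (block_kib * KIB)


# Approximate figures from the two runs: (throughput in MiB/s, block size in KiB).
runs = {"16K random writes": (1, 16), "64K random writes": (1500, 64)}

for name, (mib_s, block_kib) in runs.items():
    print(f"{name}: ~{iops(mib_s, block_kib):.0f} IOPS")
```

Under these assumptions the 16K run works out to roughly 64 IOPS and the 64K run to roughly 24000 IOPS, so the gap is even larger in operations per second than the raw throughput numbers suggest.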

Document Details

⚠ Do not edit this section. It is required for docs.microsoft.com ➟ GitHub issue linking.

- ID: 075ae2cc-e21d-b785-a7da-1651776428a8

- Version Independent ID: d2de71c0-90d0-1b25-7412-2a4ab53cdc99

- Content: New-VirtualDisk (Storage)

- Content Source: docset/winserver2022-ps/storage/New-VirtualDisk.md

- Product: w10

- Technology: windows

- GitHub Login: @JasonGerend

- Microsoft Alias: jgerend

To make it easier for you to submit feedback on articles on learn.microsoft.com, we're transitioning our feedback system from GitHub Issues to a new experience.

As part of the transition, this GitHub Issue will be moved to a private repository. We're moving Issues to another repository so we can continue working on Issues that were open at the time of the transition. When this Issue is moved, you'll no longer be able to access it.

If you want to provide additional information before this Issue is moved, please update this Issue before December 15th, 2023.

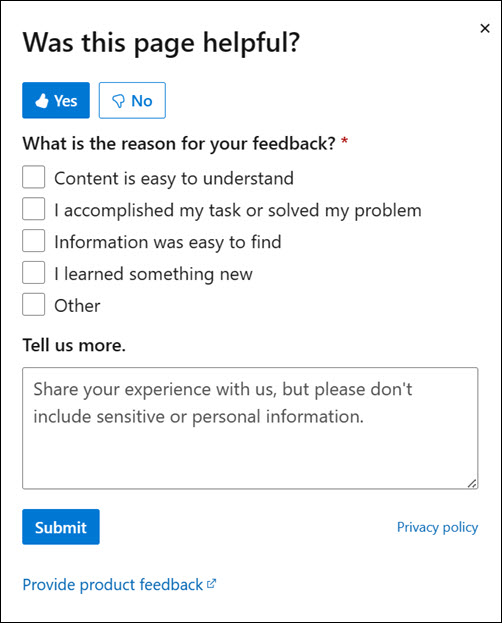

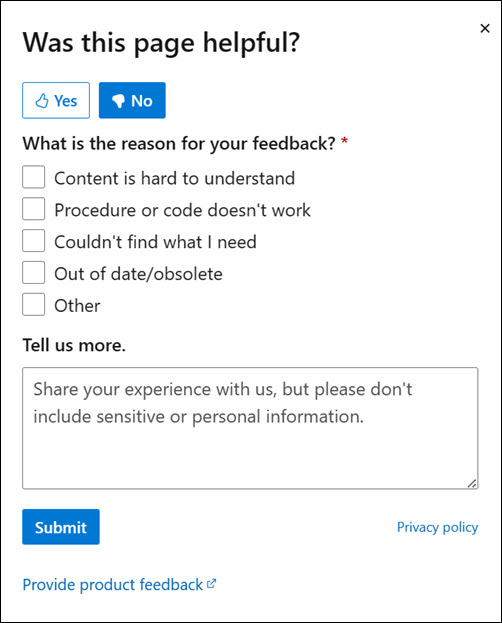

With the new experience, you no longer need to sign in to GitHub to enter and submit your feedback. Instead, you can choose directly on each article's page whether the article was helpful. Then you can then choose one or more reasons for your feedback and optionally provide additional context before you select Submit.

Here's what the new experience looks like.

Note: The new experience is being rolled out across learn.microsoft.com in phases. If you don't see the new experience on an article, please check back later.

First, select whether the article was helpful:

Then, choose at least one reason for your feedback and optionally provide additional details about your feedback:

| Article was helpful | Article was unhelpful |

|---|---|

|

|

Finally, select Submit and you're done!