GPU accelerated UMAP and HDBSCAN issues: memory and predict

Hello everyone,

First issue: memory

cuml.manifold.UMAP crashes with the following error whenever I call fit_transform on more than 1,500,000 documents.

2022-07-27 15:40:09,788 - BERTopic - Transformed documents to Embeddings

Traceback (most recent call last):

File "/home/natethegreat/bertopic/bertopic_model_cuml.py", line 17, in

The same error does not occur if I use the CPU versions of UMAP and HDBSCAN. My understanding is that it happens because the amount of dedicated GPU memory is much smaller than regular RAM (8 GB vs 128 GB in my case).

Are there any options to circumvent this issue? Like maybe we could split the process into smaller batches or use shared gpu memory?

Also, a model trained on fewer than 1,500,000 documents shows two odd behaviours:

- The resulting topics often have duplicated words inside e.g. "14_gold_gold money_silver_money gold"

- Regardless of whether or not training is successful, the message "Segmentation fault" is printed at the end.

Second issue: model.predict

With a model trained with the GPU-accelerated versions of UMAP and HDBSCAN, running model.transform([sentence]) causes the following error:

Traceback (most recent call last):

File "

Thank you regardless of whether something comes out of this.

code:

from bertopic import BERTopic
from cuml.cluster import HDBSCAN
from cuml.manifold import UMAP
from sklearn.feature_extraction.text import CountVectorizer
import pickle

umap_model = UMAP(n_components=5, n_neighbors=15, min_dist=0.0)
hdbscan_model = HDBSCAN(min_samples=10, gen_min_span_tree=True)

vectorizer_model = CountVectorizer(ngram_range=(1, 2), stop_words="english", min_df=100)

topic_model = BERTopic(umap_model=umap_model, hdbscan_model=hdbscan_model, vectorizer_model=vectorizer_model, verbose=True, calculate_probabilities=False, low_memory=True)

docs = pickle.load(open("docs_0.35.pkl", "rb")) # <--- 220 mb. approx. 1.6m samples

topic_model.fit(docs)

pickle.dump(topic_model, open("bert_model_2.0.pkl", "wb"))

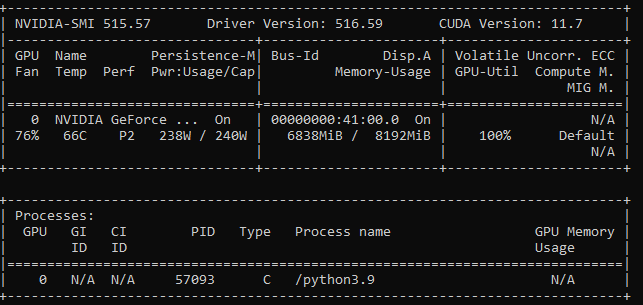

nvidia-smi:

Hello, I get the same problem as in the second issue (model.predict).

Did you manage to solve it?

Sadly not. Still waiting for someone to bail us out

The first issue is likely an out-of-memory (OOM) error. Though UMAP should not spike memory too significantly, it's possible that its requirements plus the other data on your GPU are causing the OOM. UMAP creates a sparse KNN graph on the order of n_samples * n_neighbors, so reducing the number of neighbors will reduce the memory needed for that data structure.
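To put rough numbers on the n_samples * n_neighbors scaling mentioned above, here is a back-of-the-envelope sketch. The per-entry byte sizes (8-byte index, 4-byte distance) and the 384-dimensional float32 embeddings are assumptions for illustration only; they are not stated in this thread and may not match what cuML actually allocates. The helper names are hypothetical.

```python
def knn_graph_bytes(n_samples, n_neighbors, index_bytes=8, dist_bytes=4):
    """Rough size of a KNN graph that stores one neighbor index and one
    distance per (sample, neighbor) pair. Byte sizes are assumed."""
    return n_samples * n_neighbors * (index_bytes + dist_bytes)


def embedding_bytes(n_samples, dim, value_bytes=4):
    """Size of a dense embedding matrix (float32 assumed)."""
    return n_samples * dim * value_bytes


def to_gib(n_bytes):
    return n_bytes / 2**30


n_docs = 1_600_000  # approximate document count from the original post

# The graph grows linearly with n_neighbors.
for k in (15, 10, 5):
    print(f"n_neighbors={k:>2}: ~{to_gib(knn_graph_bytes(n_docs, k)):.2f} GiB")

# Embedding dimension of 384 is a placeholder, not taken from the thread.
print(f"embeddings: ~{to_gib(embedding_bytes(n_docs, 384)):.2f} GiB")
```

Under these assumptions the graph itself is small next to an 8 GB card, which fits the remark that UMAP alone should not spike memory much; the input embeddings and whatever else already sits on the GPU are likelier to tip it over.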

The predict error is due to cuML's HDBSCAN not yet supporting approximate_predict. Please see https://github.com/MaartenGr/BERTopic/issues/647#issuecomment-1199863402 for more context.

Yes, as beckernick said, just remove the hdbscan_model=... argument when you initialize your BERTopic model. No problems after that! :)
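For anyone landing here, the workaround above might look like the sketch below. It reuses the parameters from the original post and simply omits hdbscan_model, so BERTopic falls back to its default CPU HDBSCAN while UMAP still runs on the GPU. The function name build_topic_model is hypothetical, and this has not been run against the original dataset.

```python
def build_topic_model():
    """Build a BERTopic model with GPU UMAP and the default (CPU) clusterer."""
    # Imported lazily so this file can be loaded on a machine without cuML.
    from bertopic import BERTopic
    from cuml.manifold import UMAP
    from sklearn.feature_extraction.text import CountVectorizer

    umap_model = UMAP(n_components=5, n_neighbors=15, min_dist=0.0)
    vectorizer_model = CountVectorizer(
        ngram_range=(1, 2), stop_words="english", min_df=100
    )

    # The cuML clusterer is deliberately not passed here, so BERTopic
    # uses its own default CPU HDBSCAN.
    return BERTopic(
        umap_model=umap_model,
        vectorizer_model=vectorizer_model,
        verbose=True,
        calculate_probabilities=False,
        low_memory=True,
    )
```

Call topic_model = build_topic_model() and then topic_model.fit(docs) as in the original script.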

Thank you guys!

approximate_predict function for HDBSCAN: https://github.com/rapidsai/cuml/pull/4872

Nightly Release: https://github.com/rapidsai/cuml/releases/tag/v22.10.00a

Installed the nightly release but I'm still getting the error mentioned above... Any ideas?

@sebastien-mcrae Support for cuML's HDBSCAN as a one-to-one replacement for the CPU HDBSCAN is not yet implemented in BERTopic, and it will take some time before it is fully implemented.

In your case, when you use cuML's HDBSCAN it recognizes it as a cluster model, not necessarily an HDBSCAN-like model. As such, it defaults back to what is expected from cluster models in BERTopic, namely that it needs .fit and .predict functions for it to work.
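To illustrate the .fit/.predict expectation described above, here is a small duck-typing sketch. It is not BERTopic's actual code; missing_cluster_methods and the two stand-in classes are hypothetical and only show why a clusterer without a predict method would fail at transform time.

```python
def missing_cluster_methods(model, required=("fit", "predict")):
    """Return the names in `required` that `model` does not expose as callables."""
    return [name for name in required if not callable(getattr(model, name, None))]


class FitOnlyClusterer:
    """Stand-in for a clusterer that can be fitted but cannot predict."""

    def fit(self, X):
        self.labels_ = [0] * len(X)
        return self


class FitPredictClusterer(FitOnlyClusterer):
    """Stand-in for a clusterer that satisfies the generic interface."""

    def predict(self, X):
        return [0] * len(X)


print(missing_cluster_methods(FitOnlyClusterer()))
print(missing_cluster_methods(FitPredictClusterer()))
```

Running the same check on your own clustering object before passing it to BERTopic is a quick way to see whether it would satisfy the generic cluster-model path.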

Due to inactivity and with the v0.13 release of BERTopic supporting more native functions of cuML, I'll be closing this for now. If you have any questions or want to continue the discussion, I'll make sure to re-open the issue!