State is unclear in the `star` pointer.

Intro

I've been trying to understand what makes state so tricky in MESA/star. This is my attempt at expressing what's wrong, and what we might do about it.

Everything here has some caveats, the biggest of which is a 'so far as I know' that should be attached to every statement about MESA.

Current State

There are at least six different kinds of state that live in star_data:

- Metadata. Flags and configuration, mostly defined in

star_data_step_input.inc. - The 'ground truth' structure variables. E.g.

xh(structure),xa(composition),mlt_vc(for TDC). - Starting values. Used for evaluating time derivatives.

- Copies of structure variables. e.g.

s%lnRis a copy ofs%xh(i_lnR). Presumably this is for convenience and code clarity. Note that these are copies and not pointers. - Intermediate results. For instance results of

eoscalls or intermediate calculations likecsoundandz53bar. - Outputs that go in history or profiles or pgstar windows or which are used for debugging.

There are also at least three different problems we routinely hit with state:

- Sometimes an output becomes an intermediate result when a piece of code is written that relies on it. These may need updating at different points in time, resulting in inconsistencies like #66.

- Different kinds of state need to be updated at different times when

starperforms iterations, starts a step, finishes a step, retries, model writing/reading, etc. This was a major source of bugs in the development of TDC, and even determining when a particular piece of state gets updated is challenging/time-consuming. - It is not clear which intermediate results depend on which other intermediate results, and what those dependencies look like. This makes it easy for things to get out of sync. This came up in developing

eps_mdot, where at one points%Rands%lnRgot out of sync.

Proposed Solution

I am not sure of the best solution here, but the best I've come up with is to the data inside star_data into six sub-types:

metastructstarteaseinteroutput

These correspond to the different kinds of state above.

A few examples:

s%v_flagbecomess%meta%v_flags%xabecomess%struct%xas%lnRbecomess%ease%lnRs%mlt_vc_oldbecomess%start%mlt_vcs%eps_mdotbecomess%inter%eps_mdots%center_yebecomess%output%center_ye

These sub-types help organize the logic, at the cost of being more verbose. But for instance you could pass them directly to subroutines, so a routine that calculates outputs could be passes s%output -> o and then you write to o%center_ye.

We can also attach rules to some of the sub-types:

metaonly gets touched byrun_star_extrasand initialization routines. Flags don't get changed by the rest ofstar.structonly gets modified by the solver. In some cases this just means copying an intermediate variable (e.g. there can be anmlt_vcvariable that lives ininter, but the 'ground truth' will always be the version the solver writes intostruct). This ensures that you know for sure thatstructdata was last updated at the end of the most recent iteration.startvariables only get set at the start of a step or by initialization routines (including model reading).easevariables would be written once per iteration, always at the end of a solver call. This ensures that they remain just convenient places to put copies of structure variables and/or one-off derived quantities likeeosoutputs.intervariables would be a sort of catch-all for state we can't easily put in the other buckets. The goal should be for this to be a small category.outputvariables would be written once per step. They would never be read except by the profile/history/pgstar/model writing routines. We could enforce this with a derived type, or else check for it with a linter.

I think this would make the state much more manageable, and make it a lot clearer what gets modified where.

I'd be very interested to hear what others think though!

I agree state is impossible to reason about in star/. I think there's room to consolidate your proposal (maybe?). As well as plenty of bike-shedding over names :)

Each set on inputs (star_job, controls, pgstar) should just be moved to their own s% struct (star_job is already done. So that would be a s%star_job, s%controls, s%pgstar. This would be mostly just to tidy things up and make clearer what something is. That handles the 'meta' option. We can also put all the pgstar data in a s% pgstar struct.

Things like v_flag should just get moved into s% star_job as that's the place it is controlled from.

I think for the other state the better way(?) is think about things are:

- Things that must survive between steps (xa, xh): s% state

- Things that are valid only for that timestep (lnR, R, eps_mdot): s% step.

- Things that need saving between steps (*_start or mlt_vc_old): s%start

If step and start where the same type we could just do some pointer juggling so that at the end of the step we just move the s% step pointer to s%start and make a new s% step struct. That way s% step must always be reset for each step and start is just the last step (some stuff in start might need to be de-allocated to save memory).

For things you call output we do already have the ability to just call into the history/profile routines to get them (star_get_history_output and star_get_profile_output) and for things like center_ye do they even need to be stored? Should we just say you always need to do a lookup to get them?

I think that's a great way to consolidate. More detailed thoughts:

Each set on inputs (star_job, controls, pgstar) should just be moved to their own s% struct (star_job is already done. So that would be a s%star_job, s%controls, s%pgstar. This would be mostly just to tidy things up and make clearer what something is. That handles the 'meta' option. We can also put all the pgstar data in a s% pgstar struct.

Perfect!

If step and start where the same type we could just do some pointer juggling so that at the end of the step we just move the s% step pointer to s%start and make a new s% step struct. That way s% step must always be reset for each step and start is just the last step (some stuff in start might need to be de-allocated to save memory).

I really like this idea. I think it'll simplify things a lot. I'd be inclined to not de-allocate at first (for simplicity). If we discover there's a memory issue we can always add that down the road.

For things you call output we do already have the ability to just call into the history/profile routines to get them (star_get_history_output and star_get_profile_output) and for things like center_ye do they even need to be stored? Should we just say you always need to do a lookup to get them?

They do not need to be stored. Let's make them all calls on the fly.

Things that are valid only for that timestep (lnR, R, eps_mdot): s% step.

I think it might be good to further subdivide these into things only valid for a step and things only valid for an iteration. For instance eps_mdot gets set in the implicit mdot loop and is only valid for a step, whereas lnR is only valid for an iteration. Thoughts?

One complication is we actually have two loops:

- The implicit mdot loop

- The solver iterations once we set

mdot.

So in principle there are three kinds of variables we might want:

- Valid for the whole step (though I think all of this might end up in

startand the various controls structs). - Valid for a given

mdot(e.g.eps_mdot). - Valid for a given iteration (e.g.

lnR).

But I'm not sure what the right way to handle this is...

Thoughts?

In terms of the two loops, how much is actually changed in the mdot loop? I thought it was just mdot and eps_mdot? Is anything else touched?

If we assume that everything in s%start was 0 at the start of the step, then it seems simpler to just set them into s%step at the start of the step. If we have to retry the step (and get a new mdot) we would be throwing s%step away anyway.

For solver iterations do we keep any information between iterations? We could have a s%step%iter and s%step%prev_iter if we just need the previous iteration. Or have a s%step%iter() array if we need a variable number of iterations saved (saved as in we need all information not just a distilled summary).

I think a fair amount gets re-evaluated each mdot loop. It's mdot and eps_mdot and element diffusion and rotation and premixing/predictive mixing, I think.

If we assume that everything in s%start was 0 at the start of the step, then it seems simpler to just set them into s%step at the start of the step. If we have to retry the step (and get a new mdot) we would be throwing s%step away anyway.

Not sure what you're saying here?

For solver iterations do we keep any information between iterations? We could have a s%step%iter and s%step%prev_iter if we just need the previous iteration. Or have a s%step%iter() array if we need a variable number of iterations saved (saved as in we need all information not just a distilled summary).

We don't keep info from prior iterations other than the current state, but there are lots of things that we set in previous iterations that need to be updated each iteration (e.g. lnR, T, ...). I'd ideally like to make that as explicit as possible so we don't have issues where variables either slip through the cracks and don't get updated, or else where they do get updated but in a monolithic set_vars call that no one understands.

So I think it'd be good to have some way of (1) gathering all the data that get updated on a per-iteration basis in one place/struct and (2) invalidating that information at the end of each iteration. I think your proposal of s%step%iter is all we need for that?

Me neither I entirely forgot that we don't just set mdot but actually take the mass off during the first loop. Also I forget about everything else that happens then like diffusion.

We might need to think about making some sort of document that, at least in broad strokes, documents what should be set/altered when. Because I seem to already be confused about what happens when.

This is also a good opportunity to make it much clearer what is and what is not kept constant through a timestep. This way we can have s% start% D_mix making it explicit for anyone seeing the code that this is not updated through iterations. But I'm not sure about s% step% iter, for a lot of stuff I think it would defeat the purpose to have things like 's% step% iter% lnR(k)' in the equations. In terms of the step structure I think we currently have something like:

- Step starts

- Pre-adjustment before Newton solver, mass loss, element diffusion rotation, etc...

- Newton solver starts

- Multiple iterations

- Post-adjustment after Newton solver (rotation for example is split by default, with half of angular momentum transport being done before the step and half after).

- End of step

I'm not sure if there are more post-adjustment steps besides rotation. If there's not then I think it is a good time to see if we can do away with the bizarre split of rotation. Other than that then I think we can work with (reused some names from both of your suggestions):

s% struct: contains xa, xh, star_mass, dq, and others.s% state: derived quantities forms% struct, updated religiously after any operation that modifiess% structs% start: derived quantities forms% struct, updated religiously after any operation that modifiess% structbefore newton iterations.s% end: same information ass% start, except evaluated froms% structafter Newton iterations.

I think the start and end values would be useful for cases where it is unclear why there are apparent inconsistencies in the output, particularly with mixing. In this sense start keeps a record of the quantities that were actually used in the equations while end tells you what you'd actually have if they were evaluated at the end.

But I'm not sure about s% step% iter, for a lot of stuff I think it would defeat the purpose to have things like 's% step% iter% lnR(k)'

Remember that we can pass these sub-structs directly to subroutines, so we could directly pass the iter struct and then call iter%lnR.

If there's not then I think it is a good time to see if we can do away with the bizarre split of rotation.

I agree. Is the idea to fix up the new rotation equation with auto_diff? ;-)

Regarding your suggestion:

- I don't understand the state/struct split. What's the conceptual notion there?

- I'm not sure I trust 'update religiously after...'. That feels like a quick way to get back to where we are now with monolithic

set_varscalls. I'd much rather split by when things get updated, which makes it easy to (1) invalidate old results and (2) check whether we've correctly updated everything at the right time. - I don't think we should have s%end. If we want something available for output and it only depends on the state at the end of the step we can evaluate it on the fly and don't need to store it.

A new suggestion:

- Things set by inlists and immediate derived quantities (e.g.

v_flag) go in s%star_job, s%controls, s%pgstar. - Things that must survive between steps: s% state (xa, xh, mlt_vc).

mlt_vcsits here because TDC needs it to survive. - Starting values and things that get set once per step (before the mdot loop): s%start (e.g.

lnd_start). - Things that get set during the mdot loop but held fixed during iterations: s%start_iter (

eps_mdot, etc.) - Things that get set during iterations and are only valid for one iteration: s%iter (

lnR, etc.)

The control flow is then a lot simpler:

- When we read inlists we store their data and immediate derived quantities (e.g. flags) in the relevant struct.

- At the start of a step we read data from s%state into s%start. We then compute any other starting quantities and store them in s%start.

- Inside the mdot loop we evaluate quantities that need to know

mdotand happen afteradjust_mass, and store them in s%start_iter. - Inside iterations we set and use quantities in s%iter.

- For debugging it's then easy to inject a 'nullify everything in s%iter after each iteration' step. Similarly we can nullify everything in s%start_iter after each mdot loop step. This makes it easy to track down out-of-sync state.

- Because each piece of state has a defined time when it can be modified, if you notice a problem with a piece of state it's not hard to figure out where that problem happened.

Thoughts?

Remember that we can pass these sub-structs directly to subroutines, so we could directly pass the iter struct and then call iter%lnR.

I know that, was bringing it up because I did not see the benefit of having that additional sublayer of information. Thought the original idea from Rob was regarding whether we want to preserve the data between iterations. I do not think we need that information.

I agree. Is the idea to fix up the new rotation equation with auto_diff? ;-)

It is, but through the last year I've become progressively bad at estimating when I'll have time for significant dev work. I would make an other category for parts that right now require special treatment, but is our target to remove completely in the future.

- I don't understand the state/struct split. What's the conceptual notion there?

Was thinking of state containing the minimally required information to describe the model, and struct to be anything derived from these quantities and ensured for the end user, or through the majority of the private code, to be consistent with the data in state (of course, it cannot be consistent during an operation that modifies xa or xh). Choice of names in what I suggested might not be the best.

- I'm not sure I trust 'update religiously after...'. That feels like a quick way to get back to where we are now with monolithic set_vars calls. I'd much rather split by when things get updated, which makes it easy to (1) invalidate old results and (2) check whether we've correctly updated everything at the right time.

I think a lot of the confusion of state comes from not understanding when quantities are off-sync with xa and xh. This is present in the current monolithic call. There is a multipurpose set_vars_if_needed but we also have the subroutine set_hydro_vars which is called in various parts with specific indications of what to evaluate and what not. In my suggestion this would be replaced with a single subroutine with no options that would modify everything it is concerned with. The number of calls to such a subroutine should be very small.

For any operation that we have previous to the iterations that modifies these fundamental properties (mass adjustment, remesh, element diffusion, rotation, split burn), shouldn't we update most of the information for it to be available for the following operation?

- I don't think we should have s%end. If we want something available for output and it only depends on the state at the end of the step we can evaluate it on the fly and don't need to store it.

Here was thinking on just explicitly repeat the operations that go into s% start but loading them into s% end instead (they would be the same type). Feels like a straightforward thing to do and have for debug, but also to shut off by default. But its true that its uses can be very limited.

Comments on your specific suggestion:

- Things set by inlists and immediate derived quantities (e.g. v_flag) go in s%star_job, s%controls, s%pgstar.

Think we all agree on this one.

- Things that must survive between steps: s% state (xa, xh, mlt_vc). mlt_vc sits here because TDC needs it to survive.

Rather than defining this as "things that must survive" I'd rather think of it as the minimal set required to describe the state of the model. I would then add j_rot into it (though long term I want it to be just an extra part of xh). So I agree we need this, just with a different definition.

- Things that get set during the mdot loop but held fixed during iterations: s%start_iter (eps_mdot, etc.)

- Things that get set during iterations and are only valid for one iteration: s%iter (lnR, etc.)

These two are the parts where I think there can be a lot of confusion. For example for our current situation where we have diffusion coefficients kept constant through a step (so they would end in start_iter), we would like to make use of things that in this scheme would fall into iter, for example lnR. These quantities in iter, which are just directly derived from state are also needed much furher back in the step as well, for instance for remeshing. That's why I think its ideal to have a set of data that we take care (religiously as I said before) to keep in sync with the quantities in state.

So within your suggestion, I'd just change s% iter to be something that contains data derived from s% state and ensured to be kept in sync with it. This then includes it being consistent through Newton iterations.

I know that, was bringing it up because I did not see the benefit of having that additional sublayer of information. Thought the original idea from Rob was regarding whether we want to preserve the data between iterations. I do not think we need that information.

Ah totally agreed! Sorry about that.

It is, but through the last year I've become progressively bad at estimating when I'll have time for significant dev work. I would make an other category for parts that right now require special treatment, but is our target to remove completely in the future.

Ok.

Was thinking of state containing the minimally required information to describe the model, and struct to be anything derived from these quantities and ensured for the end user, or through the majority of the private code, to be consistent with the data in state (of course, it cannot be consistent during an operation that modifies xa or xh). Choice of names in what I suggested might not be the best.

I'm fine with that split existing. I think between the three of us we've pointed at two distinctions:

- When does this data get set?

- What (conceptually) is this data? Your state/struct split is based on (2), I think Rob's suggestion was more looking at (1), and I've been back and forth between them (currently leaning towards (1))...

I think a lot of the confusion of state comes from not understanding when quantities are off-sync with xa and xh. This is present in the current monolithic call. There is a multipurpose set_vars_if_needed but we also have the subroutine set_hydro_vars which is called in various parts with specific indications of what to evaluate and what not. In my suggestion this would be replaced with a single subroutine with no options that would modify everything it is concerned with. The number of calls to such a subroutine should be very small.

If we end up with just a single call to this routine per iteration I'm happy. But it can't become a thing that we call just to cover ourselves.

For any operation that we have previous to the iterations that modifies these fundamental properties (mass adjustment, remesh, element diffusion, rotation, split burn), shouldn't we update most of the information for it to be available for the following operation?

Yes.

Rather than defining this as "things that must survive" I'd rather think of it as the minimal set required to describe the state of the model. I would then add j_rot into it (though long term I want it to be just an extra part of xh). So I agree we need this, just with a different definition.

I like this clarification. The minimal set of things that we need to define the state is a good description. And indeed whatever rotation stuff doesn't live in xh should go here.

These two are the parts where I think there can be a lot of confusion. For example for our current situation where we have diffusion coefficients kept constant through a step (so they would end in start_iter), we would like to make use of things that in this scheme would fall into iter, for example lnR. These quantities in iter, which are just directly derived from state are also needed much furher back in the step as well, for instance for remeshing. That's why I think its ideal to have a set of data that we take care (religiously as I said before) to keep in sync with the quantities in state. So within your suggestion, I'd just change s% iter to be something that contains data derived from s% state and ensured to be kept in sync with it. This then includes it being consistent through Newton iterations.

Ok, I agree. I still want to call it iter, I think, to emphasize that it gets updated every iteration. But we can have this one get set at the outset (inside the mdot loop, before the first iteration) and then get updated once per iteration after that.

Sound good? I think we're converging...

Think we're converging too. Don't mind keeping iter as the name even if it is updated at other places. A call to update the values on iter would happen after (not written in their order in a step, just the ones that pop to mind):

- loading a model/photo

- remeshing

- mdot

- element diffusion

- split burn

- any angular momentum transport outside the Newton (currently we have this both before and after)

- every iteration

And as element diffusion, split burn and am transport use substeps, they should keep things consistent between substeps (or perhaps they have an additional layer of complexity that requires a different split between start and modified values...). It's still a sizable amount of variable adjusting calls, but I think it's a reasonable amount to manage. It might be a bit wasteful to do things like repeated EOS calls that won't be used much (guess we do that now anyways). But I'm happy to pay a reasonable performance prize in exchange of certainty that things are properly synced, and that developers suffer less headaches from MESA state confusion.

One small detail I think we'd actually want to keep in state would be the different variable flags. Having v_flag = .true. is part of the state of the star, the same goes for the network and indices that indicate where in xh is each thing. I do not see v_flag (or equivalents) as part of star_job, star_job just has various options to modify flags that define the state of the star. If we keep all this info then retries/redos could be made much more straightforward as well, just keep a full copy of state in state_start and revert to that. This would undo remeshing, which then makes it a bit wasteful as it would repeat the same remesh process. But retrying should be a relatively minor amount of time in any reasonable run to begin with (though binary does plenty of redos as part of its implicit loop to get mass transfer rates). Simplifying the retry process can also prevent nasty photo checksum problems produced by missing to update some bits during retries. Keeping all this info in state also means that saving photos or models just requires storing the info in state.

This is an excellent and important discussion and I think we should encourage/spread the discussion more widely within the team. I think we've learned that design choices deep within MESA gather a lot of inertia, so it's worth getting the wider perspective of those of us peering in from the outside before making drastic changes that'll see us adding more % to every variable.

Yes this certainly needs buy in from the other developers before we start changing anything. I don't really want us to be making the changes before the release (unless entirely down in a separate branch, that isn't merged until after we release). So there is still plenty of time for discussion and to finalize details.

Fully agreed that this needs broad discussion, and that it shouldn't happen before the release (except, as Rob says, in a branch). It'll be a decent amount of work too, so I don't think anyone's going to just go off and try it lightly.

Re @orlox: That all sounds good to me. I don't think I agree with your full list though:

- loading a model/photo shouldn't require setting

iteruntil we actually are ready to do things (i.e. this can just be a call that happens at the start of the mdot loop). The whole point is thatiterdoesn't contain anything that's needed to specify the state of the model. - element diffusion and split burn both happen inside the implicit mdot loop, so this gets handled by a single setting of

iterat the start of the loop (meaning, inside the loop at the start).

I agree that the rotation calculations that happen after the newton solve will need an extra iter setting.

As summary of where we are for further discussion, the current proposal I believe is to define five sub-structs that live inside the star pointer. These would divide up the current state of star_data as follows:

- Things set by inlists go in s%star_job, s%controls, s%pgstar.

- The minimum state needed to define the model (which must survive between steps): s% state (xa, xh, mlt_vc, flags, rotation info).

- Starting values and things that get set once per step (before the mdot loop): s%start (e.g. lnd_start).

- Things that get set during the mdot loop but held fixed during iterations: s%start_iter (eps_mdot, etc.)

- Things that get set during iterations and are only valid for one iteration: s%iter (lnR, etc.)

The idea is that (1) is what we read from inlists, (2) is what we need to restore/set when we load/save models, have retries/redo's, etc., and then (3-5) are different kinds of intermediate data that we compute at different stages in evolve, organized by when they get set/updated. This should make it very clear which data are updated when, and make it easy to ensure that everything is synchronized appropriately. This would also let us get rid of the monolithic set_vars calls (which do different things depending on various flags, and which touch lots of variables each call) and replace them with more legible routines that target specific stages of evolution.

Outputs would be calculated on-the-fly at the end of the step. Though we need to think more about items that need saving/accumulating for output purposes (e.g. num_tdc_iters).

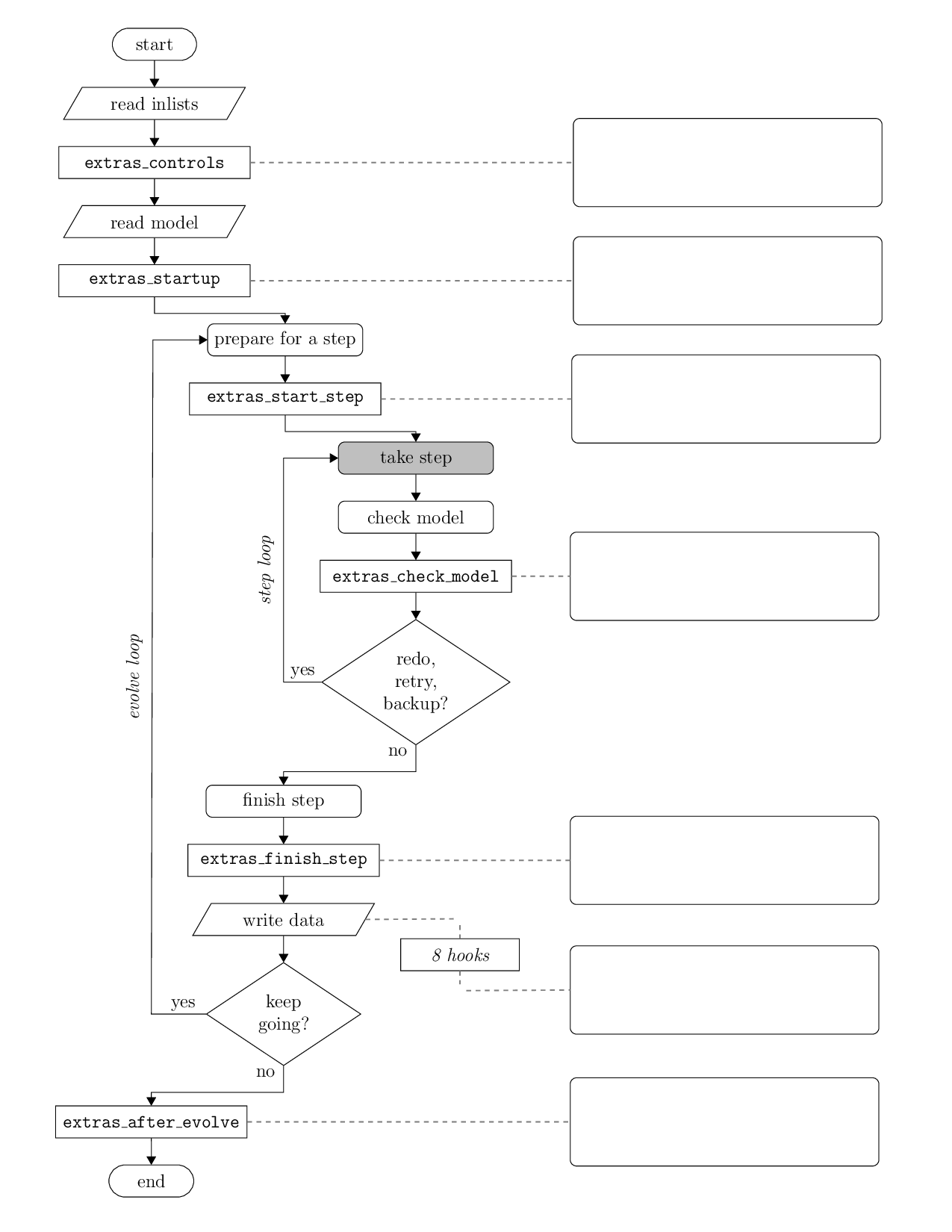

It would be amazing if someone could produce a flowchart for the various loops and logical constructs, something like Josiah's flowchart for showing where the extra procedures in run_stars_extra.f90 are called:

Something like this? (Happy to iterate on this...)

Thanks! This is definitely a good start for me and already raises some thoughts, some of which I could probably answer by looking at the source myself but I'd still turn to those who've mucked around in MESA's depths to hope to understand what is (and should be) going on.

- This might be tangential to the star-state issue but why do we separate this into two parts? If

relaxrepresents things like relaxing to target profiles (e.g.relax_entropy) or inlist-specified options (e.g. relaxing the surface optical depth), why aren't those just special cases ofdo_evolve_step_part2? - Does the

implicit_mdot_loopneed a new name, perhaps to align with the variable substruct that contains things set at the start of each iteration in the loop? It looks like it does more than justmdotbut perhaps it only loops to getmdot. i.e., withoutmdot, it isn't a loop, in which case the name isn't that misleading. - Is the separation of

do_solve_omega_mixfromdo_struct_burn_mixwhat we mean when we say rotation isn't implicitly solved like the rest of the hydro variables?

Anyway, given this structure, your most recent set of variable classes makes sense in that it aligns with the logic of what the code is doing.

I think the relax is in its own section as we don't want retries to undo the work, while everything else in part2 needs redoing after a retry.

The implicit_mdot_loop is only a loop if we set mdot implicitly.

Rotation is currently not implicit but could become so (I think @orlox is/will be working on this).

I just had a quick call with @evbauer about other things, and we talked about the question of "Does s%state get touched during Newton iterations in this proposal?" Similar questions pertain to other stages (e.g. does conv_premix alter the state variables?). I could see it going either way:

- If we say yes, then both

s%stateands%itersget touched during iterations, which is a less clean abstraction. - If we say no, then we need to store a copy of

state(or at least the data it contains) insideiters, and then copy that back out when we finish a step.

I lean towards 'no' here but it's a very slight leaning. And there could be other options apart from yes and no...

I think state shouldn't get touched during a step. It should be the clean starting point. We already kinda of copy state when we make the convience arrays like lnR (atleast for xh) though xa might need copying.

If I want throw away the entire step (like in a retry) I should be able to just start from just state without knowing anything other than a new dt.

Ok. So then to summarize where we are:

The proposed division is:

- Things set by inlists go in s%star_job, s%controls, s%pgstar.

- The minimum state needed to define the model (which must survive between steps): s% state (xa, xh, mlt_vc, flags, rotation info). This only gets modified at the end of a step and on model/photo loading.

- Starting values and things that get set once per step (before the mdot loop): s%start (e.g. lnd_start).

- Things that get set during the mdot loop but held fixed during iterations: s%start_iter (eps_mdot, etc.)

- Things that get set during iterations and are only valid for one iteration: s%iter (lnR, etc.). This includes a copy of xa and xh that we can modify.

With this, the control flow of a step looks like:

- Set starting and convenience values in s%start.

- Enter implicit mdot loop.

- Copy contents of s%state into s%iter.

- Evaluate adjust_mass, updating the state only in s%iter.

- Set values for eps_mdot, element diffusion, etc. in s%start_iter.

- Enter Newton iteration loop (struct_burn_mix)

- Set working data needed to evaluate equations and partials in s%iter.

- Evaluate equations and partials, storing results in s%iter.

- Newton solve. Update s%iter%xa and s%iter%xh.

- Check convergence. If converged, exit Newton loop.

- If debugging, nullify all of s%iter except for the bits copied over from s%state (Newton iterations build on each other!).

- Check mdot convergence criteria. If converged, exit mdot loop.

- If debugging, nullify s%start_iter.

- Check if the step is successful.

- If so, copy s%iter%xa and s%iter%xh into s%state.

- If not, trigger a retry. Nothing's been changed in s%state so no persistent state needs to be touched.

- If debugging, nullify s%start, s%start_iter, and s%iter.

A slightly cleaner (but more expensive) approach would be:

- Things set by inlists go in s%star_job, s%controls, s%pgstar.

- The minimum state needed to define the model (which must survive between steps) go in a

statestruct (xa, xh, mlt_vc, flags, rotation info). - Starting values and things that get set once per step (before the mdot loop): s%start (e.g. lnd_start). This includes s%start%state.

- Things that get set during the mdot loop but held fixed during iterations: s%start_iter (eps_mdot, etc.). This includes s%start_iter%state.

- Things that get set during iterations and are only valid for one iteration: s%iter (lnR, etc.). This includes s%iter%state.

This requires us to hang on to more copies of the state data, but if we do that the control flow simplifies:

- Set starting and convenience values in s%start.

- Enter implicit mdot loop.

- Copy s%start%state into s%start_iter%state.

- Evaluate adjust_mass, updating the state only in s%start_iter.

- Set values for eps_mdot, element diffusion, etc. in s%start_iter.

- Copy s%start_iter%state into s%iter%state.

- Enter Newton iteration loop (struct_burn_mix)

- Set working data needed to evaluate equations and partials in s%iter.

- Evaluate equations and partials, storing results in s%iter.

- Newton solve. Update s%iter%state.

- Check convergence. If converged, exit Newton loop.

- If debugging, nullify all of s%iter except s%iter%state (Newton iterations build on each other!).

- Check mdot convergence criteria. If converged, exit mdot loop.

- If debugging, nullify s%start_iter.

- Check if the step is successful.

- If so, copy s%iter%state into s%start, and nullify the rest of s%start.

- If not, trigger a retry. Nothing's been changed in s%start so no persistent state needs to be touched.

- If debugging, nullify s%start_iter, and s%iter.

In this version, the state begins in start and we always have that copy to restore if we need to. It gets passed to start_iter and modified with adjust_mass, and thereafter plays the same role as something we can restore if we need. It then gets passed into iter, which we use to iteratively build the new state in the Newton iterations. If we accept the end result and exit both loops then we can just copy this state back into start and nullify the rest of start. Otherwise we just throw out everything we just did, retain start%state, and try again.

Conceptually this let's us think about each struct as having a state part and a working part (though I wouldn't advocate making the latter its own sub-struct... that's just too much). And all that matters is that we only touch the state part in the right places, and don't rely on the working part out-of-scope (which we can enforce by nullifying it at the appropriate junctures).

Thoughts?

I'm not in a terrible rush to establish things at this level of detail but I think the second scheme is what I imagined. I don't think the memory overhead is large (at least not in my science). We're talking about array of the size of xh plus xa (plus a few other bits), which even for 10 000 points and 100 species is of order 1 million floats, so ~8 MB, right? In my case it's more like ~3 000×25=75 000 floats, so not even 1MB.

But I also don't see much difference between the two schemes except some naming differences. I don't see why we need to copy s%star_iter%state into s%iter%state. Why can't we keep working on star_iter in the Newton iterations? We need the stellar model we start with, which we won't touch, and a copy to work with. Once we've finished converging the working model, that becomes the new starting model. If we fail to converge, we start again by copying the (old) starting model to the working model and trying a smaller timestep. Both schemes above appear to handle three models but the model being worked on only ever falls back to the starting model, not the mass-adjusted one.

The real performance problem I expect is when someone starts down this road and discovers we've deleted some array of logs or exps that were being cached for efficiency.

My brain has had this on the backburner for a few hours and I think my confusion about naming is because I'm confusing what copies of the "star" we need with the substructure we want to use to distinguish when different variable are or should be set or safe to manipulate.

We sometimes need to compute finite differences in time between the end of adjust_mass and the current value inside the Newton iteration loop. This can be done by storing a copy of the state in the start_iter struct, or by just copying the bits we need to finite difference, but somehow we need to store some data from after adjust_mass and before the Newton iterations have taken place...

Ideas for a plan of operations:

Stage 1:

- Start moving outputs to on-the-fly calculations

- These are easy to do

- Can be done one at a time and done by anyone.

- Have a low chance of merge conflicts

- Move inlists options to their own derived types

- pgstar one should be easy as most places don't care about the pgstar internals

- I'll give this one ago.

- Moving the controls will be more disruptive

- pgstar one should be easy as most places don't care about the pgstar internals

Stage 2:

- Start moving each type of thing into its own derived type

- At this time we can just statically create the derived types (no fancy moving stuff around between steps/iterations).

- That should make it mostly a find and replace job to move things over

- We can do each derived type & variable one at a time to minimize breakages i.e:

- make the new iter type.

- Move the declaration of lnR from star to s% iter

- find and replace s% lnR to s%iter% LnR

- loop back to the start and move the next array

- We don't change the assumptions at this point on things or where they are set, we only replace s% something with s% new% something.

- This should minimize the breakage and can be done piecemeal one variable at a time.

- Also doesn't matter at this stage if we know exactly where everything will go yet.

Stage 3:

- Now we start to do the heavy work of taking advantage of the new structures

- Start doing things like working out when a variable is set and whether it should be set there or at some other point int time.

- These will be larger and more disruptive changes.

I like this plan. And just to clarify, during stage 2 we're moving things based on the intended time-at-which-it-is-touched (so that we can make that change later in stage 3), right?

Yes or at least our best guess.

- Start moving outputs to on-the-fly calculations

I thought I'd open an issue with some examples of how this might play out. I expected some cases where something is computed deep in star and only used to write histories or profiles but I didn't see any. The simplest cases I found were things that are used both in the history output and as timestep controls. e.g. center_degeneracy and friends, which are set in report.f90. These aren't just the value at the central cell but rather averaged over center_avg_value_dq, defined in &controls (default=1d-8). There are others (e.g. he_core_mass) that are likewise set somewhere else and used for both purposes.

Which all means I'm now less clear about what to do. Do we just pile these into another structure called report or something (though perhaps that clashes with the module; maybe info)? We probably at least need something to make it clear that these are set after a timestep for either output or timestep control.

I'm now disinclined from computing on the fly because the calculations are less trivial, several things are computed together, and once we refactor both uses (output and timestep control) we'd probably end up quite close to where the code already is.

When I say outputs I really means anything than can be derived from the stellar structure, so yes things like core masses, center/surface/min/max/avg values but doesn't have to only be things we output in history/profile. These should not be put in another structure. Say we where saving the mass coordinate of max_log_T that will depend on whether your looking at the start/end, or a intermediate point in the step.

So we need a function call that would take the mass and temperature array (but not s or s%id) as arguments and return the mass coordinate. That way it can be computed at any point in a step. A lot of these things probably already have a function that computes them, we just want to a) remove their dependency on s and b) remove the s% saved_version of them.

Look for instance at the problems we had in #81 with delta_nu/nu_max. Is there really any need for something like delta_nu/nu_max to be computed at the start of a run? No but it is/was because it ended up in some monolithic report call that gets called in places no one expected it to be called.

So wouldn't it be nicer if delta_nu was just computed as and when (and even if) someone actually needed it? Where we can be more certain that its inputs have actually been set at this time.