R2Score does not match sklearn.metrics.r2_score

🐛 Bug

The outputs of torchmetrics.R2Score do not consistently match those of sklearn.metrics.r2_score. On the example tensors and arrays from both docs pages the results are equal, but on my own data I get a large negative number from torchmetrics and a reasonable one from sklearn.

Code sample

import torch

from torchmetrics import R2Score

import sklearn.metrics

target = torch.tensor([3, -0.5, 2, 7])

preds = torch.tensor([2.5, 0.0, 2, 8])

tm_r2score = R2Score()

print(tm_r2score(preds, target))

y_true = [3, -0.5, 2, 7]

y_pred = [2.5, 0.0, 2, 8]

print(sklearn.metrics.r2_score(y_true, y_pred))

uno = torch.tensor(sdata_train["log(max_activity)_SCALED"].values) # 7946 values

dos = torch.tensor(sdata_train["log(max_activity)_SCALED_PREDICTIONS"].values) # 7946 values

print(tm_r2score(uno, dos))

uno_x, dos_x = uno.numpy(), dos.numpy()

print(sklearn.metrics.r2_score(uno_x, dos_x))

>>> tensor(0.9486) # dummy data torchmetrics out

>>> 0.9486081370449679 # dummy data sklearn out

>>> tensor(-17.8863) # my data torchmetrics out

>>> 0.16492233358169228 # my data sklearn out

Expected behavior

I would expect these to be equal, and based on a scatterplot of the true vs predicted, I would assume sklearn is correct.

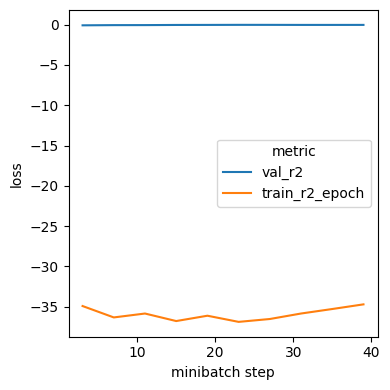

When I log R2Score in a PyTorch Lightning model during training, I also see these large negative values on the training set.

Environment

TorchMetrics v0.9.3 (installed from pip), Python 3.7.12, PyTorch 1.11.0, Linux OS

Additional context

Am I misunderstanding what R2Score is doing?

Hi! Thanks for your contribution, great first issue!

Hi @adamklie, could you please provide us with some test data that reproduces this discrepancy between our implementation and sklearn's?

After looking at the code again, it seems clear to me that the difference is simply due to the input order. sklearn expects input as metric(target, preds), whereas torchmetrics expects metric(preds, target).

In the example, uno looks like the true values and dos the predicted values, so the correct comparison would be:

uno = torch.tensor(sdata_train["log(max_activity)_SCALED"].values)

dos = torch.tensor(sdata_train["log(max_activity)_SCALED_PREDICTIONS"].values)

print(tm_r2score(dos, uno)) # this line is changed

uno_x, dos_x = uno.numpy(), dos.numpy()

print(sklearn.metrics.r2_score(uno_x, dos_x))
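For anyone wondering why swapping the arguments can change the result so much: R² normalizes the squared error by the variance of whichever input is treated as the target, so the score is not symmetric. The following is a minimal plain-Python sketch of the textbook formula, not the torchmetrics or sklearn implementation. The `r2` helper and the `hyp_target` / `hyp_preds` values are hypothetical and only there for illustration; the first pair of lists is the dummy data from the report.

```python
def r2(y_true, y_pred):
    """Textbook R^2 = 1 - SS_res / SS_tot, with SS_tot taken around the mean of y_true."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

# Dummy data from the report: both inputs have similar spread,
# so swapping them barely changes the score.
target = [3, -0.5, 2, 7]
preds = [2.5, 0.0, 2, 8]
print(r2(target, preds))  # about 0.9486
print(r2(preds, target))  # about 0.9574 (arguments swapped)

# Hypothetical data: predictions that hardly vary around the mean.
hyp_target = [1.0, 2.0, 3.0, 4.0, 5.0]
hyp_preds = [2.9, 3.0, 3.1, 3.0, 3.0]
print(r2(hyp_target, hyp_preds))  # small positive value
print(r2(hyp_preds, hyp_target))  # large negative value (arguments swapped)
```

When the predictions have much less variance than the targets, treating them as the target makes SS_tot tiny, which may explain how a modest positive score turns into a large negative one once the order is reversed.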

Closing issue.